The repository contains lists of papers on causality and how relevant techniques are being used to further enhance deep learning era computer vision solutions.

The repository is organized by Maheep Chaudhary and Haohan Wang as an effort to collect and read relevant papers and to hopefully serve the public as a collection of relevant resources.

-

The Seven Tools of Causal Inference with Reflections on Machine Learning

-

Maheep's notes

The author proposes the 7 tools based on the 3 ladder of causation, i.e. Associaion, Intervention and Counterfactual. the paper proposes a diagram which describes that we have some assumptions from which we answer our query and from our data we validate our assumptions, i.e. "Fit Indices".The author proposes the 7 tools as :-

1. Transparency and Testability : Transparency indicates that the encoded form is easily usable and compact. The testability validates that the assumption encoded are compatible with the available data

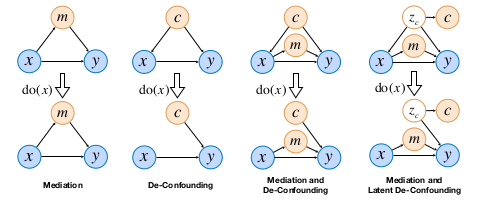

2. Do-Calculas and the control of Confounding : It is used for intervention, mainly used when we are trying to shift from 1st layer to 2nd.

3. The Algorithmization of Counterfactuals : When we can analyse the counterfactual reasoning using the experimental or observational studies.

4. Mediation Analysis and the Assessment of Direct and Indirect Effects : We find out the direct and indirect effects, such as what fraction of effect does X on Y mediated by variable Z.

5. Adaptability, External Validity and Sample Selection Bias : Basically it deals with the robustness of the model and offers do-calculas for overcoming the bias due to environmental changes.

6. Recovering from Missing Data : The casual inference is made to find out the data-generating principles through probablisitic relationship and therefore promises to fill the missing data.

7. Causal Discovery : The d-separation can ebable us to detect the testable implications of the casual model therefore can prune the set of compatible models significantly to the point where causal queries can be estimated directly from that set.

-

-

On Pearl’s Hierarchy and the Foundations of Causal Inference

-

Maheep's notes

The pearl causal hierarchy encodes different concepts like : association. intervention and counterfactual.

Corollary1 : It is genrally impossible to draw higher-layer inferences using only lower-layer informatio but the authors claim that they have atleast developed a framework to move from layer 1 to layer 2 just from the layer 1 data using Causal Bayesian Network that uses do-calculas, i.e. intervention to get insight of layer 2 using the layer 1 data.

A SCM includes 4 variables, i.e. exongenous "U", endogneous "V", set of functions and P(U).

A structural model is said to be markovian if the variable in the exogenous part are independent.

Observing : Joint porobability distribution for the Y(u) = y

Interventional SCM : SCM computed by fixing some varibales X = x. where X is in V.

Potential Response : It is the solution of Y caluclated thorugh the set of eqautions of SCM when we intervene on it. Intervening : observing just when we do(X = x) Effectiveness : P(v|do(x)) is effectiveness when for every v, P(v|do(x)) = 1

Collapse : The layers collapse when we can compute the results of the upper layer using the lower layer. For ex:- if Layer 2 collapses to layer 1, then it implies that we can draw all possible causal conculsion with mere correlation.

Theorem 1: PCH never collapses.

It could be easily observed/seen that SCM agrees on all lower layers but disagrees on all higher layers. A typical rdata-generating SCM encodes rich information at all threee layers but even very small changes might have substantial effect, which is generally seen in the higher layers.

Truncated Factorization Product is the equation stating P(v|do(x)) = pi P(v|pai)

When two variables are correlated it does not mean that one is causing the other, i.e. P(y|do(x)) = P(y) and P(x|do(y)) =P(x), in this case what happens is there is another unobserved variable that is influencing both x and y variable which is often indicated by the bi-directed line in the graph.

Factorization implied by the semi-markovian model does not act like chain rule, i.e. P(e|a,b,c,d) = P(a)P(b|a)P(c|b,a)P(d|c,b,a) but the factorization looks something like: P(e|d,c,b,a) = P(a)P(b|a)P(c|a)P(e|b.c) which implies that b and c are only affected by a also seen by a direct edge in SCM.

-

-

Unit selection based on counterfactual logic

-

Maheep's notes

The unit selection problem entails two sub-problems, evaluation and search. The evaluation problem is to find an objective function that, ensure a counterfactual behaviour when optimized over the set of observed characteristics C for the selected group. The search task is to devise a search algorithm to select individuals based both on their observed characteristics and the objective function devised above.

The paper only focuses on the evaluation sub-problem and focuses on the previous effort to solve this problem, i.e. A/B testing so as to maximizes the percentage of compliers and minimizes the percentages of defiers, always-takers, and never-takers. But the author argues that the proposed term for it does not satisfy the criteria as P(positive response|c, encouraged) - P(positive response|c, not encouraged) represents "compilers + always-takers" - "defiers + always-takers", therefore the author suggest to have two theorems, i.e. "monotonicity" and "gain equality" which can easily optimize the A/B testing. Monotonicity expresses the assumption that a change from X = false to X = true cannot, under any circumstance make Y change from true to false. Gain-equality states that the benefit of selecting a complier and a defier is the same as the benefit of selecting an always-taker and a never-taker (i.e., β + δ = γ + θ).

Taking into account the following theorems the author proposes a alternate term of A/B testing which stands for maximizing the benefit.

argmax c βP (complier|c) + γP (always-taker|c) + θP (never-taker|c) + δP (defier|c)

where benefit of selecting a complier is β, the benefit of selecting an always-taker is γ, the benefit of selecting a never-taker is θ, and the benefit of selecting a defier is δ. Our objective, then, should be to find c.

Theorem 4 says that if Y is monotonic and satisfies gain equallity then the benefit function may be defined as: -

(β − θ)P (y,x |z) + (γ − β)P (y,x′ |z) + θ

"Third, the proposed approach could be used to evaluate machine learning models as well as to generate labels for machine learning models. The accuracy of such a machine learning model would be higher because it would consider the counterfactual scenarios."

-

-

Unit Selection with Causal Diagram

-

Maheep's notes

Same as above

Additionoal content continues here..........

After proposing the technioques to account for unit selection in general the author proposes a new kind of problem by introducing the confounders in the causal Diagram. The following discovery was partially made by the paper "Causes of Effects: Learning Individual responses from Population Data ". The author proposes a new equation to handle these scenarios.

W + σU ≤ f ≤ W + σL if σ < 0,W + σL ≤ f ≤ W + σU if σ > 0, where "f" is the objective function. Previously in normal case the objective fucntion is bounded by the equation:

max{p 1 , p 2 , p 3 , p 4 } ≤ f ≤ min{p 5 , p 6 , p 7 , p 8 } if σ < 0,

max{p 5 , p 6 , p 7 , p 8 } ≤ f ≤ min{p 1 , p 2 , p 3 , p 4 } if σ > 0,

In the extension of the same the author proposes the new situations which arise such as the when "z" is partially observable. and if "z" is a pure mediator.

The author then discusses about the availablity of the observational and experimantal data. If we only have experimantal data then we can simply remove the observationa terms in the theorem

max{p 1 , p 2 } ≤ f ≤ min{p 3 , p 4 } if σ < 0,

max{p 3 , p 4 } ≤ f ≤ min{p 1 , p 2 } if σ > 0,

but if we have only onservational data then we can take use of the observed back-door and front-door variables to generate the experimental data, but if we have partially observable back-dorr and front-door variables then we can use the equation:

LB ≤ P (y|do(x)) ≤ UB

The last topic which author discusses about is the reduciton of the dimensionality of the variable "z" which satisfies the back-door and front-door variable by substituting the causal graph by substiuting "z" by "W" and "U" which satisfies the condition that "no_of_states_of_W * no_of_states_of_U = no_of_states_of_z".

-

-

The Causal-Neural Connection: Expressiveness, Learnability, and Inference

-

Maheep's notes

The author proposes Neural Causal Models, that are a type of SCM but are capable of amending Gradient Descent. The author propses the network to solve two kinds of problems, i.e. "causal effect identification" and "estimation" simultaneously in a Neural Network as genrative model acting as a proxy for SCM.

"causal estimation" is the process of identifying the effect of different variables "Identification" is obtained when we apply backdoor criterion or any other step to get a better insight. The power of identification has been seen by us as seen in the papers of Hanwang Zhang.

Theorem 1: There exists a NCM that is in sync with the SCM on ladder 3

-

-

The Causal Loss: Driving Correlation to Imply Causation(autonomus)

-

Maheep's notes

The paper introduces a loss function known as causal loss which aims to get the intervening effect of the data and shift the model from rung1 to rung2 of ladder of causation. Also the authors propose a Causal sum Product Network(CaSPN).

Basically the causal loss measures the prob of a variable when intervened on another variable. They extend CaSPN from iSPN be reintroducing the conditional vaaribales, which are obtained when we intervene on the observational data. They argue that the CaSPN are causal losses and also are very expressive.

The author suggests a way(taken from iSPN) consitional variables will be passed with adjacency matrix while weight training and target varibales are applied to the leaf node.

They train the CaSPN, NN with causal loss, standard loss and standard loss + alpha*causal loss and produce the results. Also they train a Decision tree to argue that their technique also works on Non-Differential Networks, therefore they propose to substitute the Gini Index with the Causal Decision Score which measures the average probability of a spit resulting in correct classification.

-

-

Double Machine Learning Density Estimation for Local Treatment Effects with Instruments

-

Maheep's notes

The LTE measures the affect of among compilers under assumptions of monotonicity. The paper focuses on estimating the LTE Density Function(not expected value) using the binary instrumental variable which are used to basically counteract the effect of unobserved confounders.

Instrumental Variables : These are the variables to counteract the affect of inobserved confounders. To be an instrumental varibale these are the following conditions it should consist of:

Relevance: The instrument Z has a causal effect on the treatment X.

Exclusion restriction: The instrument Z affects the outcome Y only through the treatment X.

Exchangeability (or independence): The instrument Z is as good as randomly assigned (i.e., there is no confounding for the effect of Z on Y).

Monotonicity: For all units i, Xi(z1)⩾Xi(x2) when z1⩾z2 (i.e., there are no units that always defy their assignment).

The author develops two methods to approximate the density function, i.e. kernel - smoothing and model-based approximations. For both approaches the author derive double/deboased machine learning estimators.

Kernel Smoothing method: They smoothes the density by convoluting with a smooth kernel function............................

Model-based approximators: It projects the density in the dfinite-dimenional density class basedon a distributional distance measure..........

The author argues that by obtaining the PDF may give very valuable information as compared to only estimating the Cumlative Distribution Function.

-

-

-

Maheep's notes

The paper focuses on shedding the light upon action-guiding explanations or causal explanations by defining three types of explanations i.e.Sufficient Explanations(SE), Counterfactual Explanations(CE) and Actual Causations(AC). These all kinds of explanations are based on intervention on feature to generate explanation. The AC lies between the SE and CE, as it consist of the features when changed do not change the prediction but are sufficient for explanation. The author introduces the concept of Independence, which states that a causal model that agrees with a function that mimics it mapping the input to output by making sure that the Endogenous Variables(V), V = Input U {output}, where every V have only exogenous(U) parents and not any endogenous.

In addition to that the author argues for a very important point, i.e.

successful explanation must show factors that may not be manipulated for the explanation to hold, which is distinct from stating which variables must be held fixed at their actual values.

To give a more detailed view of the terms defined above, the author defines them in a detailed way:

1.) Sufficient Explanations: The author describes the phenomenon when it is explained that forXhaving values asxfixed then theYwill take valueyis known as weak sufficiency, but if only a subset of values inXare set fixed tox, ensureY = yregardless of the values other leftover variables takes, is known as Direct Sufficiency. In addition to that variablesN, that are not to be manipulated for the explanations or in other words safeguarded from intervention andYtakes the valuey, the notion is called Strong Sufficiency. The varibales inNcan be thought of as a network that transmits the Causal Inference onY. The actual sufficient explanation can be thought as the pair of(X = x, N)whereXis strongly sufficient forY = y. The sufficient explanation may dominate other one if the variables in it explains the same and has a subset of the varibles in the other equation.

2.) Counterfactual Explanations: It informs us the variables that needs to be changed fromX = xtoX = x'so as to change the output fromY = ytoY = y', which is divided into two parts: direct counterfactual dependence which deals by the above fact but fixing all the other variables constant and counterfactaul dependence which also holds the above fact but does not intervene on any other variables. The author generalizes these two methods by introducing a new varibaleWthat contains the fixed variables. In the latter caseWremians null but in former case it contains all the varibles excluding the ones intervened upon, where the dominance of one explanation over another takes place as in the SEThe author concludes the paper by defining Actual Causation and concludes that sufficient explanations are of little value for action-guidance, compared to counterfactual aspect. Contrary to CE the AC guides towards actions that would not ensure the actual output under the same conditions as the actual action. The author actual causation naturally accommodates this replacement as well,define fairness by for actual causation occurs along a network

Ndemanding that protected variables do not cause the outcome along an unfair network, i.e., a network that consists entirely of unfair paths.

-

-

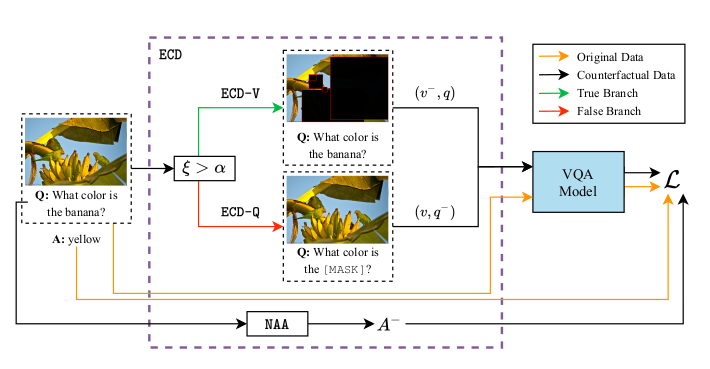

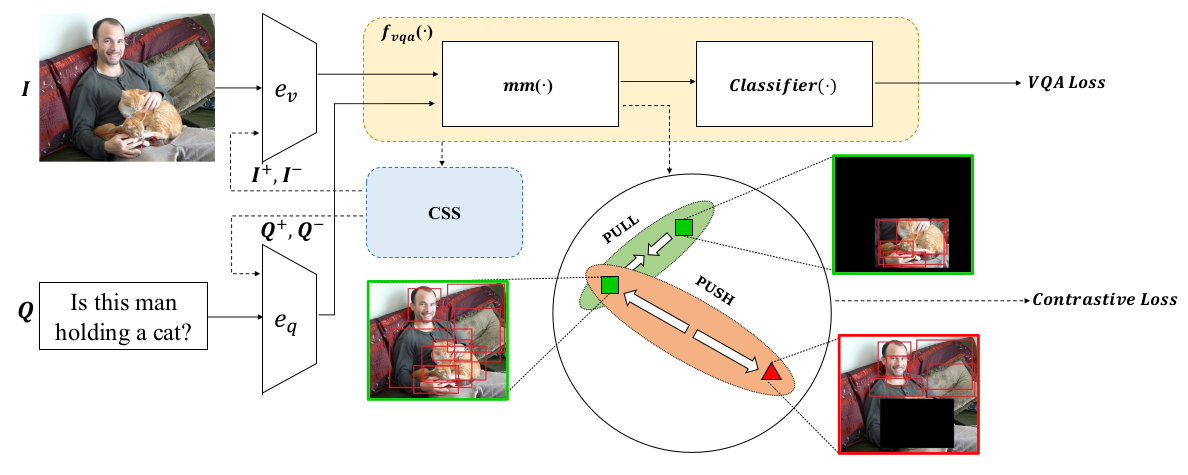

Counterfactual Samples Synthesizing and Training for Robust Visual Question Answering

-

Maheep's notes

In this research paper the author focuses on 2 major questions, i.e.

1) Visual-explainable: The model should rely on the right visual regions when making decisions.

2) Question-sensitive: The model should be sensitive to the linguistic variations in questions.

The author proposes a technique, i.e. CSST which consist of CSS and CST which do counterfactual in VQA as the CSS generates the counterfactual samples by masking critical objects in the images and words. The CST model tackles the second challenge of sensitivity is handled by the CST which make the model learn to distinguish between the origianl samples and counterfactal ones. Addtionally it trains the model to learn both kind of samples, i.e. origianl and counterfactual ones, making the model robust.

-

-

How Should Pre-Trained Language Models Be Fine-Tuned Towards Adversarial Robustness?

-

Maheep's notes

The fine-tuning of pre-trained language models has a great success in many NLP fields but it is strikingly vulnerable to adversarial examples, as it suffers severely from catastrophic forgetting: failing to retain the generic and robust linguistic features that have already been captured by the pre-trained model. The proposed model maximizes the mutual information between the output of an objective model and that of the pre-trained model conditioned on the class label. It encourages an objective model to continuously retain useful information from the pre-trained one throughout the whole fine-tuning process.

I(S; Y, T ) = I(S; Y ) + I(S; T|Y ),

The author proposes by this equation that the two models overlap, i.e. the objective model and the pretrained model. S represents the features extracted the model by the objective model and T is the features extracted by the pretrained model.

-

-

Counterfactual Zero-Shot and Open-Set Visual Recognition

-

Maheep's notes

The author proposes a novel counterfactual framework for both Zero-Shot Learning (ZSL) and Open-Set Recognition (OSR), whose common challenge is generalizing to the unseen-classes by only training on the seen-classes. all the unseen-class recognition methods stem from the same grand assumption: attributes (or features) learned from the training seen-classes are transferable to the testing unseen-classes. But this does not happen in practise.

ZSL is usually provided with an auxiliary set of class attributes to describe each seen- and unseen-class whereas the OSR has open environment setting with no information on the unseen-classes [51, 52], and the goal is to build a classifier for seen-classes. Thae author describers previous works in which the generated samples from the class attribute of an unseen-class, do not lie in the sample domain between the ground truth seen and unseen, i.e., they resemble neither the seen nor the unseen. As a result, the seen/unseen boundary learned from the generated unseen and the true seen samples is imbalanced.

The author proposes a technique using counterfactual, i.e. to generate samples using the class attributes, i.e. Y and sample attrubute Z by the counterfactual, i.e. X would be x̃, had Y been y, given the fact that Z = z(X = x) and the consistency rule defines that if the ground truth is Y then x_bar would be x. The proposed genrative causal model P(X|Z, Y ) generate exapmles for ZSL and OSR.

-

-

Counterfactual VQA: A Cause-Effect Look at Language Bias

-

Maheep's notes

Besides, counterfactual training samples generation [12, 1, 58, 19, 31] helps to balance the training data, and outperform other debiasing methods by large margins on VQA-CP.

The statement specifies the reason why the author in the origianl paper mentioned that we can generate missing labels with that process in Machine Leanring. They formulate the language bias as the direct causal effect of questions on answers, and mitigate the bias by subtracting the direct language effect from the total causal effect. They proposed a very simple method to debias the NLP part in VQA using the Causal Inference, i.e. they perform VQA using different layers for different part, i.e. for visual, question and visual+question which is denoted by Knowledge base K. They argue that if we train a model like this then we would have result with Z_q,k,v, then to get the Total Indirect Effect, they train another model with parameters as Z_q,v*k* and are subtracted from each other. to eliminate the biasness of the language model.

-

-

CONTERFACTUAL GENERATIVE ZERO-SHOT SEMANTIC SEGMENTATION

-

Maheep's Notes

The paper proposes a zero-shot semantic segmentation. One of the popular zero-shot semantic segmentation methods is based on the generative model, but no one has set their eyes on the statstical spurious correlation. In this study the author proposes a counterfactual methods to avoid the confounder in the original model. In the spectrum of unsupervised methods, zero-shot learning always tries to get the visual knowledge of unseen classes by learning the mapping from word embedding to visual features. The contribution of the paper accounts as: (1) They proposed a new strategy to reduce the unbalance in zero-shot semantic segmentation. (2) They claim to explain why recent different structures of models can develop the performance of traditional work. (3) They extend the model with their structures and improve its performance.The model will contain a total of 4 variables R, W, F and L. The generator will to generate the fake features using the word embeddings and real features of the seen class and will generate fake images using word embeddings after learning. However, this traditional model cannot capture the pure effect of real features on the label because the real features R not only determine the label L by the link R !L but also indirectly influence the label by path R ! F ! L. This structure, a.k.a. confounder. Therefore they remove the R!F!L and let it be W!F!L, removing the confounding effect of F. Also they use GCN to generate the image or fake features from eord embeeddings using the GCN which alos provides to let the generator learn from similar classes.

-

-

Adversarial Visual Robustness by Causal Intervention

-

Maheep's notes

The paper focuses on adverserial training so as to prevent from adverserial attacks. The author use instrumental variable to achieve casual intervention. The author proposes 2 techniques, i.e.-

Augments the image with multiple retinoptic centres

-

Encourage the model to learn causal features, rather than local confounding patterns.

They propose the model to be such that max P (Y = ŷ|X = x + delta) - P(Y = ŷ|do(X = x + delta)), subject to P (Y = ŷ|do(X = x + delta)) = P (Y = ŷ|do(X = x)), in other words they focus on annhilating the confounders using the retinotopic centres as the instrumental variable.

-

-

-

What If We Could Not See? Counterfactual Analysis for Egocentric Action Anticipation

-

Maheep's notes

Egocentric action anticipation aims at predicting the near future based on past observation in first-person vision. In addition to visual features which capture spatial and temporal representations, semantic labels act as high-level abstraction about what has happened. Since egocentric action anticipation is a vision-based task, they consider that visual representation of past observation has a main causal effect on predicting the future action. In the second stage of CAEAA, we can imagine a counterfactual situation: “what action would be predicted if we had not observed any visual representation?"They ask this question so as to only get the effect of semantic label. As the visual feature is the main feature the semantic label can act as a confouder due to some situations occuring frequently. Therfore the author proposes to get the logits "A" from the pipeline without making any changes to the model and then also getting the logits "B" when they provide a random value to visual feature denoting the question of counterfactual, i.e. “what action would be predicted if we had not observed any visual representation?" getting the unbiased logit by:

Unbiased logit = A - B

-

-

Transporting Causal Mechanisms for Unsupervised Domain Adaptation

-

Maheep's notes

Existing Unsupervised Domain Adaptation (UDA) literature adopts the covariate shift and conditional shift assumptions, which essentially encourage models to learn common features across domains, i.e. in source domain and target domain but as it is unsupervised, the feature will inevitably lose non-discriminative semantics in source domain, which is however discriminative in target domain. This is represented by Covariate Shift: P (X|S = s) != P (X|S = t), where X denotes the samples, e.g., real-world vs. clip-art images; and 2) Conditional Shift: P (Y |X, S = s) != P (Y |X, S = t). In other words covariate dhift defiens that the features or images of both the target and source domain will be different. The conditional shift represents the logit probability from same class images in source and target domain will vary. The author argues that the features discartrded but are important say "U" are confounder to image and features extracterd. Therefore the author discovers k pairs of end-to-end functions {(M i , M i inverse )}^k in unsupervised fashion, where M(Xs) = (Xt) and M i_inverse(Xt) = Xs , (M i , M i 1 ) corresponds to U i intervention. Specifically, training samples are fed into all (M i , M i 1 ) in parallel to compute L iCycleGAN for each pair. Only the winning pair with the smallest loss is updated. This is how they insert images with same content but by adding U in both target and source domain using image generation.

-

-

WHEN CAUSAL INTERVENTION MEETS ADVERSARIAL EXAMPLES AND IMAGE MASKING FOR DEEP NEURAL NETWORKS

-

Maheep's notes

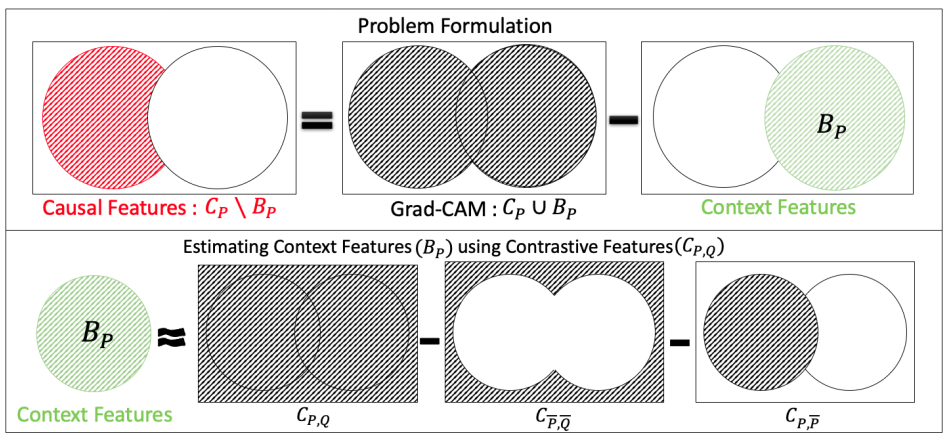

To study the intervention effects on pixel-level features for causal reasoning, the authors introduce pixel-wise masking and adversarial perturbation. The authors argue that the methods such as Gradient information from a penultimate convolutional layer was used in GradCAM are good to provide the saliency map of the image but it is not justifiable inmany situaitons as the Saliency maps onlyCAM establish a correlation for interpretability while it is possible to trace a particular image region that is responsible for it to be correctly classified; it cannot elucidate what would happen if a certain portion of the image was masked out.Effect(xi on xj , Z) = P (xj |do(xi_dash ), Z_Xi) - P (xj |Z_Xi ) ......................................(1) The excepted casual effect has been defined as: E_Xi[Effect(xi on xj , Z)] = (P(Xi = xi |Z)*(equation_1))

The author proposes three losses to get the above equaitons, i.e. the effect of pixels. The losses are interpretability loss, shallow reconstruction loss, and deep reconstruction loss. Shallow reconstruction loss is simply the L 1 norm of the difference between the input and output of autoencoder to represent the activations of the network. For the second equation they applied the deep reconstruction loss in the form of the KL-divergence between the output probability distribution of original and autoencoder-inserted network.

These losses are produced afer perturbtaing the images by maksing the images and inserting adverserial noise.

-

-

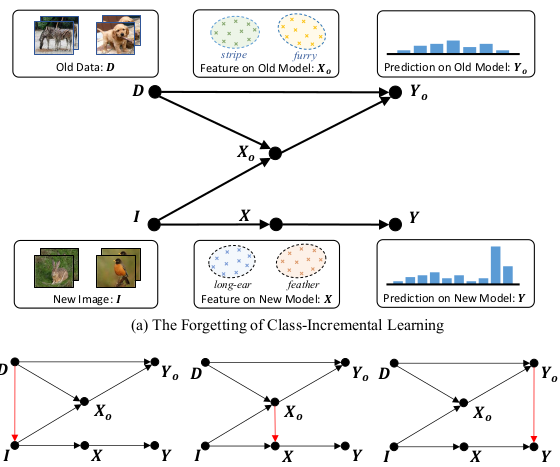

Interventional Few-Shot Learning

-

Maheep's notes

In this paper the author argues that in the prevailing Few-Shot Learning (FSL) methods: the pre-trained knowledge of the models is used which is indeed a confounder that limits the performance. They develop three effective IFSL algorithmic implementations based on the backdoor adjustment, the fine-tuning only exploits the D’s knowledge on “what to transfer”, but neglects “how to transfer”. Though stronger pretrained model improves the performance on average, it indeed degrades that of samples in Q dissimilar to S. The deficiency is expected in the meta-learning paradigm, as fine-tune is also used in each meta-train episodeThe author proposes the solution by proposing 4 variables, i.e. "D", "X", "C", "Y" where D is the pretrained model, X is the feature representaiton of the image, C is the low dimesion representation of X and Y are the logits. The author says the D affects both the X and C, also X affects C, X and C affects the logit Y. The autho removes the affect of D on X using backdoor.

-

-

CLEVRER: COLLISION EVENTS FOR VIDEO REPRESENTATION AND REASONING

-

Maheep's notes

The authors propose CoLlision Events for Video REpresentation and Reasoning (CLEVRER) dataset, a diagnostic video dataset for systematic evaluation of computational models on a wide range of reasoning tasks. Motivated by the theory of human causal judgment, CLEVRER includes four types of question: descriptive (e.g., ‘what color’), explanatory (‘what’s responsible for’), predictive (‘what will happen next’), and counterfactual (‘what if’).The dataset is build on CLEVR dataset and has predicitive both predictive and counterfactual questions, i.e. done by, Predictive questions test a model’s capability of predicting possible occurrences of future events after the video ends. Counterfactual questions query the outcome of the video under certain hypothetical conditions (e.g. removing one of the objects). Models need to select the events that would or would not happen under the designated condition. There are at most four options for each question. The numbers of correct and incorrect options are balanced. Both predictive and counterfactual questions require knowledge of object dynamics underlying the videos and the ability to imagine and reason about unobserved events.

The dataset is being prepared by using the pysics simulation engine.

-

-

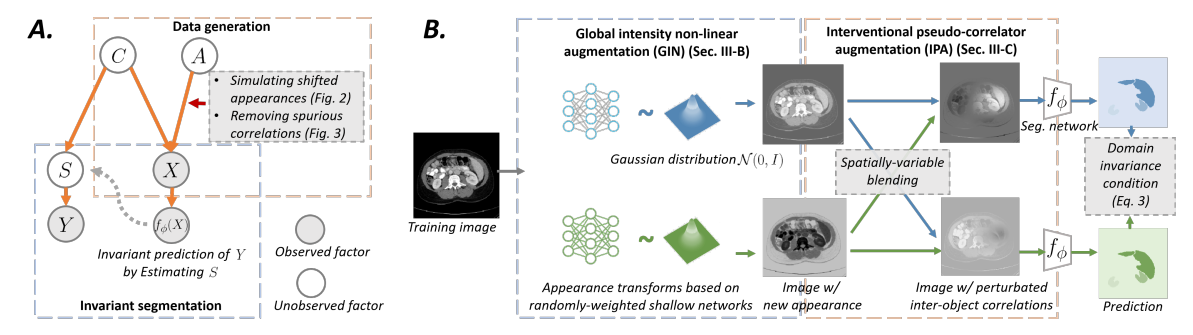

Towards Robust Classification Model by Counterfactual and Invariant Data Generation

-

Maheep's notes

The paper is about augmentaiton using the counterfactual inference by using the human annotations of the subset of the features responsible (causal) for the labels (e.g. bounding boxes), and modify this causal set to generate a surrogate image that no longer has the same label (i.e. a counterfactual image). Also they alter non-causal features to generate images still recognized as the original labels, which helps to learn a model invariant to these features.They augment using the augmentaions as: None, CF(Grey), CF(Random), CF(Shuffle), CF(Tile), CF(CAGAN) and the augmentaions which alter the invariant features using: F(Random) F(Shuffle) F(Mixed-Rand) F(FGSM)

-

-

Unbiased Scene Graph Generation from Biased Training

-

Maheep's Notes

The paper focuses on scene graph generation (SGG) task based on causal inference. The author use Total Direct Effect for an unbiased SGG. The author proposes the technique, i.e.- To take remove the context bias, the author compares it with the counterfactual scene, where visual features are wiped out(containing no objects).

The author argues that the true label is influenced by Image(whole content of the image) and context(individual objects, the model make a bias that the object is only to sit or stand for and make a bias for it) as confounders, whereas we only need the Content(object pairs) to make the true prediction. The author proposes the TDE = y_e - y_e(x_bar,z_e), the first term denote the logits of the image when there is no intervention, the latter term signifies the logit when content(object pairs) are removed from the image, therfore giving the total effect of content and removing other effect of confounders.

-

-

Counterfactual Visual Explanations

-

Maheep's Notes

The paper focuses on Counterfactual Visual Explanations. The author ask a very signifiant question while developing the technique proposed in the paper, i.e. how I could change such that the system would output a different specified class c'. To do this, the author proposes the defined technique: -- He selects ‘distractor’ image I' that the system predicts as class c' and identify spatial regions in I and I' such that replacing the identified region in I with the identified region in I' would push the system towards classifying I as c'.

The author proposes the implementation by the equation:

f(I*) = (1-a)*f(I) + a*P(f(I'))

where

I*represents the image made using theIandI'*represents the Hamdard product.

f(.)represents the spatial feature extractor

P(f(.))represents a permutation matrix that rearranges the spatial cells off(I')to align with spatial cells off(I)The author implements it using the two greedy sequential relaxations – first, an exhaustive search approach keeping a and P binary and second, a continuous relaxation of a and P that replaces search with an optimization.

-

-

Counterfactual Vision and Language Learning

-

Maheep's Notes

The paper focuses on VQA models using the counterfactual intervention to make it robust. They ask a crucial question, i.e. “what would be the minimum alteration to the question or image that could change the answer”. The author uses the observational data as well as the counterfactual data to predict the answer. To do this, the author proposes the defined technique: -- The author replaces the embedding of the question or image using another question or image so as to predict the correct answer and minimize counterfactual loss.

-

-

Counterfactual Vision-and-Language Navigation via Adversarial Path Sampler

-

Maheep's Notes

The paper focuses on Vision-and-Language Navigation (VLN). The author combine the adversarial training with counterfactual conditions to guide models that might lead to robust model. To do this, the author proposes the defined techniques: -- The author APS, i.e. adversarial path sampler which samples batch of paths P after augmenting them and reconstruct instructions I using Speaker. With the pairs of (P,I), so as to maximize the navigation loss L_NAV.

- The NAV, i.e. navigation model trains so as to minimize the L_Nav making the whole process more robust and increasing the performance.

The APS samples the path based on the visual features v_t which are obtained using the attention on the feature space f_t and history h_t-1 and previous action taken a_t-1 to output the path using the predicted a_t and the features f_t.

-

-

Beyond Trivial Counterfactual Explanations with Diverse Valuable Explanations

-

Maheep's Notes

The paper system DiVE in a world where the works are produced to get features which might change the output of the image and learns a perturbation in a disentangled latent space that is constrained using a diversity-enforcing loss to uncover multiple valuable explanations about the model’s prediction. The author proposes these techniques to get the no-trivial explanations and making the model more diversified, sparse and valid: -- DiVE uses an encoder, a decoder, and a fixed-weight ML model.

- Encoder and Decoder are trained in an unsupervised manner to approximate the data distribution on which the ML model was trained.

- They optimize a set of vectors E_i to perturb the latent representation z generated by the trained encoder.

The author proposes 3 main losses:

Counterfatual loss: It identifies a change of latent attributes that will cause the ML model f to change it’s prediction.Proximity loss: The goal of this loss function is to constrain the reconstruction produced by the decoder to be similar in appearance and attributes as the input, therfore making the model sparse.Diversity loss: This loss prevents the multiple explanations of the model from being identical and reconstructs different images modifing the different spurious correlations and explaing through them. The model uses the beta-TCVAE to obtain a disentangled latent representation which leads to more proximal and sparse explanations and also Fisher information matrix of its latent space to focus its search on the less influential factors of variation of the ML model as it defines the scores of the influential latent factors of Z. This mechanism enables the discovery of spurious correlations learned by the ML model.

-

-

SCOUT: Self-aware Discriminant Counterfactual Explanations

-

Maheep's Notes

The paper proposes to connect attributive explanations, which are based on a single heat map, to counterfactual explanations, which seek to identify regions where it is easy to discriminate between prediction and counter class. They also segments the region which discriminates between the two classes of a class image.The author implements using a network by giving a query image x of class y , a user-chosen counter class y' != y, a predictor h(x), and a confidence predictor s(x), x is then forwarded to get the F_h(x) and F_s(x). From F_h(x) we predict h_y(x) and h_y'(x) which are then combined with the original F_h(x) to produce the A(x, y) and A(x, y') to get the activation tensors and they are then combined with A(x, s(x)) to get the segmented region of the image which is discriminative of the counter class.

-

-

Born Identity Network: Multi-way Counterfactual Map Generation to Explain a Classifier’s Decision

-

Maheep's Notes

The paper proposes a system BIN that is used to produce counterfactual maps as a step towards counterfactual reasoning, which is a process of producing hypothetical realities given observations. The system proposes techniques: -

- The author proposes Counterfactual Map Generator (CMG), which consists of an encoder E , a generator G , and a discriminator D . First, the network design of the encoder E and the generator G is a variation of U-Net with a tiled target label concatenated to the skip connections. This generator design enables the generation to synthesize target conditioned maps such that multi-way counterfactual reasoning is possible.

- The another main technique porposes is the Target Attribution Network(TAN) the objective of the TAN is to guide the generator to produce counterfactual maps that transform an input sample to be classified as a target class. It is a complementary to CMG.

The author proposes 3 main losses:

`Counterfatual Map loss` : The counterfactual map loss limits the values of the counterfactual map to grow as done by proximity loss in DiVE.

`Adverserial loss` : It is an objective function reatained due to its stability during adversarial training.

`Cycle Consistency loss` : The cycle consistency loss is used for producing better multi-way counterfactual maps. However, since the discriminator only classifies the real or fake samples, it does not have the ability to guide the generator to produce multi-way counterfactual maps. - The author proposes Counterfactual Map Generator (CMG), which consists of an encoder E , a generator G , and a discriminator D . First, the network design of the encoder E and the generator G is a variation of U-Net with a tiled target label concatenated to the skip connections. This generator design enables the generation to synthesize target conditioned maps such that multi-way counterfactual reasoning is possible.

-

-

Introspective Distillation for Robust Question Answering

-

Maheep's Notes

The paper focuses on the fact that the present day systems to make more genralized on OOD(out-of-distribution) they sacrifice their performance on the ID(in-distribution) data. To achieve a better performance in real-world the system need to have accuracy on both the distributions to be good. Keeping this in mind the author proposes: -- The author proposes to have a causal feature to teach the model both about the OOD and ID data points and take into account the

P_OODandP_ID, i.e. the predictions of ID and OOD. - Based on the above predictions the it can be easily introspected that which one of the distributions is the model exploiting more and based on it they produce the second barnch of the model that scores for

S_IDandS_OODthat are based on the equationS_ID = 1/XE(P_GT, P_ID), whereXEis the cross entropy loss. further these scores are used to compute weightsW_IDandW_OOD, i.e.W_OOD = S_OOD/(S_OOD + S_ID)to train the model to blend the knowledge from both the OOD and ID data points. - The model is then distilled using the knowledge distillation manner, i.e.

L = KL(P_T, P_S), whereP_Tis the prediction of the teacher model and theP_Sis the prediction of the student model.

- The author proposes to have a causal feature to teach the model both about the OOD and ID data points and take into account the

-

-

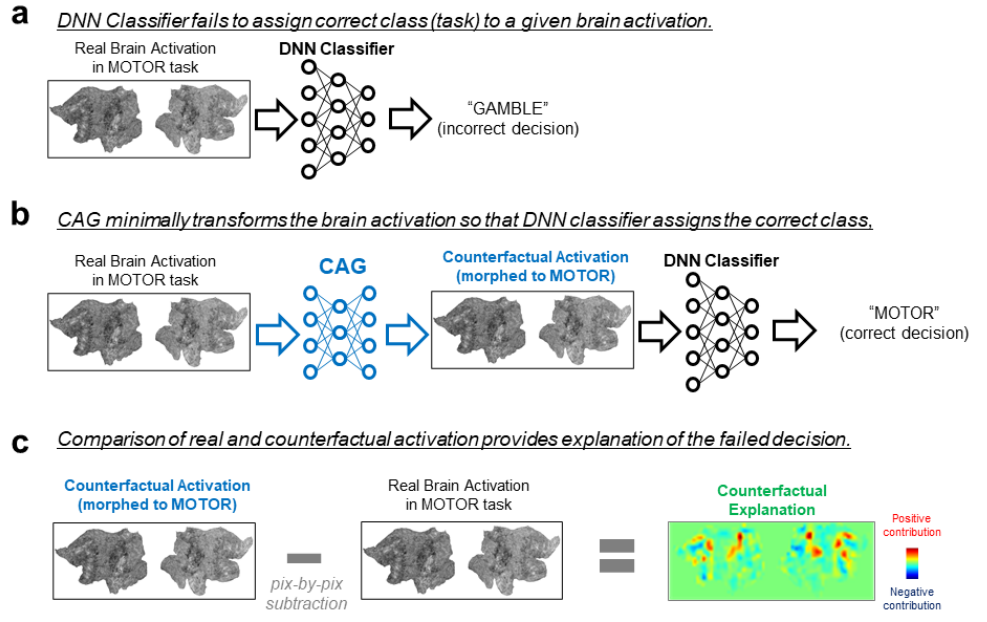

Counterfactual Explanation and Causal Inference In Service of Robustness in Robot Control

-

Maheep's Notes

The paper focuses on the generating the features using counterfactual mechanism so as to make the model robust. The author proposes to generate the features which are minimal and realistic in an image so as to make it as close as the training image to make the model work correctly making the model robust to adverserial attacks, therfore robust. The generator has two main components, a discriminator which forces the generator to generate the features that are similar to the output class and the modification has to be as small as possible.

The additonal component in the model is the predictor takes the modified image and produces real-world output. The implementation of it in mathematics looks like:

min d_g(x, x') + d_c(C(x'), t_c), where d_g is the distance b/w the modified and original image, d_c is the distance b/w the class space and C is the predictor that x' belongs to t_c class.

The loss defines as:total_loss = (1-alpha)*L_g(x, x') + (alpha)*L_c(x, t_c), where L_c is the lossxbelongs tot_cclass

-

-

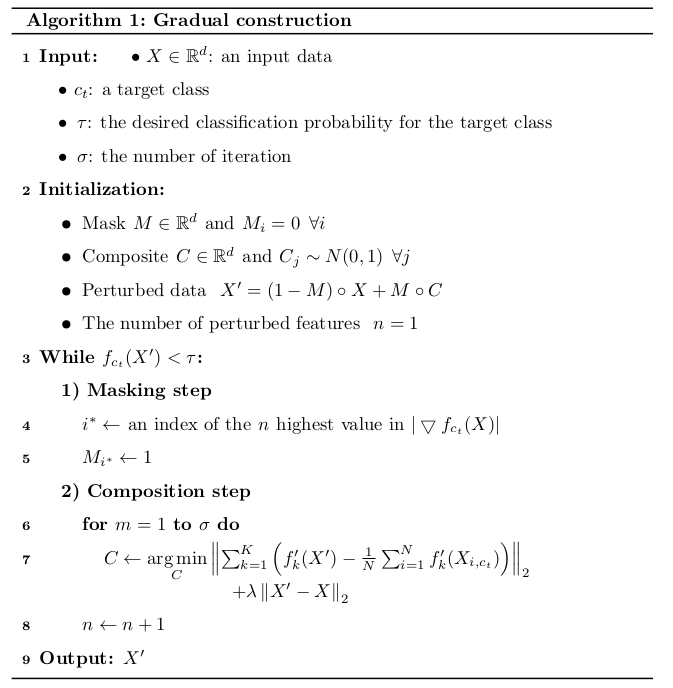

Counterfactual Explanation Based on Gradual Construction for Deep Networks

-

Maheep's Notes

The paper focuses on gradually construct an explanation by iterating over masking and composition steps, where the masking step aims to select the important feature from the input data to be classified as target label. The compostition step aims to optimize the previously selected features by perturbating them so as to prodice the target class.

The proposed also focuses on 2 things, i.e. Explainability and Minimality. while implementing the techniue the authors observe the target class which were being generated were getting much perturbated so as to come under asverserial attack and therfore they propose the logit space of x' to belong to the space of training data as follows:

argmin(sigma(f_k'(x') - (1/N)*sigma(f_k'(X_i,c_t))) + lambda(X' - X))

wheref'gives the logits for class k,X_i,c_trepresents the i-th training data that is classified into c_k class and the N is the number of modifications.

-

-

CoCoX: Generating Conceptual and Counterfactual Explanations via Fault-Lines

-

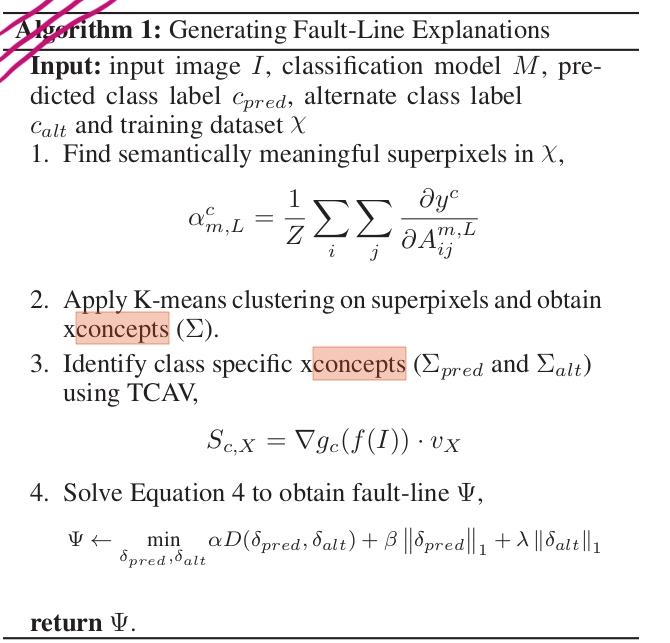

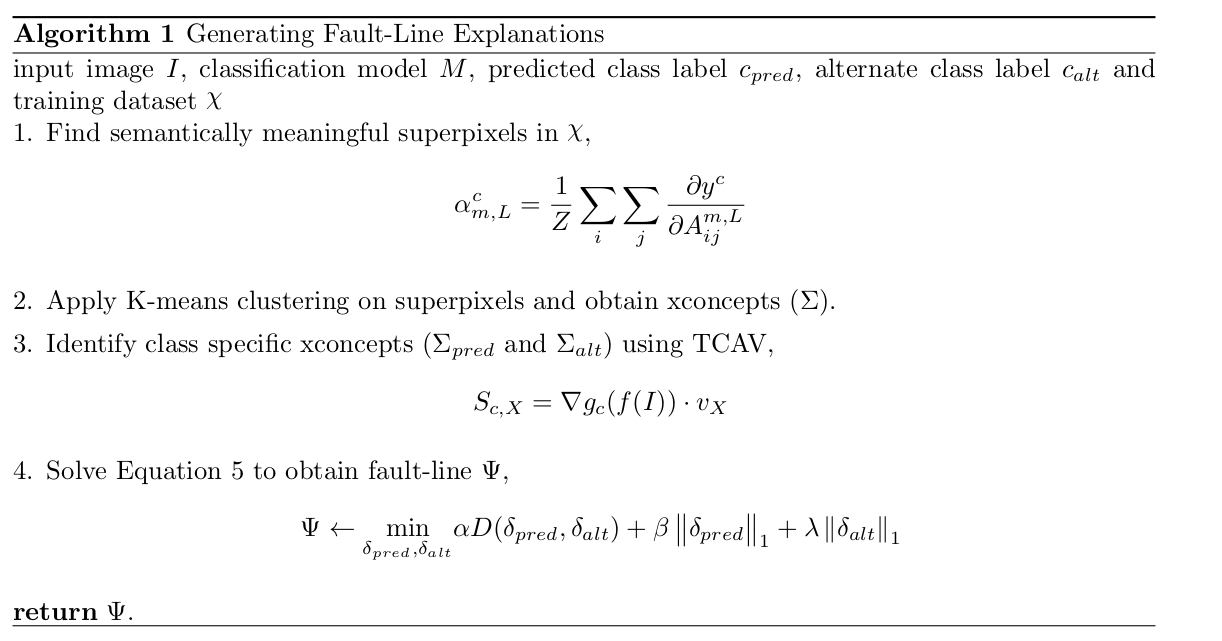

Maheep's Notes

The paper focuses a model for explaining decisions made by a deep convolutional neural network (CNN) fault-lines that defines the main features from which the humans deifferentiate the two similar classes. The author introduces 2 concepts: PFT and NFT, PFT are those xoncepts to be added to input image to change model prediction and for NFT it subtracts, whereas the xconcepts are those semantic features that are main features extracted by CNN and from which fault-lines are made by selecting from them.

The proposed model is implemented by taking the CNN captured richer semantic aspect and construct xconcepts by making use of feature maps from the last convolution layer. Every feature map is treated as an instance of an xconcept and obtain its localization map using the Grad-CAM and are spatially pooled to get important weights, based on that top p pixels are selected and are clustered using K-means. The selection is done using the TCAV tecnique.

-

-

-

Maheep's Notes

The paper is kind of an extension of the above paper(CoCoX), i.e. it also uses fault-lines for explainability but states a dialogue between a user and the machine. The model is made by using the fault-lines and the Theory of Mind(ToM).

The proposed is implemented by taking an image and the same image is blurred and given to a person, then the machine take out the crucial features by thinking what the person may have understood and what is the information it should provide. The person is given more images and then the missing parts are told to be predicted after the dialogue, if the person is able to predict the parts that it was missing before then the machine gets a positive reward and functions in a RL training technique way.

-

-

DeDUCE: Generating Counterfactual Explanations At Scale

-

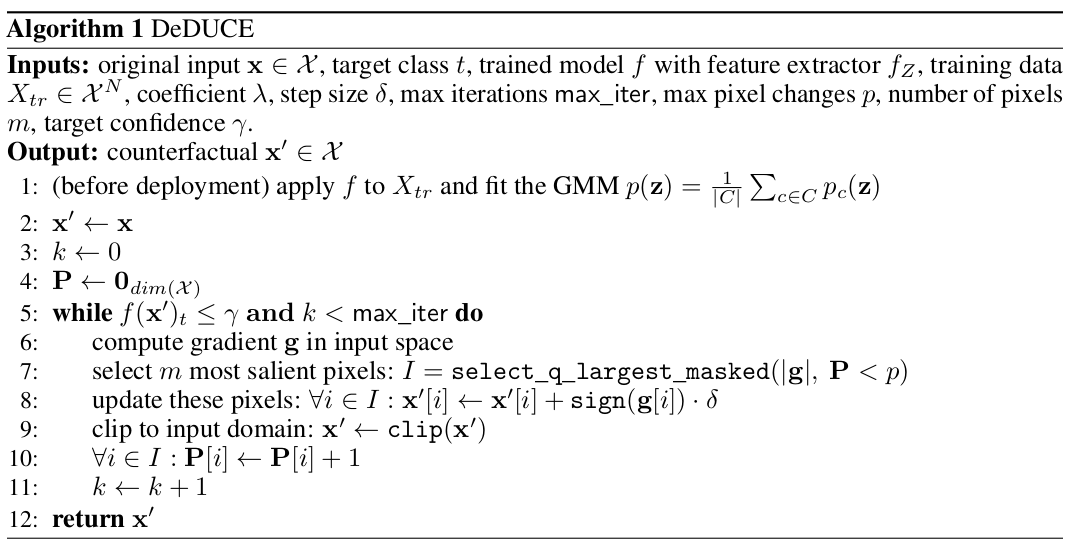

Maheep's Notes

The paper focues to detect the erroneous behaviour of the models using counterfatctual as when an image classifier outputs a wrong class label, it can be helpful to see what changes in the image would lead to a correct classification. In these cases the counterfactual acrs as the closest alternative that changes the prediction and we also learn about the decision boundary.

The proposed model is implemented by identifying the Epistemic uncertainity, i.e. the useful features using the Gaussian Mixture Model and therfore only the target class density is increased. The next step would be to change the prediction using a subtle change therefore the most slaient pixel, identified usign the gradient are changed.

-

-

Designing Counterfactual Generators using Deep Model Inversion

-

Maheep Notes

The paper focues on the scenario when the we have access only to the trained deep classifier and not the actual training data. The paper proposes a goal to develop a deep inversion approach to generate counterfactual explanations. The paper propses methods to preserve metrics for semantic preservation using the different methods such as ISO and LSO. The author also focuses on manifold consistency for the counterfactual image using the Deep Image Prior model. -argmin(lambda_1*sigma_on_l(layer_l(x'), layer_l(x)) + lambda_2*L_mc(x';F) + lambda_3*L_cf(F(x'), y'))

where,

layer_l:The differentiable layer "l" of the neural network, it is basically used for semantic preservation.

L_mc: It penlaizes x' whcih do not lie near the manifold. L_mc can be Deterministic Uncertainty Quantification (DUQ).

L_fc: It ensures that the prediction for the counterfactual matches the desired target

-

-

ECINN: Efficient Counterfactuals from Invertible Neural Networks

-

Maheep's Notes

The paper utilizes the generative capacities of invertible neural networks for image classification to generate counterfactual examples efficiently. The main advantage of this network is that it is fast and invertible, i.e. it has full information preservation between input and output layers, where the other networks are surjective in nature, therfore also making the evaluation easy. The network claims to change only class-dependent features while ignoring the class-independence features succesfully. This happens as the INNs have the property that thier latent spaces are semantically organized. When many latent representations of samples from the same class are averaged, then class-independent information like background and object orientation will cancel out and leaves just class-dependent information

x' = f_inv(f(x) + alpha*delta_x)

where,

x':Counterfactual image.

f: INN and therforef_invis the inverse off.

delta_x: the infoprmation to be added to convert the latent space of image to that of counterfactual image.

||z + alpha_0*delta_x- µ_p || = ||z + alpha_0*delta_x - µ_q ||where the z + alpha_0*delta_x is the line separating the two classes and µ_q and µ_q are the mean distance from line. Therefore

alpha = alpha_0 + 4/5*(1-alpha_0)

-

-

EXPLAINABLE IMAGE CLASSIFICATION WITH EVIDENCE COUNTERFACTUAL

-

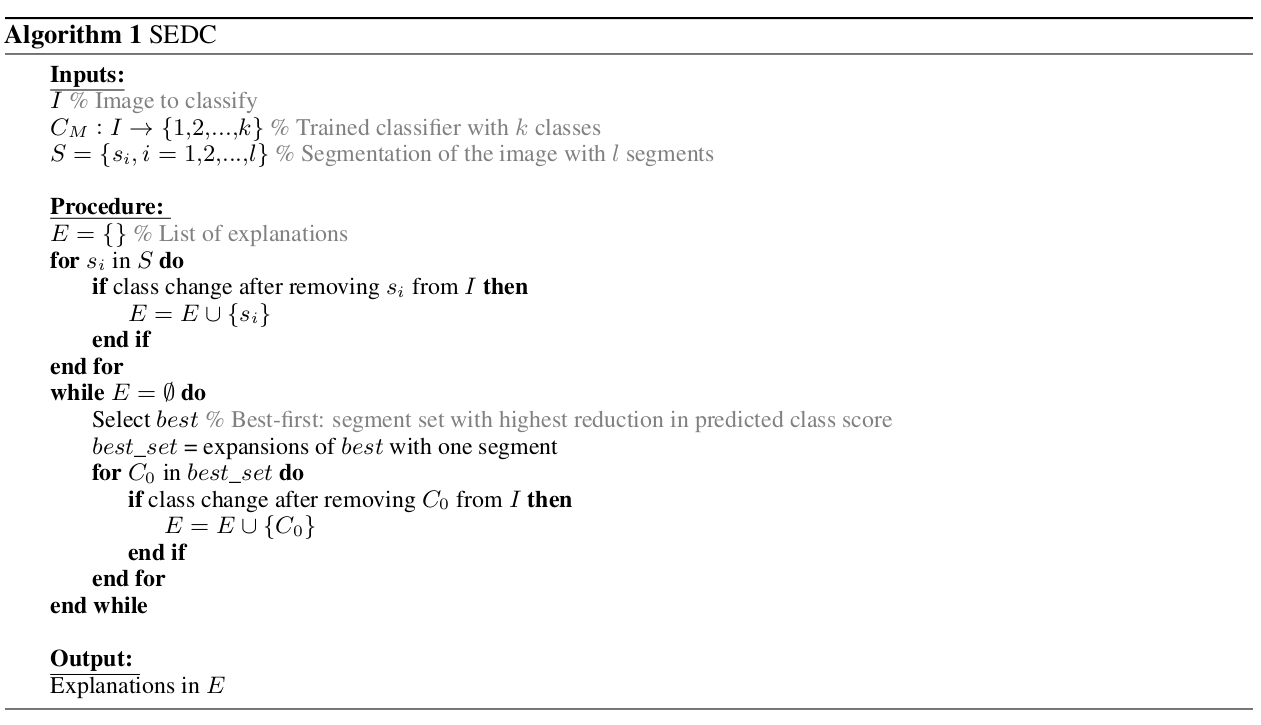

Maheep's Notes

The author proposes a SDEC model that searches a small set of segments that, in case of removal, alters the classification

The image is segemented with l segments and then the technique is implemented by using the best-first search avoid a complete search through all possible segment combinations. The best-first is each time selected based on the highest reduction in predicted class score. It continues until one or more same-sized explanations are found after an expansion loop. An additional local search can be performed by considering all possible subsets of the obtained explanation. If a subset leads to a class change after removal, the smallest set is taken as final explanation. When different subsets of equal size lead to a class change, the one with the highest reduction in predicted class score can be selected.

-

-

Explaining Visual Models by Causal Attribution

-

Maheep Notes

The paper focuses on the facts that there are limitations of current Conditional Image Generators for Counterfactual Generation and also proposes a new explanation technique for visual models based on latent factors.

The paper is implemented using the Distribution Causal Graph(DCG) where the causal graph is made but the nodes is represented the MLP, i.e. `logP(X = (x1,x2,x3...xn)) = sigma(log(P(X = xi|theta_i)))` and the Counterfactual Image Generator which translate the latent factor into the image using the original image as anchor while genrating it which is done using Fader Networks which adds a critic in the latent space and AttGAN adds the critic in the actual output.

-

-

Explaining the Black-box Smoothly-A Counterfactual Approach

-

Maheep's Notes

The paper focuses on explaining the outcome of medical imaging by gradually exaggerating the semantic effect of the given outcome label and also show a counterfactual image by introducing the perturbations to the query image that gradually changes the posterior probability from its original class to its negation. The explanation therfore consist of 3 properties:

1.) **Data Consistency**: The resembalance of the generated and orignal data should be same. Therefore cGAN id introduced with a loss asL_cgan = log(P_data(x)/q(x)) + log(P_data(c|x)/q(c|x))where P_data(x) is the data distribtion and learned distribution q(x), whreas P_data(c|x)/q(c|x) = r(c|x) is the ratio of the generated image and the condition.

2.) Classification model consistency: The generated image should give desired output. Therefore the condition-aware loss is introduced, i.e.L := r(c|x) + D_KL (f(x')||f (x) + delta),, where f(x') is the output of classifier of the counterfactual image is varied only by delta amount when added to original image logit. They take delta as a knob to regularize the genration of counterfactual image.

3.) Context-aware self-consistency: To be self-consistent, the explanation function should satisfy three criteria(a) Reconstructing the input image by setting = 0 should return the input image, i.e., G(x, 0) = x.

(b) Applying a reverse perturbation on the explanation image x should recover x.To mitigate this conditions the author propose an identity loss. The author argues that there is a chance that the GAN may ignore small or uncommon details therfore the images are compared using semantic segemntation with object detection combined in identity loss. The identity loss is : L_identity = L_rec(x, G(x, 0))+ L_rec(x, G(G(x,delta), -delta))

-

-

Explaining the Behavior of Black-Box Prediction Algorithms with Causal Learning

-

Maheep's Notes

The paper using proposes causal graphical models so as to indicate which of the interpretable features, if any, are possible causes of the prediction outcome and which may be merely associated with prediction outcomes due to confounding. The choose causal graphs consistent with observed data by directly testing focus on type-level explanation rather token-level explanations of particular events. The token-level refers to links between particular events, and the type-level refers to links between kinds of events, or equivalently, variables. Using the causal modelling they focus on obtaining a model that is consistent with the data.

They focus on learning a Partial Ancestral Graph(PAG) G, using the FCI algorithm and the predicted outcome Y' whereas Z are the high-level which are human interpretable and not like pixels.

V = (Z,Y')

Y' = g(z1,.....zs, epsilon)On the basis of possible edge types, they find out which high level causes, possible causes or non-causes of the balck-box output Y'.

-

-

Explaining Classifiers with Causal Concept Effect (CaCE)

-

Maheep's Notes

The paper proposes a system CaCE, which focuses on confounding of concepts, i.e higher level unit than low level, individual input features such as pixels by intervening on concepts by taking an important assumption that intervention happens atomically. The effect is taken asEffect = E(F(I)|do(C = 1)) - E(F(I)|do(C = 0))where F gives output on image I and C is the concept. This can be done at scale by intervening for a lot of values in a concept and find the spurious corrlation. But due to the insufficient knowlegde of the Causal Graph teh author porposes a VAE which can calculate the precise CaCE by by generating counterfactual image by just changing a concept and hence computing the difference between the prediction score.

-

-

Fast Real-time Counterfactual Explanations

-

Maheep's Notes

The paper proposes a transformer is trained as a residual generator conditional on a classifier constrained under a proposal perturbation loss which maintains the content information of the query image, but just the class-specific semantic information is changed. The technique is implemented as :

1.) Adverserial loss: It measures whether the generated image is indistinguishable from the real world images

2.) Domain classification loss: It is used to render the generate image x + G(x,y') conditional on y'.L = E[-log(D(y'|x + G(x,y')))]where G(x, y') is the perterbuation introduced by generator to convert image from x to x'

3.) Reconstruction loss: The Loss focuses to have generator work propoerly so as to produce the image need to be produced as defined by the loss.L = E[x - (x + G(x,y') + G(x + G(x,y'), y))]4.) Explanation loss: This is to gurantee that the generated fake image produced belongs to the distribution of H.L = E[-logH(y'|x + G(x,y'))]

5.) Perturbation loss: To have the perturbation as small as possible it is introduced.L = E[G(x,y') + G(x + G(x,y'),y)]

All these 5 losses are added to make the final loss with different weights.

-

-

GENERATIVE_COUNTERFACTUAL_INTROSPECTION_FOR_EXPLAINABLE_DEEP_LEARNING

-

Maheep's Notes

The paper propose to generate counterfactual using the Generative Counterfactual Explanation not by replacing a patch of the original image with something but by generating a counterfactual image by replacing minimal attributes uinchanged, i.e. A = {a1, a2, a3, a4, a5....an}. It is implemented by: -

min(lambda*loss(I(A')) + ||I - I(A')), where loss is cross-entropy for predicting image I(A') to label c'.

-

-

Generative_Counterfactuals_for_Neural_Networks_via_Attribute_Informed_Perturbations

-

Maheep's Notes

The paper focues on generating counterfactuals for raw data instances (i.e., text and image) is still in the early stage due to its challenges on high data dimensionality, unsemantic raw features and also in scenario when the effictive counterfactual for certain label are not guranteed, therfore the author proposes Attribute-Informed-Perturbation(AIP) which convert raw features are embedded as low-dimension and data attributes are modeled as joint latent features. To make this process optimized it has two losses: Reconstruction_loss(used to guarantee the quality of the raw feature) + Discrimination loss,(ensure the correct the attribute embedding) i.e.min(E[sigma_for_diff_attributes*(-a*log(D(x')) - (1-a)*(1-D(x)))]) + E[||x - x'||]where D(x') generates attributes for counterfactual image.

To generate the counterfactual 2 losses are produced,one ensures that the perturbed image has the desired label and the second one ensures that the perturbation is minimal as possible, i.e.

L_gen = Cross_entropy(F(G(z, a)), y) + alpha*L(z,a,z_0, a_0)

The L(z,a,z0,a0) is the l2 norm b/w the attribute and the latent space.

-

-

Question-Conditioned Counterfactual Image Generation for VQA

-

Maheep's Notes

The paper on generating the counterfactual images for VQA, s.t.

i.) the VQA model outputs a different answer

ii.) the new image is minimally different from the original

iii) the new image is realistic

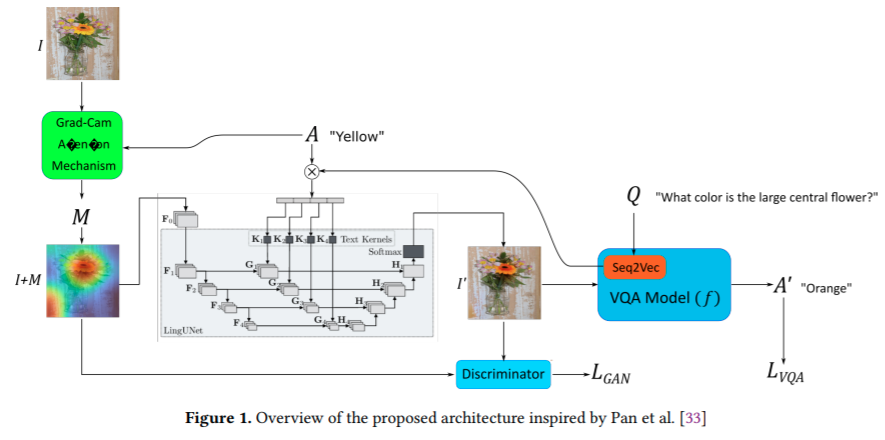

The author uses a LingUNet model for this and proposes three losses to make the perfect.

1.) Negated cross entropy for VQA model.

2.) l2 loss b/w the generated image and the original image. 3.) Discriminator that penalizes unrealistic images.

-

-

FINDING AND FIXING SPURIOUS PATTERNS WITH EXPLANATIONS

-

Maheep's Notes

The paper proposes an augmeting technique taht resamples the images in such a way to remove the spurious pattern in them, therfore they introduce their framework Spurious Pattern Identification and REpair(SPIRE). They view the dataset as Both, Just Main, Just Spurious, and Neither. SPIRE measures this probability for all (Main, Spurious) pairs, where Main and Spurious are different, and then sorts this list to find the pairs that represent the strongest patterns. After finding the pattern the dataset is redistributes as:

P(Spurious | Main) = P(Spurious | not Main) = 0.5

The second step consist of minimizing the potential for new SPs by setting theP(Main|Artifact) = 0.5).

SPIRE moves images from {Both, Neither} to {Just Main, Just Spurious} if p > 0.5, i.e. p = P(Main|Spurious) but if p < 0.5 then SPIRE moves images from {Just Main, Just Spurious} to {Both, Neither}.

-

-

Contrastive_Counterfactual_Visual_Explanations_With_Overdetermination

-

Maheep's Notes

The paper proposes a system CLEAR Image that explains an image’s classification probability by contrasting the image with a corresponding image generated automatically via adversarial learning. It also provides an event with a label of "*overdetermination*", which is given when the model is more than sure that the label is something. CLEAR Image segments x into different segments S = {s1 ,...,sn } and then applies the same segmentation to x' creating S' = {s'1,...., s'n}. CLEAR Image determines the contributions that different subsets of S make to y by substituting with the corresponding segments of S'. This is impelmeted by:

A counterfactual image is generated by GAN which is then segmented and those segments by a certian threshold replace the segment in the original image and therfore we get many perturbed images. Each perturbed image is then passed through the model m to identify the classification probability of all the classes and therfore the significance of every segment is obtained that is contributing in the layer. If the

-

-

Training_calibration‐based_counterfactual_explainers_for_deep_learning

-

Maheep's Notes

The paper proposes TraCE for deep medical imaging that trained using callibaration-technique to handle the problem of counterfactual explanation, particularly when the model's prediciton are not well-callibrated due to which it produces irrelevant feature manipulation. The system is implemeted using the 3 methods, i.e.

(1.) an auto-encoding convolutional neural network to construct a low-dimensional, continuous latent space for the training data

(2.) a predictive model that takes as input the latent representations and outputs the desired target attribute along with its prediction uncertainty

(3.) a counterfactual optimization strategy that uses an uncertainty-based calibration objective to reliably elucidate the intricate relationships between image signatures and the target attribute.

TraCE works on the following metrics to evaluate the counterfactual images, i.e.

Validity: ratio of the counterfactuals that actually have the desired target attribute to the total number of counterfactuals

The confidence of the image and sparsity, i.e. ratio of number of pixels altered to total no of pixels. Th eother 2 metrcs are proximity, i.e. average l2 distance of each counterfactual to the K-nearest training samples in the latent space and Realism score so as to have the generated image is close to the true data manifold.

TraCE reveals attribute relationships by generating counterfactual image using the different attribute like age "A" and diagnosis predictor "D".

delta_A_x = x - x_a';delta_D_x = x - x_d'

The x_a' is the counterfactual image on the basis for age and same for x_d'.

x' = x + delta_A_x + delta_D_xand hence atlast we evaluate the sensitivity of a feature byF_d(x') - F_d(x_d'), i.e. F_d is the classifier of diagnosis.

-

-

Generating Natural Counterfactual Visual Explanations

-

Maheep's Notes

The paper proposes a counterfactual visual explainer that look for counterfactual features belonging to class B that do not exist in class A. They use each counterfactual feature to replace the corresponding class A feature and output a counterfactual text. The counterfactual text contains the B-type features of one part and the A-type features of the remaining parts. Then they use a text-to-image GAN model and the counterfactual text to generate a counterfactual image. They generate the images using the AttGAN and StackGAN and they take the image using the function.

`log(P(B)/P(A))` where P(.) is the classifier probability of a class for obtaining the highest-scoring counterfactual image.

-

-

On Causally Disentangled Representations

-

Maheep's Notes

The paper focuses on causal disentanglement that focus on disentangle factors of variation and therefore proposes two new metrics to study causal disentanglement and one dataset named CANDLE. Generative factors G is said to be disentangled only if they are influenced by their parents and not confounders. The system is implemented as:

A latent model M (e,g, pX ) with an encoder e, generator g and a data distribution pX , assumes a prior p(Z) on the latent space, and a generator g is parametrized as p(X|Z), then posterior p(Z|X) is approzimated using a variational distribution q (Z|X) parametrized by another deep neural network, called the encoder e. Therefore we obtain a z for every g and acts as a proxy for it.

1.) **Unconfoundess metric**: If a model is able to map each Gi to a unique ZI ,the learned latent space Z is unconfounded and hence the property is known as unconfoundedness.

2.)**Counterfactual Generativeness**: a counterfactual instance of x w.r.t. generative factor Gi , x'(i.e., the counterfactual of x with change in only Gi) can be generated by intervening on the latents of x corresponding to Gi , ZIx and any change in the latent dimensions of Z that are x not responsible for generating G i , i.e. Z\I, should have no influence on the generated counterfactual instance x' w.r.t. generative factor Gi. It can be computed using the Avergae Causal Effect(ACE).

-

-

INTERPRETABILITY_THROUGH_INVERTIBILITY_A_DEEP_CONVOLUTIONAL_NETWORK

-

Maheep's Notes

The paper proposes a model that generates meaningful, faithful, and ideal counterfactuals. Using PCA on the classifier’s input, we can also create “isofactuals”, i.e. image interpolations with the same outcome but visually meaningful different features. The author argues that a system should provide power to the users to discover hypotheses in the input space themselves with faithful counterfactuals that are ideal. They claim that it could be easily done by combining an invertible deep neural network z = phi(x) with a linear classifier y = wT*phi(x) + b. They generate a counterfatual by altering a feature representation of x along the direction of weight vector, i.e.

z' = z + alpha*wwherex' = phi_inverse(z + alpha*w). Any change orthogonal to w will create an “isofactual. To show that their counterfactuals are ideal, therfore they verify that no property unrelated to the prediction is changed. Unrealted properties = e(x),e(x) = vT*z, where v is orthogonal to w.e(x') = vT*(z + alpha* w) = vT*z = e(x). To measure the difference between the counterfactual and image intermediate feature map h, i.e.m = |delta_h|*cos(angle(delta_h, h))for every location of intermediate feature map.

-

-

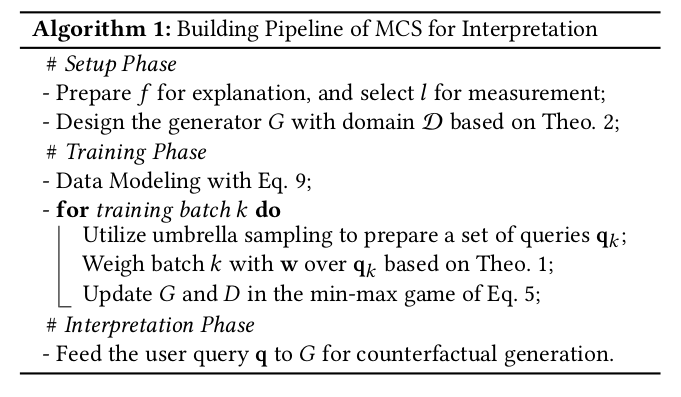

Model-Based Counterfactual Synthesizer for Interpretation

-

Maheep's Notes

The paper focues on eridicating the algorithm-based counterfactual generators which makes them ineffcient for sample generation, because each new query necessitates solving one specific optimization problem at one time and propose Model-based Counterfactual Synthesizer. Existing frameworks mostly assume the same counterfactual universe for different queries. The present methods do not consider the causal dependence among attributes to account for counterfactual feasibility. To take into account the counterfactual universe for rare queries, they novelly employ the umbrella sampling technique, i.e. by using the weighted-sum technique, calculating the weight of each biased distribution, we can then reconstruct the original distribution and conduct evaluations with the umbrella samples obtained. The counterfactual can be generated by giving a specific query q0, insttead of a label using the hypthetical distribution.

-

-

The Intriguing Relation Between Counterfactual Explanations and Adversarial Examples

-

Maheep's Notes

The paper provides the literature regarding the difference between the Counterfactual and Adverserial Example. Some of the points are:

1.) AEs are used to fool the classifier whereas the CRs are used to generate constructive explantions.

2.) AEs show where an ML model fails whereas the Explanations sheds light on how ML algorithms can be improved to make them more robust against AEs

3.) CEs mainly low-dimensional and semantically meaningful features are used, AEs are mostly considered for high-dimensional image data with little semantic meaning of individual features.

4.) Adversarials must be necessarily misclassified while counterfactuals are agnostic in that respect

5.) Closeness to the original input is usually a benefit for adversarials to make them less perceptible whereas counterfactuals focus on closeness to the original input as it plays a significant role for the causal interpretation

-

-

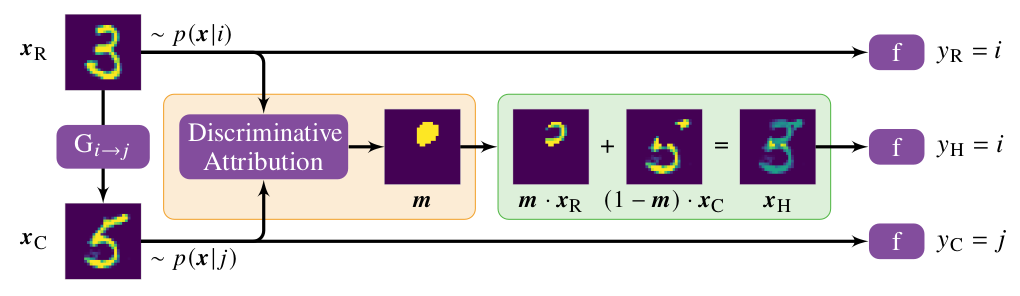

Discriminative Attribution from Counterfactuals

-

Maheep's Notes

The paper proposes a novel technique to combine feature attribution with counterfactual explanations to generate attribution maps that highlight the most discriminative features between pairs of classes. This is implemented as:

They use a cycle-GAN to translate real images x of class i to counterfactual images x'. Then both the images are fed into the Discriminative Attribution model which finds out the most discriminative features separting the 2 image. The most important part is masked out. The part is extracted from the original image x and is combined with the counterfactual image by intiallly masking the region to get the original image.

-

-

Causal Interventional Training for Image Recognition

-

Maheep's Notes

The paper focuses on proposing an augmentaiton technique which focuses on eradicating the bias that is bad and keeping the bias that is good for the model. Therefore the author proposes a causal graph consisting of x:image; y:label; C:context; A:good bias and B:bad bias. The author considers B as the confounding variable b/w the x and C, therefore tries to remove it using the backdoor criteria.

-

-

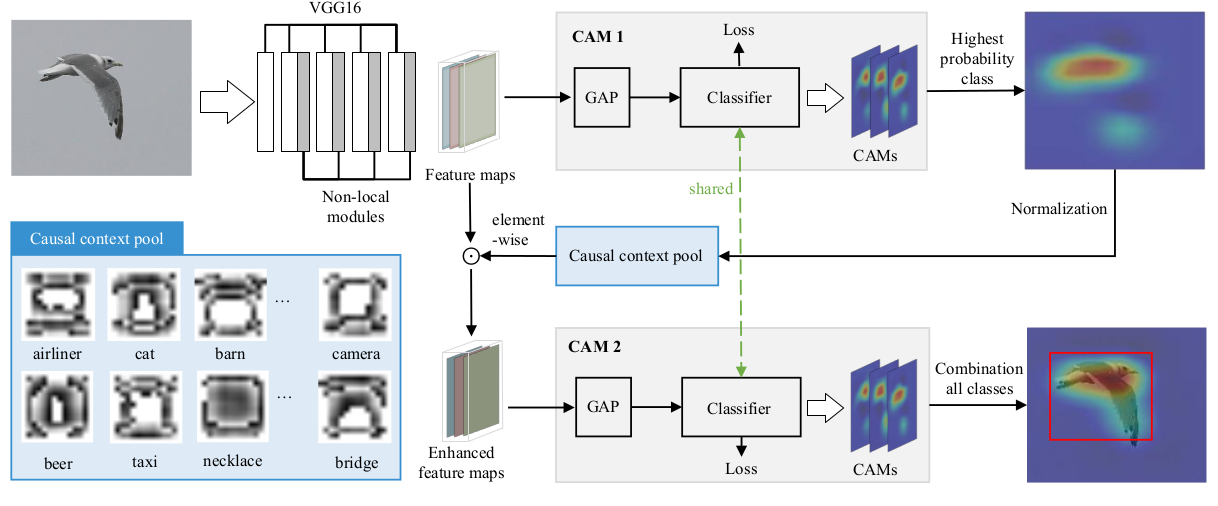

Improving_Weakly_supervised_Object_Localization_via_Causal_Intervention

-

Maheep's Notes

The paper proposes CI-CAM which explores the causalities among image features, contexts, and categories to eliminate the biased object-context entanglement in the class activation maps thus improving the accuracy of object localization. The author argues that in WSCOL context acts as a confounder and therefore eliminates it using backdoor-adjustment. The implement it by the following procedure: -

The architecture contains a backbone network to extract the features. The extracted features are then processed into CAM module where a GAP and classifier module outputs scores which are multipluied by weights to produce class activation maps.

The features are then passed through Causal Context Pool which stores the context of all images of every class, then other CAM module repeats the same procudure as of CAM1 and outputs image with class activation map.

-

-

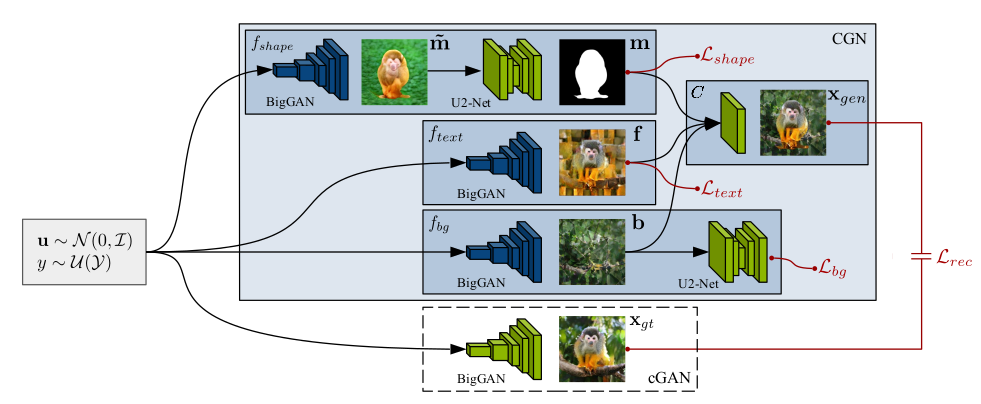

COUNTERFACTUAL GENERATIVE NETWORKS

-

Maheep's Notes

The paper aims to propose a method so as to train the model having robustness on OOD data. To achieve this the author uses the concept of causilty, i.e. *independent mechanism(IM)* to generate counterfactual images. The author considers 3 IM's:

1.) One generates the object’s shape.

2.) The second generates the object’s texture.

3.) The third generates the background.

In this way the author makes a connection b/w the fields of causality, disentangled representaion, and invariant classifiers. The author uses cGAN with these learned IM to generate images based on the attributes given above.

-

-

Discovering Causal Signals in Images

-

Maheep's Notes

A classifier is proposed that focuses on finding the causal direction between pairs of random variables, given samples from their joint distribution. Additionally they use causal direction classifier to effectively distinguish between features of objects and features of their contexts in collections of static images. In this framework, causal relations are established when objects exercise some of their causal dispositions, which are sometimes informally called the powers of objects. Based on it the author provides two hypothesis:

1.) Image datasets carry an observable statistical signal revealing the asymmetric relationship between object categories that results from their causal dispositions.

2.) There exists an observable statistical dependence between object features and anticausal features, basically anticausal features are those which is caused by the presence of an object in the scene. The statistical dependence between context features and causal features is nonexistent or much weaker.

The author proposes a Neural Causation Coefficient (NCC), able to learn causation from a corpus of labeled data. The author argues that the for joint distributions that occur in the real world, the different causal interpretations may not be equally likely. That is, the causal direction between typical variables of interest may leave a detectable signature in their joint distribution. Additionally they assume that wheneverX causes Y, the cause, noise and mechanism are independent but we can identify the footprints of causality when we try toY causes Xas the noise and Y will not be independent.

-

-

Learning to Contrast the Counterfactual Samples for Robust Visual Question Answering

-

Maheep's Notes

The paper proposes we introduce a novel self-supervised contrastive learning mechanism to learn the relationship between original samples, factual samples and counterfactual samples. They implement it by generating facutal and counterfactual image and try to increase the mutual information between the joint embedding ofQandV(mm(Q,V) = a), and joint embedding ofQandV_+ (factual)(mm(Q,V+) = p)by taking a cosine similarity b/w them. They also aim to decrease mutual information b/wmm(Q,V-) = nandaby taking cosine similarity(s(a,n)). The final formula becomes:

L_c = E[-log(e^s(a,p)/e^s(a,p)+e^s(a,n))]

The total loss becomesL = lambda_1*L_c + lambda_2*L_vqa

-

-

-

Maheep's Notes

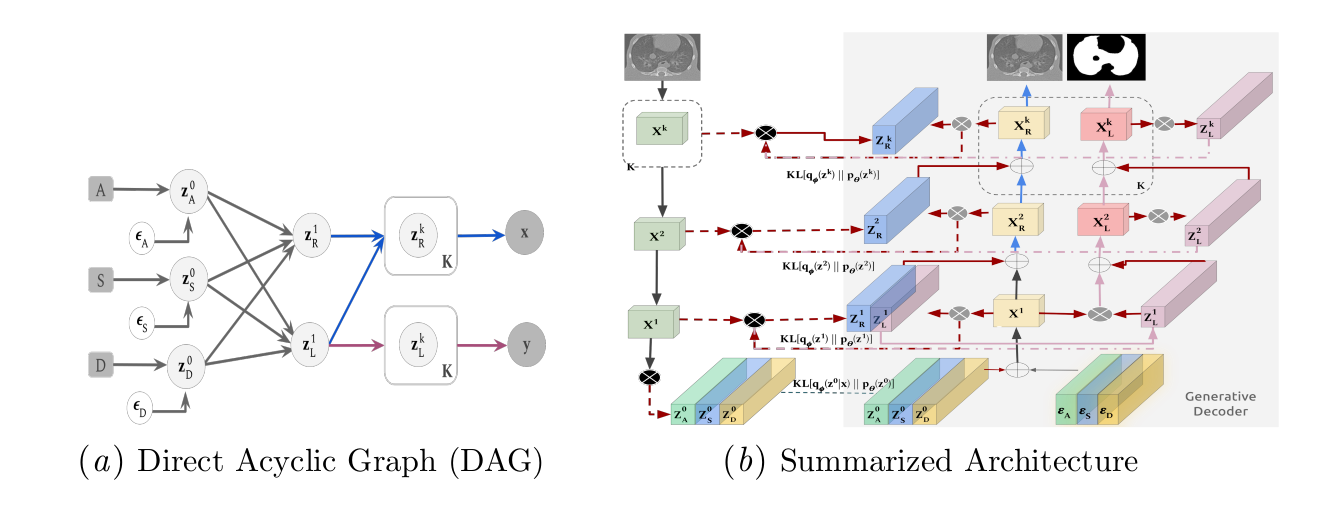

The paper focus on issue of generalization and therefore propose Latent Causal Invariance Model(LaCIM). The author introduce variables that are separated into (a) output-causative factors, i.e.Sand (b) others that are spuriously correlatedZfrom V(latent variable).

There exists a spurious correlation b/wSandZ. The author argues that we will get ap(y|do(s*)) = p(y|s*)

-

-

Two Causal Principles for Improving Visual Dialog

-

Maheep's Notes

The paper focuses to eliminate the spurious correltaions in the task of Visual Dialogue and therfore proposes 2 principles:

1.) The dialog history to the answer model provides a harmful shortcut bias threfore the direct effect of history on answer should be eliminated.

2.) There is an unobserved confounder for history, question, and answer, leading to spurious correlations from training data which should be identified and be eliminated using the backdoor method.

Now the main crunch of the paper arises as the confounder is unobserved so how can we apply the backdoor method? To solve it the author argues that this confounder comes from the annotator and thus can be seen in thea_i(answer) is a sentence observed from the “mind” of user u during dataset collection. Then,sigma(P(A)*P(u|H)),His history andAis answer can be approximated assigma(P(A)P(a_i|H)).They further usep(a_i|QT), whereQTis Question Type to approximateP(a_i|H)because of two reasons: First,P (a_i|H)essentially describes a prior knowledge abouta_iwithout comprehending the whole{Q, H, I} triplet.

-

-

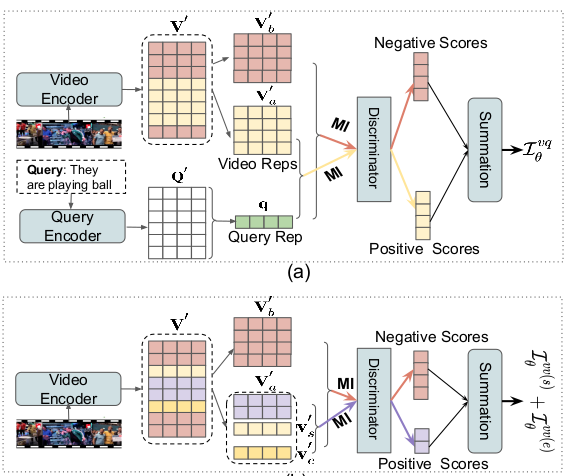

Weakly-Supervised Video Object Grounding via Causal Intervention

-

Maheep's Notes

The paper aims to localize objects described in the sentence to visual regions in the video by deconfounding the object-relevant associations given the video-sentence annotations. The author argues that the frame is made up of the content(C), i.e. factors that cause the object’s visual appearances in spatial and temporal throughout the video are grouped into a category and Style(S) is the background or scenes. The author argues that the S does not play any role in object grounding and only act a confounder. In addition to that there exist one more confounder, i.eZthat occurs due to some specific objects occuring frequently. The style confounder is replaced by using the contrastive learning, where the counterfactual examples are created by taking the vectors from a memory bank by taking the top sleected top regions for described object and then the selected regions and frames are grouped together into frame-level content(H_c) and region-level content(U_c), and the rest of the regions are grouped as U_s and H_s. These regions are the converted to counterfactual using these memory vectors which were created by taking the randomly selected regions in training set. The most similar one and replaces the original one, to generate examples to have them hard to distinguish from real ones contrastive learning is used. The equation looks like:IE(p|do(U_s = U_s_generated)) < IE(p|do(U_c = U_c_generated))

IE(p|do(H_s = H_s_generated)) < IE(p|do(H_c = H_c_generated))

where theIEis Interventional Effect. As for the next confounder they uses the textual embedding of o_k(object) essentially provides the stable cluster center in common embedding space for its vague and diverse visual region embeddings in different videos. Therefore, by taking the textual embedding of the object as the substitute of every possible object z and apply backdoor adjustment.

-

-

Towards Unbiased Visual Emotion Recognition via Causal Intervention

-

Maheep's Notes

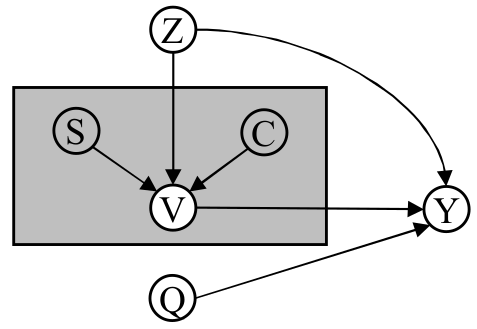

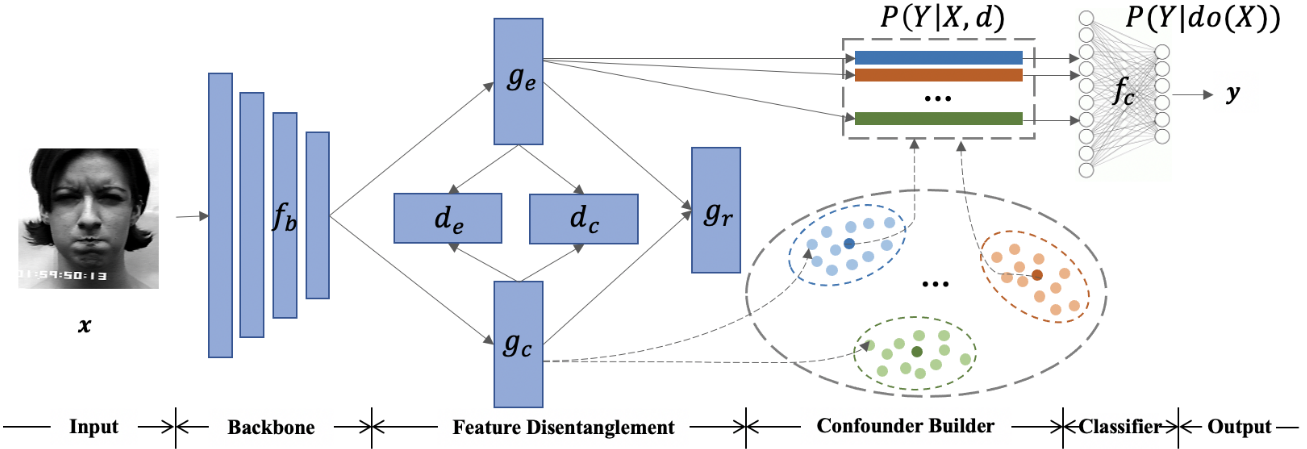

The paper we propose a novel Interventional Emotion Recognition Network (IERN) to achieve the backdoor adjustment on the confounder, i.e. context of the image(C). The author implements it as:

IERN, which is composed of four parts:

1.) Backbone

> It extracts the feature embedding of the image.

2.) Feature Disentanglement

> It disentangles the emotions and context from the image, having emotion dicriminator(d_e) and context discriminator(d_c) which ensures that the extracted feature are separated and has the desired feature. The loss comprises as :

L = CE(d_e(g_e(f_b(x))), y_e) + MSE(d_c(g_e(f_b(x))), 1/n)where g_e is emotion generator and y_e is the emotion label and n is the number of counfounder and the same loss is for context replacing d_e, g_e and d_c by d_c, g_c and d_e, here n represents number of emotions. To ensure that the separated features fall within reason-able domains, IERN should be capable of reconstructing the base feature f_b(x), i.e.L =MSE(g_r(g_e(f_b(x)), g_c(f_b(x))), f_b(x))

3.) Confounder Builder

> The purpose of the confounder builder is to combine each emotion feature with different context features so as to avoid the bias towards the observed context strata.

4.) Classifier

> It is simply used for prediciton.

-

-

Human Trajectory Prediction via Counterfactual Analysis

-

Maheep's Notes

The paper propose a counterfactual analysis method for human trajectory prediction. They cut off the inference from environment to trajectory by constructing the counterfactual intervention on the trajectory itself. Finally, they compare the factual and counterfactual trajectory clues to alleviate the effects of environment bias and highlight the trajectory clues.

They Y_causal is defined as

Y_causal = Y_i - Y-i(do(X_i = x_i))

They define a generative model which generates trajectory by a noise latent variable Z indicated byY*_i. Finally the loss is defined as:

Y_causal = Y*_i - Y*_i(do(X_i = x_i))

L_causalGAN = L2(Y_i, Y_causal) + log(D(Y_i)) + log(1-D(Y_causal)), where D is the discriminator.

-

-

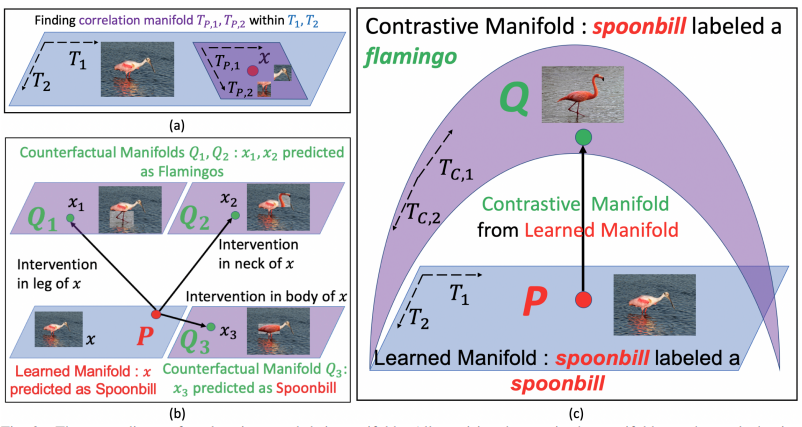

Proactive Pseudo-Intervention: Contrastive Learning For Interpretable Vision Models

-

Maheep's Notes

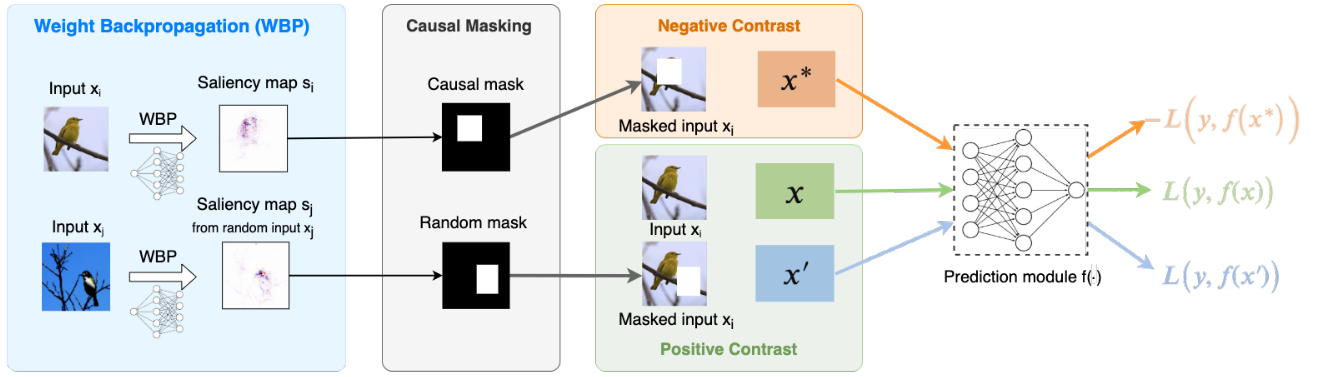

The paper present a novel contrastive learning strategy called Proactive Pseudo-Intervention (PPI) that leverages proactive interventions to guard against image features with no causal relevance. The PPI consists of three main components:

(i) a saliency mapping module that highlights causally relevant features which are obtained using the WBP which backpropagates the weights through layers to compute the contributions of each input pixel, which is truly faithful to the model, and WBP tends to highlight the target objects themselves rather than the background