NEW VERSION here: LAEO-Net++

Support code for LAEO-Net paper (CVPR'2019).

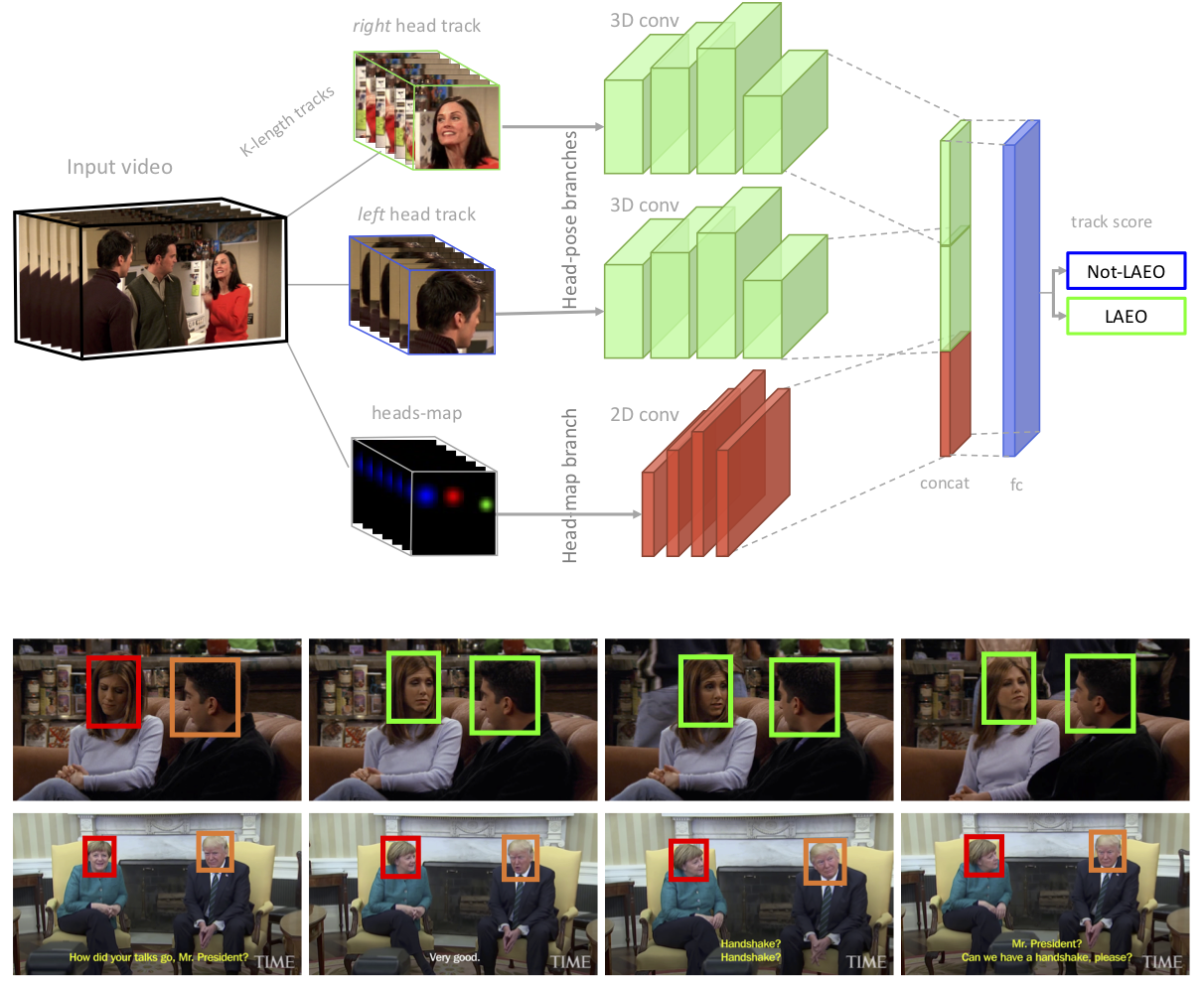

LAEO-Net receives as input two tracks of head crops and a map containing the relative position of the heads, and returns the probability that the two heads are looking at each other (LAEO).

The following demo predicts the LAEO label on a pair of heads included in subdirectory data/ava_val_crop. You can choose either to use a model trained on UCO-LAEO or a model trained on AVA-LAEO.

    cd laeonet
    python mains/ln_demo_test.py

Training code will be available soon.
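To make the "map containing the relative position of the heads" input more concrete, here is a minimal sketch of how such a map could be built from two head bounding boxes. It is an illustration only: the function name `make_head_map`, the 16x16 grid size, and the 1/2 cell labels are assumptions made for this example, not the encoding used by the released models.

```python
def make_head_map(box_a, box_b, frame_size, map_size=(16, 16)):
    """Rasterize two head boxes into a small 2D grid (illustrative only).

    box_a, box_b: (x_min, y_min, x_max, y_max) in pixels.
    frame_size:   (width, height) of the video frame in pixels.
    map_size:     (columns, rows) of the output grid (assumed value).
    Cells covered by head A are set to 1 and by head B to 2; this
    labelling is a hypothetical choice, not the repository's format.
    """
    frame_w, frame_h = frame_size
    map_w, map_h = map_size
    grid = [[0] * map_w for _ in range(map_h)]

    def to_cell(value, frame_len, map_len):
        # Scale a pixel coordinate to a grid index and clamp it to the grid.
        return max(0, min(map_len - 1, int(value * map_len / frame_len)))

    for label, (x0, y0, x1, y1) in ((1, box_a), (2, box_b)):
        c0 = to_cell(x0, frame_w, map_w)
        c1 = to_cell(x1 - 1, frame_w, map_w)
        r0 = to_cell(y0, frame_h, map_h)
        r1 = to_cell(y1 - 1, frame_h, map_h)
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                grid[r][c] = label
    return grid


# Example: two heads at opposite corners of a 160x160 frame.
head_map = make_head_map((0, 0, 20, 20), (140, 140, 160, 160), (160, 160))
```

The resulting grid keeps only where the two heads are relative to each other in the frame, which is the kind of geometric cue the head crops alone do not carry.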

The following block shows how to run a more complete demo where, given a video file, head detection, tracking and LAEO classification are applied.

    cd laeonet
    python mains/ln_demo_det_track_laeo.py

Note that the detector provided in directory laeonet-head-det-track is a re-implementation of the Matlab detector originally used for the CVPR publication. Therefore, some results might differ slightly.

The saved models require specific library versions to be loaded properly. See the LAEO-Net Wiki for details. For example, they are known not to load with versions of Python greater than 3.5.x.
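As a convenience, the Python limit mentioned above can be checked before trying to load a model. The following is a minimal sketch; the helper name `python_within_known_limit` is hypothetical and not part of this repository. It encodes only the upper bound stated here (3.5.x). The required library versions are listed in the Wiki and are not checked.

```python
import sys

# Upper bound taken from the note above: Python > 3.5.x is known not to work.
MAX_KNOWN_GOOD = (3, 5)


def python_within_known_limit(version_info=None):
    """Return True if the (major, minor) version does not exceed 3.5.

    version_info defaults to the running interpreter; a tuple such as
    (3, 5, 2) can be passed instead, which is handy for testing.
    """
    major, minor = tuple(version_info or sys.version_info)[:2]
    return (major, minor) <= MAX_KNOWN_GOOD
```

For example, calling `python_within_known_limit()` at the top of a script and printing a warning when it returns `False` gives an early hint before a model load fails with a less obvious error.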

    @inproceedings{marin19cvpr,
      author    = {Mar\'in-Jim\'enez, Manuel J. and Kalogeiton, Vicky and Medina-Su\'arez, Pablo and Zisserman, Andrew},
      title     = {{LAEO-Net}: revisiting people {Looking At Each Other} in videos},
      booktitle = CVPR,
      year      = {2019}
    }