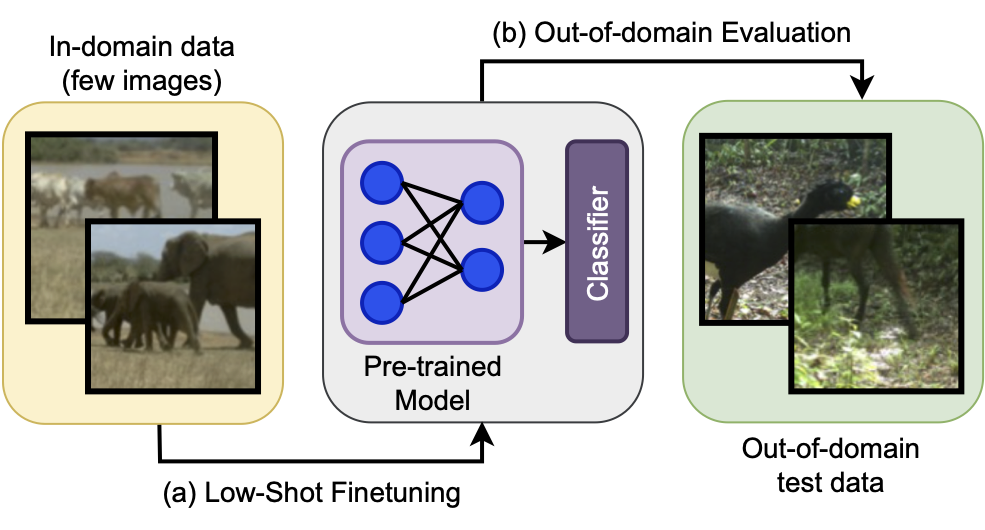

This repository contains the code for our ICCV 2023 paper, Benchmarking Low-Shot Robustness to Natural Distribution Shifts. To clone the full repository along with its submodules, run the following command:

git clone --recurse-submodules https://github.com/Aaditya-Singh/Low-Shot-Robustness.git

- Python 3.8 or newer (preferably through Conda) and Cyanure.

- Install the WILDS benchmark as a package, along with the other requirements.

- Please refer to WiSE-FT and the WILDS benchmark for instructions on downloading the datasets.

- The low-shot subsets used in the paper can be found in the subsets directory.

- Code for creating such low-shot subsets can be found in the create_subsets directory.
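As a rough illustration of what building a low-shot subset involves, the sketch below draws a fixed number of images per class with a seeded random generator. It is not the code from the create_subsets directory; the function name `sample_low_shot`, the `(path, label)` input format, and the example file names are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def sample_low_shot(samples, shots_per_class, seed=0):
    """Return up to `shots_per_class` (path, label) pairs for every label.

    `samples` is an iterable of (path, label) pairs. Sorting before sampling
    keeps the result independent of the input order for a given seed.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # local generator, so global state is untouched
    subset = []
    for label in sorted(by_label):
        paths = sorted(by_label[label])
        k = min(shots_per_class, len(paths))  # classes may have fewer images
        subset.extend((path, label) for path in rng.sample(paths, k))
    return subset


# Hypothetical toy dataset: 3 classes with 10 images each.
samples = [(f"class{c}/img{i}.jpg", c) for c in range(3) for i in range(10)]
subset = sample_low_shot(samples, shots_per_class=2, seed=0)
```

Fixing the seed makes the subset reproducible, which matters when comparing methods on the same low-shot split.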

| Pretrained model | Link |
| --- | --- |
| MSN checkpoints | download |
| DINO checkpoints | download |
| DEIT checkpoints | download |
| SwAV checkpoints | download |

CLIP ViTB-16 with zero-shot head weights

| Dataset | Link |
| --- | --- |
| ImageNet | download |
| iWildCam | download |
| Camelyon | download |

The bash commands used for fine-tuning can be found in the commands directory. Methods other than Logistic Regression and Mean Centroid Classifier additionally make use of the config yamls. We summarize some of the important flags and keys for experimentation below.

- `root_path_train/test`: Specify the root directory containing the images for training or testing, e.g. `../datasets/`.
- `image_folder_train/test`: Specify the directory containing the images for the different classes, e.g. `imagenet/`.
- `val_split`: Set to `id_val` for training and `val` for out-of-domain (OOD) testing for WILDS datasets.
- `training`: Set to `true` for training and `false` for evaluation.
- `finetuning`: Set to `true` for full fine-tuning and `false` for training only the classifier.
- `eval_type`: Should be set to `bslplpl` for Baseline++. Default is `lineval`.
- `folder` and `pretrained_path`: Specify the directory and path to save and load model weights.
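To show how the keys above combine, here is a minimal Python sketch that assembles and sanity-checks a few settings. The flat dictionary layout, the helper name `make_config`, the expansion of `root_path_train/test` into a `root_path_train` key, and the `folder` and `pretrained_path` values are assumptions for illustration; the config yamls in this repository remain the reference for the actual structure.

```python
# Values named in this README; the repository may accept others.
EVAL_TYPES = {"lineval", "bslplpl", "zeroshot"}
VAL_SPLITS = {"id_val", "val"}


def make_config(training, finetuning, eval_type="lineval", val_split="id_val"):
    """Build a flat settings dict (hypothetical layout) after basic checks."""
    if eval_type not in EVAL_TYPES:
        raise ValueError(f"unknown eval_type: {eval_type!r}")
    if val_split not in VAL_SPLITS:
        raise ValueError(f"unknown val_split: {val_split!r}")
    return {
        "root_path_train": "../datasets/",    # example value from the README
        "image_folder_train": "imagenet/",    # example value from the README
        "val_split": val_split,               # only relevant for WILDS datasets
        "training": training,
        "finetuning": finetuning,
        "eval_type": eval_type,
        "folder": "checkpoints/",                      # hypothetical path
        "pretrained_path": "checkpoints/encoder.pth",  # hypothetical path
    }


# Train only the classifier (default linear evaluation).
linear_probe = make_config(training=True, finetuning=False)
# Train a Baseline++ classifier.
baseline_pp = make_config(training=True, finetuning=False, eval_type="bslplpl")
# Evaluate on the OOD split of a WILDS dataset.
ood_eval = make_config(training=False, finetuning=False, val_split="val")
```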

For details and parameters beyond those listed here, please refer to the `--help` option. Details for full fine-tuning on ImageNet can be found in our MAE codebase.

This codebase supports LP-FT and WiSE-FT interventions. Note that the same general instructions are also applicable for these interventions. We summarize some other important details below.

- For CLIP, the `clip` model should be loaded and `clip.visual` weights should be saved offline.

- CLIP's zero-shot head weights can be saved with the command provided here.

- Alternatively, the full set of weights (encoder and zero-shot head) for ViTB-16 can be found here.

- Set `finetuning` to `true` and `eval_type` to `zeroshot` for full fine-tuning with these weights.

- This command with `Type=wiseft` can be used to save WiSE-FT weights after full fine-tuning.
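For readers unfamiliar with WiSE-FT, the intervention linearly interpolates between the zero-shot and the fully fine-tuned weights. The sketch below illustrates that idea on plain dictionaries of parameters. It is not the command referenced above; the function name `interpolate_weights`, the default `alpha=0.5`, and the toy parameter names are assumptions for illustration.

```python
def interpolate_weights(zeroshot, finetuned, alpha=0.5):
    """Return (1 - alpha) * zeroshot + alpha * finetuned for every parameter.

    Both arguments map parameter names to values that support scalar
    multiplication and addition (plain floats here; tensors from a
    state dict would work the same way).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if zeroshot.keys() != finetuned.keys():
        raise KeyError("both models must have the same parameter names")
    return {
        name: (1.0 - alpha) * zeroshot[name] + alpha * finetuned[name]
        for name in zeroshot
    }


# Toy example with scalar "weights" (hypothetical parameter names).
zeroshot = {"encoder.w": 0.0, "head.b": 2.0}
finetuned = {"encoder.w": 1.0, "head.b": 4.0}
wiseft = interpolate_weights(zeroshot, finetuned, alpha=0.25)
```

With `alpha=0` the result equals the zero-shot model and with `alpha=1` it equals the fine-tuned model; intermediate values trade off between the two.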

Please refer to our RobustViT and Model Soups codebases for additional interventions. We also provide them as submodules in this repository. The command for cloning the full repository is provided here.

Please consider citing our paper if you find this repository helpful:

@InProceedings{Singh_2023_ICCV,

author = {Singh, Aaditya and Sarangmath, Kartik and Chattopadhyay, Prithvijit and Hoffman, Judy},

title = {Benchmarking Low-Shot Robustness to Natural Distribution Shifts},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {16232-16242}

}

We follow these repositories and thank the authors for open-sourcing their code.

- [1]: Masked Siamese Networks

- [2]: Masked Autoencoders