High-Resolution Virtual Try-On with Misalignment and Occlusion-Handled Conditions

Sangyun Lee*1, Gyojung Gu*2,3, Sunghyun Park2, Seunghwan Choi2, Jaegul Choo2

1Soongsil University, 2KAIST, 3Nestyle

In ECCV 2022 (* indicates equal contribution)

Paper: https://arxiv.org/abs/2206.14180

Project page: https://koo616.github.io/HR-VITON

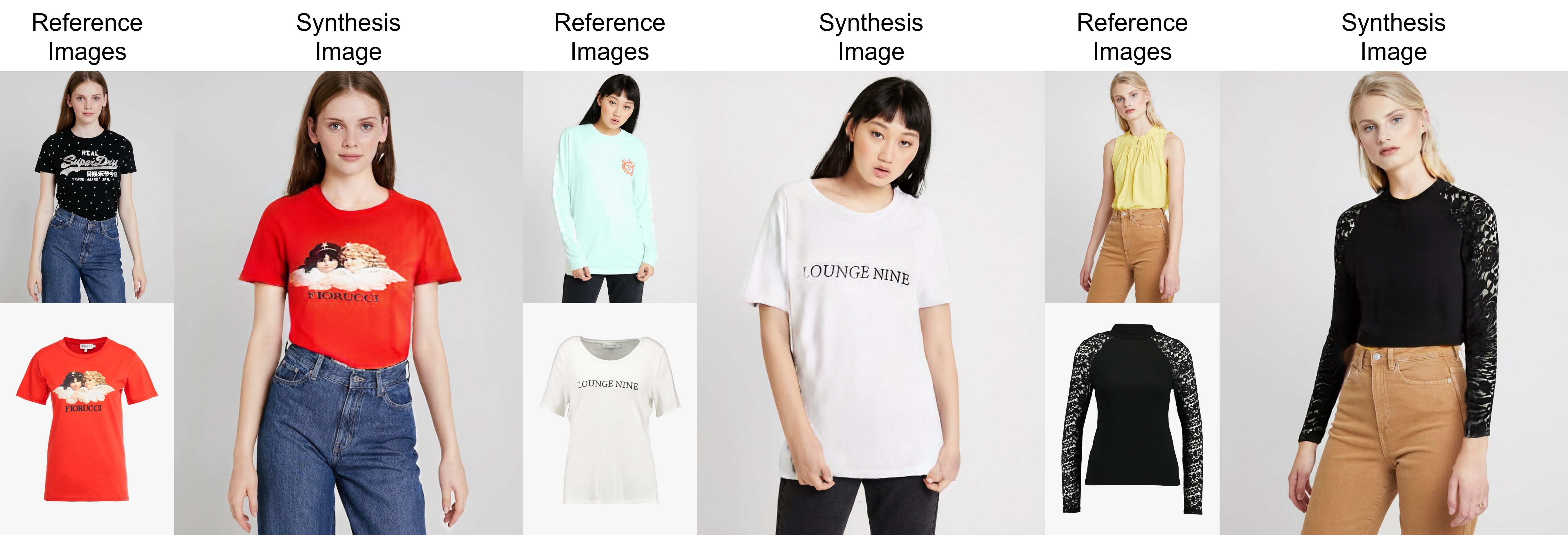

Abstract: Image-based virtual try-on aims to synthesize an image of a person wearing a given clothing item. To solve the task, the existing methods warp the clothing item to fit the person's body and generate the segmentation map of the person wearing the item before fusing the item with the person. However, when the warping and the segmentation generation stages operate individually without information exchange, the misalignment between the warped clothes and the segmentation map occurs, which leads to the artifacts in the final image. The information disconnection also causes excessive warping near the clothing regions occluded by the body parts, so-called pixel-squeezing artifacts. To settle the issues, we propose a novel try-on condition generator as a unified module of the two stages (i.e., warping and segmentation generation stages). A newly proposed feature fusion block in the condition generator implements the information exchange, and the condition generator does not create any misalignment or pixel-squeezing artifacts. We also introduce discriminator rejection that filters out the incorrect segmentation map predictions and assures the performance of virtual try-on frameworks. Experiments on a high-resolution dataset demonstrate that our model successfully handles the misalignment and occlusion, and significantly outperforms the baselines.

Clone this repository:

git clone https://github.com/sangyun884/HR-VITON.git

cd ./HR-VITON/

Install PyTorch and other dependencies:

conda create -n {env_name} python=3.8

conda activate {env_name}

conda install pytorch torchvision torchaudio cudatoolkit=11.1 -c pytorch-lts -c nvidia

pip install opencv-python torchgeometry Pillow tqdm tensorboardX scikit-image scipy
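After installation, a quick check can confirm that the packages listed above are visible to the interpreter. The following is a minimal sketch and not part of the repository: the helper name `missing_modules` is hypothetical, and the list uses the standard import names for the pip packages above (`cv2` for opencv-python, `PIL` for Pillow, `skimage` for scikit-image).

```python
import importlib.util

# Import names for the packages installed above. Some pip package names differ
# from their import names (opencv-python -> cv2, Pillow -> PIL,
# scikit-image -> skimage).
REQUIRED_MODULES = [
    "torch", "torchvision", "cv2", "torchgeometry",
    "PIL", "tqdm", "tensorboardX", "skimage", "scipy",
]


def missing_modules(names):
    """Return the top-level module names that cannot be located."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules were found.")
```

The check only locates the modules without importing them, so it does not verify that CUDA is usable.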

We train and evaluate our model using the dataset from VITON-HD: High-Resolution Virtual Try-On via Misalignment-Aware Normalization.

To download the dataset, please see https://github.com/shadow2496/VITON-HD.

We assume that you have downloaded it into ./data.

Here are the download links for each model checkpoint:

- Try-on condition generator: link

- Try-on condition generator (discriminator): link

- Try-on image generator: link

- AlexNet (LPIPS): link. We assume that you have downloaded it into ./eval_models/weights/v0.1.

Test the model:

python3 test_generator.py --occlusion --test_name {test_name} --tocg_checkpoint {condition generator ckpt} --gpu_ids {gpu_ids} --gen_checkpoint {image generator ckpt} --datasetting unpaired --dataroot {dataset_path} --data_list {pair_list_textfile}

Train the try-on condition generator:

python3 train_condition.py --gpu_ids {gpu_ids} --Ddownx2 --Ddropout --lasttvonly --interflowloss --occlusion

Train the try-on image generator:

python3 train_generator.py --name test -b 4 -j 8 --gpu_ids {gpu_ids} --fp16 --tocg_checkpoint {condition generator ckpt path} --occlusion

This stage takes approximately 4 days with two RTX 3090 GPUs. Tested environment: PyTorch 1.8.2+cu111.
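When running the test script over several checkpoints or pair lists, it can help to assemble the test_generator.py command programmatically. The sketch below is an illustration only: `build_test_command` is a hypothetical helper, the default GPU id and all file paths are placeholder assumptions, and only the flags shown in the command above are used.

```python
import shlex


def build_test_command(test_name, tocg_checkpoint, gen_checkpoint,
                       dataroot, data_list, gpu_ids="0"):
    """Assemble the test_generator.py invocation as an argument list."""
    return [
        "python3", "test_generator.py",
        "--occlusion",
        "--test_name", test_name,
        "--tocg_checkpoint", tocg_checkpoint,
        "--gpu_ids", str(gpu_ids),
        "--gen_checkpoint", gen_checkpoint,
        "--datasetting", "unpaired",
        "--dataroot", dataroot,
        "--data_list", data_list,
    ]


# Placeholder values for illustration only; substitute your own paths.
cmd = build_test_command(
    test_name="demo",
    tocg_checkpoint="path/to/condition_generator.pth",
    gen_checkpoint="path/to/image_generator.pth",
    dataroot="./data",
    data_list="path/to/pair_list.txt",
)
# Print the shell-quoted command instead of running it.
print(shlex.join(cmd))
```

To launch the command from Python, the list can be passed to `subprocess.run(cmd, check=True)` from the repository root.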

To use the "--fp16" option, you need to install the apex library.
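Because "--fp16" depends on apex, one option is to add the flag only when apex can be found. The following is a hedged sketch rather than repository code: `apex_available` and `build_train_generator_command` are hypothetical helpers, the checkpoint path and default GPU id are placeholders, and the remaining arguments mirror the train_generator.py command given in this README.

```python
import importlib.util


def apex_available():
    """Return True if a top-level module named 'apex' can be located."""
    return importlib.util.find_spec("apex") is not None


def build_train_generator_command(tocg_checkpoint, gpu_ids="0", use_fp16=None):
    """Assemble the train_generator.py invocation as an argument list.

    If use_fp16 is None, --fp16 is included only when apex is installed.
    """
    if use_fp16 is None:
        use_fp16 = apex_available()
    cmd = [
        "python3", "train_generator.py",
        "--name", "test", "-b", "4", "-j", "8",
        "--gpu_ids", str(gpu_ids),
    ]
    if use_fp16:
        cmd.append("--fp16")
    cmd += ["--tocg_checkpoint", tocg_checkpoint, "--occlusion"]
    return cmd


# Placeholder checkpoint path for illustration only.
print(" ".join(build_train_generator_command("path/to/condition_generator.pth")))
```

Passing `use_fp16=True` or `use_fp16=False` overrides the automatic check.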

All material is made available under Creative Commons BY-NC 4.0. You can use, redistribute, and adapt the material for non-commercial purposes, as long as you give appropriate credit by citing our paper and indicate any changes that you've made.

If you find this work useful for your research, please cite our paper:

@article{lee2022hrviton,

title={High-Resolution Virtual Try-On with Misalignment and Occlusion-Handled Conditions},

author={Lee, Sangyun and Gu, Gyojung and Park, Sunghyun and Choi, Seunghwan and Choo, Jaegul},

journal={arXiv preprint arXiv:2206.14180},

year={2022}

}