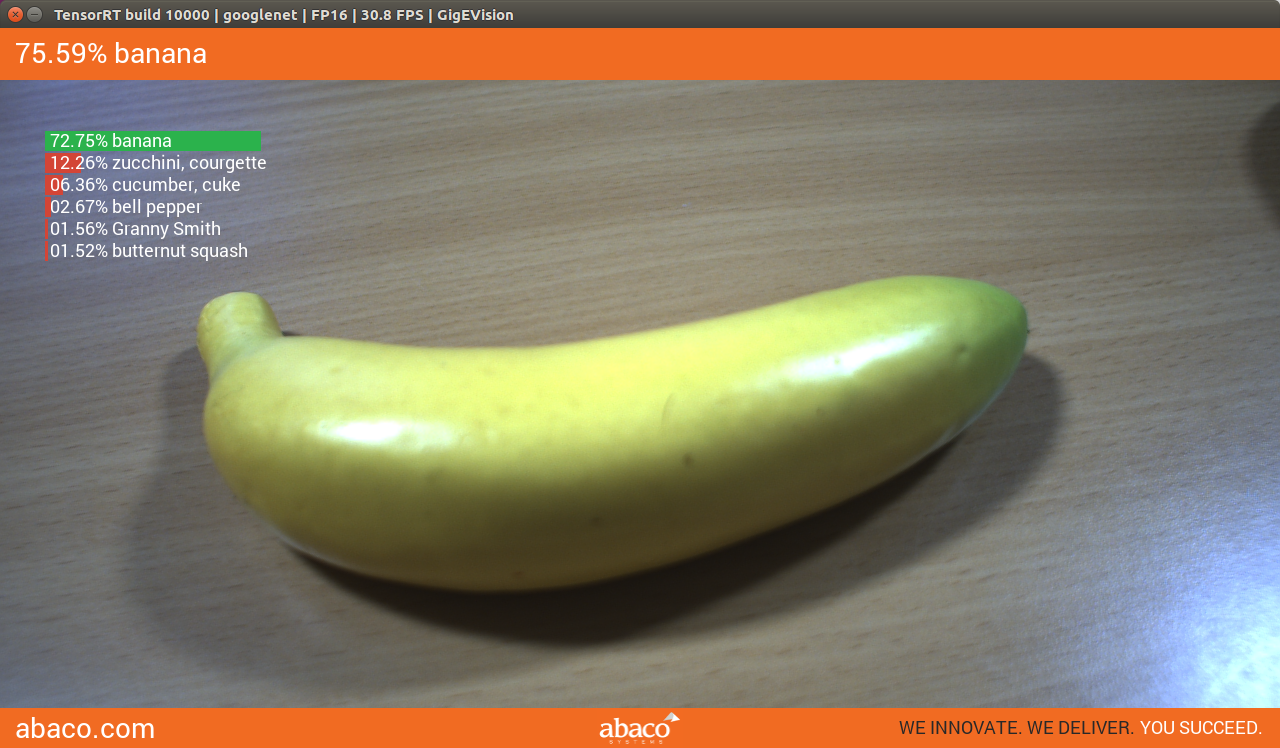

This is a branch of NVIDIA's deep learning inference workshop and end-to-end real-time object recognition library for Jetson TX1 and TX2. Please refer to the main branch for details and tips on setting up this demonstration.

Included in this repo are resources for efficiently deploying neural networks using NVIDIA TensorRT.

Vision primitives, such as imageNet for image recognition and detectNet for object localization, inherit from the shared tensorNet object. Examples are provided for streaming from live camera feed and processing images from disk.
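The following C++ sketch is a deliberately simplified illustration of the shared-base-class design. Only the class names tensorNet, imageNet and detectNet come from this README. Every member, constructor and method below is hypothetical and does not reflect the library's real interface.

```cpp
#include <string>
#include <utility>

// Hypothetical, simplified sketch: only the class names come from the README.
class tensorNet {
public:
    virtual ~tensorNet() = default;
    const std::string& modelPath() const { return model_; }
    virtual std::string kind() const = 0;   // what the primitive is for
protected:
    explicit tensorNet(std::string model) : model_(std::move(model)) {}
private:
    std::string model_;   // state shared by every derived primitive
};

class imageNet : public tensorNet {
public:
    explicit imageNet(std::string model) : tensorNet(std::move(model)) {}
    std::string kind() const override { return "image recognition"; }
};

class detectNet : public tensorNet {
public:
    explicit detectNet(std::string model) : tensorNet(std::move(model)) {}
    std::string kind() const override { return "object localization"; }
};
```

Because both primitives derive from tensorNet, code that needs only the shared functionality can hold a `tensorNet&`, whichever network it wraps.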

Note: Project 'Elroy' is a rugged TX2 box built to military specifications, due for release in mid-2017.

For more information on the source images, go to http://image-net.org/


The Abaco Systems SFF box has no CSI camera, so gstCamera has been modified to support the Logitech C920 webcam. At the moment, only imagenet-camera.cpp has been tested and proven to work with this modification.

Modified pipelines can be passed to the create function to support cameras that use the RGB colour space. The pipeline included in imagenet-camera.cpp for the C920 is shown below:

video/x-raw, width=(int)640, height=(int)480, format=RGB ! videoconvert ! video/x-raw, format=RGB ! videoconvert ! appsink name=mysink
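Because the requested USB resolution is meant to be parametrised rather than fixed, it can help to generate this pipeline string instead of hard-coding it. The sketch below is a hypothetical helper: buildRgbPipeline is not part of the repository. It reproduces the C920 pipeline above for any width and height, and the element chain is copied verbatim from the example.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: rebuilds the C920 RGB pipeline shown above for an
// arbitrary requested resolution. Only width and height vary; the element
// chain (videoconvert stages and appsink name) is copied from the README.
std::string buildRgbPipeline(int width, int height)
{
    std::ostringstream ss;
    ss << "video/x-raw, width=(int)" << width
       << ", height=(int)" << height
       << ", format=RGB ! videoconvert ! video/x-raw, format=RGB"
       << " ! videoconvert ! appsink name=mysink";
    return ss.str();
}
```

For example, buildRgbPipeline(1280, 720) yields the same chain with 1280 x 720 caps. The resulting string would then replace the hard-coded one passed to the create function, whose exact signature is not shown here.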

Note: set VIDEO_SRC to GST_V4L_SCR to use an RGB webcam as the input source.

Note: set VIDEO_SRC to GST_GV_STREAM_SCR to use GigEVision cameras.

Note: set VIDEO_SRC to VIDEO_NV to revert to the Jetson evaluation board's onboard CSI camera.
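VIDEO_SRC is a compile-time choice. The sketch below shows one way to express such a switch with the preprocessor. The three source names are taken from this README, but their numeric values, the default, and the videoSourceName helper are assumptions for illustration. They are not the contents of the repository's config.h.

```cpp
#include <string>

// Hypothetical values: the names come from the README, but the numbers and
// the way config.h actually defines them are assumptions.
#define VIDEO_NV          0
#define GST_V4L_SCR       1
#define GST_GV_STREAM_SCR 2

#ifndef VIDEO_SRC
#define VIDEO_SRC GST_V4L_SCR   // default to the USB (V4L2) RGB webcam
#endif

// Returns a human-readable name for the source selected at compile time.
std::string videoSourceName()
{
#if VIDEO_SRC == GST_V4L_SCR
    return "V4L2 RGB webcam";
#elif VIDEO_SRC == GST_GV_STREAM_SCR
    return "GigEVision camera (Aravis)";
#elif VIDEO_SRC == VIDEO_NV
    return "Jetson onboard CSI camera";
#else
#error "Unknown VIDEO_SRC value"
#endif
}
```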

The aim of this project is to create a network trained on images from military applications, such as air, sea and land, and to update the demonstration to use this retrained network as an example for a defence audience.

Please be patient whilst we build our DIGITS server to retrain the network and watch this space.

- imagenet-camera.cpp updated to use webcam

- detectnet-camera.cpp updated to use webcam

- Parametrize requested USB resolution (default fixed at 1280 x 720)

- Add support for GigE Vision cameras using the Aravis libraries.

- GigEVision RGB8 colorspace support

- GigEVision YUV422 colorspace support

- GigEVision Bayer8 colorspace support.

- update training data (military images)

- update GUI to run in window and toggle to fullscreen (fill screen)

- update GUI to use SDL2

- update GUI fonts to SDL2_ttf

- Add an RTP video streaming source for YUV-encoded video streams, to ingest progressive GVA (Generic Vehicle Architecture) compliant video sources.

- Code tidy-up; remove redundant dependencies.

Provided along with this repo are TensorRT-enabled examples of running Googlenet/Alexnet on live camera feed for image recognition, and pedestrian detection networks with localization capabilities (i.e. that provide bounding boxes).

The latest source can be obtained from GitHub and compiled onboard the Jetson TX2.

Note: this branch has been verified against:

- TX1 JetPack 2.3 / L4T R24.2 aarch64 (Ubuntu 16.04)

- TX1 JetPack 2.3.1 / L4T R24.2.1 aarch64 (Ubuntu 16.04)

- TX2 JetPack 3.0 / L4T R27.1 aarch64 (Ubuntu 16.04 kernel 4.4)

To obtain the repository, navigate to a folder of your choosing on the Jetson. First, make sure git and cmake are installed locally:

sudo apt-get install git cmake

Then clone the jetson-inference repo:

git clone http://github.com/Abaco-Systems/jetson-inference-gv

When cmake is run, a pre-installation script (CMakePreBuild.sh) runs and automatically installs any dependencies.

cd jetson-inference-gv

mkdir build

cd build

cmake ../

Make sure you are still in the jetson-inference-gv/build directory created above.

cd jetson-inference-gv/build # omit if pwd is already /build from above

make

For 64-bit Jetson builds the architecture will be aarch64, with the following directory structure:

|-build

\aarch64 (64-bit)

\bin where the sample binaries are built to

\include where the headers reside

\lib where the libraries are built to

For GigEVision camera support, download and compile the Aravis 0.6 release:

git clone https://github.com/AravisProject/aravis

cd aravis

sudo apt-get install intltool gtk-doc-tools

./autogen.sh --enable-viewer

./configure

make

Once built, install it:

sudo make install

You will also need the GStreamer Bayer plugin (part of gst-plugins-bad) to enable display:

sudo apt-get install gstreamer1.0-plugins-bad

The header files and library objects should now allow you to compile the code with GigEVision support. Configure the camera in config.h.

The font file and binary images used can be found in /data and should be copied into the working directory.

- q = Quit application

- f = Toggle fullscreen

- SPACE = Toggle overlay (imagenet-camera only)
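The key bindings above amount to a small dispatch on the pressed key. The sketch below is hypothetical and independent of SDL: ViewState and handleKey are illustrative names, plain characters stand in for SDL key codes, and the overlay is assumed to start enabled.

```cpp
// Hypothetical view state toggled by the key bindings listed above.
struct ViewState {
    bool running    = true;   // cleared by 'q'
    bool fullscreen = false;  // toggled by 'f'
    bool overlay    = true;   // toggled by SPACE (assumed on at start)
};

// Applies one key press to the view state; unknown keys are ignored.
void handleKey(char key, ViewState& state)
{
    switch (key) {
    case 'q': state.running = false;                break; // quit
    case 'f': state.fullscreen = !state.fullscreen; break; // toggle fullscreen
    case ' ': state.overlay = !state.overlay;       break; // toggle overlay
    default:                                        break; // ignore other keys
    }
}
```

With SDL2, handleKey would typically be called from an SDL_PollEvent loop on SDL_KEYDOWN events (reading event.key.keysym.sym). The fullscreen flag could be applied with SDL_SetWindowFullscreen and SDL_WINDOW_FULLSCREEN_DESKTOP to fill the screen. How the repository actually wires this up may differ.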

For testing on the Jetson TX2, I selected an Intel PCIe Ethernet NIC that has deeper buffers and allows pause frames to be disabled. To optimise your network interface, run the jetson-ethernet script found in the jetson-scripts project under Abaco Systems. Example streams using the external NIC:

- RGB8 encoded video streams at 1280x720@30 Hz: total bandwidth consumed is approximately 82.2 MB/s (see SampleOutput-RGB8.png). Best for quality.

- YUV422 encoded video streams at 1280x720@30 Hz: total bandwidth consumed is approximately 52.4 MB/s (see SampleOutput-YUV422.png).

- Bayer GR8 encoded video streams at 1280x720@30 Hz: total bandwidth consumed is approximately 27.4 MB/s (see SampleOutput-Bayer_GR8.png). Lowest bandwidth; can also achieve 1920x1080@30 Hz at 61.0 MB/s.
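As a sanity check on these figures, the raw payload rate of an uncompressed stream is width × height × bytes per pixel × frame rate. That is 3 bytes per pixel for RGB8, 2 for YUV422 (4 bytes per pair of pixels) and 1 for Bayer GR8. A minimal sketch of that arithmetic, ignoring GVSP/UDP/IP/Ethernet overhead:

```cpp
#include <cstdint>

// Raw (uncompressed) payload rate of a video stream in bytes per second.
// Protocol overhead (GVSP/UDP/IP/Ethernet headers) is not included.
std::uint64_t rawBytesPerSecond(std::uint64_t width, std::uint64_t height,
                                std::uint64_t bytesPerPixel, std::uint64_t fps)
{
    return width * height * bytesPerPixel * fps;
}
```

At 1280x720@30 Hz this gives 82,944,000, 55,296,000 and 27,648,000 bytes/s (about 82.9, 55.3 and 27.6 MB/s). Bayer at 1920x1080@30 Hz gives 62,208,000 bytes/s. These values are close to the quoted figures, which indicates the figures are megabytes rather than megabits per second. It also shows why RGB8 uses most of a Gigabit Ethernet link, which carries at most 125 MB/s.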

The sample output supplied is a test card encoded using each of the three color spaces. Bayer has several formats (pixel orders); we are only testing GR8, as each requires its own CUDA kernel to convert the video.
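For reference, the simplest possible GR8 conversion takes only a few lines on the CPU. This is a sketch, not the repository's CUDA kernel. It assumes the GR8 tile is G R on even rows and B G on odd rows, and it uses 2x2 "superpixel" averaging rather than proper interpolation. bayerGR8ToRGB8 is a hypothetical name.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// CPU reference sketch of a very simple Bayer GR8 -> RGB8 conversion.
// Assumed GR8 layout (2x2 tile, repeating):
//     row 0:  G R
//     row 1:  B G
// Each tile becomes one colour (R and B taken directly, G as the rounded mean
// of the two green samples) written to all four output pixels.
// Width and height are expected to be even; a trailing odd row/column is left black.
std::vector<std::uint8_t> bayerGR8ToRGB8(const std::vector<std::uint8_t>& bayer,
                                         std::size_t width, std::size_t height)
{
    std::vector<std::uint8_t> rgb(width * height * 3);
    for (std::size_t y = 0; y + 1 < height; y += 2) {
        for (std::size_t x = 0; x + 1 < width; x += 2) {
            const std::uint8_t g0 = bayer[y * width + x];
            const std::uint8_t r  = bayer[y * width + x + 1];
            const std::uint8_t b  = bayer[(y + 1) * width + x];
            const std::uint8_t g1 = bayer[(y + 1) * width + x + 1];
            const std::uint8_t g  = static_cast<std::uint8_t>((g0 + g1 + 1) / 2);
            // Replicate the tile colour into the four pixels it covers.
            for (std::size_t dy = 0; dy < 2; ++dy) {
                for (std::size_t dx = 0; dx < 2; ++dx) {
                    std::uint8_t* px = &rgb[((y + dy) * width + (x + dx)) * 3];
                    px[0] = r;
                    px[1] = g;
                    px[2] = b;
                }
            }
        }
    }
    return rgb;
}
```

Each 2x2 tile is independent, so a CUDA port maps naturally to one thread per tile. Better quality needs bilinear or edge-aware interpolation, at extra cost. The other Bayer orders (RG, GB, BG) differ only in which of the four positions hold R, G and B.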

Note: the YUV422 and Bayer GR8 CUDA conversion functions are not optimal and could be improved.
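A CPU reference for the YUV422 path is similarly short and can be useful for checking a CUDA kernel's output. This sketch assumes UYVY byte order (U0 Y0 V0 Y1 for each pair of pixels) and full-range BT.601 coefficients. Both are assumptions, so check the pixel format your camera actually delivers. uyvyToRGB8 and toByte are hypothetical names.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp to 0..255 and round to the nearest integer.
static std::uint8_t toByte(float v)
{
    return static_cast<std::uint8_t>(std::min(std::max(v, 0.0f), 255.0f) + 0.5f);
}

// CPU reference sketch: packed YUV 4:2:2 (assumed UYVY order) -> RGB8,
// using full-range BT.601 coefficients (assumption). Width must be even so
// that pixel pairs never straddle two rows.
std::vector<std::uint8_t> uyvyToRGB8(const std::vector<std::uint8_t>& uyvy,
                                     std::size_t width, std::size_t height)
{
    std::vector<std::uint8_t> rgb(width * height * 3);
    for (std::size_t i = 0; i + 1 < width * height; i += 2) {
        const std::uint8_t* src = &uyvy[i * 2];   // 4 bytes per 2 pixels
        const float u  = src[0] - 128.0f;
        const float y0 = src[1];
        const float v  = src[2] - 128.0f;
        const float y1 = src[3];
        // Both pixels of the pair share the same chroma (U, V).
        const float ys[2] = {y0, y1};
        for (int k = 0; k < 2; ++k) {
            std::uint8_t* dst = &rgb[(i + k) * 3];
            dst[0] = toByte(ys[k] + 1.402f * v);
            dst[1] = toByte(ys[k] - 0.344136f * u - 0.714136f * v);
            dst[2] = toByte(ys[k] + 1.772f * u);
        }
    }
    return rgb;
}
```

A YUYV stream differs only in byte order (Y0 U0 Y1 V0), so the four loads at the top of the loop are the only change needed.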