Amazon ECS typically measures service utilization based on average CPU and memory utilization and publishes them as predefined CloudWatch metrics, namely ECSServiceAverageCPUUtilization and ECSServiceAverageMemoryUtilization. Application Auto Scaling uses these predefined metrics in conjunction with scaling policies to proportionally scale the number of tasks in a service.

However, there are several use cases where customers want to scale the number of tasks based on custom metrics that determine when, and to what degree, a scaling action should be executed.

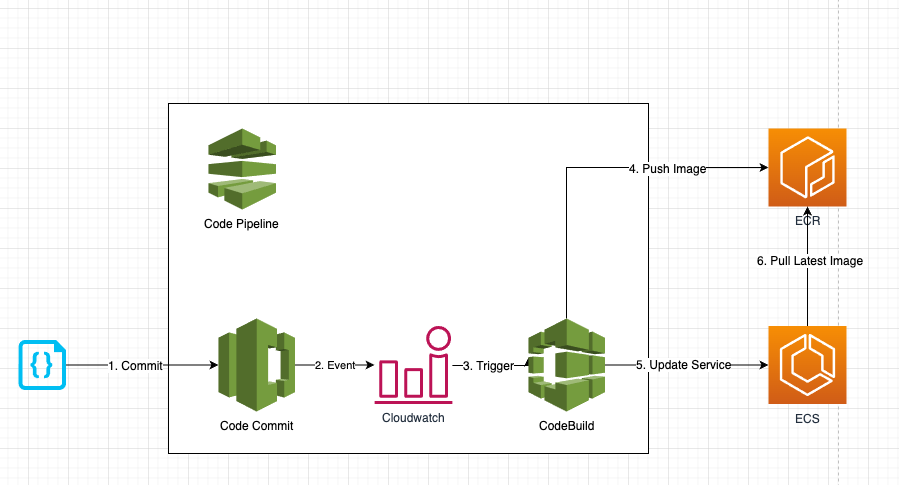

- Build a CI/CD pipeline that builds the latest image and triggers a deployment to the ECS service

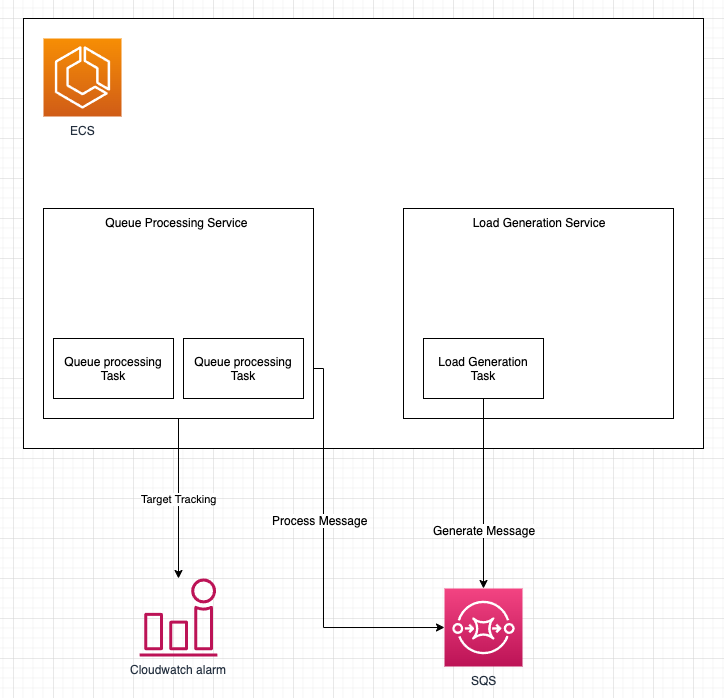

- Auto scale the ECS queue processing service using target tracking scaling with a custom metric, backlogPerTask

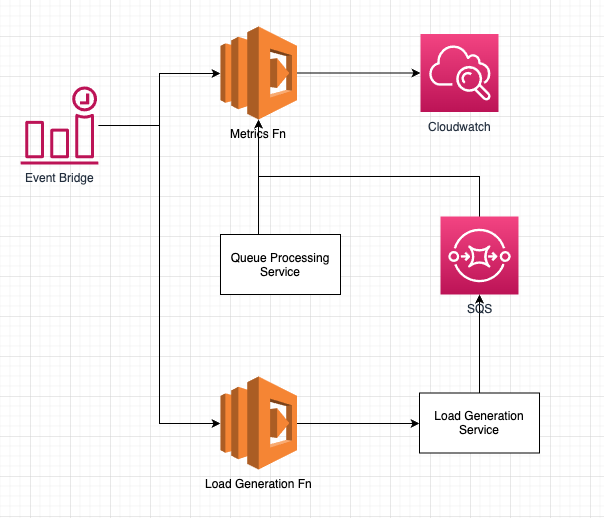

- Set up a scheduled cron job to trigger a Lambda function that publishes the backlogPerTask metric

- Set up a Load Generation service to report on SQS attributes

- Set up a scheduled cron job to generate random loads to an SQS queue

- This is a reference architecture and may not be suitable for production use.

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.

npm install -g cdk

npm install -g node-jq

> git clone https://github.com/aws-samples/aws-ecs-auto-scaling-with-custom-metrics

> cd ecs-autoscaling

> git submodule init

> git submodule update

> cd ecs-autoscaling/cdk-resource

> npm install

> npm run cdk:deploy:CodePipelineResourceStack

Outputs:

CodePipelineResourceStack.codeCommitRepositoryUrlGrc = codecommit::<region>://queue-processing-codecommit-repo

CodePipelineResourceStack.ecrRepositoryName = queue-processing-ecr-repo

Stack ARN:

arn:aws:cloudformation:ap-southeast-1:<account id>:stack/CodePipelineResourceStack/<stack id>

> cd ecs-autoscaling/queue-processing-node-app

> git remote add codecommit $(cat ../aws-outputs.json | jq ".CodePipelineResourceStack.codeCommitRepositoryUrlGrc" -r)

> git push codecommit main

> cd ecs-autoscaling/cdk-resource

> npm run cdk:deploy

...

Do you wish to deploy these changes (y/n)? y

// To see the status of your SQS attributes

> echo $(cat ../aws-outputs.json | jq ".ECSResourceStack.LoadBalancedFargateServiceServiceURLA3D7F0DD" -r)

- A change or commit to the code in the CodeCommit application repository triggers CodePipeline with the help of a CloudWatch event.

- The pipeline downloads the code from the CodeCommit repository, initiates the Build and Test action using CodeBuild, and pushes the image to an ECR repository.

- If the preceding step is successful, a post-build step triggers a deployment that updates the ECS service to the latest container image.

- The load generation service artificially generates random load on an SQS queue.

- The queue processing service will process the messages from the SQS queue

- The queue processing service uses a target tracking scaling policy with a custom metric, backlogPerTask, to calculate the desired number of running tasks.

- The cron job triggers the metrics function to publish the custom metric, backlogPerTask, every 5 minutes.

- The cron job triggers the load generation function to generate load on an SQS queue every 5 minutes.
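The metrics function described above can be sketched roughly as follows. This is a minimal illustration, not the code shipped in this repository: the function name `publish_backlog_per_task`, its parameters, and the choice to treat zero running tasks as one task are assumptions made for the example. The clients are passed in so the logic can be exercised without AWS access; in a Lambda function they would typically be created with `boto3.client("sqs")`, `boto3.client("ecs")`, and `boto3.client("cloudwatch")`.

```python
def publish_backlog_per_task(
    sqs,
    ecs,
    cloudwatch,
    *,
    queue_url,
    cluster,
    service,
    namespace="queue-processing-lambda-metrics",
    metric_name="backlogPerTask",
):
    """Compute backlog per task and publish it as a custom CloudWatch metric.

    Hypothetical helper: sqs, ecs and cloudwatch are boto3-style clients.
    """
    # Queue length: number of messages available for retrieval.
    attributes = sqs.get_queue_attributes(
        QueueUrl=queue_url,
        AttributeNames=["ApproximateNumberOfMessages"],
    )
    queue_length = int(attributes["Attributes"]["ApproximateNumberOfMessages"])

    # Running capacity: number of tasks in the Running state for the service.
    described = ecs.describe_services(cluster=cluster, services=[service])
    running_tasks = described["services"][0]["runningCount"]

    # Assumption: treat zero running tasks as one to avoid dividing by zero.
    backlog = queue_length / max(running_tasks, 1)

    cloudwatch.put_metric_data(
        Namespace=namespace,
        MetricData=[
            {"MetricName": metric_name, "Value": backlog, "Unit": "Count"},
        ],
    )
    return backlog
```

The default namespace and metric name mirror the `metricNamespace` and `metricName` values in `global.json`; any other values work as long as the scaling policy references the same metric.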

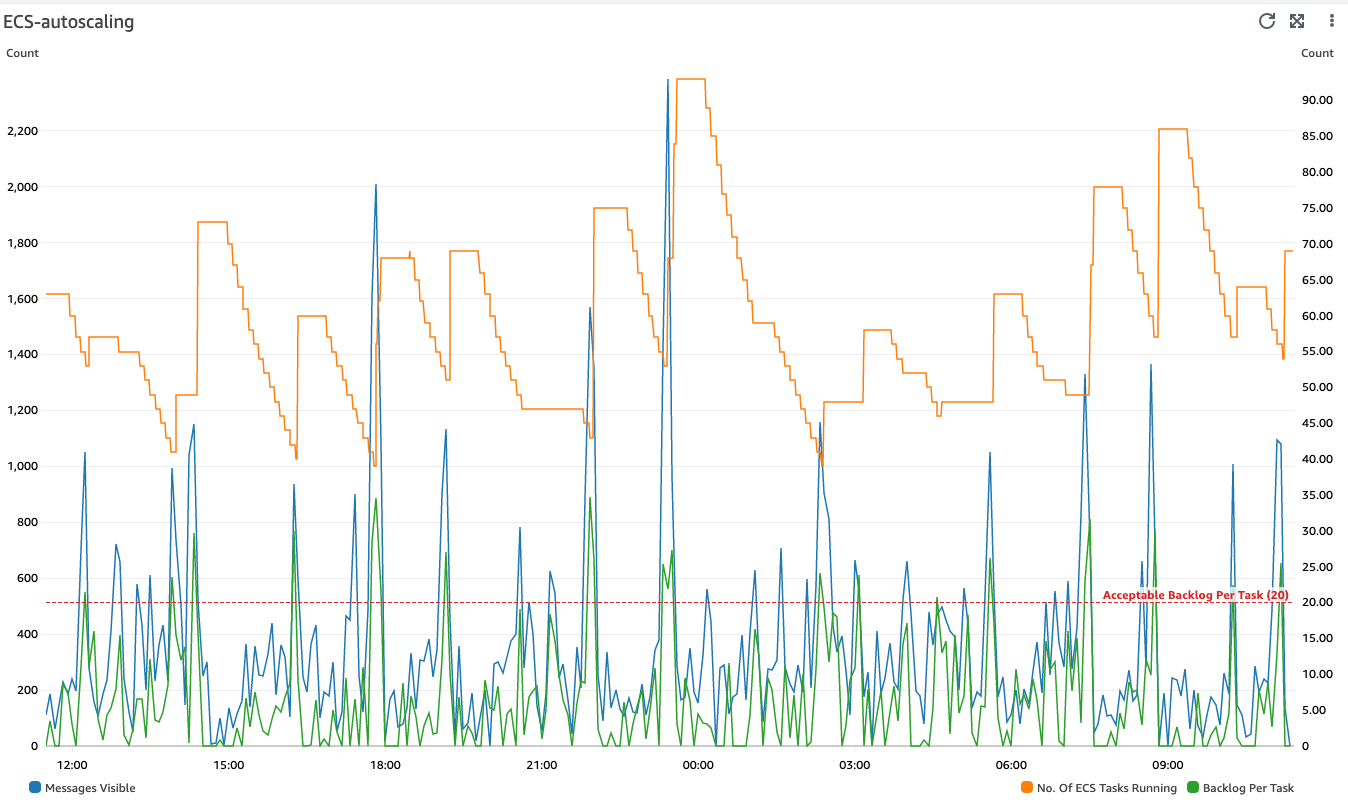

Why do we want to use the custom metric backlogPerTask as a scaling metric instead of the readily available SQS metric ApproximateNumberOfMessagesVisible?

If you use a target tracking scaling policy based on a custom Amazon SQS queue metric, dynamic scaling can adjust to the load change curve of your application more effectively. An Amazon SQS CloudWatch metric such as ApproximateNumberOfMessagesVisible (i.e. queue depth) does not change proportionally to the capacity of the ECS service (i.e. the number of tasks in the Running state) that processes messages from the queue. That's because the number of messages in the SQS queue does not solely define the number of ECS tasks needed.

The number of tasks in the ECS Service can be driven by multiple factors, including how long it takes to process a message and the acceptable amount of latency (i.e. queue delay).

As such, we opted for the metric backlog per task with the target value being the acceptable backlog per task to maintain.

You can calculate these numbers as follows:

- Backlog per task: To calculate your backlog per task, start with the ApproximateNumberOfMessages queue attribute to determine the length of the SQS queue (number of messages available for retrieval from the queue). Divide that number by the fleet's running capacity, which for an ECS Service is the number of tasks in the Running state, to get the backlog per task.

- Acceptable backlog per task: To calculate your target value, first determine what your application can accept in terms of latency. Then, take the acceptable latency value and divide it by the average time that an ECS task takes to process a message.

To illustrate with an example, let's say that the current ApproximateNumberOfMessages is 1500 and the fleet's running capacity is 10 ECS tasks. If the average processing time is 0.1 seconds for each message and the longest acceptable latency is 10 seconds, then the acceptable backlog per task is 10 / 0.1, which equals 100 (messages/task). This means that 100 is the target value for your target tracking policy. If the backlog per task is currently at 150 (1500 / 10), your fleet scales out, and it scales out by five tasks to maintain proportion to the target value.
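The arithmetic in this example can be reproduced with a few lines of Python. This is a sketch for checking the numbers only: the function names are made up for illustration, and the proportional task count is a simplification of what target tracking actually does (the real policy also depends on alarms, cooldowns, and the configured minimum and maximum capacity).

```python
import math


def acceptable_backlog_per_task(acceptable_latency_s, avg_processing_time_s):
    """Target value: how many queued messages one task may hold."""
    return acceptable_latency_s / avg_processing_time_s


def backlog_per_task(queue_length, running_tasks):
    """Current metric value: queued messages divided by running tasks."""
    if running_tasks <= 0:
        raise ValueError("running_tasks must be positive")
    return queue_length / running_tasks


def proportional_task_count(queue_length, target_backlog):
    """Tasks needed so that backlog per task does not exceed the target."""
    return math.ceil(queue_length / target_backlog)


queue_length = 1500
running_tasks = 10

target = acceptable_backlog_per_task(10, 0.1)            # 100 messages/task
current = backlog_per_task(queue_length, running_tasks)  # 150 messages/task
desired = proportional_task_count(queue_length, target)  # 15 tasks

print(f"target={target:.0f} current={current:.0f} "
      f"scale out by {desired - running_tasks} tasks")
```

With the numbers from the example, the current backlog per task (150) exceeds the target (100), and 15 tasks are needed to bring it back to the target, which is five more than the 10 currently running.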

> vim ecs-autoscaling/cdk-resource/lib/global.json

// Edit the keys: acceptableLatencyInSeconds, averageProcessingTimePerJobInSeconds

{

"acceptableLatencyInSeconds": "10",

"averageProcessingTimePerJobInSeconds": "0.5",

"codebuildName": "queue-processing-codebuild-project",

"codeCommitRepositoryName": "queue-processing-codecommit-repo",

"codepipelineName": "queue-processing-codepipeline",

"ecrRepositoryName": "queue-processing-ecr-repo",

"ecsClusterName": "ecs-autoscaling-cluster",

"ecsFargateLoadGenerationServiceName": "load-generation-ecs-service",

"ecsFargateQueueProcessingServiceName": "queue-processing-ecs-service",

"imageTagVersion": "latest",

"queueName": "queue-processing-queue",

"metricName": "backlogPerTask",

"metricNamespace": "queue-processing-lambda-metrics",

"metricUnit": "Count",

"ecsTargetTrackingPolicyName": "auto-scaling-using-avg-backlog-per-task"

}
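To see which target value the two editable keys imply, the sketch below applies the "acceptable backlog per task" formula from the previous section to the values shown above. It assumes the target is derived as acceptable latency divided by average processing time, as described earlier; how the CDK stack actually consumes these keys is not shown here. Note that both values are stored as strings in the file, so they need converting before the division. The helper name `target_from_config` is hypothetical.

```python
import json

# Only the two keys relevant to the target value, copied from the file above.
config_text = """
{
  "acceptableLatencyInSeconds": "10",
  "averageProcessingTimePerJobInSeconds": "0.5"
}
"""


def target_from_config(config):
    """Acceptable backlog per task implied by the two latency settings."""
    latency = float(config["acceptableLatencyInSeconds"])
    processing_time = float(config["averageProcessingTimePerJobInSeconds"])
    if processing_time <= 0:
        raise ValueError("averageProcessingTimePerJobInSeconds must be positive")
    return latency / processing_time


config = json.loads(config_text)
target_value = target_from_config(config)
print(target_value)  # 20.0 messages per task for the values above
```

Lowering `acceptableLatencyInSeconds` or raising `averageProcessingTimePerJobInSeconds` reduces the target, so the service scales out at a smaller backlog.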