InstaNAS is an instance-aware neural architecture search framework that employs a controller trained to search for a distribution of architectures instead of a single architecture.

InstaNAS: Instance-aware Neural Architecture Search

An-Chieh Cheng*, Chieh Hubert Lin*, Da-Cheng Juan, Wei Wei, Min Sun

National Tsing Hua University, Google AI

In ICML'19 AutoML Workshop. (* equal contributions)

- May-2019: Project Page with built-in ImageNet controller released.

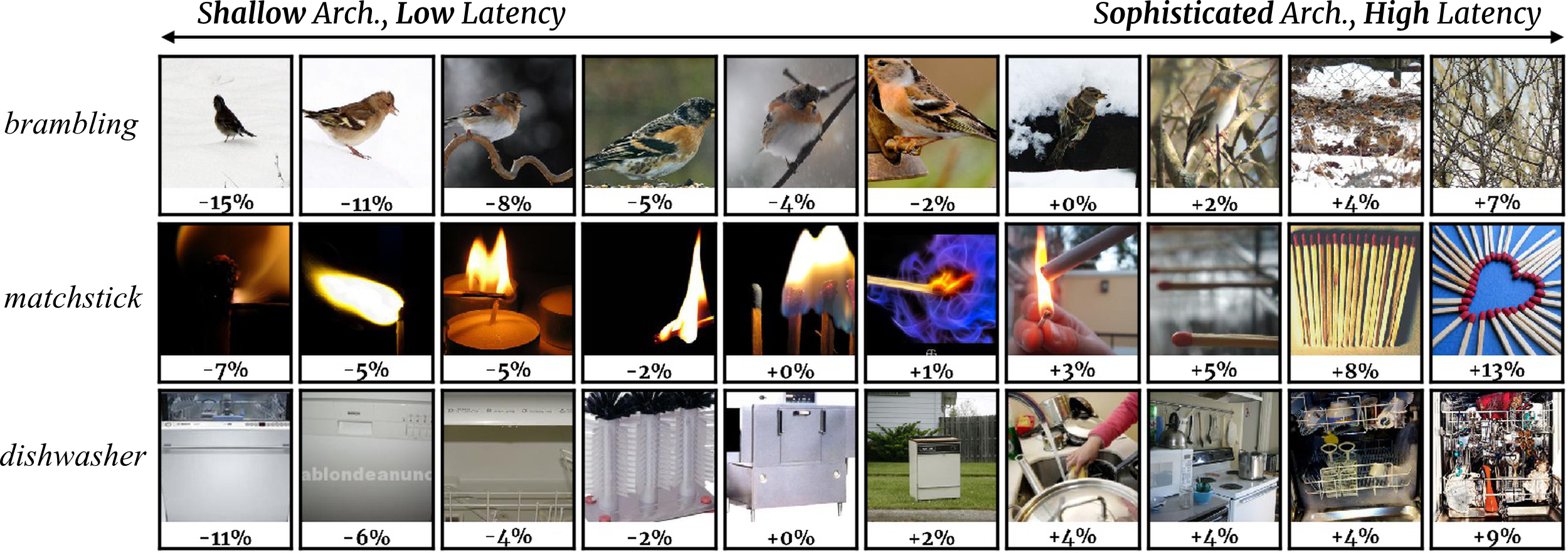

The controller selects architectures according to the difficulty of samples. The estimated difficulty matches human perception (e.g., cluttered background, high intra-class variation, illumination conditions).
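As a rough illustration of per-sample architecture selection, the sketch below samples a binary block mask from per-block keep probabilities. It is a toy under stated assumptions, not the released controller: `toy_controller`, `sample_architecture`, the logistic scoring, and all weights and feature values are hypothetical and chosen only to show the idea.

```python
import math
import random


def toy_controller(features, weights, biases):
    """Map one sample's feature vector to a keep-probability per candidate block.

    Hypothetical stand-in for a learned controller: one logistic unit per block.
    """
    probs = []
    for w_row, b in zip(weights, biases):
        logit = sum(w * x for w, x in zip(w_row, features)) + b
        probs.append(1.0 / (1.0 + math.exp(-logit)))
    return probs


def sample_architecture(probs, rng):
    """Draw a binary mask: 1 keeps a block, 0 skips it for this sample."""
    return [1 if rng.random() < p else 0 for p in probs]


rng = random.Random(0)

# Hypothetical parameters for three candidate blocks and 2-D sample features.
weights = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
biases = [0.0, -1.0, -2.0]

easy_sample = [0.1, 0.1]  # assumed to stand for a "simple" image
hard_sample = [2.0, 2.0]  # assumed to stand for a "difficult" image

easy_probs = toy_controller(easy_sample, weights, biases)
hard_probs = toy_controller(hard_sample, weights, biases)

easy_mask = sample_architecture(easy_probs, rng)
hard_mask = sample_architecture(hard_probs, rng)
```

With these made-up weights, the harder sample gets higher keep probabilities, so it tends to activate more blocks and therefore a more expensive architecture.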

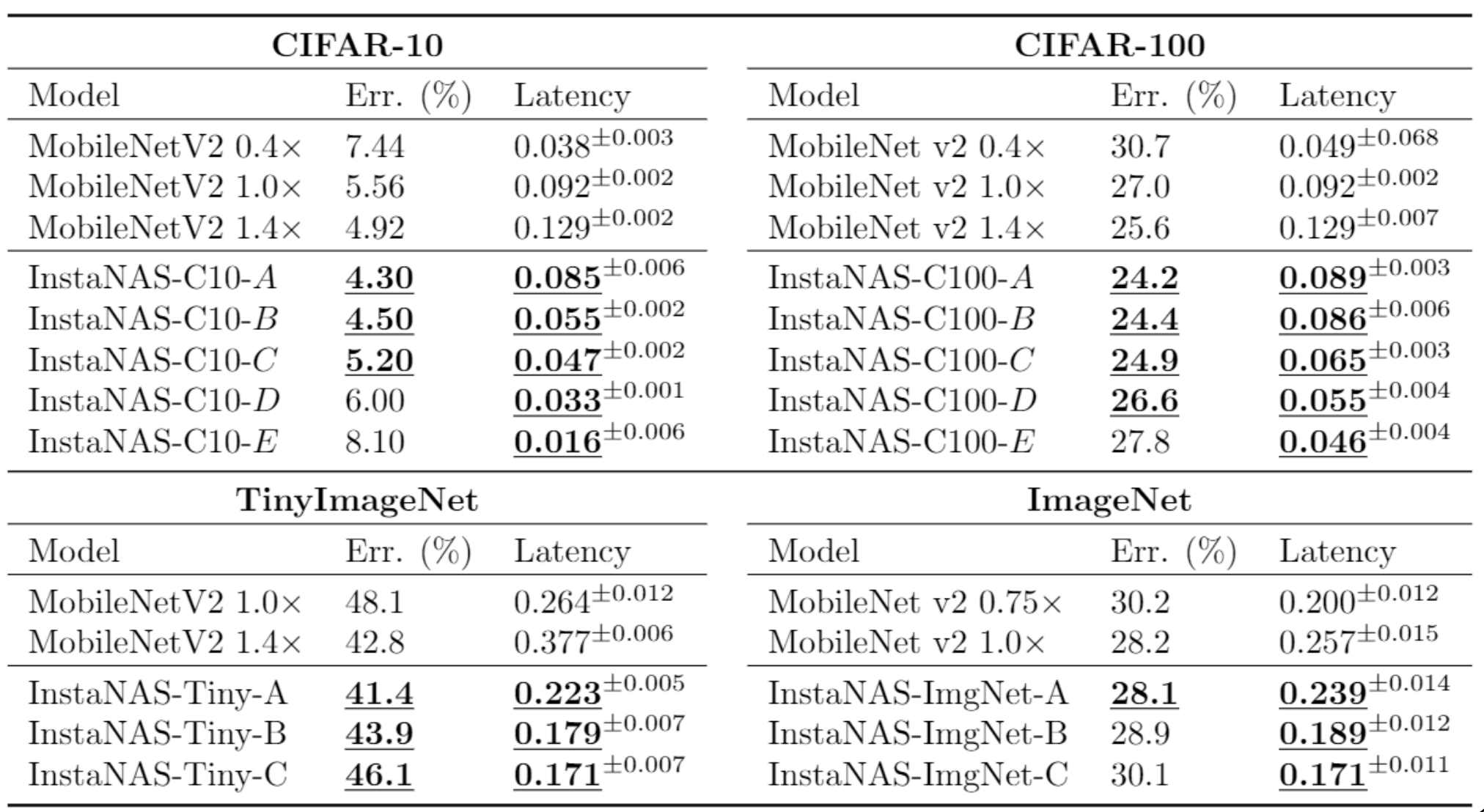

InstaNAS consistently improves the MobileNetV2 accuracy-latency tradeoff on four datasets. We highlight the values that dominate MobileNetV2 1.0. All InstaNAS variants (i.e., A-E or A-C) are obtained in a single search.
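The term "dominate" is not defined here. Assuming the usual Pareto sense (no worse in both accuracy and latency, strictly better in at least one), the check can be sketched as follows. The function name and all numbers are hypothetical placeholders, not results from the table.

```python
def dominates(a, b):
    """Return True if point a Pareto-dominates point b.

    Each point is an (accuracy, latency) pair; higher accuracy and
    lower latency are better.
    """
    acc_a, lat_a = a
    acc_b, lat_b = b
    no_worse = acc_a >= acc_b and lat_a <= lat_b
    strictly_better = acc_a > acc_b or lat_a < lat_b
    return no_worse and strictly_better


# Hypothetical (accuracy, relative latency) values, for illustration only.
baseline = (0.90, 1.00)
variants = {
    "A": (0.91, 0.80),  # more accurate and faster
    "B": (0.90, 0.60),  # equally accurate, faster
    "C": (0.88, 0.40),  # faster but less accurate
}

dominating = [name for name, point in variants.items() if dominates(point, baseline)]
```

Under this definition, a variant that trades accuracy for speed (such as the made-up "C") is on the tradeoff curve but does not dominate the baseline.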

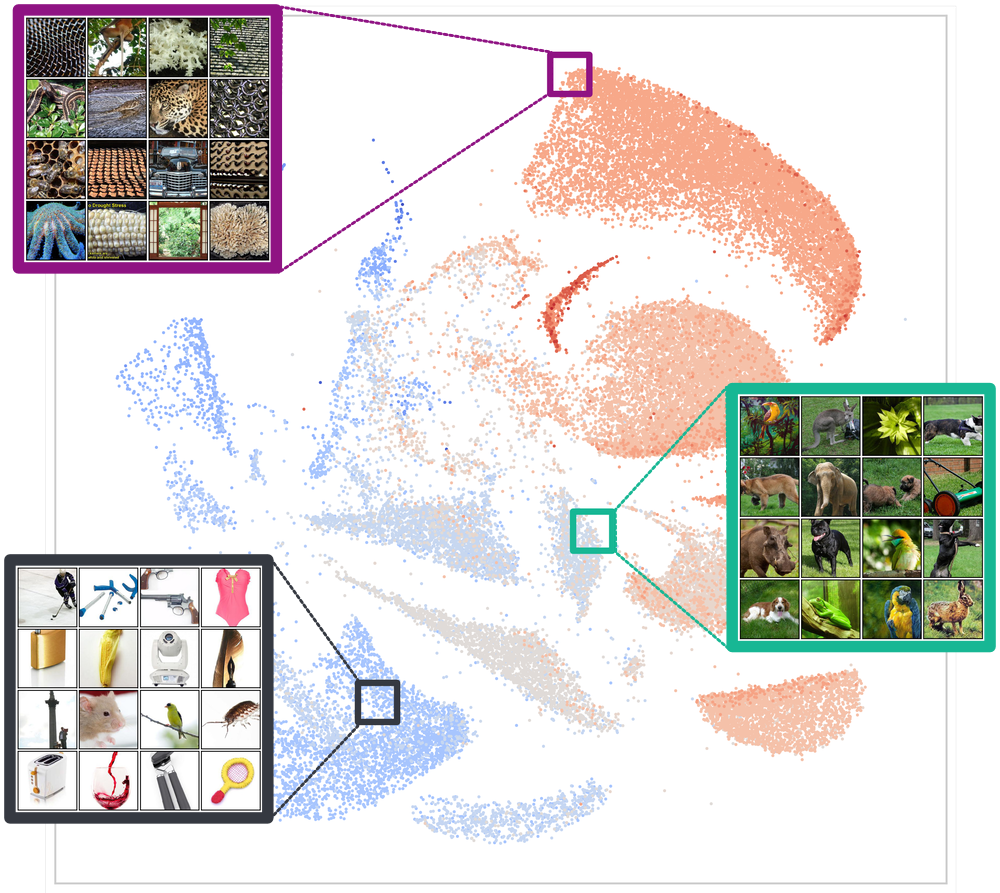

We project the resulting architecture distribution into 2D space with UMAP. The projection forms multiple clusters and clearly separates high-latency architectures from low-latency ones (red for high latency, blue for low latency).

Please cite our paper (link) in your publications if this repo helps your research:

@article{cheng2018instanas,
  title={InstaNAS: Instance-aware Neural Architecture Search},
  author={Cheng, An-Chieh and Lin, Chieh Hubert and Juan, Da-Cheng and Wei, Wei and Sun, Min},
  journal={arXiv preprint arXiv:1811.10201},
  year={2018}
}

This is not an official Google product.