Straightforward implementations of interpretable ML models + demos of how to use various interpretability techniques. Code is optimized for readability. Pull requests welcome!

Implementations of imodels • Demo notebooks • Accompanying slides

Scikit-learn style wrappers/implementations of different interpretable models. Docs are available here. The interpretable models within the imodels folder can be easily installed and used:

```shell
pip install git+https://github.com/csinva/interpretability-implementations-demos
```

```python
from imodels import RuleListClassifier, RuleFit, GreedyRuleList, SkopeRules, SLIM, IRFClassifier

model = RuleListClassifier()  # initialize Bayesian Rule List
model.fit(X_train, y_train)  # fit model
preds = model.predict(X_test)  # discrete predictions: shape is (n_test, 1)
preds_proba = model.predict_proba(X_test)  # predicted probabilities: shape is (n_test, n_classes)
```

- bayesian rule list (based on this implementation) - learn a compact rule list

- rulefit (based on this implementation) - find rules from a decision tree and build a linear model with them

- sparse integer linear model (simple implementation with cvxpy)

- greedy rule list (based on this implementation) - uses CART to learn a list (only a single path), rather than a decision tree

- skope-rules (based on this implementation)

- (in progress) optimal classification tree (based on this implementation) - learns succinct trees using global optimization rather than greedy heuristics

- iterative random forest (based on this implementation)

- see readmes in individual folders within imodels for details
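
To make the greedy rule list idea above more concrete, here is a minimal pure-Python sketch of learning a single-path list with CART-style splits on binary labels. It is an illustration only, not the code in the imodels folder: the function names (`gini`, `best_split`, `fit_rule_list`, `predict_proba`), the choice to close the purer side of each split as a rule, and the toy data are all assumptions made for this example.

```python
def gini(labels):
    """Gini impurity of a list of binary (0/1) labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(X, y):
    """Return the (feature, threshold) that most reduces weighted Gini, or None."""
    best, best_score = None, gini(y)
    n = len(y)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [label for row, label in zip(X, y) if row[j] <= t]
            right = [label for row, label in zip(X, y) if row[j] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score - 1e-12:  # require a strict improvement
                best, best_score = (j, t), score
    return best


def fit_rule_list(X, y, max_depth=3):
    """Greedily learn rules (feature, op, threshold, P(y=1)) plus a default P(y=1)."""
    rules = []
    for _ in range(max_depth):
        split = best_split(X, y) if X else None
        if split is None:
            break
        j, t = split
        left = [(row, label) for row, label in zip(X, y) if row[j] <= t]
        right = [(row, label) for row, label in zip(X, y) if row[j] > t]
        # Assumption for this sketch: the purer side becomes a finished rule,
        # and only the other side is split further (a single path, not a tree).
        if gini([l for _, l in left]) <= gini([l for _, l in right]):
            leaf, rest, op = left, right, "<="
        else:
            leaf, rest, op = right, left, ">"
        prob = sum(l for _, l in leaf) / len(leaf)
        rules.append((j, op, t, prob))
        X = [row for row, _ in rest]
        y = [label for _, label in rest]
    default = sum(y) / len(y) if y else 0.5
    return rules, default


def predict_proba(rules, default, row):
    """Return P(y=1) from the first rule that matches, else the default."""
    for j, op, t, prob in rules:
        matched = row[j] <= t if op == "<=" else row[j] > t
        if matched:
            return prob
    return default


# Toy data (hypothetical): one feature, positives only in the middle range.
X_demo = [[1], [2], [3], [4], [5], [6]]
y_demo = [0, 0, 1, 1, 0, 0]
rules, default = fit_rule_list(X_demo, y_demo)
for j, op, t, prob in rules:
    print(f"if x[{j}] {op} {t}: P(y=1) = {prob}")
print(f"else: P(y=1) = {default}")
```

The learned list is read top to bottom, and the first matching rule decides the prediction. That ordering is what distinguishes a rule list from a full decision tree, where both sides of every split can be refined further.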

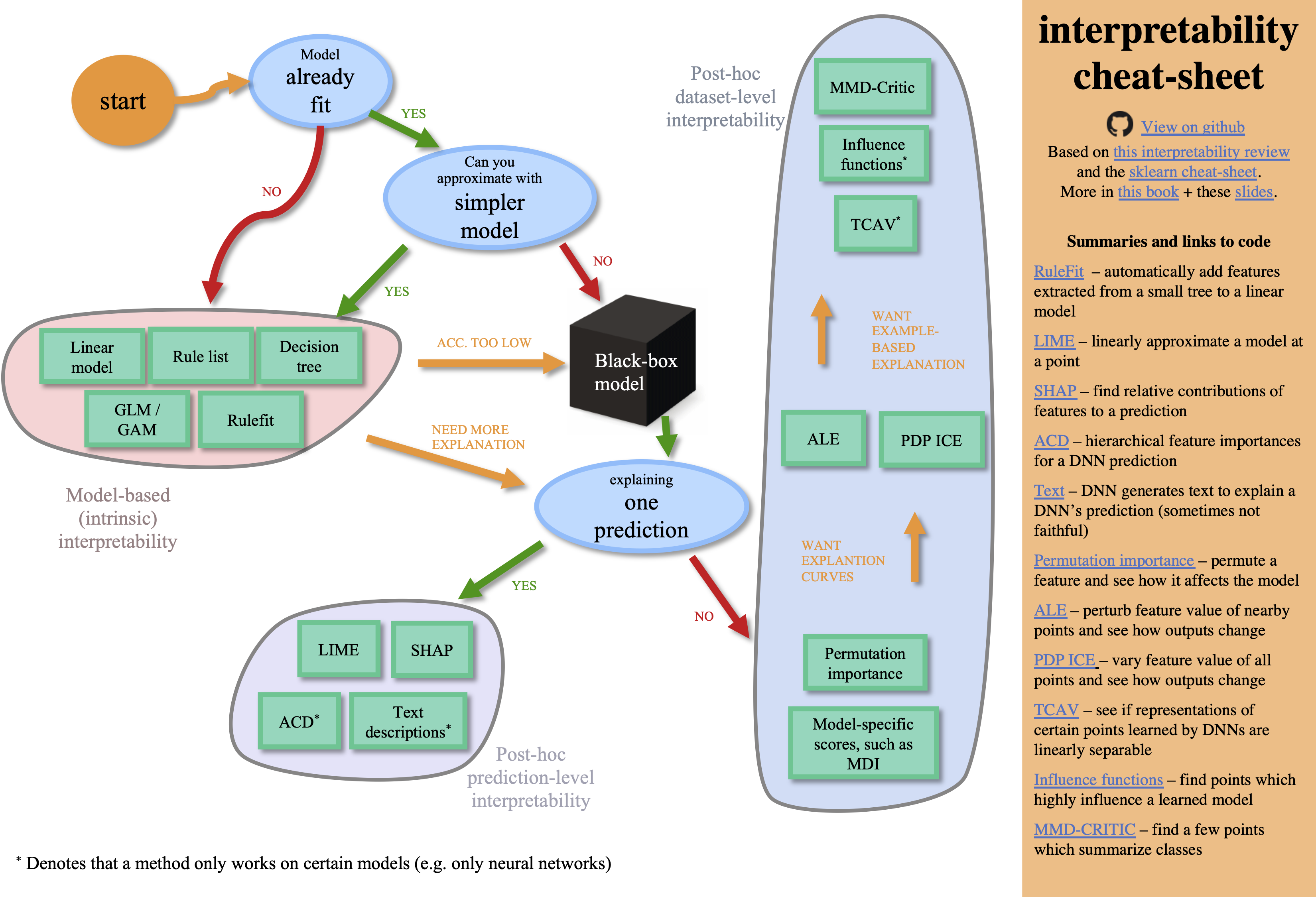

The demos are contained in 3 main notebooks, following this cheat-sheet:

- model_based.ipynb - how to use different interpretable models

- posthoc.ipynb - different simple analyses to interpret a trained model

- uncertainty.ipynb - code to get uncertainty estimates for a model