Facial Expressions Recognition

Table Of Contents

- About the Project

- Built With

- Getting Started

- Usage

- Roadmap

- Contributing

- License

- Authors

- Acknowledgements

About The Project

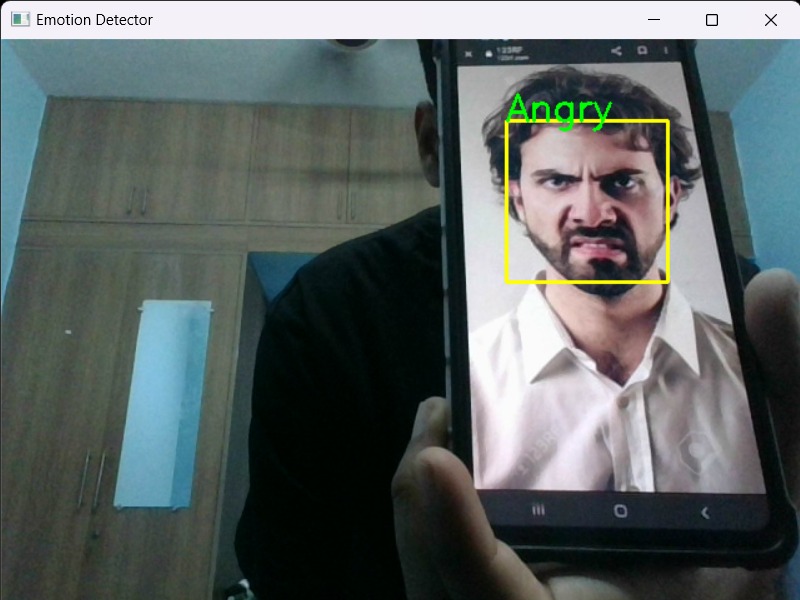

This project focuses on developing an automatic facial emotion recognition system using Convolutional Neural Networks (CNNs). The system aims to extract discriminative features from facial images and classify them into different emotion categories. The report provides an introduction to the significance of automatic facial emotion recognition and outlines the project's objectives. It includes a review of existing research on facial emotion recognition and the role of CNNs in computer vision tasks. The dataset used for training and evaluation is described, along with preprocessing techniques. The methodology section explains the CNN model architecture, training procedure, and evaluation metrics. The results showcase the system's performance using accuracy, precision, recall, and F1-score, supported by visual representations like confusion matrices. The report concludes by summarizing the achievements, discussing limitations, and suggesting future improvements, highlighting the potential impact of automatic facial emotion recognition systems in various domains.
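The metrics named above (accuracy, precision, recall, F1-score, and the confusion matrix) can all be derived from a list of true labels and a list of predicted labels. The following is a minimal sketch in plain Python, not the project's actual evaluation code. The function names, emotion labels, and sample predictions are hypothetical and chosen only for illustration.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true labels, columns are predicted labels."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true, y_pred, labels):
    """Return {label: (precision, recall, f1)}, computed one-vs-rest."""
    matrix = confusion_matrix(y_true, y_pred, labels)
    metrics = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fp = sum(matrix[r][i] for r in range(len(labels))) - tp  # column minus diagonal
        fn = sum(matrix[i]) - tp                                 # row minus diagonal
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[label] = (precision, recall, f1)
    return metrics


# Hypothetical labels and predictions, for illustration only.
LABELS = ["happy", "sad", "angry"]
y_true = ["happy", "happy", "sad", "sad", "angry", "angry"]
y_pred = ["happy", "sad", "sad", "sad", "angry", "happy"]

matrix = confusion_matrix(y_true, y_pred, LABELS)
scores = per_class_metrics(y_true, y_pred, LABELS)
```

Reading the matrix row by row shows which emotions are confused with each other, which a single accuracy figure hides.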

Built With

The Automatic Facial Emotion Recognition system using CNN works by collecting and preprocessing facial images. A CNN architecture is designed to extract features from the images, which are then used to classify emotions. The model is trained using an optimization algorithm and evaluated using metrics like accuracy. Once trained, the system can be deployed for real-time emotion recognition tasks, enabling applications in human-computer interaction, market research, and psychology.
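The pipeline described above (preprocess, extract features with convolution, classify) can be sketched as a single forward pass. This is an illustrative toy in plain Python, not the project's model. The kernel, weights, image, and label names are hypothetical, and a real system would use a deep learning framework with many learned filters rather than the hand-set values here.

```python
import math


def normalize(image):
    """Scale 8-bit grayscale pixel values to the range [0, 1]."""
    return [[px / 255.0 for px in row] for row in image]


def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap):
    """Zero out negative activations."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling; an odd trailing row or column is dropped."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def classify(image, kernel, weights, labels):
    """Conv -> ReLU -> pool -> flatten -> linear layer -> softmax."""
    features = max_pool2x2(relu(conv2d(normalize(image), kernel)))
    flat = [v for row in features for v in row]
    scores = [sum(w * x for w, x in zip(row, flat)) for row in weights]
    probs = softmax(scores)
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], probs


# Hypothetical 6x6 grayscale patch, vertical-edge kernel, and untrained weights.
image = [[0, 0, 0, 255, 255, 255]] * 6
kernel = [[-1, 0, 1]] * 3
weights = [[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, 0, 0]]
label, probs = classify(image, kernel, weights, ["happy", "sad", "neutral"])
```

Training replaces the hand-set `kernel` and `weights` with values found by an optimizer that minimizes the classification loss over the training set.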

Getting Started

This is an example of how you may give instructions on setting up your project locally. To get a local copy up and running follow these simple example steps.

Prerequisites

This is an example of how to list things you need to use the software and how to install them.

- npm

npm install npm@latest -g

Installation

- Get a free API Key at https://example.com

- Clone the repo

git clone https://github.com/your_username_/Project-Name.git

- Install NPM packages

npm install

- Enter your API in config.js

const API_KEY = 'ENTER YOUR API';

Usage

1. Mental Health Support: The system can assist in early detection and monitoring of mental health conditions by analyzing facial expressions associated with different emotions.

2. Autism Spectrum Disorders (ASD): Facial emotion recognition can aid in the diagnosis and intervention of ASD. By accurately identifying and interpreting facial expressions, the system can help professionals and caregivers better understand and support individuals on the autism spectrum, improving their social interactions and emotional well-being.

3. Social Skills Training: The system can be utilized as a tool in social skills training programs. It can provide real-time feedback on individuals' facial expressions during social interactions, helping them develop a better understanding of their own emotional responses and enhancing their ability to interpret and respond to the emotions of others.

4. Human-Robot Interaction: In the context of social robots, an Automatic Facial Emotion Recognition system can enable robots to perceive and respond to human emotions more effectively. This can enhance the quality of human-robot interactions, making them more natural, empathetic, and socially engaging.

5. Workplace Well-being: The system can contribute to creating emotionally intelligent work environments. By analyzing employees' facial expressions, organizations can gain insights into their well-being, stress levels, and job satisfaction. This information can be used to implement interventions, policies, and support systems that promote a positive work culture and enhance employee well-being.

6. Bias and Discrimination Mitigation: Facial emotion recognition can help identify instances of bias and discrimination in various contexts, such as hiring processes or customer service interactions. By detecting subtle emotional cues, the system can provide objective insights into potential biases, supporting efforts to create fairer and more inclusive environments.

Roadmap

See the [open issues](https://github.com//Facial Expressions Recognition /issues) for a list of proposed features (and known issues).

Contributing

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

- If you have suggestions for adding or removing projects, feel free to [open an issue](https://github.com//Facial Expressions Recognition /issues/new) to discuss it, or directly create a pull request after you edit the README.md file with necessary changes.

- Please make sure you check your spelling and grammar.

- Create an individual PR for each suggestion.

- Please also read through the [Code Of Conduct](https://github.com//Facial Expressions Recognition /blob/main/CODE_OF_CONDUCT.md) before posting your first idea as well.

Creating A Pull Request

- Fork the Project

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request

License

Distributed under the MIT License. See [LICENSE](https://github.com//Facial Expressions Recognition /blob/main/LICENSE.md) for more information.

Authors

- Abhi Mukeshkumar Patel - CSE AIML