This repository contains an implementation of the Transformer architecture from scratch, written in Python and PyTorch.

The Transformer is a powerful neural network architecture that has been shown to achieve state-of-the-art performance on a wide range of natural language processing tasks, including language modeling, machine translation, and sentiment analysis.

The goal of this project is to gain a deeper understanding of how the Transformer works and how it can be applied to different natural language processing tasks.

By implementing the Transformer from scratch, we can get a hands-on understanding of the key components of the architecture, including multi-head self-attention, feedforward layers, and layer normalization.

This implementation requires Python 3.6 or later.

You can install the requirements using:

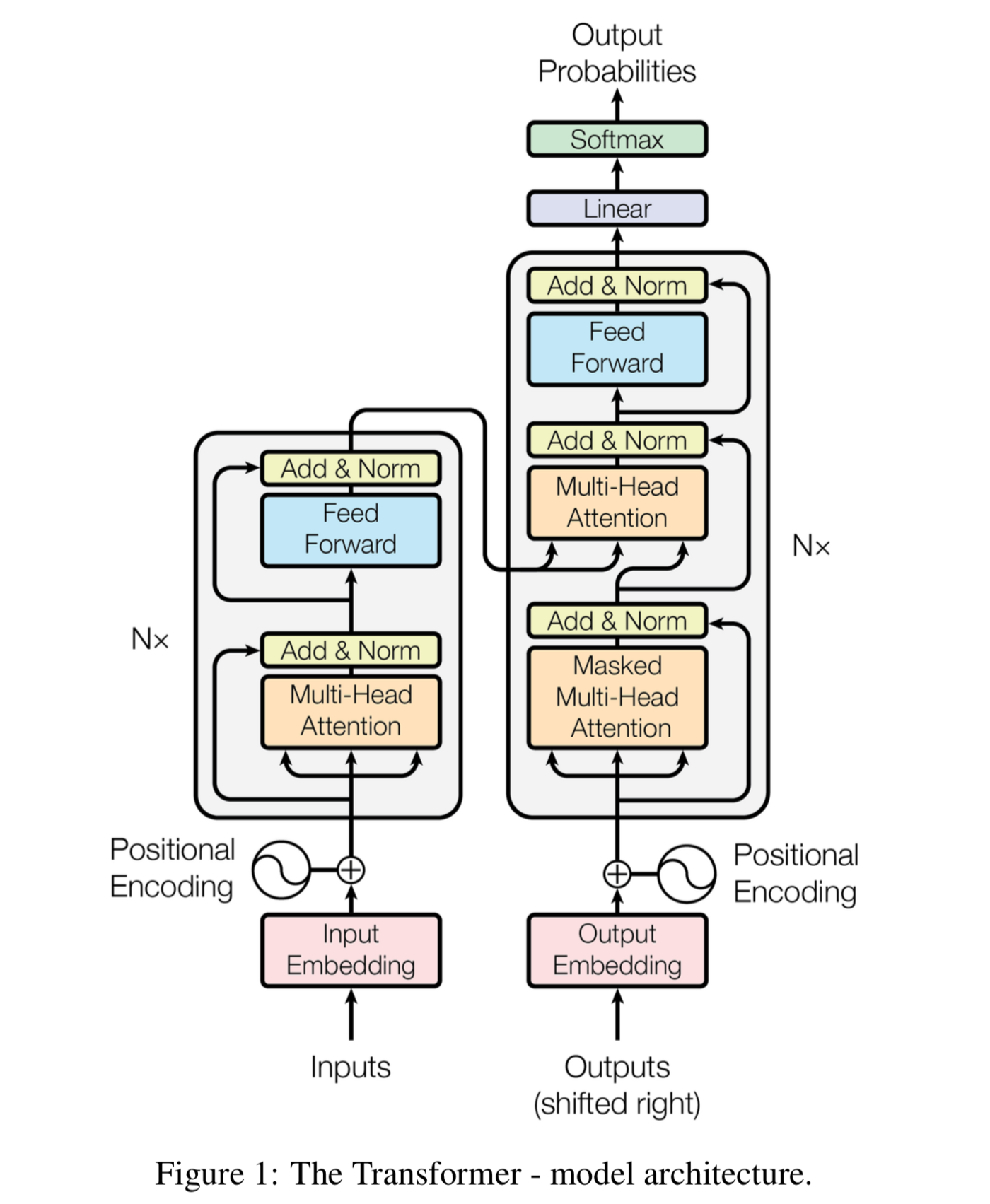

    pip install -r requirements.txt

The Transformer architecture consists of a stack of encoders and a stack of decoders. The original paper used 6 of each.

The encoder processes the input sequence of tokens, while the decoder generates the output sequence.

Here's a brief overview of each component:

The input sequence of tokens is first embedded into a continuous vector space using an embedding layer.

This layer maps each token to a fixed-length vector that captures its semantic meaning. The embedding layer is trained jointly with the rest of the model.
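As a minimal sketch of the embedding step, the snippet below wraps PyTorch's `nn.Embedding`. The class name `TokenEmbedding` and the vocabulary size are hypothetical, chosen only for illustration; `d_model=512` and the scaling by the square root of `d_model` follow the original paper, and this repository's own code may differ.

```python
import math

import torch
import torch.nn as nn


class TokenEmbedding(nn.Module):
    """Maps token ids to d_model-dimensional vectors (illustrative helper)."""

    def __init__(self, vocab_size: int, d_model: int):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, d_model)
        self.d_model = d_model

    def forward(self, token_ids: torch.Tensor) -> torch.Tensor:
        # The paper multiplies the embeddings by sqrt(d_model).
        return self.embed(token_ids) * math.sqrt(self.d_model)


# A batch with one sequence of four token ids (made-up values).
token_ids = torch.tensor([[1, 5, 9, 2]])
embedding = TokenEmbedding(vocab_size=100, d_model=512)
vectors = embedding(token_ids)  # shape: (batch, seq_len, d_model)
```

Because the embedding weights are ordinary parameters, they receive gradients and are updated together with the rest of the model.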

Since the Transformer does not use recurrent layers such as LSTMs, it needs another way to incorporate positional information about the tokens. This is done using a positional encoding layer, which adds sinusoidal (sin and cos) values of different frequencies to the input embeddings. Each position in the input sequence receives a distinct pattern of values, which lets the model tell positions apart.
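The sinusoidal encoding can be sketched as follows. The function name is hypothetical and the code assumes an even `d_model`; the formula (sine on even dimensions, cosine on odd dimensions, with a base of 10000) is the one from the original paper.

```python
import math

import torch


def sinusoidal_positional_encoding(max_len: int, d_model: int) -> torch.Tensor:
    """Returns a (max_len, d_model) table of positional encodings."""
    position = torch.arange(max_len, dtype=torch.float32).unsqueeze(1)  # (max_len, 1)
    # One frequency per pair of dimensions: 1 / 10000^(2i / d_model).
    div_term = torch.exp(
        torch.arange(0, d_model, 2, dtype=torch.float32) * (-math.log(10000.0) / d_model)
    )
    pe = torch.zeros(max_len, d_model)
    pe[:, 0::2] = torch.sin(position * div_term)  # even dimensions
    pe[:, 1::2] = torch.cos(position * div_term)  # odd dimensions
    return pe


pe = sinusoidal_positional_encoding(max_len=50, d_model=16)

# The table is added to the embeddings, broadcasting over the batch dimension.
embeddings = torch.randn(2, 10, 16)          # stand-in for embedded tokens
encoded = embeddings + pe[:10].unsqueeze(0)  # (2, 10, 16)
```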

The encoder consists of multiple identical layers, each of which processes all positions of the input sequence in parallel. The core operation of each encoder layer is multi-head self-attention (the paper used 8 heads), which lets each head attend to different parts of the input sequence and capture different relationships between tokens.
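A minimal sketch of multi-head attention with scaled dot-product scores is shown below. The class and attribute names are assumptions made for this example and are not necessarily those used in this repository; the mask convention (0 means "do not attend") is also an assumption.

```python
import math

import torch
import torch.nn as nn


class MultiHeadAttention(nn.Module):
    def __init__(self, d_model: int = 512, num_heads: int = 8):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.num_heads = num_heads
        self.d_head = d_model // num_heads
        self.w_q = nn.Linear(d_model, d_model)
        self.w_k = nn.Linear(d_model, d_model)
        self.w_v = nn.Linear(d_model, d_model)
        self.w_o = nn.Linear(d_model, d_model)

    def _split_heads(self, x: torch.Tensor) -> torch.Tensor:
        # (batch, seq, d_model) -> (batch, heads, seq, d_head)
        b, t, _ = x.shape
        return x.view(b, t, self.num_heads, self.d_head).transpose(1, 2)

    def forward(self, query, key, value, mask=None):
        q = self._split_heads(self.w_q(query))
        k = self._split_heads(self.w_k(key))
        v = self._split_heads(self.w_v(value))
        # Scaled dot-product scores: (batch, heads, seq_q, seq_k)
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_head)
        if mask is not None:
            scores = scores.masked_fill(mask == 0, float("-inf"))
        weights = scores.softmax(dim=-1)
        out = weights @ v  # (batch, heads, seq_q, d_head)
        b, _, t, _ = out.shape
        # Concatenate the heads and apply the output projection.
        out = out.transpose(1, 2).contiguous().view(b, t, self.num_heads * self.d_head)
        return self.w_o(out), weights


x = torch.randn(2, 5, 512)
attention = MultiHeadAttention(d_model=512, num_heads=8)
output, attn_weights = attention(x, x, x)  # self-attention: query = key = value
```

Passing the same tensor as query, key, and value gives self-attention; each of the 8 heads works on a 64-dimensional slice of the 512-dimensional projection.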

The outputs of the self-attention layer are passed through a position-wise feedforward network. Each of these two sub-layers is wrapped in a residual connection followed by layer normalization: the sub-layer output is added to its input, and the sum is then normalized.
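One way to sketch a single encoder layer is shown below. To keep the example short it uses PyTorch's built-in `nn.MultiheadAttention` as a stand-in for a from-scratch attention module; the class name `EncoderLayer` and the default sizes (taken from the original paper) are assumptions, and normalization is applied after each residual addition as in the paper.

```python
import torch
import torch.nn as nn


class EncoderLayer(nn.Module):
    def __init__(self, d_model: int = 512, num_heads: int = 8,
                 d_ff: int = 2048, dropout: float = 0.1):
        super().__init__()
        # Built-in attention used here only as a stand-in.
        self.self_attn = nn.MultiheadAttention(
            d_model, num_heads, dropout=dropout, batch_first=True
        )
        self.ffn = nn.Sequential(
            nn.Linear(d_model, d_ff),
            nn.ReLU(),
            nn.Linear(d_ff, d_model),
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Sub-layer 1: self-attention, then residual connection and layer norm.
        attn_out, _ = self.self_attn(x, x, x, need_weights=False)
        x = self.norm1(x + self.dropout(attn_out))
        # Sub-layer 2: feedforward, then residual connection and layer norm.
        x = self.norm2(x + self.dropout(self.ffn(x)))
        return x


encoder_layer = EncoderLayer(d_model=64, num_heads=4, d_ff=128)
encoder_layer.eval()  # disable dropout for a deterministic forward pass
encoder_input = torch.randn(2, 7, 64)
encoder_output = encoder_layer(encoder_input)  # same shape as the input
```

Because the output has the same shape as the input, several such layers can be stacked, for example with `nn.ModuleList`.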

The decoder also consists of multiple identical layers, each of which applies a similar set of operations to the output sequence. Its self-attention is masked so that each position can attend only to earlier positions in the output. In addition to the self-attention and feedforward layers, each decoder layer also includes a multi-head attention layer that attends to the encoder output. This allows the decoder to align the input and output sequences and generate more accurate translations.
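The decoder layer can be sketched in the same spirit. This example again relies on PyTorch's built-in `nn.MultiheadAttention` as a stand-in, omits dropout for brevity, and uses hypothetical names (`DecoderLayer`, `memory` for the encoder output). The causal mask is included because, in the original paper, decoder self-attention may not look at future positions.

```python
import torch
import torch.nn as nn


class DecoderLayer(nn.Module):
    def __init__(self, d_model: int = 512, num_heads: int = 8, d_ff: int = 2048):
        super().__init__()
        self.self_attn = nn.MultiheadAttention(d_model, num_heads, batch_first=True)
        self.cross_attn = nn.MultiheadAttention(d_model, num_heads, batch_first=True)
        self.ffn = nn.Sequential(
            nn.Linear(d_model, d_ff), nn.ReLU(), nn.Linear(d_ff, d_model)
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)

    def forward(self, tgt: torch.Tensor, memory: torch.Tensor) -> torch.Tensor:
        t = tgt.size(1)
        # True marks positions that may NOT be attended to (future tokens).
        causal_mask = torch.triu(
            torch.ones(t, t, dtype=torch.bool, device=tgt.device), diagonal=1
        )
        # Masked self-attention over the output sequence.
        sa, _ = self.self_attn(tgt, tgt, tgt, attn_mask=causal_mask, need_weights=False)
        x = self.norm1(tgt + sa)
        # Cross-attention: queries from the decoder, keys/values from the encoder.
        ca, _ = self.cross_attn(x, memory, memory, need_weights=False)
        x = self.norm2(x + ca)
        # Position-wise feedforward network.
        return self.norm3(x + self.ffn(x))


decoder_layer = DecoderLayer(d_model=64, num_heads=4, d_ff=128)
memory = torch.randn(1, 9, 64)  # stand-in for the encoder output
tgt = torch.randn(1, 5, 64)     # stand-in for embedded target tokens
decoder_output = decoder_layer(tgt, memory)
```

Note that the source and target sequences may have different lengths; only the model dimension has to match.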

The decoder output is passed through a final linear layer followed by a softmax, which produces a probability distribution over the output vocabulary. During training, the model is trained to maximize the likelihood of the correct output sequence. During inference, the output sequence is generated one token at a time, either by picking the most likely token or by sampling from the predicted probability distribution.
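The output step can be illustrated with the short sketch below. The tensors are random stand-ins and the variable names (`generator`, `decoder_out`, `targets`) are hypothetical; the point is only to show how the logits relate to the training loss and to token selection at inference time.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

vocab_size, d_model = 100, 64
generator = nn.Linear(d_model, vocab_size)  # projects decoder output to vocabulary logits

decoder_out = torch.randn(2, 5, d_model)           # stand-in for the decoder output
targets = torch.randint(0, vocab_size, (2, 5))     # stand-in for the correct tokens

logits = generator(decoder_out)                    # (batch, seq_len, vocab_size)
probs = F.softmax(logits, dim=-1)                  # distribution over the vocabulary

# Training: cross-entropy is the negative log-likelihood of the correct tokens,
# so minimizing it maximizes the likelihood of the target sequence.
loss = F.cross_entropy(logits.reshape(-1, vocab_size), targets.reshape(-1))

# Inference: choose the next token from the distribution at the last position.
next_probs = probs[:, -1]                          # (batch, vocab_size)
greedy_token = next_probs.argmax(dim=-1)           # most likely token
sampled_token = torch.multinomial(next_probs, num_samples=1).squeeze(1)  # random sample
```

Greedy selection is deterministic, while sampling can produce different outputs on each run.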

If you are interested in learning more about the Transformer architecture, I recommend the following resources:

- Attention Is All You Need paper.

- The Illustrated Transformer by Jay Alammar.

- PyTorch Transformers from Scratch by Aladdin Persson.

- The Annotated Transformer by Sasha Rush.

- Understanding and Coding the Self-Attention Mechanism of Large Language Models From Scratch by Sebastian Raschka.

- Transformers from Scratch by Peter Bloem.