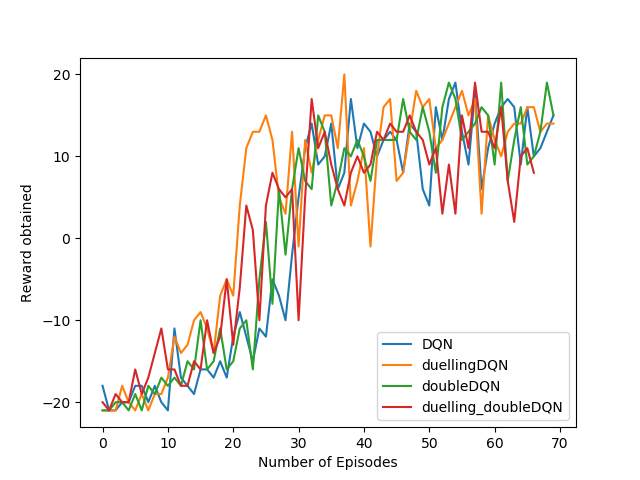

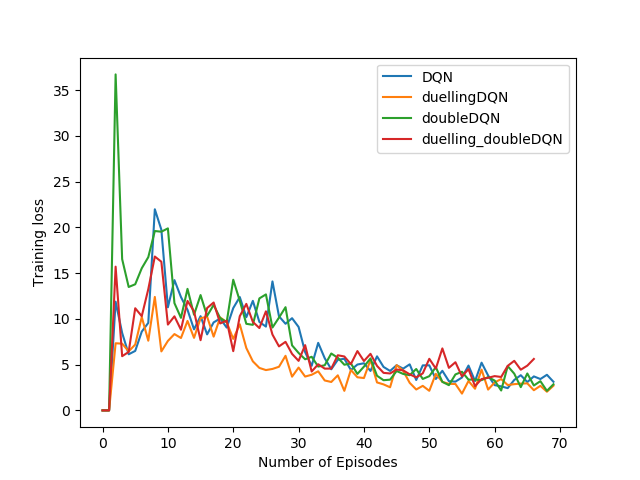

This repo contains implementations of Deep Q-Network (DQN) and two of its variants, Double DQN and Duelling DQN. It also includes a variant that combines the duelling architecture with a Double DQN-style loss. Performance was evaluated on PongDeterministic-v4, which was chosen because training on it converges quickly.
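The variants differ in two places: how the network turns its outputs into Q-values (duelling) and how the bootstrap target is computed (Double DQN). The sketch below illustrates both for a single transition using plain Python lists instead of a deep learning framework. The function names are hypothetical and not taken from this repo's code. The duelling aggregation shown uses mean-subtracted advantages, which is one common choice and an assumption here.

```python
from typing import List


def argmax(xs: List[float]) -> int:
    """Index of the largest element."""
    return max(range(len(xs)), key=lambda i: xs[i])


def dueling_q_values(value: float, advantages: List[float]) -> List[float]:
    """Duelling head (assumed aggregation): Q(s, a) = V(s) + A(s, a) - mean_a' A(s, a')."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def dqn_target(reward: float, done: bool,
               next_q_target: List[float], gamma: float = 0.99) -> float:
    """Standard DQN: the target network both selects and evaluates the next action."""
    if done:
        return reward  # no bootstrapping past a terminal state
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward: float, done: bool,
                      next_q_online: List[float], next_q_target: List[float],
                      gamma: float = 0.99) -> float:
    """Double DQN: the online network selects the action, the target network evaluates it."""
    if done:
        return reward
    best_action = argmax(next_q_online)
    return reward + gamma * next_q_target[best_action]


if __name__ == "__main__":
    online = [1.0, 3.0, 2.0]  # online net prefers action 1
    target = [2.5, 1.5, 0.5]  # target net rates action 0 highest
    print(dueling_q_values(1.0, [1.0, 2.0, 3.0]))                 # [0.0, 1.0, 2.0]
    print(dqn_target(1.0, False, target, gamma=0.5))               # 2.25
    print(double_dqn_target(1.0, False, online, target, gamma=0.5))  # 1.75
```

In this example, plain DQN bootstraps from the target network's own maximum (2.5), while Double DQN evaluates the online network's choice (action 1, worth 1.5). Decoupling selection from evaluation in this way is what reduces the overestimation bias of the max operator. The "duelling + Double DQN" variant applies `double_dqn_target` to Q-values produced by a duelling head.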

The code is heavily inspired by https://github.com/higgsfield/RL-Adventure, and the network architecture and hyperparameters are borrowed directly from that repo.