neural-style-transfer

Follow along in a GPU-enabled workbook! Link.

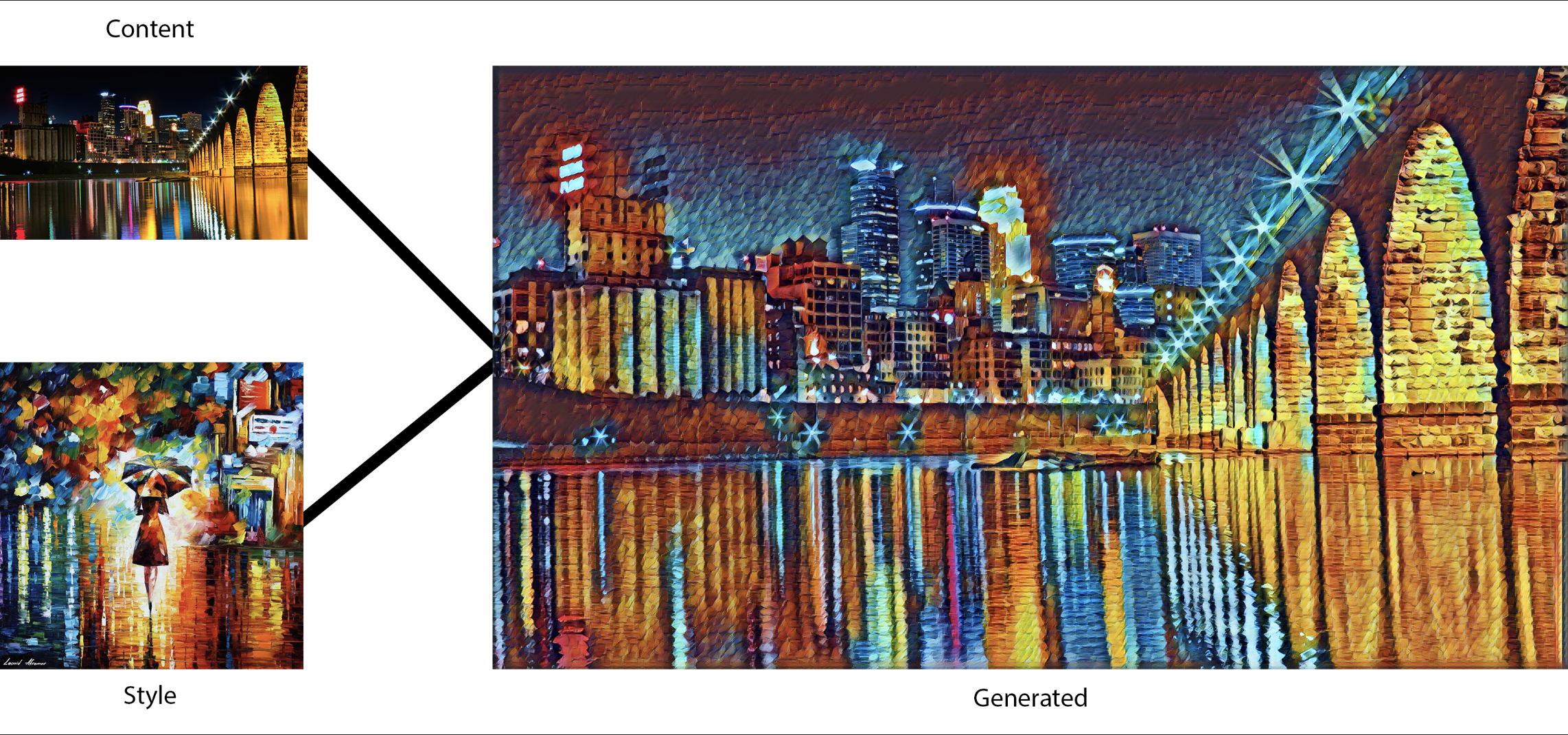

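Neural Style Transfer renders the content of one image in the artistic style of another. It relies on transfer learning: the pre-trained VGG-19 image classification network is reused as a feature extractor, and its intermediate activations are used to measure how similar two images are in content and in style.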
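Both methods read activations from specific VGG-19 layers by name (for example `conv4_2`). Deep-learning frameworks, however, usually expose VGG-19's convolutional part as a single flat sequence. The minimal sketch below is an illustration, not the notebook's code. It converts layer names into positions in that sequence, assuming the torchvision-style layout without batch normalization: each convolution is immediately followed by a ReLU, and a max-pool closes each of the five blocks. `VGG19_CFG` and `vgg19_layer_indices` are hypothetical names.

```python
# VGG-19 ("configuration E"): output channels per conv layer; "M" marks a 2x2 max-pool.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def vgg19_layer_indices(cfg=VGG19_CFG):
    """Map names like 'conv4_2' to positions in a flat conv/ReLU/pool sequence.

    Assumes every conv is directly followed by its ReLU and each block ends
    in a max-pool (no batch norm), as in torchvision's plain VGG-19.
    """
    indices = {}
    position, block, conv_in_block = 0, 1, 0
    for entry in cfg:
        if entry == "M":
            indices[f"pool{block}"] = position
            position += 1
            block += 1
            conv_in_block = 0
        else:
            conv_in_block += 1
            indices[f"conv{block}_{conv_in_block}"] = position
            indices[f"relu{block}_{conv_in_block}"] = position + 1
            position += 2
    return indices


LAYERS = vgg19_layer_indices()
CONTENT_LAYER = "conv4_2"
STYLE_LAYERS = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]

print(LAYERS[CONTENT_LAYER])                    # 21
print([LAYERS[name] for name in STYLE_LAYERS])  # [0, 5, 10, 19, 28]
```

Under that layout assumption, these indices can be used to pick intermediate outputs out of a sequential feature extractor such as torchvision's `vgg19().features`.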

This repository explores two methods: an optimization-based method introduced by Leon A. Gatys, and a feed-forward method introduced by Justin Johnson.

Gatys' method is an iterative process (typically 150-200 iterations) that directly optimizes the pixels of a generated image to minimize a weighted sum of a content cost and a style cost. The content cost compares the feature maps of layer conv4_2 for the generated image and the content image. The style cost compares the Gram matrices (inner products between feature channels) of the outputs of layers conv1_1, conv2_1, conv3_1, conv4_1, and conv5_1 for the generated image and the style image.
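The two cost functions can be sketched in NumPy. This is a minimal illustration that follows the formulation in Gatys et al. (half squared error for content; per-layer Gram differences scaled by 1 / (4 N_l² M_l²) for style, with equal layer weights). It assumes the VGG activations have already been computed and are passed in as `(C, H, W)` arrays keyed by layer name. The function names and the `alpha`/`beta` defaults are hypothetical placeholders, not the notebook's values. In practice these costs would be computed inside an autodiff framework so that their gradient with respect to the generated image drives each iteration.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of a (C, H, W) feature map: inner products between channels."""
    channels = features.shape[0]
    flat = features.reshape(channels, -1)  # (N_l, M_l), where M_l = H * W
    return flat @ flat.T                   # (N_l, N_l)


def content_cost(gen_feats, content_feats):
    """Half the squared error between the content layer's feature maps."""
    return 0.5 * np.sum((gen_feats - content_feats) ** 2)


def style_layer_cost(gen_feats, style_feats):
    """Squared Gram-matrix difference for one layer, scaled by 1 / (4 N_l^2 M_l^2)."""
    n_l = gen_feats.shape[0]
    m_l = gen_feats.shape[1] * gen_feats.shape[2]
    diff = gram_matrix(gen_feats) - gram_matrix(style_feats)
    return np.sum(diff ** 2) / (4.0 * n_l ** 2 * m_l ** 2)


def total_cost(gen, content, style, content_layer, style_layers, alpha=1.0, beta=1e3):
    """alpha * content cost + beta * style cost, with style layers weighted equally.

    gen, content and style map layer names to (C, H, W) activation arrays.
    alpha and beta are illustrative placeholders, not tuned values.
    """
    j_content = content_cost(gen[content_layer], content[content_layer])
    j_style = sum(style_layer_cost(gen[name], style[name]) for name in style_layers)
    return alpha * j_content + beta * j_style / len(style_layers)


# Toy usage with random "activations" (made-up shapes, far smaller than VGG-19's).
rng = np.random.default_rng(0)
shapes = {"conv1_1": (8, 16, 16), "conv2_1": (16, 8, 8), "conv4_2": (32, 4, 4)}
feats = {name: rng.random(shape) for name, shape in shapes.items()}
other = {name: rng.random(shape) for name, shape in shapes.items()}

print(total_cost(feats, feats, feats, "conv4_2", ["conv1_1", "conv2_1"]))      # 0.0
print(total_cost(other, feats, feats, "conv4_2", ["conv1_1", "conv2_1"]) > 0)  # True
```

The Gram matrix discards spatial layout and keeps only which channels fire together. This is why matching it transfers texture and color statistics without copying the style image's arrangement.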

Instead of running an iterative optimization for every image, Johnson's method stylizes an image with a single forward pass through a separate image transformation network, trained for one fixed style image. The pre-trained VGG network is still used, but only as a fixed loss network: it computes the content and style losses while the transformation network is trained on 80,000 images from the Microsoft COCO dataset. A single forward pass through the trained network achieves a loss comparable to roughly 100 iterations of Gatys' method.
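The per-image training loss for the transformation network can be sketched in NumPy. This is a minimal illustration following the "perceptual loss" formulation from Johnson et al.: a feature reconstruction loss normalized by C·H·W, and a style loss equal to the squared Frobenius distance between Gram matrices that are themselves divided by C·H·W. It assumes the loss-network activations are already available as `(C, H, W)` arrays keyed by layer name. The transformation network `f_W`, the function names, and the weight defaults are hypothetical. The total-variation regularizer used in the paper is omitted.

```python
import numpy as np


def gram_normalized(features):
    """Gram matrix of a (C, H, W) feature map, divided by C * H * W."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)


def feature_loss(out_feats, target_feats):
    """Feature reconstruction loss: squared L2 distance divided by C * H * W."""
    return np.sum((out_feats - target_feats) ** 2) / out_feats.size


def style_loss(out_feats, style_feats):
    """Style reconstruction loss: squared Frobenius distance of normalized Gram matrices."""
    diff = gram_normalized(out_feats) - gram_normalized(style_feats)
    return np.sum(diff ** 2)


def perceptual_loss(out, content, style, content_layer, style_layers,
                    content_weight=1.0, style_weight=10.0):
    """Weighted loss for one training image.

    out:     loss-network activations of the transformed image y_hat = f_W(x)
    content: activations of the COCO training image x itself (the content target)
    style:   activations of the single style image the network is trained for
    The weights are illustrative placeholders.
    """
    loss = content_weight * feature_loss(out[content_layer], content[content_layer])
    loss += style_weight * sum(style_loss(out[n], style[n]) for n in style_layers)
    return loss


# Toy usage with random "activations" (made-up layer names and shapes).
rng = np.random.default_rng(0)
x_feats = {"a": rng.random((4, 8, 8)), "b": rng.random((8, 4, 4))}
s_feats = {"a": rng.random((4, 8, 8)), "b": rng.random((8, 4, 4))}
print(perceptual_loss(x_feats, x_feats, s_feats, "b", ["a", "b"]) > 0)  # True
```

During training, gradients of this loss update only the weights of `f_W`, and the VGG loss network stays frozen. At inference time only `f_W` is run, so stylizing a new image costs a single forward pass instead of an optimization loop.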

The main notebook, Neural_Style_Transfer.ipynb, contains all relevant documentation for this repository.