This is the implementation for Full Parameter Fine-Tuning for Large Language Models with Limited Resources.

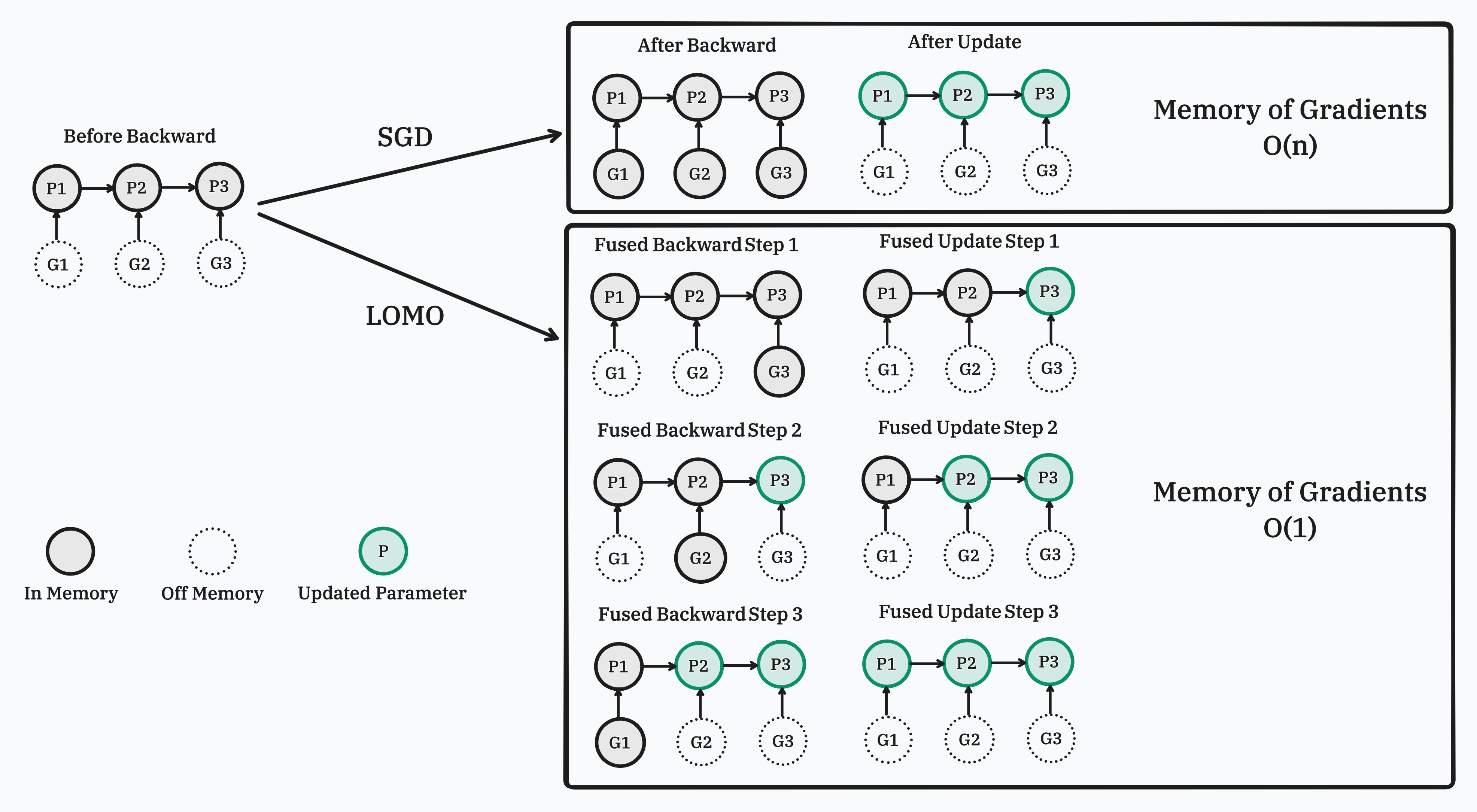

In this work, we propose a new optimizer, LOw-Memory Optimization (LOMO), which fuses the gradient computation and the parameter update in one step to reduce memory usage. Our approach enables the full parameter fine-tuning of a 7B model on a single RTX 3090, or a 65B model on a single machine with 8×RTX 3090, each with 24GB memory.
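The idea of fusing the gradient computation with the parameter update can be illustrated with a toy example. The sketch below is not the code in this repository: it uses a chain of scalar "layers" in plain Python, plain SGD, and hypothetical function names (`sgd_step_standard`, `sgd_step_fused`), purely to show that updating each weight as soon as its gradient is known gives the same result as storing all gradients first, while only one weight gradient needs to exist at a time.

```python
def forward(weights, x):
    """Run a chain of scalar layers y = w * x, recording each layer's input."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = w * x
    return x, inputs


def sgd_step_standard(weights, x, target, lr):
    """Baseline: compute and store every gradient, then update all weights."""
    y, inputs = forward(weights, x)
    upstream = y - target  # dL/dy for L = 0.5 * (y - target) ** 2
    grads = [0.0] * len(weights)  # one stored gradient per weight
    for i in reversed(range(len(weights))):
        grads[i] = upstream * inputs[i]
        upstream = upstream * weights[i]
    return [w - lr * g for w, g in zip(weights, grads)]


def sgd_step_fused(weights, x, target, lr):
    """Fused: update each weight right after its gradient is computed."""
    y, inputs = forward(weights, x)
    upstream = y - target
    new_weights = list(weights)
    for i in reversed(range(len(weights))):
        grad = upstream * inputs[i]           # the only weight gradient alive
        upstream = upstream * new_weights[i]  # propagate with the old weight first
        new_weights[i] -= lr * grad           # update, then let grad be discarded
    return new_weights


weights = [0.5, -1.5, 2.0]
standard = sgd_step_standard(weights, x=1.0, target=3.0, lr=0.1)
fused = sgd_step_fused(weights, x=1.0, target=3.0, lr=0.1)
print("standard:", standard)
print("fused:   ", fused)
```

Note the ordering inside the fused loop: the upstream gradient is propagated through the layer before that layer's weight is overwritten, so earlier layers still see gradients computed with the pre-update weights.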

LOMO is integrated with the CoLLiE library, which supports Collaborative Tuning of Large Language Models in an Efficient Way.

- `torch`
- `deepspeed`
- `transformers`
- `peft`
- `wandb`

The minimum dependency is PyTorch; the other packages are used to reproduce our paper results.
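To see which of the packages above are available in the current environment, a small check like the following can help. It is a sketch using only the standard library; the `REQUIRED`/`OPTIONAL` split mirrors the note above, and the helper name `missing_packages` is made up for illustration.

```python
from importlib.util import find_spec

# Split assumed from the note above: PyTorch is the minimum dependency,
# the rest are only needed to reproduce the paper results.
REQUIRED = ["torch"]
OPTIONAL = ["deepspeed", "transformers", "peft", "wandb"]


def missing_packages(names):
    """Return the subset of top-level package names that cannot be imported."""
    return [name for name in names if find_spec(name) is None]


missing_required = missing_packages(REQUIRED)
missing_optional = missing_packages(OPTIONAL)

if missing_required:
    print("Missing required packages:", ", ".join(missing_required))
if missing_optional:
    print("Missing optional packages:", ", ".join(missing_optional))
if not missing_required and not missing_optional:
    print("All listed packages are installed.")
```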

`bash run.sh`

To change the model size, dataset, or hyperparameters, modify the files under `config`.

We provide the sampled datasets used in our experiments here.

Due to limited computational resources, we report the highest results obtained from experiments conducted with the same random seed (42).

We acknowledge this limitation in our work and plan to conduct repeated experiments in the next version to address it.

Feel free to raise an issue if you have any questions.