- Implementation based on Harmonic DenseNet: A low memory traffic network (ICCV 2019)

- Refer to Pytorch-HarDNet for more information about the backbone model

- This repo was forked from meetshah1995/pytorch-semseg

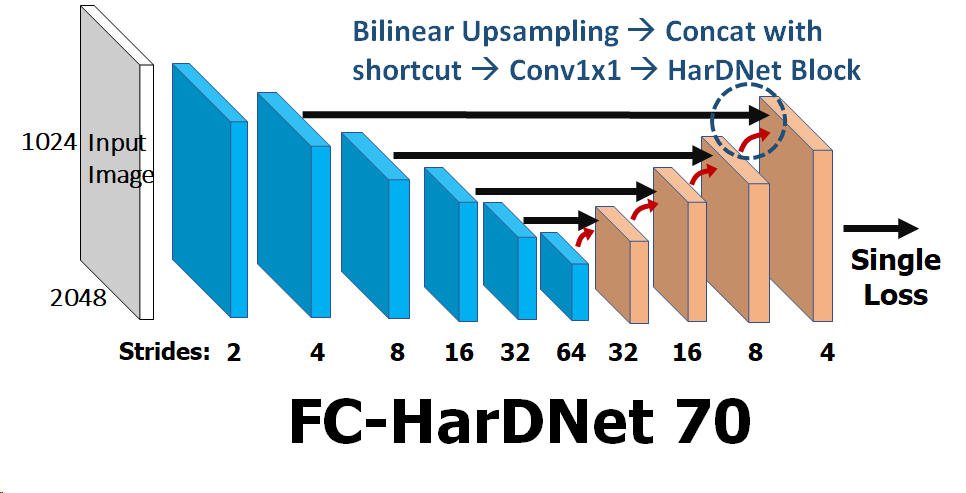

- Simple U-shaped encoder-decoder structure

- Conv3x3/Conv1x1 only (including the first layer)

- No self-attention layer or Pyramid Pooling

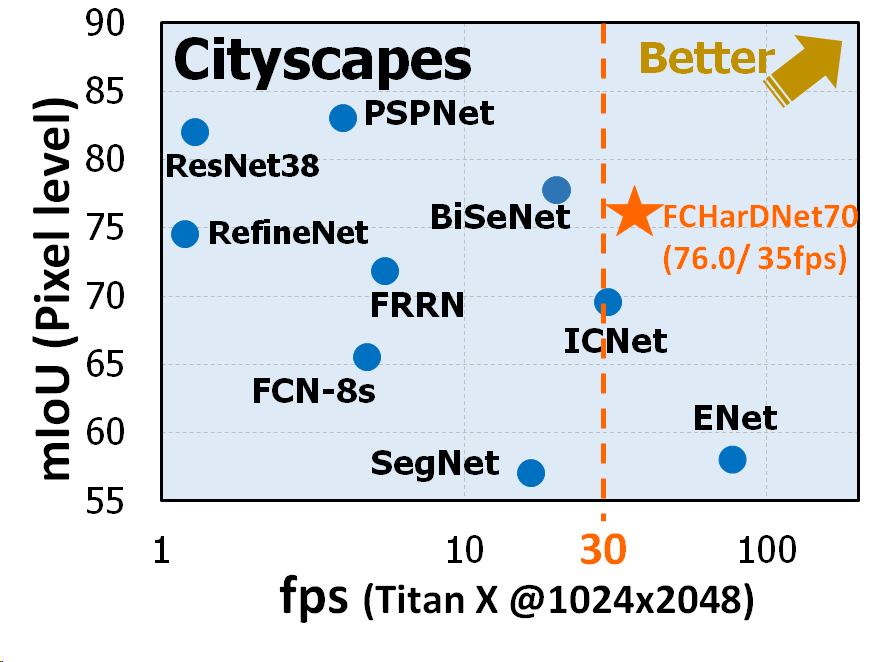

| Method | #Param (M) | GMACs / GFLOPs | Cityscapes mIoU | fps on Titan-V @1024x2048 | fps on 1080ti @1024x2048 |
|---|---|---|---|---|---|
| ICNet | 7.7 | 30.7 | 69.5 | 63 | 48 |
| SwiftNetRN-18 | 11.8 | 104 | 75.5 | - | 39.9 |
| BiSeNet (1024x2048) | 13.4 | 119 | 77.7 | 36 | 27 |
| BiSeNet (768x1536) | 13.4 | 66.8 | 74.7 | 72** | 54** |
| FC-HarDNet-70 | 4.1 | 35.4 | 76.0 | 70 | 53 |

- ** Speed measured at 1536x768 instead of the full 1024x2048 resolution.
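The fps columns are throughput figures at a fixed input resolution. As a rough sketch of how such a number can be measured (this is not the repository's timing code), the hypothetical helper `measure_fps` below times repeated calls of any callable after a few warm-up runs. The callable stands in for one forward pass on one image.

```python
import time


def measure_fps(run_once, n_warmup=5, n_iters=20):
    """Return the average number of `run_once` calls per second.

    `measure_fps` and its parameters are hypothetical names for this sketch;
    `run_once` stands in for a single forward pass on one image.
    """
    # Warm-up calls are excluded from timing (caches, lazy initialisation).
    for _ in range(n_warmup):
        run_once()

    # With a PyTorch model on a GPU, call torch.cuda.synchronize() here and
    # again before reading the end time, because CUDA kernels are
    # launched asynchronously.
    start = time.perf_counter()
    for _ in range(n_iters):
        run_once()
    elapsed = time.perf_counter() - start

    return n_iters / elapsed


if __name__ == "__main__":
    # Dummy workload standing in for model inference.
    fps = measure_fps(lambda: sum(range(10000)))
    print(f"{fps:.1f} fps")
```

Measured values depend heavily on the GPU, input resolution, and batch size, so numbers are only comparable when those match.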

- pytorch >=0.4.0

- torchvision ==0.2.0

- scipy

- tqdm

- tensorboardX

Setup config file

Please see the usage section in meetshah1995/pytorch-semseg

To train the model:

python train.py [-h] [--config [CONFIG]]

--config Configuration file to use (default: hardnet.yml)

To validate the model:

usage: validate.py [-h] [--config [CONFIG]] [--model_path [MODEL_PATH]] [--save_image] [--eval_flip] [--measure_time]

--config Config file to be used

--model_path Path to the saved model

--eval_flip Enable evaluation with flipped image | False by default

--measure_time Enable evaluation with time (fps) measurement | True by default

--save_image Enable writing result images to out_rgb (pred label blended images) and out_predID
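The options listed above map naturally onto an `argparse` parser. The following sketch is written from the usage text alone and is not copied from `validate.py`. The `build_parser` helper, the default config name (borrowed from the documented `train.py` default), and the `--no-measure_time` switch are assumptions. The extra switch is there because a flag that is on by default cannot be turned off by a plain `store_true` action.

```python
import argparse


def build_parser():
    """Build a parser mirroring the documented validate.py options (sketch)."""
    parser = argparse.ArgumentParser(prog="validate.py")
    # nargs="?" matches the "[--config [CONFIG]]" form in the usage line.
    # The default is an assumption taken from train.py's documented default.
    parser.add_argument("--config", nargs="?", type=str, default="hardnet.yml",
                        help="Config file to be used")
    parser.add_argument("--model_path", nargs="?", type=str, default=None,
                        help="Path to the saved model")
    parser.add_argument("--eval_flip", action="store_true",
                        help="Enable evaluation with flipped image | False by default")
    # On by default; the --no-measure_time switch is hypothetical.
    parser.add_argument("--measure_time", dest="measure_time", action="store_true",
                        help="Enable evaluation with time (fps) measurement | True by default")
    parser.add_argument("--no-measure_time", dest="measure_time", action="store_false",
                        help="Disable time measurement (hypothetical flag)")
    parser.set_defaults(measure_time=True)
    parser.add_argument("--save_image", action="store_true",
                        help="Write result images to out_rgb and out_predID")
    return parser


if __name__ == "__main__":
    # "model.pkl" is a placeholder file name, not a file shipped with the repo.
    args = build_parser().parse_args(["--model_path", "model.pkl", "--eval_flip"])
    print(args)
```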

- Cityscapes pretrained weights: Download (Val mIoU: 77.7, Test mIoU: 75.9)

- Cityscapes pretrained with color jitter augmentation: Download (Val mIoU: 77.4, Test mIoU: 76.0)

- HarDNet-Petite weights pretrained on ImageNet: included in weights/hardnet_petite_base.pth
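The Val and Test mIoU figures quoted for the weights are mean intersection-over-union scores. As a reminder of how that metric is commonly computed (a generic sketch, not the repository's evaluation code), the hypothetical `mean_iou` function below builds a confusion matrix from flat label lists and averages the per-class IoU, TP / (TP + FP + FN), over every class that appears in the ground truth or the prediction. The `ignore_index` value of 255 is an assumption; use whatever label your dataset loader assigns to unlabeled pixels.

```python
def mean_iou(y_true, y_pred, n_classes, ignore_index=255):
    """Mean IoU over classes from flat label sequences (generic sketch)."""
    # conf[t][p] counts pixels with ground truth t that were predicted as p.
    conf = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        if t == ignore_index:
            continue  # unlabeled pixels do not contribute
        conf[t][p] += 1

    ious = []
    for c in range(n_classes):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp
        fp = sum(conf[r][c] for r in range(n_classes)) - tp
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both labels and predictions
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Class 0: IoU = 1/2, class 1: IoU = 2/3, mean = 7/12.
    print(round(mean_iou([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2), 3))  # 0.583
```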