LiftImage3D: Lifting Any Single Image to 3D Gaussians with Video Generation Priors

Yabo Chen¹*, Chen Yang¹*, Jiemin Fang²‡, Xiaopeng Zhang², Lingxi Xie², Wei Shen¹, Wenrui Dai¹, Hongkai Xiong¹, Qi Tian²

¹Shanghai Jiao Tong University  ²Huawei Inc.

* Equal contribution, in no particular order.

Effectively leveraging the priors of Latent Video Diffusion Models (LVDMs) faces three key challenges: (1) quality degradation under large camera motions, (2) difficulty achieving precise camera control, and (3) geometric distortions inherent to the diffusion process that damage 3D consistency.

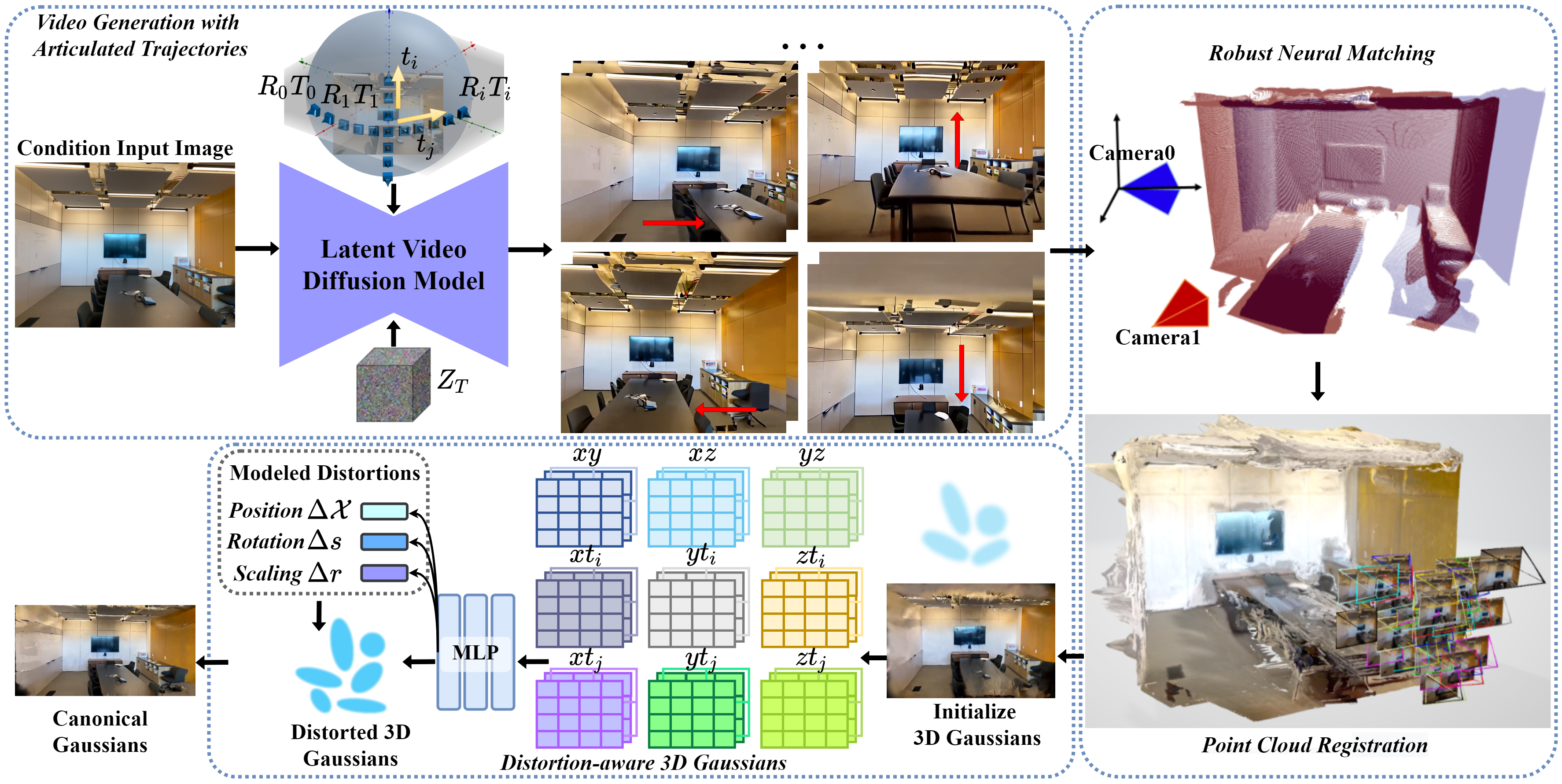

The overall pipeline of LiftImage3D. First, we extend an LVDM to generate diverse video clips from a single image using an articulated camera trajectory strategy. All generated frames are then matched with a robust neural matching module and registered into a point cloud. Finally, we initialize Gaussians from the registered point cloud and construct a distortion field that models the independent distortion of each video frame with respect to the canonical 3DGS.
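The caption describes two steps that can be illustrated in isolation: initializing Gaussians from the registered point cloud, and letting each generated frame carry its own distortion on top of one shared canonical 3DGS. The toy sketch below shows only that data flow and is not LiftImage3D's implementation. It assumes (1) the initial isotropic scale follows the nearest-neighbour rule of the original 3DGS code, computed here by brute force instead of the simple-knn CUDA kernel, and (2) the distortion is a hypothetical per-frame table of additive offsets to Gaussian centers; the actual distortion field is learned and is not specified in enough detail here to reproduce. All class and function names are hypothetical.

```python
# Toy illustration only; all names are hypothetical, not LiftImage3D's code.
import math
from dataclasses import dataclass, field


def knn_log_scales(points, k=3):
    """Isotropic log-scale per point: log(sqrt(mean squared distance to the k
    nearest neighbours)), the rule used by the original 3DGS initialization.
    Brute force, O(N^2); assumes at least two points."""
    scales = []
    for i, p in enumerate(points):
        d2 = sorted(
            sum((a - b) ** 2 for a, b in zip(p, q))
            for j, q in enumerate(points)
            if j != i
        )
        nearest = d2[:k]
        mean_d2 = max(sum(nearest) / len(nearest), 1e-7)  # clamp, as 3DGS does
        scales.append(math.log(math.sqrt(mean_d2)))
    return scales


@dataclass
class CanonicalGaussians:
    means: list       # (x, y, z) centers shared by every frame
    log_scales: list  # one isotropic log-scale per Gaussian

    @classmethod
    def from_points(cls, points):
        """Initialize one Gaussian per registered point."""
        return cls(means=[tuple(p) for p in points], log_scales=knn_log_scales(points))


@dataclass
class PerFrameDistortion:
    """Each generated frame owns its own offsets; the canonical set is untouched."""
    num_gaussians: int
    offsets: dict = field(default_factory=dict)  # frame index -> list of (dx, dy, dz)

    def offsets_for(self, frame):
        # Frames with no stored offsets are treated as undistorted.
        return self.offsets.get(frame, [(0.0, 0.0, 0.0)] * self.num_gaussians)

    def apply(self, gaussians, frame=None):
        """Centers used to render `frame`; frame=None renders the canonical 3DGS."""
        if frame is None:
            return list(gaussians.means)
        return [
            tuple(m + d for m, d in zip(mean, off))
            for mean, off in zip(gaussians.means, self.offsets_for(frame))
        ]
```

Keeping the distortion per frame means an inconsistency in one generated frame only moves that frame's offsets, not the shared canonical Gaussians. In a real optimization the offsets (or a network producing them) would presumably be fitted so that each rendered frame matches its generated video frame; rendering and optimization are omitted entirely here.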


- 12/13/2024: Posted the arXiv paper and project page.

- 12/23/2024: Posted the LiftImage3D pipeline and requirements.

- Release the code based on ViewCrafter

- Release the code of test prototype

- Release the local 3DGS viewer

- Clone LiftImage3D.

git clone --recursive https://github.com/AbrahamYabo/LiftImage3D

cd LiftImage3D

# if you have already cloned LiftImage3D:

# git submodule update --init --recursive

- Create the conda environment. PyTorch 2.0 is recommended for faster training and inference.

conda create -n liftimage3d python=3.11

conda activate liftimage3d

conda install pytorch torchvision pytorch-cuda=12.1 -c pytorch -c nvidia # set pytorch-cuda to match the CUDA version on your system

pip install -r requirements.txt

- Install xformers to enable memory-efficient transformer attention.

conda install xformers -c xformers

# or, install from source

pip install -v -U git+https://github.com/facebookresearch/xformers.git@main#egg=xformers

- Install the 3DGS submodules. Two variants of diff-gaussian-rasterization, diff-w and diff-ori, are provided for different rendering strategies.

# Two diff-gaussian-rasterization variants for different rendering strategies

cd distort-3dgs

pip install -e submodules/diff-w

pip install -e submodules/diff-ori

pip install -e submodules/simple-knn

cd ../

- Optional: compile the CUDA kernels for RoPE (as in CroCo v2).

# DUSt3R relies on RoPE positional embeddings; compiling these CUDA kernels gives a faster runtime.

cd mast3r/dust3r/croco/models/curope/

python setup.py build_ext --inplace

cd ../../../../../

- Download all the checkpoints needed.

mkdir -p checkpoints/

wget https://huggingface.co/depth-anything/Depth-Anything-V2-Large/resolve/main/depth_anything_v2_vitl.pth -P checkpoints/

wget https://download.europe.naverlabs.com/ComputerVision/MASt3R/MASt3R_ViTLarge_BaseDecoder_512_catmlpdpt_metric.pth -P checkpoints/

wget https://huggingface.co/TencentARC/MotionCtrl/resolve/main/motionctrl_svd.ckpt -P checkpoints/

Because my compute environment cannot access the internet directly, I keep laion/CLIP-ViT-H-14-laion2B-s32B-b79K locally. You can download it from https://huggingface.co/laion/CLIP-ViT-H-14-laion2B-s32B-b79K/tree/main. All checkpoints should be organized as follows:

├── checkpoints
│   ├── depth_anything_v2_vitl.pth
│   ├── MASt3R_ViTLarge_BaseDecoder_512_catmlpdpt_metric.pth
│   └── motionctrl_svd.ckpt
└── laion
    └── CLIP-ViT-H-14-laion2B-s32B-b79K
        ├── config.json
        ├── open_clip_config.json
        ├── open_clip_pytorch_model.bin
        └── ...
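Since the run script needs every file in the layout above, and `--width`/`--height` must equal the input image's resolution, a small preflight helper can catch both problems before a long run. The sketch below is hypothetical and not part of the repository: it assumes the paths shown in the tree (relative to the repository root) and a PNG input, and it reads the image size from the PNG header with the standard library only, so it does not depend on any imaging package. The function names are illustrative.

```python
# preflight.py: hypothetical helper, not shipped with LiftImage3D.
import struct
import warnings
from pathlib import Path

# Files from the checkpoint layout above, relative to the repository root.
REQUIRED_FILES = [
    "checkpoints/depth_anything_v2_vitl.pth",
    "checkpoints/MASt3R_ViTLarge_BaseDecoder_512_catmlpdpt_metric.pth",
    "checkpoints/motionctrl_svd.ckpt",
    "laion/CLIP-ViT-H-14-laion2B-s32B-b79K/config.json",
    "laion/CLIP-ViT-H-14-laion2B-s32B-b79K/open_clip_config.json",
    "laion/CLIP-ViT-H-14-laion2B-s32B-b79K/open_clip_pytorch_model.bin",
]

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def missing_checkpoints(root="."):
    """Return the required files that are not present under `root`."""
    base = Path(root)
    return [rel for rel in REQUIRED_FILES if not (base / rel).is_file()]


def parse_png_size(header):
    """Extract (width, height) from the first 24 bytes of a PNG file.

    Layout: 8-byte signature, 4-byte chunk length, b"IHDR", then
    big-endian uint32 width and height.
    """
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError("not a PNG header")
    return struct.unpack(">II", header[16:24])


def png_size(path):
    """Read (width, height) of a PNG file from disk."""
    with open(path, "rb") as f:
        return parse_png_size(f.read(24))


def build_command(input_file, width, height, cache_dir="./output"):
    """Argument list for the run command shown in this README."""
    return [
        "python", "run_liftimg3d_motionctrl.py",
        "--cache_dir", cache_dir,
        "--input_file", str(input_file),
        "--width", str(width),
        "--height", str(height),
    ]


def preflight(input_file, root=".", cache_dir="./output"):
    """Return (missing_files, command); command is None while files are missing."""
    missing = missing_checkpoints(root)
    if missing:
        return missing, None
    width, height = png_size(input_file)
    if width != 1024:
        # This README recommends a width of 1024 for MotionCtrl's generation quality.
        warnings.warn(f"input width is {width}; a width of 1024 is recommended")
    return [], build_command(input_file, width, height, cache_dir)
```

For example, `missing, cmd = preflight("input_images/testimg001.png")` returns an empty `missing` list and the argument list once all files are in place, and `" ".join(cmd)` gives a line that can be pasted into the shell. Inputs in other formats would need an imaging library such as Pillow in place of `png_size`.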

- Try LiftImage3D now

python run_liftimg3d_motionctrl.py --cache_dir ./output --input_file input_images/testimg001.png --width 1024 --height 768

# Note: --width and --height must match the actual resolution of the input image.

# To preserve MotionCtrl's generation quality, a width of 1024 is recommended.

This repository is based on the original MotionCtrl, ViewCrafter, DUSt3R, MASt3R, Depth Anything V2, 3DGS, and 4DGS. Thanks to their authors for their awesome work.

If you find this repository helpful in your research, please consider citing the paper and giving it a ⭐:

@misc{chen2024liftimage3d,
      title={LiftImage3D: Lifting Any Single Image to 3D Gaussians with Video Generation Priors},
      author={Yabo Chen and Chen Yang and Jiemin Fang and Xiaopeng Zhang and Lingxi Xie and Wei Shen and Wenrui Dai and Hongkai Xiong and Qi Tian},
      year={2024},
      eprint={2412.09597},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}