- Demo

- Overview

- Technical Aspect

- Directory Tree

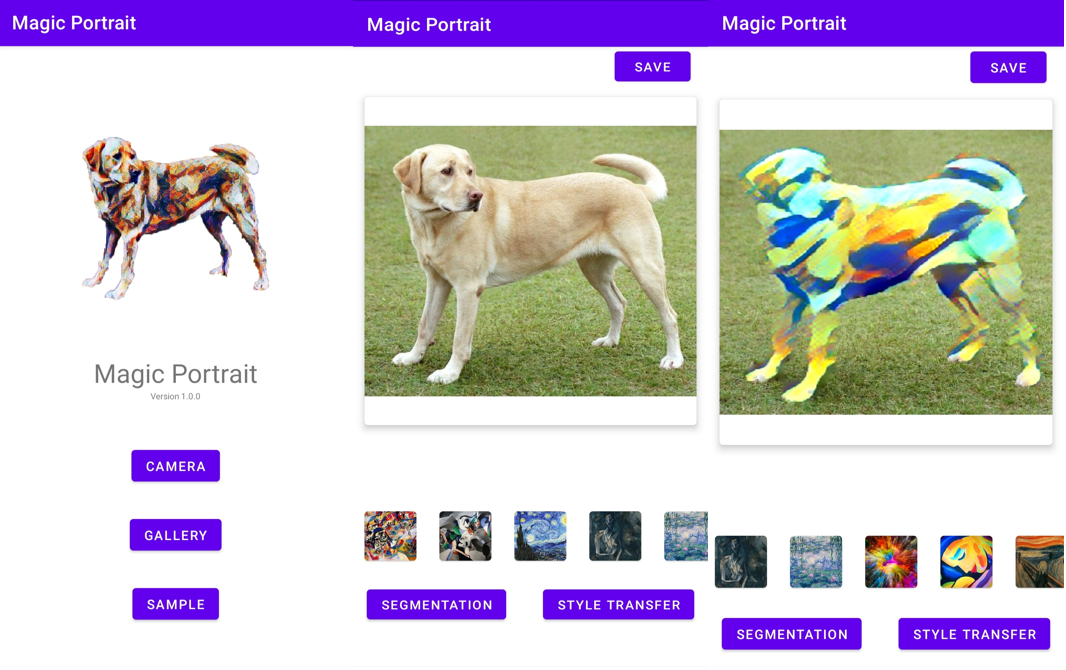

- Screenshots from the application environment

- To Do

- Bug / Feature Request

- Technologies Used

- Credits

Download the app from here.

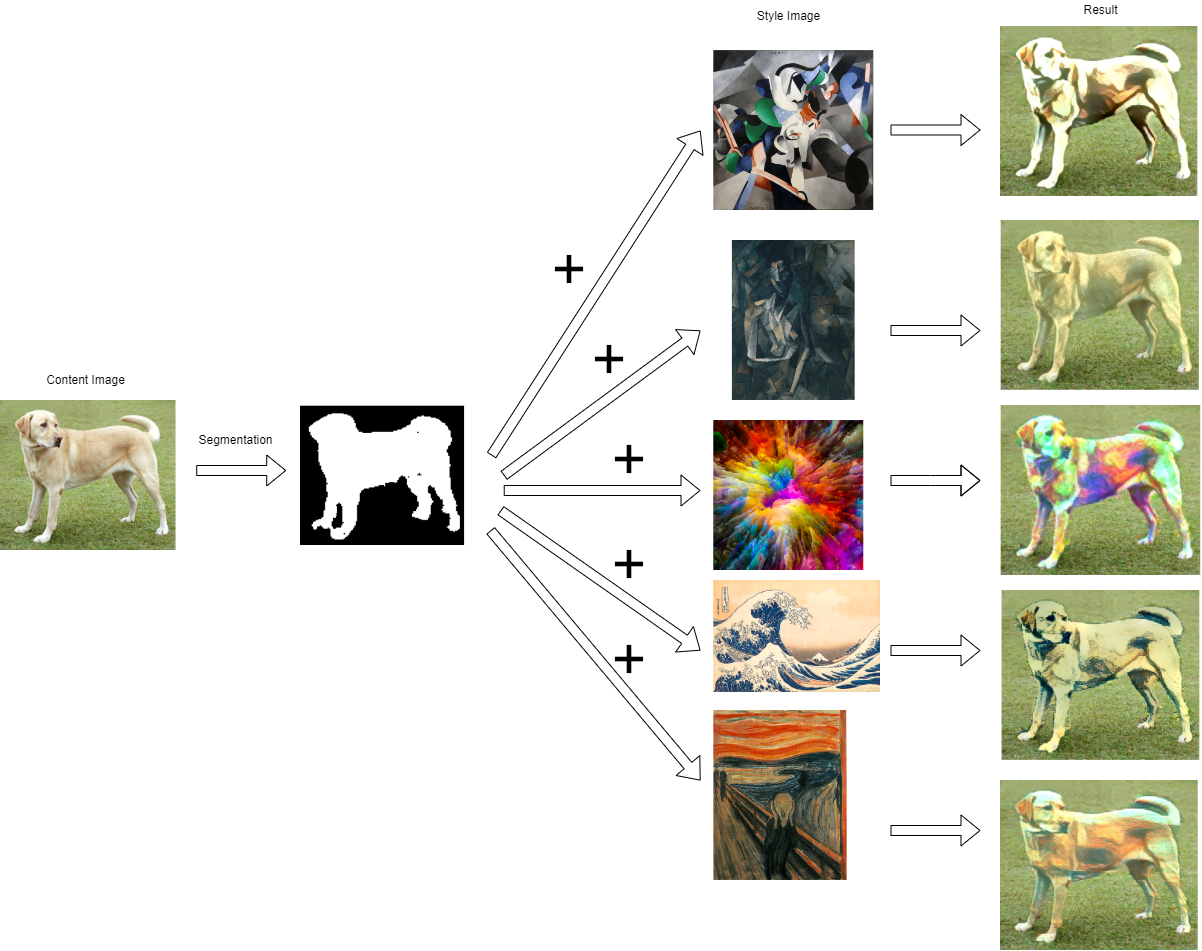

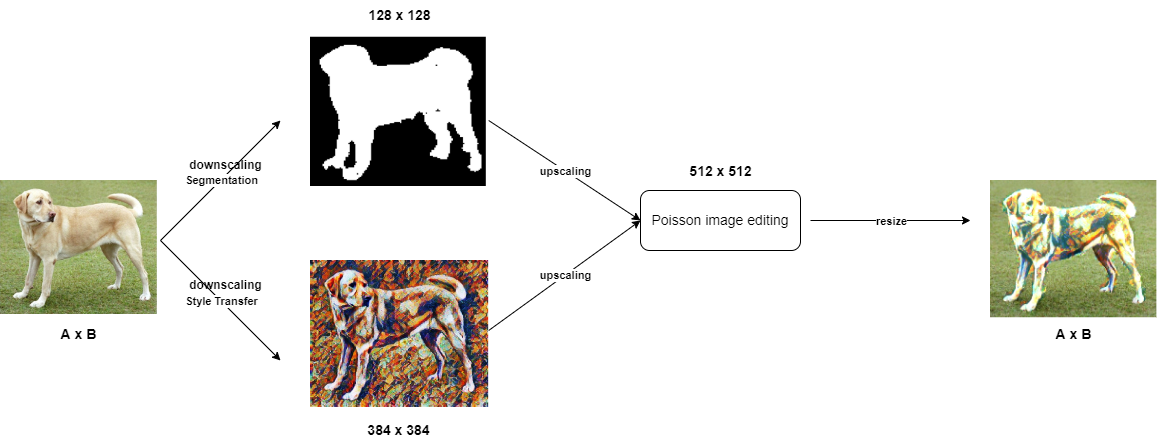

This application uses two deep learning models. The first is a segmentation model that masks out every pet that appears in the original image. The second is a style transfer model that re-renders the original image in a chosen style, such as a painting by a famous artist or a natural texture (like wood).

The images produced by these models are then combined into a single image using Poisson image editing, so that the background of the original image stays unchanged while the pets found by the segmentation model are replaced with their style-transferred versions.

This project is divided into four parts:

- Training a deep segmentation model.

- Training a deep style transfer model.

- Saving the trained models in TFLite format.

- Building an Android app:

  - The user chooses an image from the device's gallery, captures one with the device's camera, or uses the pre-defined sample image.

  - The saved TFLite segmentation model masks out every pet that appears in the image.

  - The saved TFLite style transfer model transforms the style of the content image to the desired style. The user can choose one of the 10 pre-defined styles or import a new style from the device's gallery.

  - The images obtained from these models are combined using Poisson image editing.

  - The resulting image is saved to the device's storage.

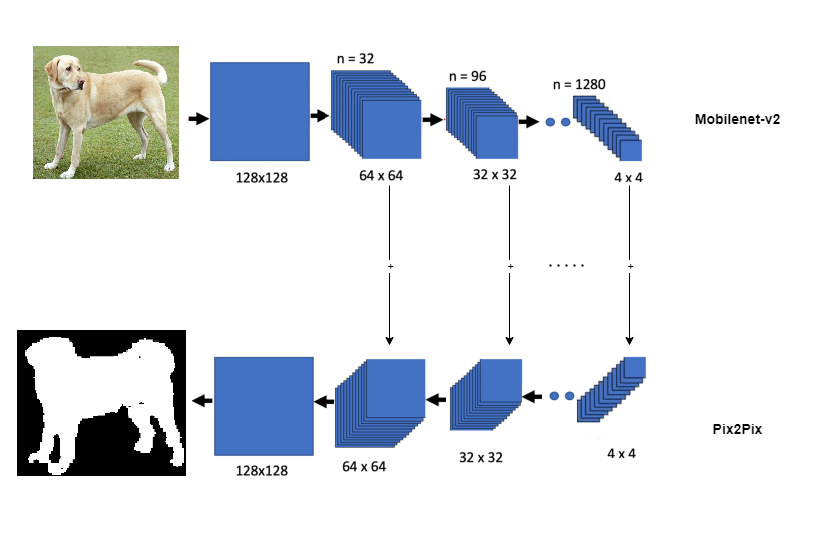

I used a modified U-Net model for the segmentation task. U-Net is an encoder-decoder network: in its first half, an encoder transforms the original image into a compact feature representation, and in its second half, a decoder transforms the encoder's output into the target data. For training, I used the Oxford-IIIT Pet Dataset.

For the encoder, I used the MobileNetV2 architecture, and for the decoder, the upsample blocks from the pix2pix model. During training, the encoder was frozen and only the weights of the decoder were updated. The resulting model can be seen in the following image.

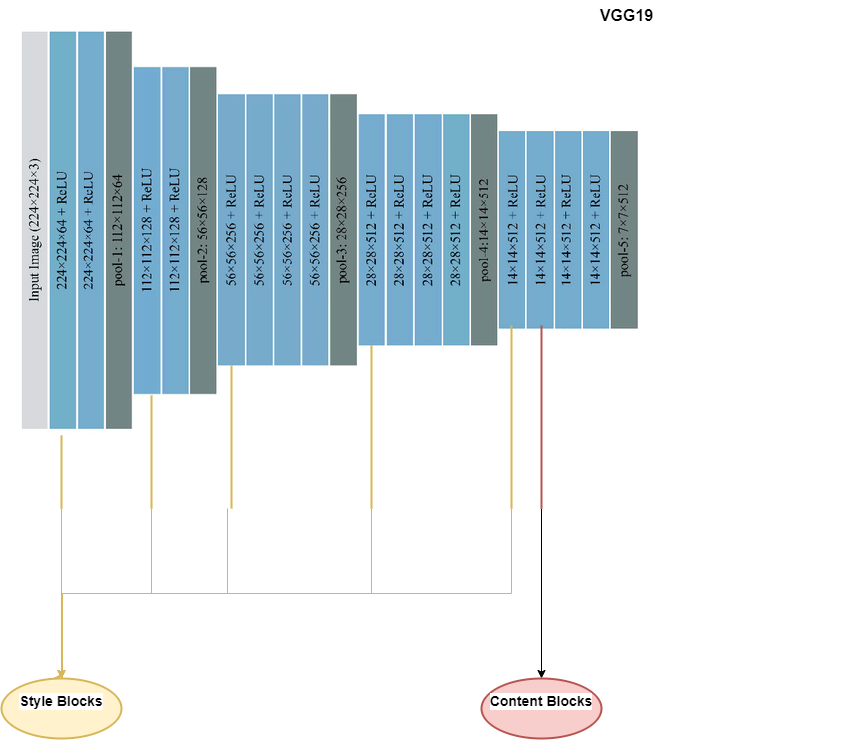
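As a rough illustration of how a segmentation output becomes a pet mask, the sketch below takes per-pixel class scores and keeps the pixels whose highest-scoring class is "pet". The three-class layout and its order (`PET`, `BACKGROUND`, `OUTLINE`) are assumptions made for this example and should be checked against the actual model output; the function name `scores_to_mask` is hypothetical.

```python
# Assumed class order, for illustration only; verify against the trained model.
PET, BACKGROUND, OUTLINE = 0, 1, 2


def scores_to_mask(scores, include_outline=False):
    """Turn per-pixel class scores (H x W x 3 nested lists) into a 0/1 pet mask."""
    wanted = {PET, OUTLINE} if include_outline else {PET}
    mask = []
    for row in scores:
        mask_row = []
        for pixel in row:
            # argmax over the class scores of this pixel
            label = max(range(len(pixel)), key=lambda c: pixel[c])
            mask_row.append(1 if label in wanted else 0)
        mask.append(mask_row)
    return mask


# A tiny 2 x 2 "image" of made-up scores.
scores = [
    [[2.0, 0.1, 0.3], [0.2, 1.5, 0.1]],
    [[0.1, 0.2, 0.9], [0.0, 3.0, 0.5]],
]
print(scores_to_mask(scores))                        # [[1, 0], [0, 0]]
print(scores_to_mask(scores, include_outline=True))  # [[1, 0], [1, 0]]
```

Whether outline pixels should count as part of the pet is a design choice; including them gives a slightly larger region for the later blending step.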

For the style transfer model, I used a pre-trained VGG-19 as the base network into which the content and style images are fed. Some blocks of this network are used to represent the style image and others to represent the content image. The outputs of these blocks are gathered into a single representation covering both style and content. The loss is then computed as the mean squared error between the model's output and each target, and the style and content losses are combined as a weighted sum. Finally, using gradient descent, the gradients of this loss are applied to the image. The resulting model can be seen in the following image.
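The weighted loss described above can be sketched in plain Python. This is a simplified illustration, not the project's training code: it assumes the style representation is a Gram matrix of channel activations (a common choice that the text above does not state explicitly), and the names `gram_matrix`, `mse`, `total_loss`, `content_weight`, and `style_weight` are hypothetical.

```python
def gram_matrix(features):
    """features: list of C channels, each a flat list of N activations.

    Returns the C x C matrix of channel correlations averaged over positions.
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
            for fi in features]


def mse(a, b):
    """Mean squared error between two equally shaped 2-D lists."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def total_loss(content_feats, content_target, style_feats, style_target,
               content_weight=1.0, style_weight=1.0):
    """Weighted sum of the content loss and the (assumed Gram-based) style loss."""
    content_loss = mse(content_feats, content_target)
    style_loss = mse(gram_matrix(style_feats), gram_matrix(style_target))
    return content_weight * content_loss + style_weight * style_loss


# Made-up activations: 2 channels, 2 spatial positions each.
feats = [[1.0, 2.0], [0.0, 1.0]]
print(gram_matrix(feats))                       # [[2.5, 1.0], [1.0, 0.5]]
print(total_loss(feats, feats, feats, feats))   # 0.0 when image matches both targets
```

In an actual optimization loop, the features would come from the chosen VGG-19 blocks and the gradient of this loss with respect to the image pixels would drive each update step.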

To blend the images obtained from these models, I used Poisson image editing, a gradient-domain image processing technique that operates on the differences between neighboring pixels rather than on the pixel values directly. In gradient-domain methods, one fixes the colors of the boundary (taken from the background image) and provides a vector field that defines the structure of the image to be copied (taken from the foreground and/or a mixture of foreground and background). The resulting image is generated by minimizing the squared error between its gradient and the guidance vector field.
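To make the idea concrete, here is a minimal one-dimensional sketch of the same principle. It is not the app's implementation (which lives in the native `SeamlessBlending` code); it assumes the guidance field is simply the gradient of the foreground, and it solves the resulting discrete Poisson equation with plain Gauss-Seidel sweeps. The function name `poisson_blend_1d` and its parameters are hypothetical.

```python
def poisson_blend_1d(background, foreground, start, end, iterations=2000):
    """Blend foreground[start:end] into background in the gradient domain.

    Pixels outside [start, end) keep their background value, which acts as
    the fixed boundary condition.
    """
    if start < 1 or end > len(background) - 1:
        raise ValueError("region must leave one boundary pixel on each side")
    result = list(background)
    # Laplacian of the foreground: the divergence of the guidance field.
    lap = {i: foreground[i - 1] - 2 * foreground[i] + foreground[i + 1]
           for i in range(start, end)}
    for _ in range(iterations):
        for i in range(start, end):
            # Enforce result[i-1] - 2*result[i] + result[i+1] == lap[i].
            result[i] = (result[i - 1] + result[i + 1] - lap[i]) / 2
    return result


background = [10.0] * 7
foreground = [0.0, 1.0, 3.0, 6.0, 3.0, 1.0, 0.0]
blended = poisson_blend_1d(background, foreground, start=1, end=6)
# Approximately [10, 11, 13, 16, 13, 11, 10]: the foreground's shape is kept,
# shifted so that it meets the background values at both ends.
print([round(v, 3) for v in blended])
```

The inner update touches one pixel at a time using its neighbors' latest values, which is simple but sequential; a 2-D version would apply the same update with four neighbors per pixel.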

The complete workflow is demonstrated in the following diagram.

├── app

│ ├── src

│ │ ├── main

│ │ │ ├── cpp

│ │ │ │ ├── SeamlessBlending

│ │ │ │ ├── CMakeLists.txt

│ │ │ │ └── native-lib.cpp

│ │ │ ├── ml

│ │ │ │ ├── segmentor.tflite

│ │ │ │ ├── stylemodel.tflite

│ │ │ │ └── transformer.tflite

│ │ │ ├── res

│ │ │ │ └── drawables

│ │ │ ├── java

│ │ │ │ ├── LoadingDialog.java

│ │ │ │ ├── MainActivity.java

│ └── └── └── └── TransformerActivity.java

├── LICENSE

├── build.gradle

├── settings.gradle

├── .gitignore

└── README.md

- Parallelize the Poisson image editing process in order to speed up the application.

- Extend the segmentation model to mask out various types of objects.

- Build and train a deep neural network to blend the resulting images, for faster and more accurate results.

If you find a bug, kindly open an issue here and include your inputs and the expected result.

If you'd like to request a new feature, feel free to open an issue here. Please include sample inputs and their corresponding results.