Hackeando el Pensamiento: Traduciendo Señales Eléctricas del Cerebro en Acciones a través de Modelos LLM

"Hackeando el Pensamiento: Traduciendo Señales Eléctricas del Cerebro en Acciones a través de Modelos LLM" ("Hacking Thought: Translating the Brain's Electrical Signals into Actions through LLMs") is a talk presented at PyCon 2024. The session, led by Adonai Vera from Subterra AI, sits at the intersection of neuroscience and machine learning and explores how brainwaves can be used to drive actions through Large Language Models (LLMs).

View the full presentation on "Hackeando el Pensamiento: Traduciendo Señales Eléctricas del Cerebro en Acciones a través de Modelos LLM" by clicking the image below:

- Introduction to Brainwaves: Understanding the electrical impulses generated by the brain.

- History of EEG Technology: Tracing the advancements in brain monitoring.

- Neurosity and Real-Time EEG Monitoring: Insights into modern EEG technology and its capabilities.

- Practical Applications: From everyday activities to complex BCI (Brain-Computer Interface) tasks.

- Live Demonstrations: Real-time interaction with GPT-4, translating brain signals into actionable outputs.

- Conclusions and Future Steps: How this technology will evolve and its potential future impacts.

To run the demonstration code provided:

- Set Up Environment:
  - Ensure Python 3.8+ is installed.
  - Install required packages: `pip install -r requirements.txt`

- Configuration:
  - Input your API keys and device IDs in the `config.py` file.

- Running the Application:
  - Execute the main script: `python main.py`
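The repository's actual `config.py` is not reproduced here, so the following is only a minimal sketch of one way such a file could be organized. The setting names `OPENAI_API_KEY` and `NEUROSITY_DEVICE_ID`, the `Settings` class, and the `load_settings` helper are all assumptions made for illustration. The sketch reads the values from environment variables so that secrets stay out of version control.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical setting names; the real config.py may use different ones.
REQUIRED = ("OPENAI_API_KEY", "NEUROSITY_DEVICE_ID")


@dataclass(frozen=True)
class Settings:
    """Container for the credentials the demo needs (illustrative only)."""
    openai_api_key: str
    neurosity_device_id: str


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Build Settings from a mapping, defaulting to the process environment."""
    env = os.environ if env is None else env
    # Collect every missing or empty value so the error names all of them at once.
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("Missing configuration values: " + ", ".join(missing))
    return Settings(
        openai_api_key=env["OPENAI_API_KEY"],
        neurosity_device_id=env["NEUROSITY_DEVICE_ID"],
    )
```

Calling `load_settings()` with no arguments reads from the shell environment. Passing a plain dictionary instead makes the function easy to exercise in tests without touching real credentials.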

- Graphs from the presentation are included to illustrate:

- The types of brainwaves and their significance.

- Real-time data analysis and interpretation through the Neurosity device.

- Visual aids help in understanding the complex dynamics of brain-to-computer communication.

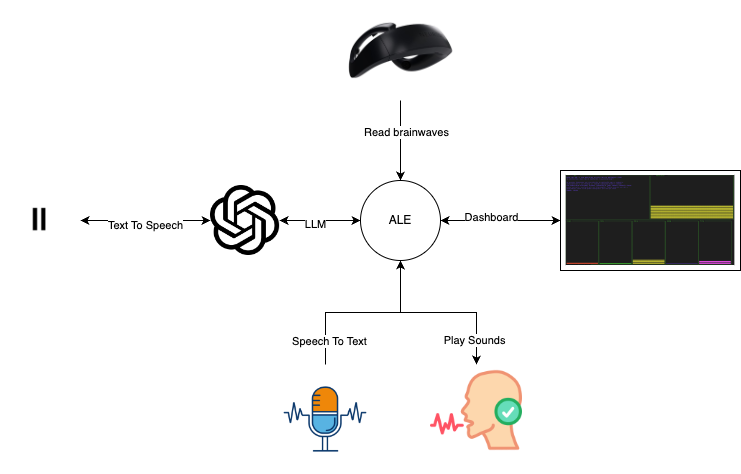
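To make the idea of brainwave types concrete, here is a rough sketch of how the power in each frequency band could be estimated from one channel of raw samples. It is not taken from the project code. The band edges are conventional approximations (exact cut-offs vary between sources), the function names are hypothetical, and a naive discrete Fourier transform from the standard library is used only to keep the example dependency-free; a real pipeline would more likely use an FFT library and proper windowing.

```python
import cmath
import math

# Conventional, approximate EEG band edges in Hz (assumed; sources differ slightly).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_powers(samples, sample_rate):
    """Sum squared DFT magnitudes per band for one channel of samples."""
    n = len(samples)
    powers = {name: 0.0 for name in BANDS}
    # Skip the DC bin (k = 0) and stay below the Nyquist frequency.
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(samples)
        )
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += abs(coeff) ** 2
    return powers


def dominant_band(samples, sample_rate):
    """Return the name of the band carrying the most power."""
    powers = band_powers(samples, sample_rate)
    return max(powers, key=powers.get)


# Synthetic one-second test signal: a pure 10 Hz sine sampled at 128 Hz.
signal = [math.sin(2 * math.pi * 10 * t / 128) for t in range(128)]
print(dominant_band(signal, 128))
```

The naive transform costs O(n²) per window, which is acceptable for a short illustration but too slow for continuous real-time use.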

- Language model. Description: Processes natural language to generate responses based on brainwave data. Usage: Translates brainwave patterns into text to enhance user-system interaction.

- Text-to-speech. Description: Converts generated text into natural-sounding audio. Usage: Provides auditory feedback, making the application accessible to visually impaired users.

- EEG device (Neurosity). Description: Advanced EEG device capturing real-time brain activity. Usage: Analyzes brainwave patterns to detect mental states like relaxation and concentration.

- Interactive dashboard. Description: Creates interactive dashboards to display real-time data. Usage: Visualizes brainwave data and system statuses interactively.

- Speech-to-text. Description: Converts spoken words into digital text. Usage: Enables voice commands and verbal interactions, enhancing usability.
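As a rough illustration of how the components described above might fit together, the sketch below maps two mental-state scores to a label and turns that label into a prompt for a language model. Everything in it is an assumption: the score range of 0 to 1, the 0.6 threshold, the function names, and the prompt wording are hypothetical, and the language model is represented by a plain callable rather than a real API client, so no vendor SDK is invoked.

```python
from typing import Callable


def classify_state(calm: float, focus: float, threshold: float = 0.6) -> str:
    """Map calm/focus scores in [0, 1] to a coarse mental-state label."""
    if focus >= threshold and focus >= calm:
        return "concentrated"
    if calm >= threshold:
        return "relaxed"
    return "neutral"


def build_prompt(state: str) -> str:
    """Turn the detected state into an instruction for the language model."""
    return (
        f"The user's EEG readings suggest a {state} mental state. "
        "Reply with one short, supportive sentence."
    )


def respond(calm: float, focus: float, ask_llm: Callable[[str], str]) -> str:
    """Classify the scores, build a prompt, and pass it to the supplied model."""
    return ask_llm(build_prompt(classify_state(calm, focus)))


# Stand-in for a real model call: simply echoes the prompt it receives.
def echo_model(prompt: str) -> str:
    return f"[model received] {prompt}"


print(respond(calm=0.8, focus=0.3, ask_llm=echo_model))
```

Injecting the model as a callable keeps the EEG logic testable on its own. In a full application the returned text could then be handed to the text-to-speech step.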

- Links to additional resources, including detailed documentation on EEG technology and the latest advancements in BCI:

- Contributions to the project are welcome. Please read through the contribution guidelines in `CONTRIBUTING.md`.

- For major changes, please open an issue first to discuss what you would like to change.

This project is licensed under the MIT License - see the LICENSE file for details.

- Adonai Vera - Computer Vision, Subterra AI - AdonaiVera

Adonai Vera, a Machine Learning Engineer at Subterra AI, is passionate about making AI technology more accessible and user-friendly. More about Adonai's work and contributions can be found on his personal website.