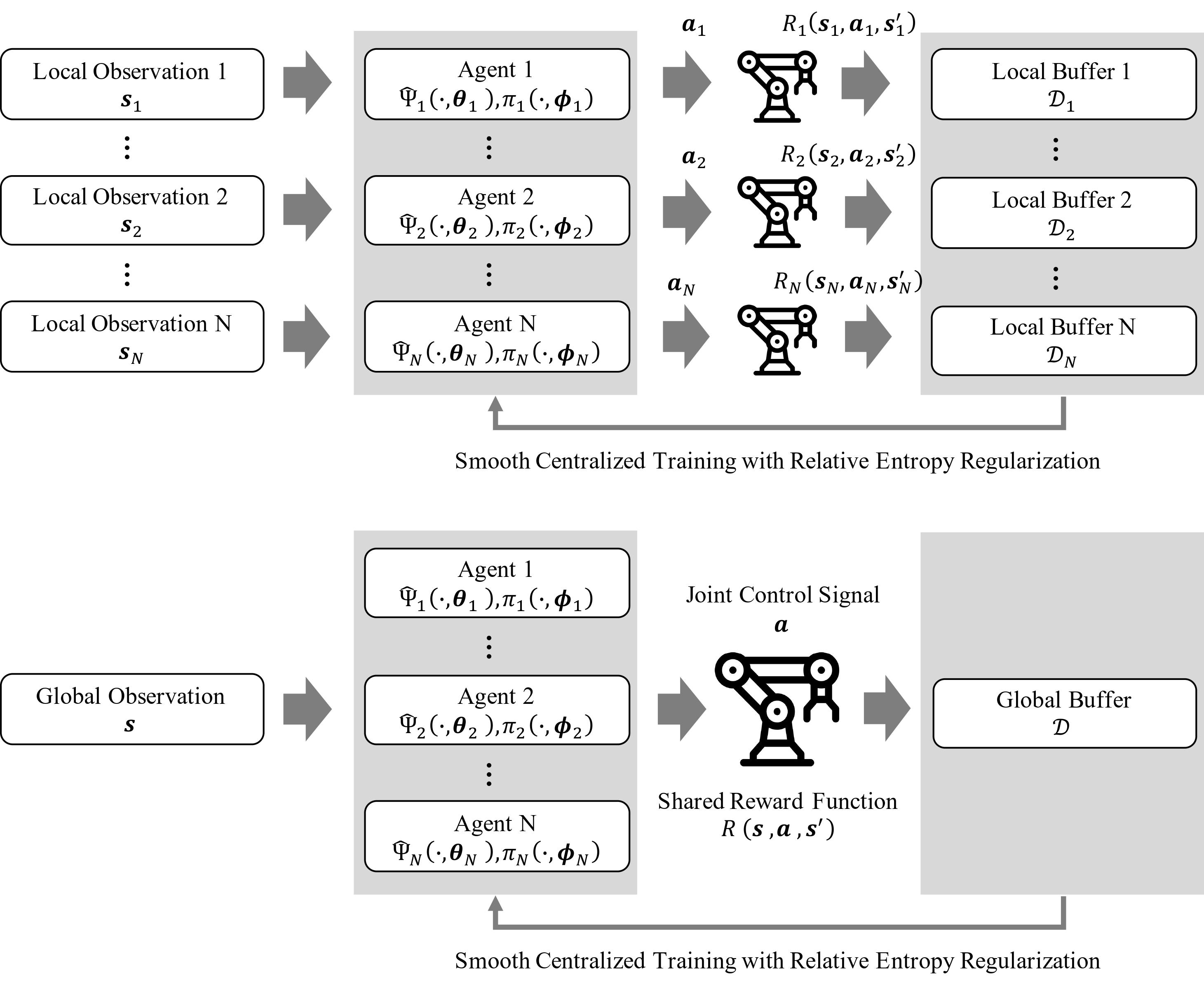

Code for the paper "Effective Multi-Agent Deep Reinforcement Learning Control with Relative Entropy Regularization".

This paper is currently under peer review at IEEE Transactions on Automation Science and Engineering (T-ASE).

Please feel free to contact us regarding the details of implementing MACDPP (Chenyang Miao: cy.miao@siat.ac.cn; Yunduan Cui: cuiyunduan@gmail.com).

You can see the performance of MACDPP vs. other RL algorithms on the UR5 task in our video on YouTube, which compares the algorithms at different stages of interaction with the environment.

We provide two versions of the MACDPP code: one for the classic MPE tasks and another for the factorization tasks.

MPE is a simple multi-agent particle world based on gym. Please see here for installation instructions and more information about the environment. For other dependencies, please refer to requirements_mpe.txt.

Robo-gym is an open-source toolkit for distributed reinforcement learning on real and simulated robots. Please see here to install this environment. For other dependencies, please refer to requirements_robo_gym.txt.

An experiment for a specific configuration can be run using:

python train.py --config

In the configs directory we provide the specific configurations for the physical_deception task and the End Effector Positioning task.
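For readers who want to see how a `--config` argument is typically consumed, the following is a minimal sketch and not the repository's actual `train.py`. The function names `parse_args` and `load_config` are hypothetical, and the JSON file format is an assumption made only for illustration; check the files in the configs directory for the real format.

```python
import argparse
import json
from pathlib import Path


def parse_args(argv=None):
    """Parse a single --config option (hypothetical launcher sketch)."""
    parser = argparse.ArgumentParser(description="Hypothetical launcher sketch")
    parser.add_argument(
        "--config",
        required=True,
        help="path to a configuration file (JSON is assumed in this sketch)",
    )
    return parser.parse_args(argv)


def load_config(path):
    """Read the configuration file and return its contents as a dict."""
    config_path = Path(path)
    if not config_path.is_file():
        raise FileNotFoundError(f"config not found: {config_path}")
    with config_path.open("r", encoding="utf-8") as handle:
        # Assumption: the file is JSON; the real project may use another format.
        return json.load(handle)
```

Failing early with `FileNotFoundError` keeps a mistyped configuration path from surfacing later as an unrelated error in the middle of training.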

We use VisualDL, the visualization analysis tool of PaddlePaddle, to visualize the experiment process.
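As a rough illustration of scalar logging with VisualDL, the sketch below writes a list of episode returns through a writer object. The helper `log_episode_returns`, the tag `train/episode_return`, the log directory, and the sample values are all assumptions for illustration and are not taken from the MACDPP code; only `LogWriter` and its `add_scalar` method come from VisualDL.

```python
def log_episode_returns(writer, returns, tag="train/episode_return"):
    """Write one scalar per episode to a VisualDL-style writer.

    `writer` is expected to expose add_scalar(tag=..., step=..., value=...),
    as visualdl.LogWriter does. Returns the number of points written.
    """
    for step, value in enumerate(returns):
        writer.add_scalar(tag=tag, step=step, value=float(value))
    return len(returns)


def demo():
    """Hypothetical usage; requires `pip install visualdl`."""
    from visualdl import LogWriter  # imported lazily so the helper has no hard dependency

    # Assumed log directory and made-up return values, for illustration only.
    with LogWriter(logdir="./log/macdpp_demo") as writer:
        log_episode_returns(writer, [-12.0, -9.5, -7.1])
```

After calling `demo()`, the logged curve can be inspected by running `visualdl --logdir ./log` and opening the address it prints.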

@misc{miao2023effective,
  title={Effective Multi-Agent Deep Reinforcement Learning Control with Relative Entropy Regularization},
  author={Chenyang Miao and Yunduan Cui and Huiyun Li and Xinyu Wu},
  year={2023},
  eprint={2309.14727},
  archivePrefix={arXiv},
  primaryClass={eess.SY}
}