PAg-NeRF: Towards fast and efficient end-to-end panoptic 3D representations for agricultural robotics

🌱 AgRobotics, Institute of Agriculture 📸 Institute of Photogrammetry

University of Bonn

PAg-NeRF uses state-of-the-art accelerated NeRFs and online pose optimization to produce 3D consistent panoptic representations of challenging agricultural environments.

Tested on Ubuntu 20.04; CUDA 11.7

- Install CUDA and cuDNN.

- Install Kaolin Wisp (release 0.1.1) for your CUDA version. This process installs PyTorch and NVIDIA's Kaolin library in a conda environment.

- Install Permutohedral Grid Encoding in the same conda environment.

Finally, install the PAg-NeRF requirements by running:

cd [path_to_pagnerf_repo]

conda activate wisp

pip install --upgrade pip

pip install -r requirements.txt
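After the installation steps above, it can help to confirm that the main dependencies are visible from the active `wisp` environment before downloading any data. The following is a minimal sketch, not part of the repository. The module names `kaolin`, `wisp` and `permutohedral_encoding` are assumptions about how the respective packages are imported, so adjust them if your installation differs.

```python
import importlib.util

# Assumed import names for PyTorch, Kaolin, Kaolin Wisp and the
# Permutohedral Grid Encoding package; these are not taken from the README.
REQUIRED_MODULES = ["torch", "kaolin", "wisp", "permutohedral_encoding"]


def find_missing(modules):
    """Return the top-level module names that cannot be located in the active environment."""
    missing = []
    for name in modules:
        # find_spec returns None when a top-level module is not installed.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All expected modules were found.")
```

Run it with `python` inside the activated conda environment. A reported missing module usually means the corresponding install step was done in a different environment.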

cd [path_to_pagnerf_repo]

conda activate wisp

bash scripts/get_bup20.sh # ~70GB

bash scripts/get_bup20_mask2former_detections.sh # ~58GB

./train.sh

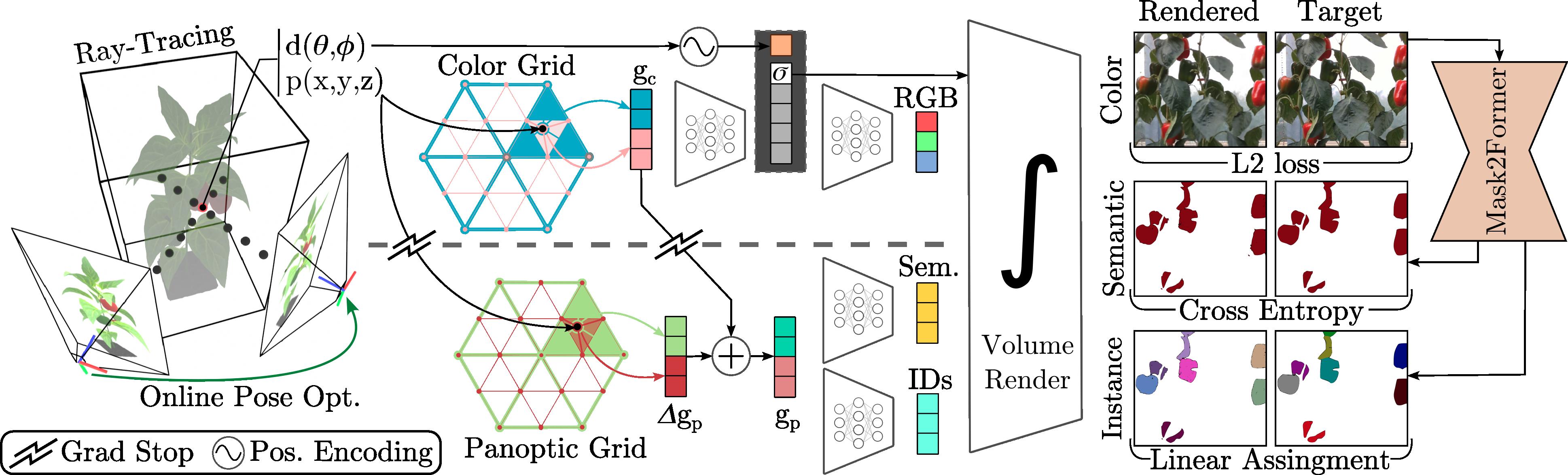

We use state-of-the-art permutohedral feature hash-grids to encode 3D space, which makes our system fast and memory efficient. Our architecture uses a novel delta grid that computes panoptic features by correcting the color features, leveraging the similarity between modalities. Thanks to the implicit sparseness of hash-grids, we are able to reduce the panoptic capacity so that it only holds valid values where corrections are needed. We avoid propagating gradients from the panoptic to the color branch to ensure the panoptic grid only learns corrections over the color features. Our grid-based architecture allows us to decode all render quantities with very shallow MLPs. To learn 3D consistent instance IDs from inconsistent ID still-image predictions, we employ a modified linear assignment loss that rejects repeated IDs, tackling the fixed-scale nature of several agricultural datasets. To obtain high-detail multi-view consistent renders, we also perform online pose optimization, making our system end-to-end trainable.
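To illustrate the idea behind a linear assignment between rendered instances and per-image instance predictions, here is a minimal sketch in plain Python. It shows only a standard one-to-one matching that maximizes total IoU. It is not the modified loss used in PAg-NeRF, and the function names, the mask representation (sets of pixel indices) and the brute-force search are all assumptions made for illustration. A practical implementation would more likely use a Hungarian solver such as `scipy.optimize.linear_sum_assignment`.

```python
from itertools import permutations


def iou(mask_a, mask_b):
    """Intersection over union of two masks given as sets of pixel indices."""
    union = len(mask_a | mask_b)
    return len(mask_a & mask_b) / union if union else 0.0


def match_instances(rendered, predicted):
    """Match each predicted ID to a distinct rendered ID, maximizing total IoU.

    rendered and predicted map instance IDs to pixel-index sets.
    Brute force over permutations, so only suitable for a handful of
    instances; assumes len(predicted) <= len(rendered).
    """
    pred_ids = list(predicted)
    rend_ids = list(rendered)
    best_score, best_match = -1.0, {}
    for perm in permutations(rend_ids, len(pred_ids)):
        score = sum(iou(rendered[r], predicted[p]) for p, r in zip(pred_ids, perm))
        if score > best_score:
            best_score, best_match = score, dict(zip(pred_ids, perm))
    return best_match


# Hypothetical toy data: the image-level IDs (7, 9) are arbitrary, while
# the rendered IDs (0, 1) play the role of the consistent 3D instance IDs.
rendered = {0: {1, 2, 3}, 1: {10, 11}}
predicted = {7: {10, 11, 12}, 9: {1, 2}}
matches = match_instances(rendered, predicted)
```

In this toy case the per-image IDs 7 and 9 are mapped to the rendered IDs 1 and 0, since those pairs overlap most. Because the matching is one-to-one, two predictions in the same image can never share a rendered ID.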

@article{smitt2023pag,

title={PAg-NeRF: Towards fast and efficient end-to-end panoptic 3D representations for agricultural robotics},

author={Smitt, Claus and Halstead, Michael and Zimmer, Patrick and Laebe, Thomas and Guclu, Esra and Stachniss, Cyrill and McCool, Chris},

journal={arXiv preprint arXiv:2309.05339},

year={2023}

}