By The Inventors #20131 Angelos Theodoridis(GitHub)

This program is for testing purposes only and is not legal to use during a match. It's a powerful way to tune the PIDF Feedforward values for your robot. It saves a lot of time and is also really accurate if it is set up well.

Note: This is a default program. If you are planning to use it for your own robot and field, you might need to change some parts of the code to suit your needs.

We are open to suggestions and feedback so feel free to contact us.


Soooooooooo...

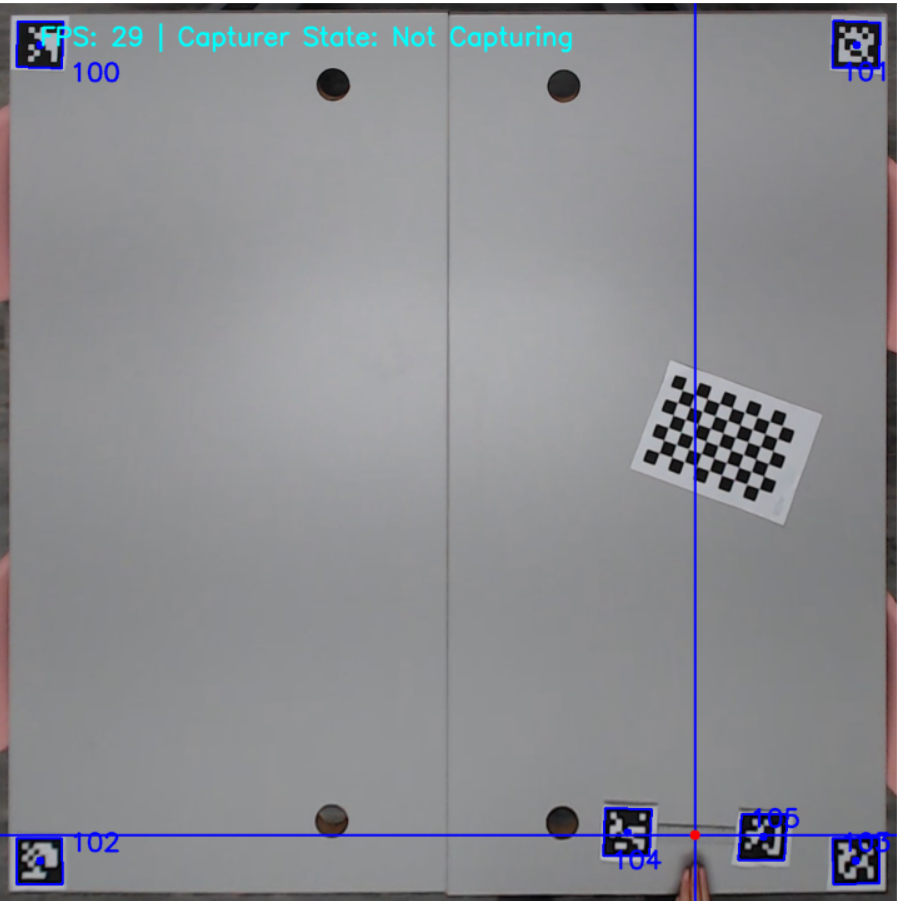

Mount a Camera, which you can use through your computer, on the ceiling facing down and make sure that the field is completely visible from the camera.

- Find some AprilTags on the internet that are part of the 36h11 family, with known IDs, and print them on a piece of paper (there are 10 example tags in the imgs/apriltags directory).

- Trace out the tags and cut them out with a pair of scissors. Attention: make sure that you leave some padding of white paper around the tags!

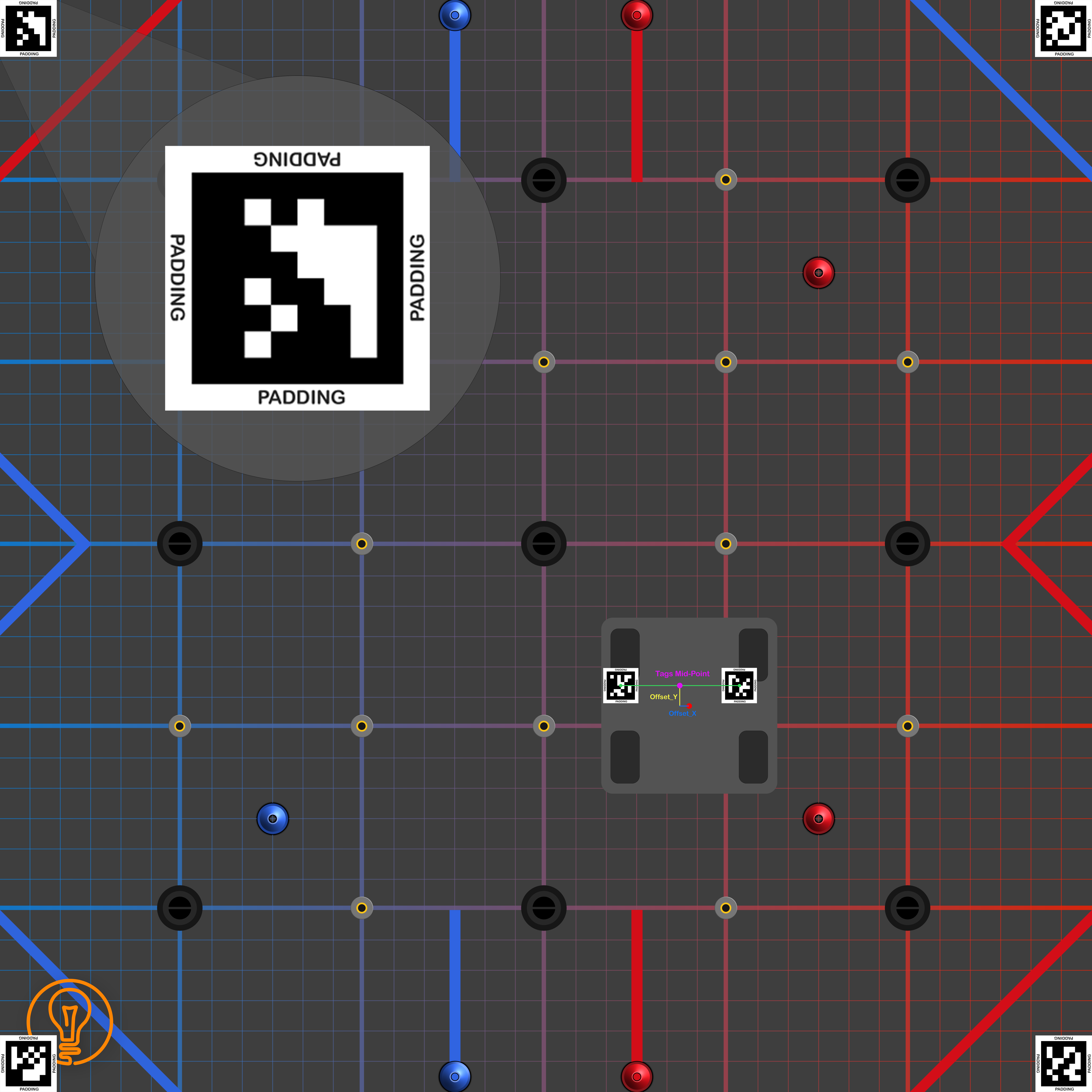

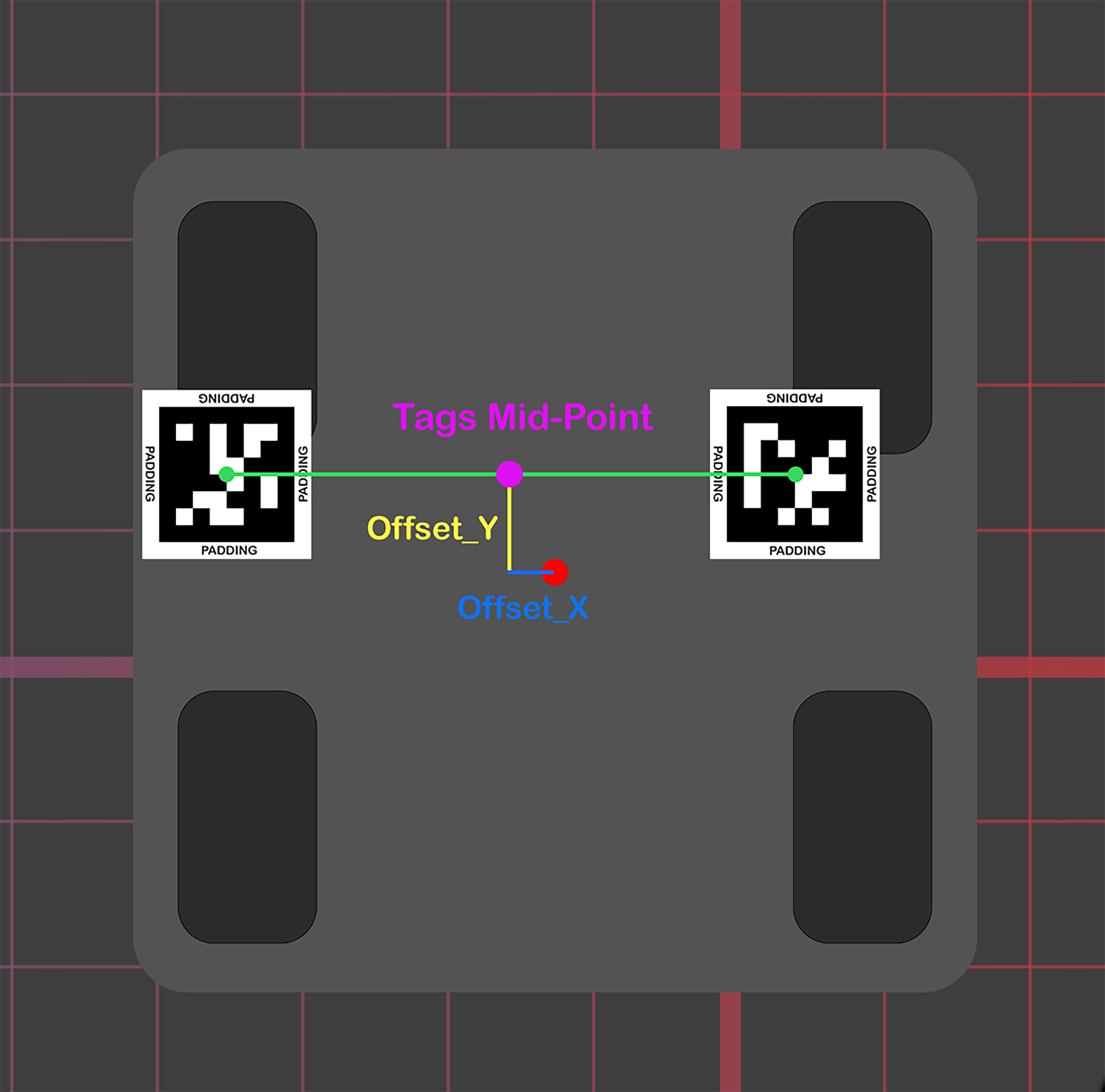

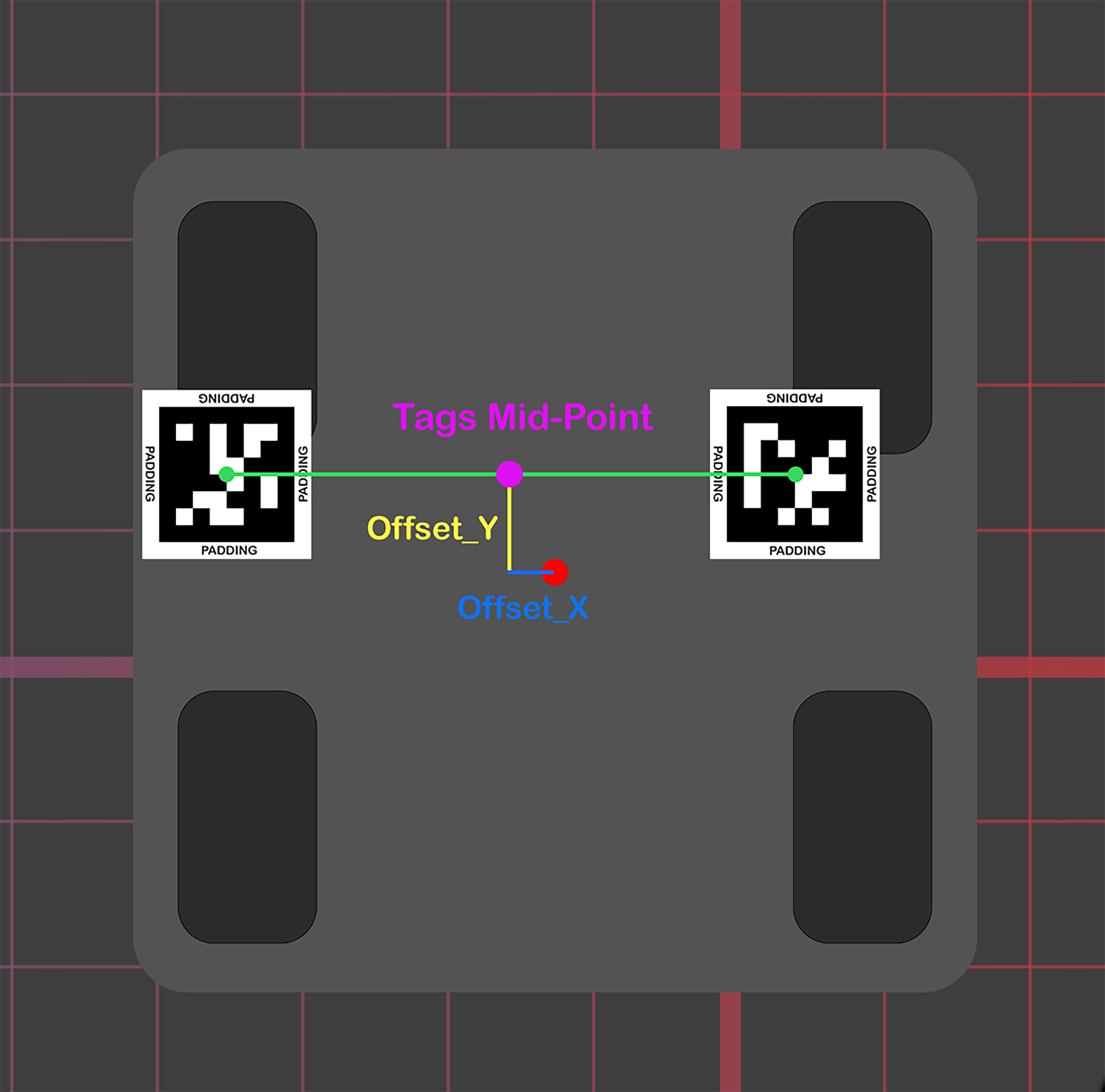

The next step is to place the tags on the field and on the robot. Place 4 tags on the field, one in each corner. Then place 2 tags on top of the robot. Also, measure the offset of the tags' mid-point from the center of the robot (take a look at the 2 pictures below).


Note:

- You can place a single tag on the robot, but then the heading calculation won't be available, so we recommend 2.

- You might have to play around with the sizes of the tags so that they are visible and detectable from the camera.
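To illustrate why two robot tags are needed for the heading, here is a minimal sketch, not the Localizer's actual code. It assumes the two tag centers are already known in field coordinates and that one tag sits toward the front of the robot and the other toward the back. The names `heading_from_tags`, `front_xy` and `back_xy` are hypothetical.

```python
import math


def heading_from_tags(front_xy, back_xy):
    """Return the heading in radians of the vector from the back tag to the front tag.

    Both arguments are (x, y) tag centers in field coordinates.
    The front/back arrangement is an assumption made for this sketch.
    """
    dx = front_xy[0] - back_xy[0]
    dy = front_xy[1] - back_xy[1]
    # atan2 handles all four quadrants and returns a value in (-pi, pi]
    return math.atan2(dy, dx)


# Hypothetical tag centers: the front tag is directly "above" the back tag.
front = (1.0, 2.0)
back = (1.0, 1.0)
theta = heading_from_tags(front, back)
print(math.degrees(theta))
```

With a single tag there is only one point, so no direction vector can be formed this way, which is why the heading is unavailable in that setup.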

You will also need to gather some information in order to input it to the program. The info is the following:

- All tag IDs (specify these in the contants.json file)

- The tags' mid-point offset from the center of the robot (this should be specified in the init method of the Localizer class)
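As a rough sketch of how the measured mid-point offset could be used, the snippet below recovers the robot center from the tags' mid-point and the heading. It is an illustration under assumptions, not the Localizer's implementation: the function name `robot_center`, the robot-frame convention (x forward, y left) and the example numbers are all hypothetical.

```python
import math


def robot_center(tag_midpoint_xy, heading_rad, offset_xy):
    """Estimate the robot center in field coordinates.

    tag_midpoint_xy: mid-point of the robot tags in field coordinates.
    heading_rad:     robot heading in radians.
    offset_xy:       vector from the robot center to the tag mid-point,
                     measured in the robot frame (assumed x forward, y left).
    """
    ox, oy = offset_xy
    cos_t = math.cos(heading_rad)
    sin_t = math.sin(heading_rad)
    # Rotate the robot-frame offset into the field frame
    fx = ox * cos_t - oy * sin_t
    fy = ox * sin_t + oy * cos_t
    # Subtract it from the tag mid-point to get back to the robot center
    return (tag_midpoint_xy[0] - fx, tag_midpoint_xy[1] - fy)


# Hypothetical values: tags mounted 0.1 units ahead of the robot center,
# robot facing along the +y axis of the field.
center = robot_center((1.0, 1.0), math.pi / 2, (0.1, 0.0))
print(center)
```

If the tags are mounted exactly over the robot center, the offset is (0, 0) and the mid-point is used directly.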

Extract the .zip file wherever you would like to store it.

Open the command line/terminal in your repo/folder and run the following command:

pip install -r requirements.txt

- Open Anaconda Prompt (we suggest that you create a new environment only for the Robot-Autotuner)

- Locate the repo/folder

cd [PATH]

- Then run the following command:

conda install -r requirements.txt

In case the environment has an error solving it:

- Activate the Robot-Autotuner environment by running

conda activate [ROBOT_AUTOTUNER_ENV_NAME]

- Locate your repo/folder if you haven't already

- Then run the following command:

pip install -r requirements.txt

In order to keep the code simple and organized, the whole program is divided into classes:

- Apriltagging - This class is responsible for the AprilTag detection.

- Localizer - This class is responsible for localizing the robot inside the field and calculating some kinematics such as pose (x, y, θ) and velocity.

- Path Capturer - This class continuously captures the robot's position into a series of poses to make a path.

- Datalogger - Cooperates with Path Capturer to log/save the robot's pose together with a timestamp in a csv file so we can analyze it later on.

- Visualizer - Takes a path as an input and visualizes it in a 2D plane using the MatPlotLib Python library.

- Path Evaluator - Contains some useful functions for analyzing a path, such as Path Interpolation, Path Linearity and Start vs Stop Pose Difference.

- Video Stream - A class that runs in parallel with the main loop of the program and uses a frame buffering algorithm to make video capturing/streaming much faster.

- Camera Calibrator - A class that calibrates the camera for fish-eye distortion as well as perspective misalignments.
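To give a feel for the kind of analysis the Path Evaluator performs, here is a small sketch of two such metrics on a path stored as a list of (x, y, θ) poses. It is not the project's implementation. The function names are hypothetical, and defining linearity as straight-line distance divided by travelled distance is an assumption made for this example.

```python
import math


def start_stop_difference(path):
    """Return (dx, dy, dtheta) between the first and last pose of the path."""
    x0, y0, t0 = path[0]
    x1, y1, t1 = path[-1]
    # Wrap the heading difference into [-pi, pi]
    dtheta = math.atan2(math.sin(t1 - t0), math.cos(t1 - t0))
    return (x1 - x0, y1 - y0, dtheta)


def path_length(path):
    """Sum of the distances between consecutive poses."""
    return sum(
        math.hypot(b[0] - a[0], b[1] - a[1]) for a, b in zip(path, path[1:])
    )


def linearity(path):
    """Ratio of straight-line distance to travelled distance (1.0 = straight)."""
    length = path_length(path)
    if length == 0:
        return 1.0  # a path that never moves is treated as trivially straight
    chord = math.hypot(path[-1][0] - path[0][0], path[-1][1] - path[0][1])
    return chord / length


# Hypothetical captured paths: one straight, one L-shaped.
straight = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.1)]
l_shaped = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]

print(start_stop_difference(straight))
print(linearity(straight), linearity(l_shaped))
```

A robot commanded to drive straight and return to its start should ideally give a linearity near 1.0 on each leg and a start vs stop difference near zero, so deviations from those values hint at how far the tuning is off.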

The Project/File Structure looks something like this:

The Documentation is in a separate Markdown and you can find it here