A comprehensive system for collecting, analyzing, and summarizing Arabic legal cases using fine-tuned Large Language Models (LLMs) and knowledge graph techniques.

- Overview

- System Architecture

- Key Features

- Prerequisites

- Installation

- Usage Pipeline

- Project Structure

- Performance

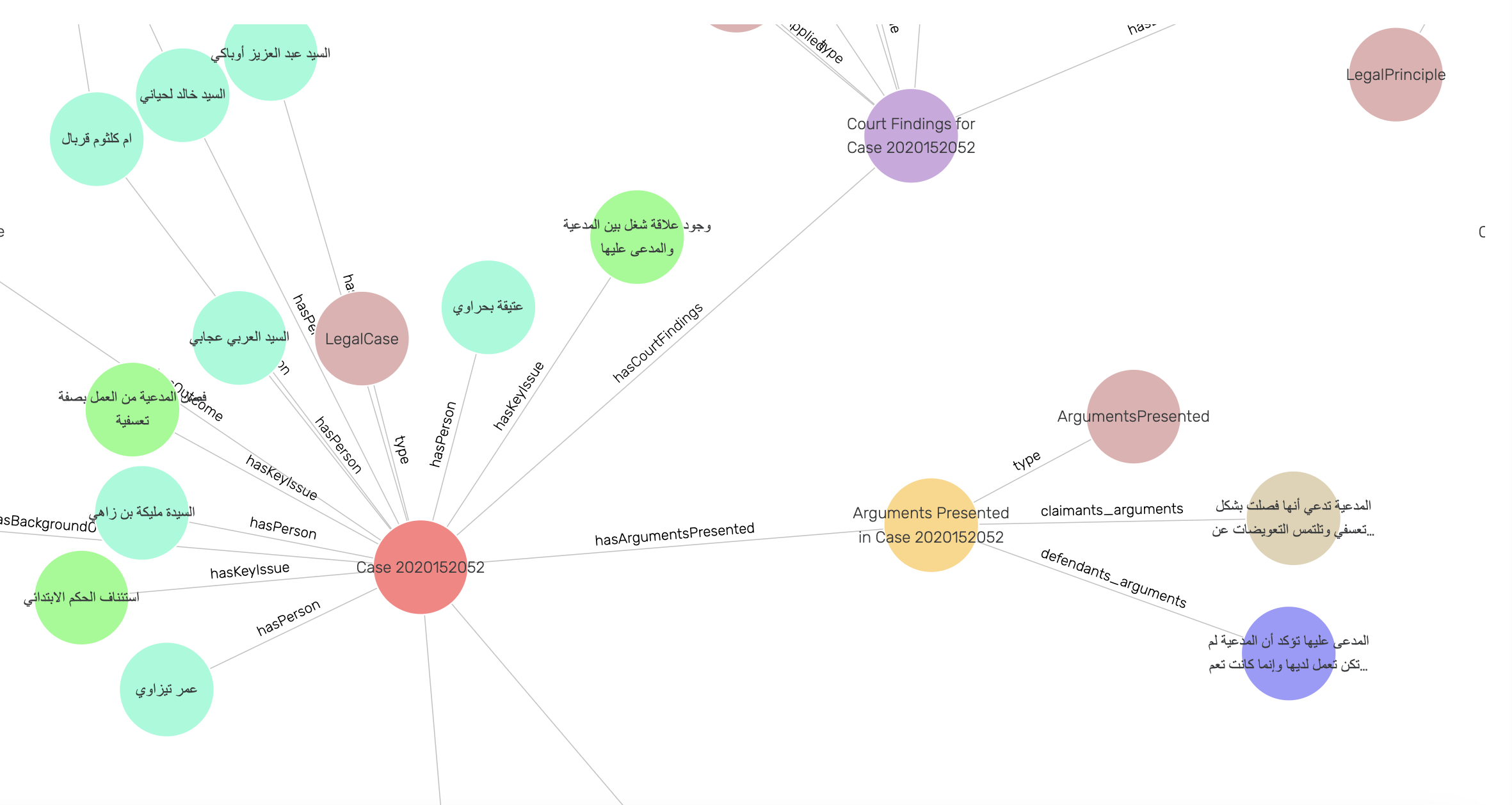

- Created Knowledge Graph

This project provides an end-to-end pipeline for processing legal cases, from data collection to serving structured summaries through an API. It uses a fine-tuned LLaMA model to generate comprehensive case summaries and builds a knowledge graph for advanced legal analysis.

The fine-tuned model is publicly available on the Hugging Face Hub: ahmadsakor/Llama3.2-3B-Instruct-Legal-Summarization
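For quick experiments outside the inference API, the published model can presumably be loaded with 🤗 Transformers. The sketch below is an assumption, not the project's own inference code: the system prompt in the hypothetical `build_messages` helper may differ from the instruction format used during fine-tuning, and `device_map="auto"` requires the `accelerate` package.

```python
# Minimal sketch, assuming the standard Transformers chat-template workflow.
MODEL_ID = "ahmadsakor/Llama3.2-3B-Instruct-Legal-Summarization"


def build_messages(case_text: str) -> list[dict[str, str]]:
    """Hypothetical prompt: the instruction used during fine-tuning may differ."""
    return [
        {"role": "system", "content": "Summarize the following legal case as JSON."},
        {"role": "user", "content": case_text},
    ]


def summarize(case_text: str, max_new_tokens: int = 1024) -> str:
    # Imported lazily so build_messages() works without these dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    inputs = tokenizer.apply_chat_template(
        build_messages(case_text),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Decode only the newly generated tokens, skipping the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Greedy decoding (`do_sample=False`) is chosen here so repeated runs on the same case give the same summary; adjust generation settings as needed.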

The system consists of five main components:

- Data Collection & Preparation: Automated crawling and OCR processing of legal documents

- Model Fine-tuning: Custom LLaMA model adaptation for legal summarization

- Evaluation Framework: Comprehensive metrics for model assessment

- Knowledge Graph: RDF-based graph database for legal case analysis

- Inference API: FastAPI service for generating case summaries
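Once the graph is loaded into GraphDB, it can be queried over the standard SPARQL protocol, which GraphDB exposes at `/repositories/<repository-id>` (port 7200 by default). The sketch below uses only the Python standard library. The repository ID, the `ex:` namespace, and the `ex:LegalCase` / `ex:decidedBy` terms are hypothetical placeholders; the project's actual RDF schema presumably lives in the knowledge_graph directory.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical values: adjust to your GraphDB installation.
GRAPHDB_URL = "http://localhost:7200"
REPOSITORY = "legal-cases"  # hypothetical repository ID

# Hypothetical vocabulary: the project's real RDF schema may differ.
QUERY = """
PREFIX ex: <http://example.org/legal#>
SELECT ?case ?court WHERE {
  ?case a ex:LegalCase ;
        ex:decidedBy ?court .
}
LIMIT 10
"""


def build_sparql_request(query: str, base_url: str = GRAPHDB_URL,
                         repo: str = REPOSITORY) -> urllib.request.Request:
    """Build a SPARQL protocol GET request against a GraphDB repository."""
    params = urllib.parse.urlencode({"query": query})
    url = f"{base_url}/repositories/{urllib.parse.quote(repo)}?{params}"
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+json"}
    )


def parse_bindings(payload: dict) -> list[dict[str, str]]:
    """Flatten SPARQL JSON results into a list of {variable: value} dicts."""
    return [
        {var: binding[var]["value"] for var in binding}
        for binding in payload["results"]["bindings"]
    ]


def run_query(query: str = QUERY) -> list[dict[str, str]]:
    """Send the query to a running GraphDB instance and return flat rows."""
    with urllib.request.urlopen(build_sparql_request(query)) as response:
        return parse_bindings(json.load(response))
```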

- Automated legal case collection from the Moroccan Judicial Portal

- Advanced OCR processing with error correction

- Fine-tuned LLaMA model for Arabic legal text

- Comprehensive evaluation framework

- RDF-based knowledge graph

- FastAPI-based inference service

- Multi-language support (Arabic/English)
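A client for the inference service might look like the sketch below. The route (`/summarize`), the payload field (`text`), and the response shape are hypothetical; the real schema is defined by the FastAPI app in the inference directory, and port 8000 is only the usual uvicorn default. `ensure_ascii=False` keeps Arabic text readable in the request body.

```python
import json
import urllib.request

# Hypothetical endpoint: check the inference/ service for the actual route and schema.
API_URL = "http://localhost:8000/summarize"


def build_summary_request(case_text: str, url: str = API_URL) -> urllib.request.Request:
    """Build a JSON POST request carrying the case text (hypothetical schema)."""
    body = json.dumps({"text": case_text}, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def request_summary(case_text: str, url: str = API_URL) -> dict:
    """Send the case to a running service and return the decoded JSON response."""
    # Generous timeout: LLM generation on long cases can take a while.
    with urllib.request.urlopen(build_summary_request(case_text, url), timeout=300) as response:
        return json.load(response)
```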

- Python 3.10 or higher

- CUDA-capable GPU (12GB+ VRAM recommended)

- 32GB+ RAM recommended

- 50GB+ storage space

- Internet connection

- GraphDB instance (for knowledge graph)
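The hardware and software prerequisites above can be checked with a small script such as the following sketch. It is not part of the repository; the thresholds mirror the list above, the RAM check relies on `os.sysconf` (available on Linux and most Unix systems, not on Windows), and the GPU check assumes PyTorch is installed.

```python
import os
import shutil
import sys
from typing import Optional

MIN_PYTHON = (3, 10)
MIN_VRAM_GB = 12
MIN_RAM_GB = 32
MIN_FREE_DISK_GB = 50


def check_python(version_info=sys.version_info) -> bool:
    """True if the interpreter is at least Python 3.10."""
    return tuple(version_info[:2]) >= MIN_PYTHON


def free_disk_gb(path: str = ".") -> float:
    """Free space on the filesystem containing `path`, in GiB."""
    return shutil.disk_usage(path).free / 1024**3


def total_ram_gb() -> Optional[float]:
    """Total physical memory in GiB, or None where os.sysconf is unsupported."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024**3
    except (AttributeError, ValueError, OSError):
        return None


def gpu_vram_gb() -> Optional[float]:
    """Total VRAM of GPU 0 in GiB, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / 1024**3


def main() -> None:
    print(f"Python >= 3.10: {check_python()}")
    print(f"Free disk: {free_disk_gb():.1f} GiB (recommended {MIN_FREE_DISK_GB}+)")
    ram = total_ram_gb()
    print(f"RAM: {'unknown' if ram is None else f'{ram:.1f} GiB'} (recommended {MIN_RAM_GB}+)")
    vram = gpu_vram_gb()
    print(f"GPU VRAM: {'no CUDA GPU detected' if vram is None else f'{vram:.1f} GiB'}"
          f" (recommended {MIN_VRAM_GB}+)")


if __name__ == "__main__":
    main()
```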

Each component has its own dependencies. To set up the complete system:

- Clone the repository:

git clone [repository-url]

cd [repository-name]

- Follow the instructions in each component's directory.

- Data Collection & Preparation

- Model Fine-tuning

- Model Evaluation

- Knowledge Graph Creation

- Deploy Inference API

├── fetch_data/ # Data collection and processing

├── fine_tuning/ # Model training and adaptation

├── evaluation/ # Performance assessment

├── knowledge_graph/ # Graph database creation

├── inference/ # API service

├── data/ # Data storage

└── logs/ # System logs

Compared with the base model, the fine-tuned model achieves the following results:

- Court Information: +4.58% BERTScore F1

- Legal Principles: +10.48% BERTScore F1

- Final Decision: +9.27% BERTScore F1

- JSON Validity: 99% accuracy

Full evaluation metrics are available in the evaluation/ directory.
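The JSON validity figure measures how often the model's output can be parsed as structured JSON. A minimal way to compute such a rate is sketched below; it assumes each output is a raw string and counts it as valid only if it parses to a JSON object. The project's evaluation code may apply a different criterion (for example, stripping markdown fences or requiring specific keys first).

```python
import json


def is_valid_json_object(text: str) -> bool:
    """True if `text` parses as JSON and the top-level value is an object."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False


def json_validity_rate(outputs: list[str]) -> float:
    """Percentage of model outputs that are valid JSON objects."""
    if not outputs:
        raise ValueError("outputs must not be empty")
    valid = sum(is_valid_json_object(text) for text in outputs)
    return 100 * valid / len(outputs)
```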

Below is a sample of the created knowledge graph:

We would like to thank:

- The Moroccan Judicial Portal for providing access to legal cases

- Contributors and maintainers of the OCR libraries used in this project

- Contributors and maintainers of 🤗 Transformers

- Contributors and maintainers of DeepSpeed

- Contributors and maintainers of PEFT (Parameter-Efficient Fine-Tuning)

- Contributors and maintainers of Weights & Biases for experiment tracking


- Meta AI for the base LLaMA model