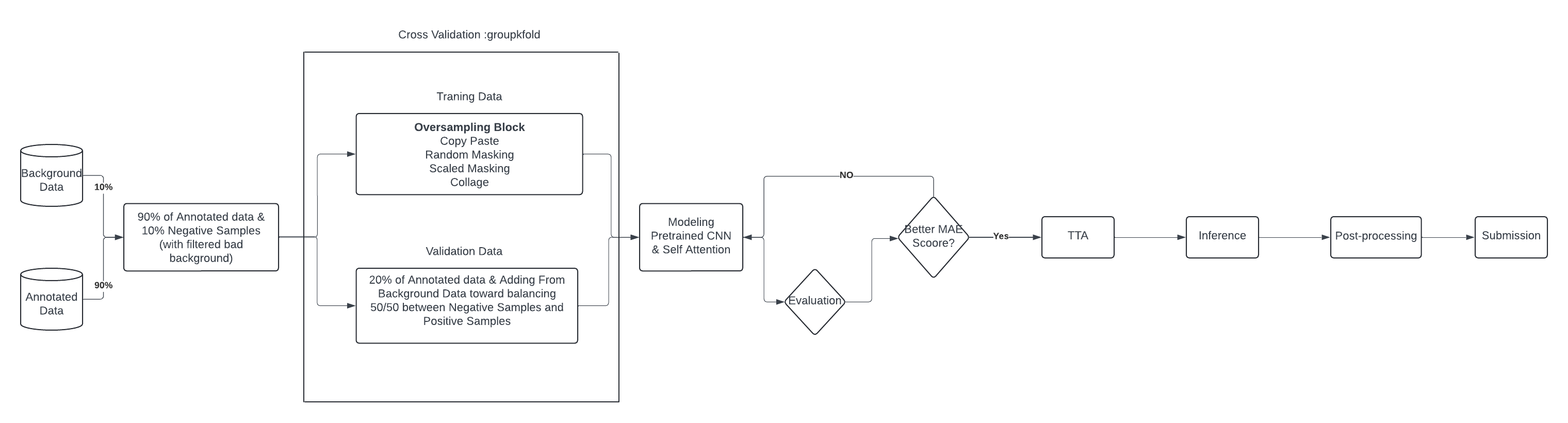

Data Preparation

- Annotated Data (90%): 90% of the annotated data is used for training.

- Background Data (10%): background images make up 10% of the data, adding negative samples; bad backgrounds are filtered out first.
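
A minimal sketch of mixing background (negative) images into the training list. It assumes "10% of the data" means background images make up 10% of the combined training set. The helper name `mix_background` and the fixed seed are hypothetical, and the bad-background filter is not shown because its criterion isn't stated.

```python
import random


def mix_background(annotated, background, bg_fraction=0.10, seed=0):
    """Return a shuffled training list in which about `bg_fraction` of the
    items are background (negative) images.

    Hypothetical helper: assumes background images should make up
    `bg_fraction` of the combined set and were already filtered.
    """
    # Solve n_bg / (n_annotated + n_bg) = bg_fraction for n_bg.
    n_bg = round(len(annotated) * bg_fraction / (1.0 - bg_fraction))
    n_bg = min(n_bg, len(background))  # cannot sample more than we have
    rng = random.Random(seed)
    mixed = list(annotated) + rng.sample(list(background), n_bg)
    rng.shuffle(mixed)
    return mixed


train = mix_background([f"ann_{i}.png" for i in range(90)],
                       [f"bg_{i}.png" for i in range(50)])
print(len(train), sum(name.startswith("bg_") for name in train))  # 100 10
```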

Training Data

- Oversampling Block: This block includes techniques to balance and augment the training data:

- Copy-Paste: Inserts annotated objects onto varied background scenes to diversify the training data.

- Masked Scale: Resizes and positions images within a defined canvas to simulate different object scales and viewpoints.

- Random Image Masking: Applies geometric shapes to obscure parts of images, simulating occlusions and environmental variability.

- Collage: Assembles smaller images into composite visuals, enriching the dataset with diverse visual compositions.
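
The four oversampling techniques can be approximated with plain NumPy on H×W×C image arrays. This is an illustrative sketch, not the original implementation. The function names, the nearest-neighbour resize in `masked_scale`, the rectangle-only occlusion in `random_rect_mask` (the text mentions geometric shapes in general), and the fixed 2×2 layout in `collage_2x2` are all assumptions.

```python
import numpy as np


def copy_paste(obj, obj_mask, background, top, left):
    """Copy-Paste: paste pixels of `obj` where `obj_mask` is True onto a copy of `background`."""
    out = background.copy()
    h, w = obj_mask.shape
    region = out[top:top + h, left:left + w]  # a view, so writes land in `out`
    region[obj_mask] = obj[obj_mask]
    return out


def masked_scale(img, scale, canvas_hw, top, left, fill=0):
    """Masked Scale: resize `img` (nearest neighbour) and place it on a blank canvas."""
    H, W = img.shape[:2]
    nh, nw = max(1, int(H * scale)), max(1, int(W * scale))
    rows = (np.arange(nh) * H / nh).astype(int)
    cols = (np.arange(nw) * W / nw).astype(int)
    resized = img[rows][:, cols]
    canvas = np.full(tuple(canvas_hw) + img.shape[2:], fill, dtype=img.dtype)
    canvas[top:top + nh, left:left + nw] = resized  # assumes the resized image fits
    return canvas


def random_rect_mask(img, rng, max_frac=0.3, fill=0):
    """Random Image Masking: occlude one random rectangle to simulate an occlusion."""
    out = img.copy()
    H, W = img.shape[:2]
    h = int(rng.integers(1, max(1, int(H * max_frac)) + 1))
    w = int(rng.integers(1, max(1, int(W * max_frac)) + 1))
    top = int(rng.integers(0, H - h + 1))
    left = int(rng.integers(0, W - w + 1))
    out[top:top + h, left:left + w] = fill
    return out


def collage_2x2(images):
    """Collage: tile four equally sized images into one 2x2 composite."""
    a, b, c, d = images
    return np.concatenate([np.concatenate([a, b], axis=1),
                           np.concatenate([c, d], axis=1)], axis=0)
```

Each function returns a new array and leaves its inputs untouched, so several oversampled variants can be generated from the same source image.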

Validation Data

- 20% of the annotated data is combined with background data to balance the dataset, achieving a 50/50 split between negative and positive samples.
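
A sketch of building the balanced validation set: hold out 20% of the annotated items, then add an equal number of background images so positives and negatives split 50/50. The random hold-out and the helper names `split_annotated` and `balance_with_background` are assumptions for illustration.

```python
import random


def split_annotated(annotated, val_fraction=0.20, seed=0):
    """Shuffle the annotated items and hold out `val_fraction` of them for validation."""
    items = list(annotated)
    random.Random(seed).shuffle(items)
    n_val = round(len(items) * val_fraction)
    return items[n_val:], items[:n_val]  # (train, val)


def balance_with_background(positives, background, seed=0):
    """Add as many background (negative) images as there are positives: a 50/50 split."""
    if len(background) < len(positives):
        raise ValueError("not enough background images for a 50/50 split")
    negatives = random.Random(seed).sample(list(background), len(positives))
    return list(positives) + negatives


_, val_pos = split_annotated(range(100))
val = balance_with_background(val_pos, range(1000, 1100))
print(len(val_pos), len(val))  # 20 40
```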

Modeling

- Pretrained CNN Models (Multi-Label Regression) & Self-Attention: Two models, EfficientNetV2_rw_s (image size 1024, 25 epochs, train batch size 8) and ConvNext_base (image size 512, 25 epochs, train batch size 8), are trained with the following augmentations:

- Geometric Augmentations:

- HorizontalFlip

- Transpose

- RandomRotate90

- Blur/Noise Augmentations:

- GaussianBlur

- GaussNoise
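
The augmentation names match transforms in the Albumentations library, but the text does not say which library was used. The following is a dependency-free NumPy approximation that applies each transform independently with probability `p`. The values `p=0.5`, `noise_std=5.0` and the blur sigma range are assumptions, as are the function names.

```python
import numpy as np


def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian blur over the two spatial axes (zero padding at the borders).

    Assumes height and width are both longer than the kernel (2 * radius + 1).
    """
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    conv = lambda v: np.convolve(v, kernel, mode="same")
    out = np.apply_along_axis(conv, 0, img.astype(np.float64))  # blur along height
    return np.apply_along_axis(conv, 1, out)                      # blur along width


def augment(img, rng, p=0.5, noise_std=5.0):
    """Apply each listed augmentation independently with probability `p`."""
    # Geometric augmentations
    if rng.random() < p:
        img = img[:, ::-1]                              # HorizontalFlip
    if rng.random() < p:
        img = np.swapaxes(img, 0, 1)                    # Transpose (swap height and width)
    if rng.random() < p:
        img = np.rot90(img, k=int(rng.integers(1, 4)))  # RandomRotate90
    img = img.astype(np.float64)
    # Blur/noise augmentations
    if rng.random() < p:
        img = gaussian_blur(img, sigma=float(rng.uniform(0.5, 1.5)))  # GaussianBlur
    if rng.random() < p:
        img = img + rng.normal(0.0, noise_std, size=img.shape)       # GaussNoise
    return np.clip(img, 0, 255)
```

On non-square inputs, Transpose and RandomRotate90 swap height and width, so a batch loader would need to resize or crop afterwards.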

Evaluation

- The models are evaluated based on the Mean Absolute Error (MAE) score.
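
For multi-label regression, a common convention is to average the absolute error over every sample and every target column. The exact averaging used here isn't specified, so this is an assumed definition.

```python
import numpy as np


def mae(y_true, y_pred):
    """Mean absolute error, averaged over every sample and every target column."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


print(mae([[1, 2], [3, 4]], [[1, 3], [5, 4]]))  # 0.75
```

Lower MAE is better, so a run counts as an improvement when its MAE is below the previous best.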

Better MAE Score?

- If the MAE improves (i.e., decreases), proceed to Test Time Augmentation (TTA).

TTA (Test Time Augmentation)

- Additional augmentations during inference:

- RandomRotate90

- HorizontalFlip
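
TTA can be sketched as averaging a model's predictions over transformed copies of each test image. Here the random transforms are replaced by a fixed set of views (identity, horizontal flip, and the three 90-degree rotations), and averaging is an assumed way to combine them. `predict` is a hypothetical stand-in for either model's inference function.

```python
import numpy as np


def tta_predict(predict, img):
    """Average `predict` over the identity, a horizontal flip and three 90-degree rotations.

    For non-square images the rotated views have swapped height and width,
    so `predict` must accept both shapes.
    """
    views = [img, img[:, ::-1]] + [np.rot90(img, k) for k in (1, 2, 3)]
    preds = [np.asarray(predict(v), dtype=float) for v in views]
    return np.mean(preds, axis=0)


img = np.array([[1, 2], [3, 4]])
print(tta_predict(lambda v: v[0, 0], img))  # 2.4
```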

Inference

- Run inference on the test data and merge the predictions from both models using the harmonic mean formula:

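$$\frac{2 \cdot \mathrm{Pred}_1 \cdot \mathrm{Pred}_2}{\mathrm{Pred}_1 + \mathrm{Pred}_2 + \epsilon}$$

where $\epsilon$ is a small constant that prevents division by zero.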
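
The merge formula applied element-wise to two prediction arrays. The value `eps=1e-7` is an assumption (the text does not give epsilon), and the sketch assumes non-negative predictions.

```python
import numpy as np


def harmonic_merge(pred1, pred2, eps=1e-7):
    """Element-wise harmonic mean of two models' predictions; `eps` avoids 0/0."""
    p1 = np.asarray(pred1, dtype=float)
    p2 = np.asarray(pred2, dtype=float)
    return 2.0 * p1 * p2 / (p1 + p2 + eps)


merged = harmonic_merge([1.0, 4.0, 0.0], [3.0, 4.0, 0.0])
print(merged)
```

For positive values the harmonic mean never exceeds the arithmetic mean and is pulled toward the smaller of the two predictions, which makes the ensemble somewhat conservative when the models disagree.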

Post-processing

- This post-processing code modifies the Target column of a DataFrame based on a threshold of 40. Values greater than 40 are converted to integers and then incremented by 1. Values of 40 or below are rounded to the nearest integer and clipped so they are at least 0. This keeps predictions in a practical range: whole numbers with no negative values.
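
A pandas sketch of that rule. The Target column and the threshold of 40 come from the text; the function name `postprocess` is hypothetical, this is not the original code, and it assumes the column has no missing values (`astype(int)` fails on NaN).

```python
import numpy as np
import pandas as pd


def postprocess(df, col="Target", threshold=40):
    """Apply the threshold-based rounding rule to `df[col]` and return a new DataFrame."""
    out = df.copy()
    values = out[col].astype(float)
    above = values.astype(int) + 1        # > threshold: truncate to int, then add 1
    below = values.round().clip(lower=0)  # <= threshold: nearest int, floored at 0
    out[col] = np.where(values > threshold, above, below).astype(int)
    return out


sub = pd.DataFrame({"Target": [-0.3, 3.6, 40.0, 40.2, 55.9]})
print(postprocess(sub)["Target"].tolist())  # [0, 4, 40, 41, 56]
```

`Series.round` rounds exact halves to the nearest even number (2.5 becomes 2), so results at exact .5 values may differ from code that rounds halves up.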

Submission

- Prepare the final submission file.