This project shows how to build a fully Serverless application on AWS (including the SQL database) using Amazon API Gateway, AWS Lambda, Amazon Aurora Serverless (MySQL), and the new Data API. By using the Data API, our Lambda functions do not have to manage persistent database connections, which greatly simplifies application logic.

Cool, eh?

If you are not familiar with the Amazon Aurora Serverless Data API, please have a quick look at my blog post "Using the Data API to interact with an Amazon Aurora Serverless MySQL database". It shows how to provision an Amazon Aurora Serverless (MySQL) database using infrastructure-as-code and provides code samples for various Data API use cases.

Imagine for a moment an organization where the majority of application workloads are deployed to virtual machines on Amazon's EC2 infrastructure. Your team has been assigned the task of building a solution to maintain an inventory of software packages (e.g., AWS CLI v1.16.111) installed across the various EC2 fleets. The solution should be API-based (REST) and leverage AWS Serverless services as much as possible, including the database. Most importantly, the solution should not use persistent database connections but should instead use an API to interact with the SQL database.

This is what this project is all about. It describes an end-to-end, API-based Serverless solution for a simple EC2 Package Inventory system that uses Amazon Aurora Serverless (MySQL) and the Data API for database access.

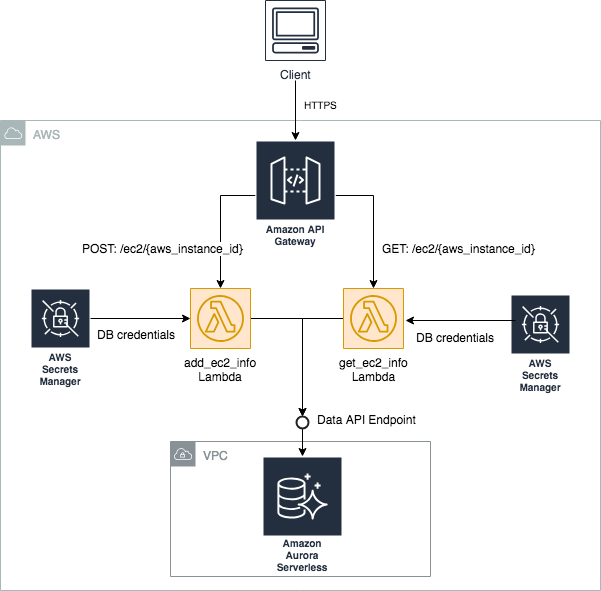

The architecture diagram above shows the two REST APIs (POST and GET) that we're going to build. The POST:/ec2/{aws_instance_id} API stores EC2- and package-related information in the SQL database for a given EC2 instance. The GET:/ec2/{aws_instance_id} API retrieves the stored information from the database for a given EC2 instance.
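API Gateway performs this routing for you, so there is nothing to implement here; still, a toy model can make the two routes concrete. The following Python sketch matches an HTTP method and path against the `/ec2/{aws_instance_id}` template and extracts the path parameter. The mapping of POST to AddEC2InfoLambda and GET to GetEC2InfoLambda is an assumption based on the function names mentioned later in this README; the real wiring lives in the API CloudFormation stack.

```python
from typing import Dict, Optional, Tuple

# Assumed route table (not taken from the project's template): which Lambda
# function API Gateway invokes for each method and resource path.
ROUTES = {
    ("POST", "/ec2/{aws_instance_id}"): "AddEC2InfoLambda",
    ("GET", "/ec2/{aws_instance_id}"): "GetEC2InfoLambda",
}


def match_template(template: str, path: str) -> Optional[Dict[str, str]]:
    """Return the path parameters if `path` matches `template`, else None."""
    template_parts = template.strip("/").split("/")
    path_parts = path.strip("/").split("/")
    if len(template_parts) != len(path_parts):
        return None
    params: Dict[str, str] = {}
    for expected, actual in zip(template_parts, path_parts):
        if expected.startswith("{") and expected.endswith("}"):
            if not actual:
                return None
            params[expected[1:-1]] = actual  # capture e.g. aws_instance_id
        elif expected != actual:
            return None
    return params


def resolve_route(method: str, path: str) -> Optional[Tuple[str, Dict[str, str]]]:
    """Mimic API Gateway: pick the target function and extract path parameters."""
    for (route_method, template), function_name in ROUTES.items():
        if route_method != method.upper():
            continue
        params = match_template(template, path)
        if params is not None:
            return function_name, params
    return None


print(resolve_route("GET", "/ec2/i-01aaae43feb712345"))
# ('GetEC2InfoLambda', {'aws_instance_id': 'i-01aaae43feb712345'})
```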

Client applications send REST requests to an Amazon API Gateway endpoint, which then routes each request to the appropriate Lambda function. The Lambda functions implement the core API logic and use database credentials stored in AWS Secrets Manager to connect to the Data API endpoint of the Aurora Serverless MySQL cluster. Because they go through the Data API, the Lambda functions do not have to manage persistent database connections; instead, they issue SQL commands to the Aurora Serverless database through simple API calls.
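To illustrate what "simple API calls" means in practice, here is a minimal sketch of a Lambda-side helper built on the `execute_statement` operation of boto3's `rds-data` client. The ARNs and database name are placeholders, and the helper names are hypothetical (not taken from the project's dal.py). The client is passed in as a parameter so the helper can be exercised without AWS.

```python
from typing import Any, Dict, List

# Placeholder identifiers (assumptions, not the project's real values); in a
# deployed stack they would typically reach the Lambda via environment variables.
CLUSTER_ARN = "arn:aws:rds:us-east-1:123456789012:cluster:example-aurora-cluster"
SECRET_ARN = "arn:aws:secretsmanager:us-east-1:123456789012:secret:example-db-secret"
DATABASE_NAME = "example_ec2_inventory"


def to_sql_parameters(values: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Convert plain Python values into Data API SqlParameter dicts."""
    parameters = []
    for name, value in values.items():
        if value is None:
            field: Dict[str, Any] = {"isNull": True}
        elif isinstance(value, bool):  # check bool before int: bool is an int subclass
            field = {"booleanValue": value}
        elif isinstance(value, int):
            field = {"longValue": value}
        elif isinstance(value, float):
            field = {"doubleValue": value}
        else:
            field = {"stringValue": str(value)}
        parameters.append({"name": name, "value": field})
    return parameters


def execute_sql(client: Any, sql: str, values: Dict[str, Any]) -> Dict[str, Any]:
    """Run one SQL statement over HTTPS via the Data API (no connection to manage).

    `client` is expected to behave like boto3.client("rds-data").
    """
    return client.execute_statement(
        resourceArn=CLUSTER_ARN,
        secretArn=SECRET_ARN,  # the Data API reads the DB credentials from this secret
        database=DATABASE_NAME,
        sql=sql,
        parameters=to_sql_parameters(values),
    )


# Example (needs AWS credentials and a real cluster; the table name is hypothetical):
# import boto3
# response = execute_sql(
#     boto3.client("rds-data"),
#     "SELECT * FROM ec2 WHERE aws_instance_id = :aws_instance_id",
#     {"aws_instance_id": "i-01aaae43feb712345"},
# )
# rows = response.get("records", [])
```

Named placeholders such as `:aws_instance_id` keep the SQL parameterized, so instance IDs coming from the URL are never concatenated into the statement.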

By using Aurora Serverless MySQL, we can take advantage of the optional auto-pause feature, which automatically and seamlessly shuts down and restarts the database when needed without any impact on application code. This makes sense because the EC2 Inventory database will only be updated sporadically, when EC2 instances are launched or terminated. In the occasional event of a large number of EC2 instances being launched simultaneously, the Aurora Serverless database will automatically scale up to meet traffic demands.
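For reference, the auto-pause and scaling behavior is controlled by the cluster's scaling configuration. The sketch below assembles the serverless-specific arguments accepted by `create_db_cluster` in boto3's RDS client (the same property names exist on the CloudFormation `AWS::RDS::DBCluster` resource). It reflects Aurora Serverless v1, which this README targets; the default capacity range and pause window are illustrative assumptions, not the project's settings.

```python
from typing import Any, Dict

# Capacity values accepted by Aurora Serverless v1 for MySQL, in Aurora capacity units (ACUs).
VALID_MYSQL_CAPACITIES = (1, 2, 4, 8, 16, 32, 64, 128, 256)


def serverless_scaling_kwargs(min_acu: int = 1, max_acu: int = 4,
                              seconds_until_auto_pause: int = 300) -> Dict[str, Any]:
    """Build the serverless-specific arguments for rds.create_db_cluster (v1 engine mode)."""
    if min_acu not in VALID_MYSQL_CAPACITIES or max_acu not in VALID_MYSQL_CAPACITIES:
        raise ValueError(f"capacities must be one of {VALID_MYSQL_CAPACITIES}")
    if min_acu > max_acu:
        raise ValueError("min_acu must not exceed max_acu")
    if not 300 <= seconds_until_auto_pause <= 86400:
        raise ValueError("seconds_until_auto_pause must be between 300 and 86400")
    return {
        "EngineMode": "serverless",
        "EnableHttpEndpoint": True,  # turns on the Data API endpoint for the cluster
        "ScalingConfiguration": {
            "MinCapacity": min_acu,   # floor when the cluster is running
            "MaxCapacity": max_acu,   # ceiling for bursts of EC2 launches
            "AutoPause": True,        # pause the cluster when it sits idle
            "SecondsUntilAutoPause": seconds_until_auto_pause,
        },
    }


# Usage (other required arguments such as Engine and MasterUsername omitted):
# boto3.client("rds").create_db_cluster(DBClusterIdentifier="...", **serverless_scaling_kwargs())
```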

You'll need to download and install the following software:

Make sure you have set up AWS credentials (typically placed under ~/.aws/credentials or ~/.aws/config). The credentials you're using should have "enough" privileges to provision all required services. You'll know the exact definition of "enough" when you get "permission denied" errors :)

Now, indicate which AWS profile should be used by the provided scripts, e.g.:

    export AWS_PROFILE=[your-aws-profile]

Create the Python virtual environment and install the dependencies:

    # from the project's root directory
    pipenv --python 3.6  # creates a Python 3.6 virtual environment
    pipenv shell         # activates the virtual environment
    pipenv install       # installs the dependencies

To find out where the virtual environment and the dependencies are installed, type this:

    pipenv --venv

Note: At the time of this writing (July 2019), the Data API is publicly available in the US East (N. Virginia), US East (Ohio), US West (Oregon), Europe (Ireland), and Asia Pacific (Tokyo) Regions.

The deployment scripts read their values from the config file config-dev-env.sh (important: this file is used everywhere! Make sure you edit it with the config values for your AWS account!).
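The scripts take a prefix such as `config-dev` and read `config-dev-env.sh`, a file of shell `export` statements. If you want to reuse those values from Python tooling, a minimal sketch could look like the following. The helper names are hypothetical, and the parser only understands simple `export NAME=value` lines (no variable expansion or multi-line values).

```python
import re
import shlex
from pathlib import Path
from typing import Dict

# Matches lines such as: export s3_bucket_deployment_artifacts="my-bucket"
EXPORT_LINE = re.compile(r"^\s*export\s+([A-Za-z_][A-Za-z0-9_]*)=(.*)$")


def config_path(prefix: str, directory: str = ".") -> Path:
    """Mirror the naming convention: prefix 'config-dev' -> 'config-dev-env.sh'."""
    return Path(directory) / f"{prefix}-env.sh"


def parse_exports(text: str) -> Dict[str, str]:
    """Collect `export NAME=value` assignments; other lines and comments are ignored."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        match = EXPORT_LINE.match(line)
        if match:
            name, raw_value = match.groups()
            tokens = shlex.split(raw_value, comments=True)  # strips quotes and trailing comments
            values[name] = tokens[0] if tokens else ""
    return values


def load_config(prefix: str, directory: str = ".") -> Dict[str, str]:
    """Read and parse the config file for a given prefix."""
    return parse_exports(config_path(prefix, directory).read_text())


# Usage: load_config("config-dev")["s3_bucket_deployment_artifacts"]
```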

Create (or reuse) an S3 bucket to store Lambda packages. Your AWS credentials must give you access to put objects in that bucket.

    # Creating an S3 bucket (if needed)
    aws s3 mb s3://[your-s3-bucket-name]

Make sure you update config-dev-env.sh with the S3 bucket name; otherwise, the deployment will fail.

    # Specifying the S3 bucket that will store Lambda package artifacts
    export s3_bucket_deployment_artifacts="[your-s3-bucket-name]"

Now deploy the database resources by invoking the deploy script and passing the config file as an input parameter (important: notice that we only specify the prefix of the config file, e.g., config-dev, not the full file name).

    # from the project's root directory
    ./deploy_scripts/deploy_rds.sh config-dev

Next, create the database schema:

    # from the project's root directory
    cd deploy_scripts/ddl_scripts

    # run the script
    ./create_schema.sh config-dev

Then, back in the project's root directory, package and deploy the API:

    # from the project's root directory
    ./deploy_scripts/package_api.sh config-dev && ./deploy_scripts/deploy_api.sh config-dev

Upon completion, the deploy script will print the output parameters produced by the deployed API stack. Take note of the ApiEndpoint output parameter value.

You can now use a REST API client such as Postman or the curl command to invoke the EC2 Inventory API, using the ApiEndpoint value you noted in the previous step.
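If you prefer to script the calls, the following minimal sketch builds the same POST and GET requests with Python's standard library. `API_BASE_URL` is a placeholder for `https://[ApiEndpoint]`, and the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

# Placeholder (assumption): replace with https://[ApiEndpoint] from the stack outputs.
API_BASE_URL = "https://example.execute-api.us-east-1.amazonaws.com/Prod"


def ec2_url(base_url: str, instance_id: str) -> str:
    """Build <base>/ec2/{aws_instance_id}, URL-encoding the instance ID."""
    return f"{base_url.rstrip('/')}/ec2/{urllib.parse.quote(instance_id, safe='')}"


def build_post_request(base_url: str, instance_id: str,
                       payload: Dict[str, Any]) -> urllib.request.Request:
    """POST the instance's region, account, and packages as JSON."""
    return urllib.request.Request(
        ec2_url(base_url, instance_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_get_request(base_url: str, instance_id: str) -> urllib.request.Request:
    """GET the stored record for one instance."""
    return urllib.request.Request(ec2_url(base_url, instance_id), method="GET")


def send(request: urllib.request.Request) -> Dict[str, Any]:
    """Send the request and decode the JSON response body.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses (such as the
    API's 400 errors); the error body can be read from that exception.
    """
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage:
# record = send(build_get_request(API_BASE_URL, "i-01aaae43feb712345"))
```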

Add a new EC2 instance to the inventory by specifying the EC2 instance ID (aws_instance_id), AWS region, and AWS account, as well as the packages that have been deployed to the instance (package_name and package_version).

POST: https://[ApiEndpoint]/ec2/{aws_instance_id}

Example:

POST: /ec2/i-01aaae43feb712345

    {
      "aws_region": "us-east-1",
      "aws_account": "123456789012",
      "packages": [
        {"package_name": "package-1", "package_version": "v1"},
        {"package_name": "package-1", "package_version": "v2"},
        {"package_name": "package-2", "package_version": "v1"},
        {"package_name": "package-3", "package_version": "v1"}
      ]
    }

Success - HttpCode: 200

Example:

    {
      "new_record": {
        "aws_account": "123456789012",
        "aws_region": "us-east-1",
        "packages": [
          {
            "package_name": "package-1",
            "package_version": "v1"
          },
          {
            "package_name": "package-1",
            "package_version": "v2"
          },
          {
            "package_name": "package-2",
            "package_version": "v1"
          }
        ]
      }
    }

Error - HttpCode: 400

Example:

    {
      "error_message": "An error occurred (BadRequestException) when calling the ExecuteSql operation: Duplicate entry 'instance-002' for key 'PRIMARY'"
    }
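On the server side, a POST handler has to turn the request body into SQL parameters and map failures to the 400 response shown above. The sketch below is one hypothetical way to do that with the Data API's transaction operations (`begin_transaction`, `execute_statement`, `batch_execute_statement`, `commit_transaction`, `rollback_transaction`). The table and column names are invented for illustration, and the project's actual logic lives in dal.py. Note that the sample error above mentions the older ExecuteSql operation, while this sketch uses ExecuteStatement.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical schema for illustration; the real DDL lives under deploy_scripts/ddl_scripts.
INSERT_EC2_SQL = (
    "INSERT INTO ec2 (aws_instance_id, aws_region, aws_account) "
    "VALUES (:aws_instance_id, :aws_region, :aws_account)"
)
INSERT_PACKAGE_SQL = (
    "INSERT INTO ec2_package (aws_instance_id, package_name, package_version) "
    "VALUES (:aws_instance_id, :package_name, :package_version)"
)

SqlParameters = List[Dict[str, Any]]


def string_params(**values: str) -> SqlParameters:
    """Wrap string values as Data API SqlParameter dicts."""
    return [{"name": name, "value": {"stringValue": value}} for name, value in values.items()]


def build_insert_parameters(instance_id: str,
                            body: Dict[str, Any]) -> Tuple[SqlParameters, List[SqlParameters]]:
    """Validate the POST body and build parameters for the two INSERT statements."""
    missing = [key for key in ("aws_region", "aws_account", "packages") if key not in body]
    if missing:
        raise ValueError(f"missing field(s): {', '.join(missing)}")
    ec2_params = string_params(aws_instance_id=instance_id,
                               aws_region=body["aws_region"],
                               aws_account=body["aws_account"])
    package_param_sets = [
        string_params(aws_instance_id=instance_id,
                      package_name=package["package_name"],
                      package_version=package["package_version"])
        for package in body["packages"]
    ]
    return ec2_params, package_param_sets


def proxy_response(status_code: int, body: Dict[str, Any]) -> Dict[str, Any]:
    """Response shape expected by an API Gateway Lambda proxy integration."""
    return {"statusCode": status_code,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(body)}


def add_ec2_info(client: Any, cluster_arn: str, secret_arn: str, database: str,
                 instance_id: str, body: Dict[str, Any]) -> Dict[str, Any]:
    """Insert one instance and its packages atomically; `client` is like boto3.client("rds-data")."""
    arns = {"resourceArn": cluster_arn, "secretArn": secret_arn}
    try:
        ec2_params, package_param_sets = build_insert_parameters(instance_id, body)
        transaction_id = client.begin_transaction(database=database, **arns)["transactionId"]
        try:
            client.execute_statement(sql=INSERT_EC2_SQL, parameters=ec2_params,
                                     database=database, transactionId=transaction_id, **arns)
            if package_param_sets:  # skip the batch call when no packages were sent
                client.batch_execute_statement(sql=INSERT_PACKAGE_SQL,
                                               parameterSets=package_param_sets,
                                               database=database,
                                               transactionId=transaction_id, **arns)
            client.commit_transaction(transactionId=transaction_id, **arns)
        except Exception:
            client.rollback_transaction(transactionId=transaction_id, **arns)
            raise
    except Exception as error:  # e.g. a botocore ClientError for a duplicate primary key
        return proxy_response(400, {"error_message": str(error)})
    new_record = {key: body[key] for key in ("aws_account", "aws_region", "packages")}
    return proxy_response(200, {"new_record": new_record})
```

Returning 400 for every exception mirrors the error contract documented above; a production API might distinguish client errors from server-side failures.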

Get information about an EC2 instance from the inventory by specifying the EC2 instance ID (aws_instance_id).

GET: https://[ApiEndpoint]/ec2/{aws_instance_id}

Example:

GET: /ec2/i-01aaae43feb712345

Success - HttpCode: 200 (EC2 instance found)

Example:

    {
      "record": {
        "aws_instance_id": "i-01aaae43feb712345",
        "aws_region": "us-east-1",
        "aws_account": "123456789012",
        "creation_date_utc": "2019-03-06 02:45:32.0",
        "packages": [
          {
            "package_name": "package-2",
            "package_version": "v1"
          },
          {
            "package_name": "package-1",
            "package_version": "v2"
          },
          {
            "package_name": "package-1",
            "package_version": "v1"
          }
        ]
      },
      "record_found": true
    }

Success - HttpCode: 200 (EC2 not found)

    { "record": {}, "record_found": false }

Error - HttpCode: 400

Example:

    {
      "error_message": "Some error message"
    }
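The Data API returns each row as a list of typed field dicts (for example `{"stringValue": "v1"}` or `{"isNull": True}`), so a GET handler needs to unwrap them and group the package rows under one instance record. A minimal sketch, assuming a hypothetical query that left-joins the instance and package tables and returns the columns listed in `COLUMNS`; the function names are also hypothetical.

```python
from typing import Any, Dict, List, Optional

# Hypothetical column order for: SELECT ... FROM ec2 LEFT JOIN ec2_package ...
COLUMNS = ("aws_instance_id", "aws_region", "aws_account", "creation_date_utc",
           "package_name", "package_version")


def field_value(field: Dict[str, Any]) -> Optional[Any]:
    """Unwrap a Data API Field dict such as {'stringValue': 'v1'} or {'isNull': True}."""
    if field.get("isNull"):
        return None
    for key in ("stringValue", "longValue", "doubleValue", "booleanValue"):
        if key in field:
            return field[key]
    return None


def records_to_response(records: List[List[Dict[str, Any]]]) -> Dict[str, Any]:
    """Fold joined rows (one per package) into the GET response shape."""
    if not records:
        return {"record": {}, "record_found": False}
    rows = [dict(zip(COLUMNS, (field_value(f) for f in row))) for row in records]
    first = rows[0]
    record: Dict[str, Any] = {key: first[key] for key in COLUMNS[:4]}  # instance columns
    record["packages"] = [
        {"package_name": row["package_name"], "package_version": row["package_version"]}
        for row in rows
        if row["package_name"] is not None  # a LEFT JOIN yields NULLs when there are no packages
    ]
    return {"record": record, "record_found": True}
```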

We enabled observability for this application via AWS X-Ray. Take a look at the data access layer source file (dal.py) for details; search for the terms x-ray and xray.
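As a rough idea of what enabling X-Ray in Python can involve, the AWS X-Ray SDK for Python (`aws-xray-sdk`) provides `patch_all()`, which instruments supported libraries such as botocore so that AWS SDK calls, including Data API calls, can be recorded as subsegments when tracing is active. This sketch is not necessarily what dal.py does; the function name is hypothetical, and it simply falls back to no tracing when the SDK isn't installed.

```python
import logging

logger = logging.getLogger(__name__)


def enable_xray_tracing() -> bool:
    """Instrument supported libraries for X-Ray; return False if the SDK is missing."""
    try:
        from aws_xray_sdk.core import patch_all
    except ImportError:
        logger.info("aws-xray-sdk not installed; X-Ray tracing disabled")
        return False
    patch_all()  # patches every supported library that is importable (e.g. botocore)
    return True
```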

To run the GetEC2InfoLambda Lambda function locally, using the environment variables defined in local/env_variables.json and the event input file GetEC2InfoLambda-event.json, do the following:

    # from the project's root directory
    local/run_local.sh config-dev GetEC2InfoLambda

Exercise: Create an event JSON file for the AddEC2InfoLambda Lambda function and invoke it locally.
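One way to approach the exercise is to generate the event file rather than write it by hand. The sketch assumes the handlers consume a standard API Gateway Lambda proxy event (path parameter in `pathParameters`, JSON body as a string in `body`); compare it with GetEC2InfoLambda-event.json for the exact shape the project uses. The function names and the output file location are hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Dict, Optional


def make_proxy_event(method: str, instance_id: str,
                     body: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a minimal API Gateway (REST, Lambda proxy) event for /ec2/{aws_instance_id}."""
    return {
        "resource": "/ec2/{aws_instance_id}",
        "path": f"/ec2/{instance_id}",
        "httpMethod": method,
        "headers": {"Content-Type": "application/json"},
        "pathParameters": {"aws_instance_id": instance_id},
        "queryStringParameters": None,
        # proxy events carry the request body as a JSON string, not a nested object
        "body": json.dumps(body) if body is not None else None,
        "isBase64Encoded": False,
    }


def write_event(path: str, event: Dict[str, Any]) -> None:
    """Write the event as pretty-printed JSON."""
    Path(path).write_text(json.dumps(event, indent=2) + "\n")


# Usage (hypothetical location; place the file wherever run_local.sh expects it):
# write_event("AddEC2InfoLambda-event.json", make_proxy_event(
#     "POST", "i-01aaae43feb712345",
#     {"aws_region": "us-east-1", "aws_account": "123456789012",
#      "packages": [{"package_name": "package-1", "package_version": "v1"}]}))
```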

A few integration tests are available under the tests/ directory. The tests use the pytest framework to make API calls against our deployed API, so before running them, make sure the API is actually deployed to AWS.

The test script discovers the API endpoint automatically from the ApiEndpoint output parameter produced by the API CloudFormation stack.
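The lookup itself can be as simple as reading the stack outputs returned by CloudFormation's DescribeStacks operation. A minimal sketch; the function names and the stack name are hypothetical, and the project's test script may do this differently.

```python
from typing import Any, Dict, Optional


def find_stack_output(response: Dict[str, Any], key: str = "ApiEndpoint") -> Optional[str]:
    """Return the OutputValue for `key` from a DescribeStacks response, if present."""
    for stack in response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output.get("OutputKey") == key:
                return output.get("OutputValue")
    return None


def discover_api_endpoint(cloudformation: Any, stack_name: str) -> str:
    """Look up the ApiEndpoint output; `cloudformation` is like boto3.client("cloudformation")."""
    response = cloudformation.describe_stacks(StackName=stack_name)
    endpoint = find_stack_output(response)
    if endpoint is None:
        raise LookupError(f"stack {stack_name!r} has no ApiEndpoint output")
    return endpoint


# Possible pytest fixture (stack name is hypothetical):
# import boto3
# import pytest
#
# @pytest.fixture(scope="session")
# def api_endpoint():
#     return discover_api_endpoint(boto3.client("cloudformation"), "ec2-inventory-api-dev")
```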

To run the integration tests locally, do this:

    # from the project's root directory
    ./tests/run_tests.sh config-dev

This sample code is made available under a modified MIT license. See the LICENSE file for details.