This project (based on CrowdDet) is the re-implementation of our paper "Body-Face Joint Detection via Embedding and Head Hook", published at ICCV 2021.

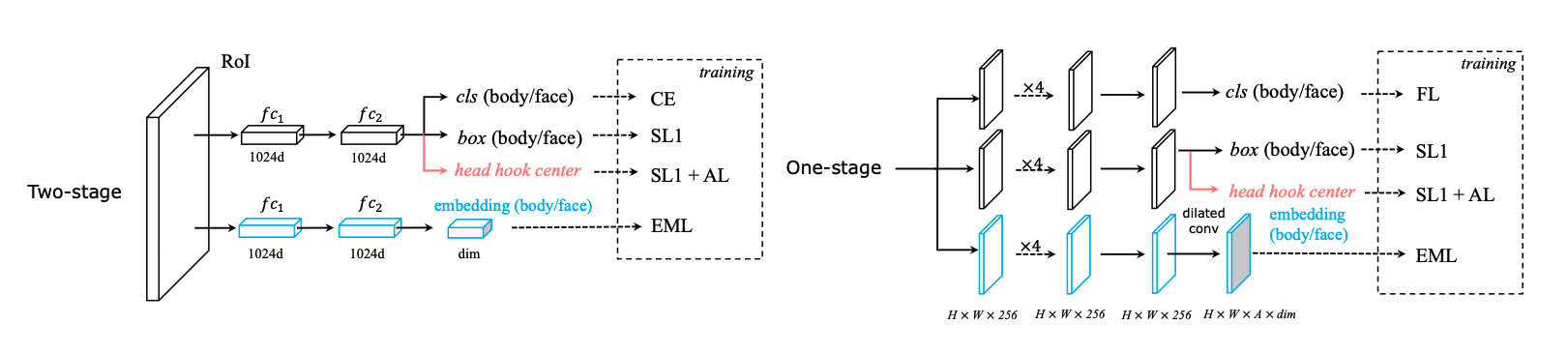

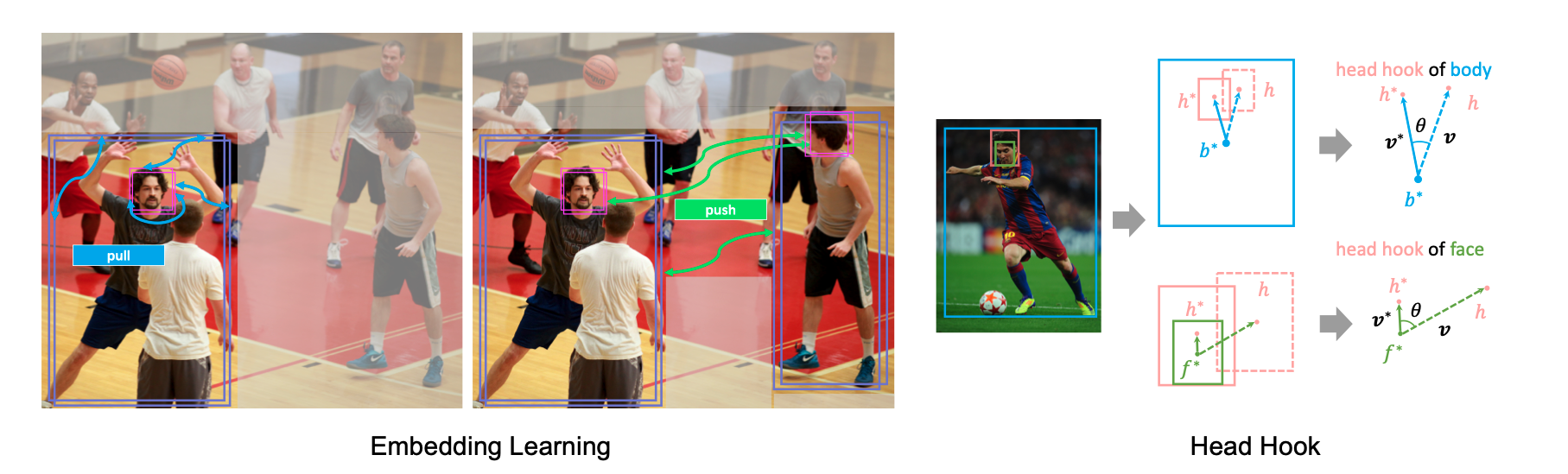

Our motivation is to detect the body and face of pedestrians in a joint manner, which is important in many industrial applications. The core ideas of our approach are two-fold: (1) at the appearance level, learning associative embeddings; (2) at the geometry level, using adjunct head centers as hooks ("head hook"). We use information from these two sources to match bodies and faces online inside the detector.
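To make the matching idea concrete, here is a minimal sketch of how an appearance cue and a geometric cue could be combined into one matching cost. It is not the code in this repository. The data layout (`embedding`, `head_hook`, `box`), the weight `hook_weight`, the threshold `max_cost`, the use of the face box center as the head position, and the greedy assignment are all assumptions made for illustration; the paper's exact formulation may differ.

```python
import math


def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def box_center(box):
    """Center (x, y) of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def match_bodies_to_faces(bodies, faces, hook_weight=0.01, max_cost=2.0):
    """Greedy one-to-one matching of bodies to faces (illustrative only).

    bodies: list of dicts with 'embedding' and 'head_hook' (a predicted
            head-center point) -- hypothetical field names.
    faces:  list of dicts with 'embedding' and 'box'.
    Returns a dict {body_index: face_index}; unmatched bodies are omitted.
    """
    candidates = []
    for bi, body in enumerate(bodies):
        for fi, face in enumerate(faces):
            # Appearance cue: distance between associative embeddings.
            emb_dist = l2(body["embedding"], face["embedding"])
            # Geometric cue: distance from the body's head hook to the face.
            hook_dist = l2(body["head_hook"], box_center(face["box"]))
            cost = emb_dist + hook_weight * hook_dist
            if cost <= max_cost:
                candidates.append((cost, bi, fi))

    # Take the cheapest pairs first; each body and face is used at most once.
    candidates.sort()
    matches, used_faces = {}, set()
    for _, bi, fi in candidates:
        if bi in matches or fi in used_faces:
            continue
        matches[bi] = fi
        used_faces.add(fi)
    return matches


bodies = [
    {"embedding": [0.9, 0.1], "head_hook": (50, 20)},
    {"embedding": [0.1, 0.9], "head_hook": (150, 25)},
    {"embedding": [0.5, 0.5], "head_hook": (400, 30)},  # face not visible
]
faces = [
    {"embedding": [0.2, 0.8], "box": (140, 10, 160, 40)},
    {"embedding": [0.8, 0.2], "box": (40, 10, 60, 30)},
]
matches = match_bodies_to_faces(bodies, faces)
print(matches)
```

In this toy example the third body has no nearby face, so it stays unmatched. A greedy pass is used here only for brevity; an optimal assignment solver could be swapped in without changing the cost definition.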

If you find this work useful in your research, please cite:

@inproceedings{wan2021body,
  title={Body-Face Joint Detection via Embedding and Head Hook},
  author={Wan, Junfeng and Deng, Jiangfan and Qiu, Xiaosong and Zhou, Feng},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={2959--2968},
  year={2021}
}

We annotate face boxes in CrowdHuman and head/face boxes in CityPersons, constructing two new large-scale benchmarks for joint body-face detection. Please follow the instructions below to obtain the data.

- CrowdHuman: download images from http://www.crowdhuman.org/.

- CrowdHuman: download annotations from GoogleDrive, which contain the original body/head boxes and our newly annotated face boxes.

- CityPersons: download images from https://www.cityscapes-dataset.com/.

- CityPersons: download annotations from GoogleDrive, which contain the original body boxes and our newly annotated head/face boxes.

We propose a new metric: miss matching rate (mMR).

- On CrowdHuman, the evaluation code can be found here; its functions are called automatically when you run the test.py script.

- On CityPersons, the evaluation settings differ substantially from those of CrowdHuman, so the evaluation scripts are organized separately here.

- python 3.6.9

- pytorch 1.5.0

- torchvision 0.6.0

- cuda 10.1

- scipy 1.5.4
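Before training, it can help to compare the installed packages against the versions listed above. The script below is a small convenience sketch, not part of this repository; the function name `check_environment` and the `EXPECTED` table are illustrative. It only reports what it finds and does not enforce anything.

```python
import importlib
import sys

# Versions listed in this README, keyed by import name.
EXPECTED = {"torch": "1.5.0", "torchvision": "0.6.0", "scipy": "1.5.4"}


def check_environment(expected=EXPECTED):
    """Return {module_name: (listed_version, found_version_or_None)}."""
    report = {}
    for name, wanted in expected.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            # Package is not installed in the current environment.
            report[name] = (wanted, None)
            continue
        report[name] = (wanted, getattr(module, "__version__", "unknown"))
    return report


if __name__ == "__main__":
    print("python", sys.version.split()[0], "(README lists 3.6.9)")
    for name, (wanted, found) in check_environment().items():
        status = "missing" if found is None else found
        print(f"{name}: README lists {wanted}, found {status}")
```

Note that an installed PyTorch build may report a version string with a suffix (for example a CUDA tag), so an exact string comparison is deliberately avoided here.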

- Step 1: training. More training and testing settings can be set in `config_bfj.py` or `config_pos.py`.

cd tools

python3 train.py -md rcnn_fpn_baseline -c 'bfj'  # or: -c 'pos'

- Step 2: testing. If you have four GPUs, pass `-d 0-3` to use all of them. The resulting JSON file is evaluated automatically.

cd tools

python3 test.py -md rcnn_fpn_baseline -r 30 -d 0-3 -c 'bfj'  # or: -c 'pos'

Note: 'pos' refers to the position-mode baseline described in our paper, and 'bfj' refers to our method.
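The `-d 0-3` argument reads as an inclusive range of GPU ids. As an illustration of that convention only, the sketch below expands such a string into a list of ids. The helper name `parse_device_range` is hypothetical, and the actual parsing inside test.py may differ.

```python
def parse_device_range(spec):
    """Expand a device spec such as '0-3' into [0, 1, 2, 3].

    A single id such as '2' yields [2]. Both ends of a range are inclusive.
    """
    if "-" in spec:
        first_text, last_text = spec.split("-", 1)
        first, last = int(first_text), int(last_text)
        if last < first:
            raise ValueError(f"invalid device range: {spec!r}")
        return list(range(first, last + 1))
    return [int(spec)]


print(parse_device_range("0-3"))
print(parse_device_range("2"))
```

Under this reading, a machine with two GPUs would use `-d 0-1`, and a single-GPU machine would use `-d 0`.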

Following CrowdDet, we use the pre-trained model from the MegEngine Model Hub and convert it to PyTorch. You can get the converted model from here. These models can also be downloaded from Baidu Netdisk (code: yx46).

All models are based on ResNet-50 FPN.

| Method | AP@0.5 (body/face) | MR (body/face) | mMR | Model |
|---|---|---|---|---|
| FRCN-FPN-POS (Baseline) | 87.9/71.1 | 43.7/52.6 | 66.4 | GoogleDrive |
| FRCN-FPN-BFJ | 88.8/70.0 | 43.4/53.2 | 52.5 | GoogleDrive |

If you have any questions, feel free to contact Jiangfan Deng (jfdeng100@foxmail.com).