Note: sorry for the misleading file names. Please use A3C_trading.py for training and test_trading.py for testing.

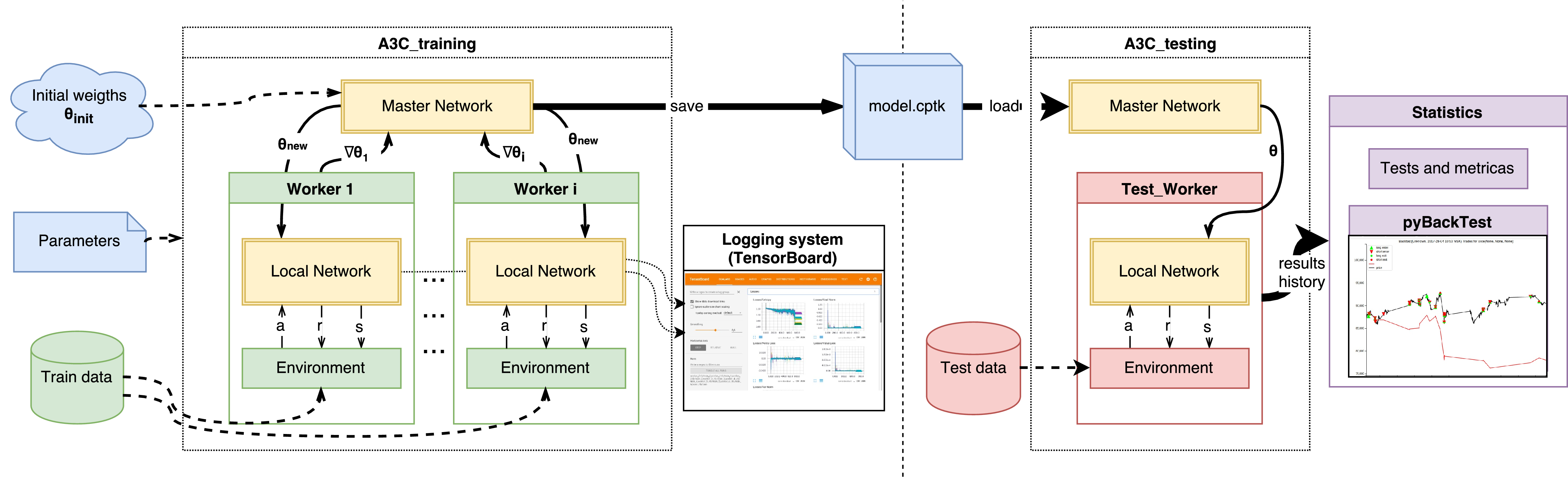

Trading with recurrent actor-critic reinforcement learning. See the paper and the older, more detailed report.

This file contains all the paths and global variables that need to be set up.
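For illustration only, a config file of this kind might look like the sketch below. Of these names, only `tensorboard_dir` and `model_dir` are mentioned in this README. Every other name and value (`base_dir`, `data_dir`, `num_workers`, `gamma`, `learning_rate`) is a hypothetical placeholder, so check the actual config.py before editing.

```python
# Hypothetical config sketch: the real config.py may use different names and values.
import os

# Root folder for everything the project writes (assumed name).
base_dir = os.path.abspath("./a3c_trading")

# Where downloaded and preprocessed data are stored (assumed name).
data_dir = os.path.join(base_dir, "data")

# Output folders written during training (names taken from this README).
tensorboard_dir = os.path.join(base_dir, "tensorboard")
model_dir = os.path.join(base_dir, "models")

# Global training variables (illustrative values, not the authors' settings).
num_workers = 4       # number of asynchronous A3C workers
gamma = 0.99          # discount factor for future rewards
learning_rate = 1e-4  # optimizer step size
```

Keeping all paths derived from a single root makes it easy to move the whole project by changing one line.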

Dataset: download it from Google Drive.

After setting up config.py, run this file to download and preprocess the data needed for training and evaluation.
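The preprocessing steps themselves are not described here. A common choice for price data, shown purely as an assumption, is to convert raw prices into log returns and cut them into fixed-length windows that a recurrent network can consume step by step. The function names `log_returns` and `sliding_windows` are hypothetical and not taken from the repository.

```python
import math
from typing import List


def log_returns(prices: List[float]) -> List[float]:
    """Convert a price series into log returns r_t = ln(p_t / p_{t-1})."""
    return [math.log(p1 / p0) for p0, p1 in zip(prices, prices[1:])]


def sliding_windows(series: List[float], window: int) -> List[List[float]]:
    """Split a series into overlapping windows of fixed length, one per time step."""
    return [series[i:i + window] for i in range(len(series) - window + 1)]


if __name__ == "__main__":
    prices = [100.0, 101.0, 100.5, 102.0, 103.0]
    print(len(log_returns(prices)))                   # 4
    print(sliding_windows([1.0, 2.0, 3.0, 4.0], 2))   # [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]
```

Log returns are scale-free, so series from instruments with very different price levels end up in a comparable range.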

An OpenAI Gym-like environment class.
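To show what "Gym-like" means in practice, here is a minimal sketch following the classic Gym interface: `reset()` returns an observation and `step(action)` returns `(obs, reward, done, info)`. The class name `ToyTradingEnv`, the short/flat/long action set and the reward (position times next price change) are assumptions for illustration. They do not describe the repository's actual environment.

```python
from typing import List, Tuple


class ToyTradingEnv:
    """Hypothetical Gym-style trading environment (not the repository's class).

    Actions 0/1/2 mean short/flat/long; the reward is position * next price change.
    """

    def __init__(self, prices: List[float], window: int = 3):
        if len(prices) <= window:
            raise ValueError("need more prices than the observation window")
        self.prices = prices
        self.window = window
        self.t = window - 1

    def _obs(self) -> List[float]:
        # The last `window` prices up to and including time t.
        return self.prices[self.t - self.window + 1 : self.t + 1]

    def reset(self) -> List[float]:
        self.t = self.window - 1
        return self._obs()

    def step(self, action: int) -> Tuple[List[float], float, bool, dict]:
        position = action - 1  # map {0, 1, 2} to {-1, 0, +1}
        reward = position * (self.prices[self.t + 1] - self.prices[self.t])
        self.t += 1
        done = self.t == len(self.prices) - 1  # no next price left to trade on
        return self._obs(), reward, done, {}
```

For example, with prices `[100.0, 101.0, 102.0, 101.0, 103.0]`, going long at the first step earns `101.0 - 102.0 = -1.0`. Newer Gymnasium releases split `done` into `terminated` and `truncated`, so adapt the return tuple if you target that API.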

This file contains the AC_network, Worker and Test_Worker classes.
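In standard A3C, each worker collects a short rollout and computes n-step discounted returns, bootstrapped from the critic's value of the last state. It then uses the advantage $A_t = R_t - V(s_t)$ to weight the policy-gradient term. The framework-free sketch below shows only that arithmetic. The function names and the coefficient defaults (`value_coef=0.5`, `entropy_coef=0.01`) are assumptions, not the repository's code.

```python
from typing import List


def discounted_returns(rewards: List[float], bootstrap_value: float, gamma: float) -> List[float]:
    """n-step returns R_t = r_t + gamma * R_{t+1}, seeded with V(s_T) for a cut-off rollout."""
    returns = []
    running = bootstrap_value
    for r in reversed(rewards):
        running = r + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def advantages(returns: List[float], values: List[float]) -> List[float]:
    """A_t = R_t - V(s_t): how much better the rollout did than the critic expected."""
    return [R - v for R, v in zip(returns, values)]


def a3c_loss(log_probs: List[float], advs: List[float], entropies: List[float],
             value_coef: float = 0.5, entropy_coef: float = 0.01) -> float:
    """Combined actor-critic loss for one rollout (scalar version, no autodiff).

    In a real implementation the advantages are treated as constants in the
    policy term, so only the critic is trained by the squared-advantage term.
    """
    policy_loss = -sum(lp * a for lp, a in zip(log_probs, advs))
    value_loss = 0.5 * sum(a * a for a in advs)
    entropy = sum(entropies)  # entropy bonus encourages exploration
    return policy_loss + value_coef * value_loss - entropy_coef * entropy
```

For example, `discounted_returns([1.0, 1.0], bootstrap_value=0.0, gamma=0.5)` gives `[1.5, 1.0]`. A recurrent worker would also carry the LSTM state across rollouts, which this sketch leaves out.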

Run this file, preferably inside tmux. During training it writes files to tensorboard_dir and model_dir.

The Jupyter notebook contains all the code for plotting the results.

@article{ponomarev2019using, title={Using Reinforcement Learning in the Algorithmic Trading Problem}, author={Ponomarev, ES and Oseledets, IV and Cichocki, AS}, journal={Journal of Communications Technology and Electronics}, volume={64}, number={12}, pages={1450--1457}, year={2019}, publisher={Springer} }