The data we collect here includes subdomains, URLs, web servers, cloud assets, and more for public bug bounty programs.

Our aim with this project is to:

- help bug bounty hunters get up and running on new programs as quickly as possible.

- give security teams better visibility into their assets.

- reduce the load and noise that some programs face from automated tools (we run them on a schedule and share the results with everyone).

├── Target

│ ├── cloud

│ │ ├── all.txt # All Cloud Assets

│ │ ├── aws-apps.txt # AWS Apps

│ │ ├── aws-s3-buckets.txt # S3 Buckets

│ │ ├── azure-containers.txt # Azure Containers

│ │ ├── azure-databases.txt # Azure Databases

│ │ ├── azure-vms.txt # Azure VMs

│ │ ├── azure-websites.txt # Azure Websites

│ │ ├── digitalocean-spaces.txt # DigitalOcean Spaces

│ │ ├── dreamhost-buckets.txt # DreamHost Buckets

│ │ ├── gcp-app-engine-apps.txt # Google Cloud App Engine Apps

│ │ ├── gcp-buckets.txt # Google Cloud Buckets

│ │ ├── gcp-cloud-functions.txt # Google Cloud Functions

│ │ ├── gcp-firebase-databases.txt # Google Cloud Firebase Databases

│ │ ├── linode-buckets.txt # Linode Buckets

│ │ ├── scaleway-buckets.txt # Scaleway Buckets

│ │ └── wasabi-buckets.txt # Wasabi Buckets

│ ├── network

│ │ ├── hostnames.txt # Hostnames

│ │ └── ips.txt # IP Addresses

│ ├── org

│ │ └── email.txt # Spidered emails

│ ├── technologies.txt # Technologies

│ ├── web

│ │ ├── csp.txt # All Content Security Policy hosts

│ │ ├── forms.txt # Spidered forms

│ │ ├── js.txt # Spidered JavaScript files

│ │ ├── links.txt # Spidered links

│ │ ├── requests

│ │ │ ├── 1xx.txt # Requests with 1xx Status

│ │ │ ├── 2xx.txt # Requests with 2xx Status

│ │ │ ├── 3xx.txt # Requests with 3xx Status

│ │ │ ├── 4xx.txt # Requests with 4xx Status

│ │ │ └── 5xx.txt # Requests with 5xx Status

│ │ ├── servers-extended.txt # Web Servers with additional data

│ │ ├── servers.txt # Web Server URLs

│ │ ├── spider.txt # All Spidered data

│ │ └── urls

│ │   ├── all.txt # All gathered URLs

│ │   ├── idor.txt # URLs targeting Insecure Direct Object Reference vulnerabilities

│ │   ├── lfi.txt # URLs targeting Local File Inclusion vulnerabilities

│ │   ├── rce.txt # URLs targeting Remote Code Execution vulnerabilities

│ │   ├── redirect.txt # URLs targeting Redirection vulnerabilities

│ │   ├── sqli.txt # URLs targeting SQL Injection vulnerabilities

│ │   ├── ssrf.txt # URLs targeting Server-Side Request Forgery vulnerabilities

│ │   ├── ssti.txt # URLs targeting Server-Side Template Injection vulnerabilities

│ │   └── xss.txt # URLs targeting Cross-Site Scripting vulnerabilities

│ └── wordlists

│   ├── paths.txt # Paths found in JavaScript files

│   ├── robots.txt # Paths found in robots.txt files

│   └── subdomain.txt # Words found in root domain HTTP Response
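
Since every file in the tree above is a plain text list, consuming it from a script is straightforward. The following is a minimal sketch, assuming a local clone of this repository; the `load_lines` helper, the `repo_root` argument, and the `example` target name are hypothetical and only serve as an illustration.

```python
from pathlib import Path


def load_lines(repo_root, target, relative_path):
    """Return the non-empty, stripped lines of one inventory file.

    Returns an empty list when the file does not exist, since not every
    target necessarily has every file.
    """
    path = Path(repo_root) / target / relative_path
    if not path.is_file():
        return []
    text = path.read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]
```

For instance, `load_lines(".", "example", "network/hostnames.txt")` would return the hostnames of a target directory named `example`, if such a directory exists in the clone.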

We have selected a few popular, public bug bounty programs as a start, and we are open to suggestions!

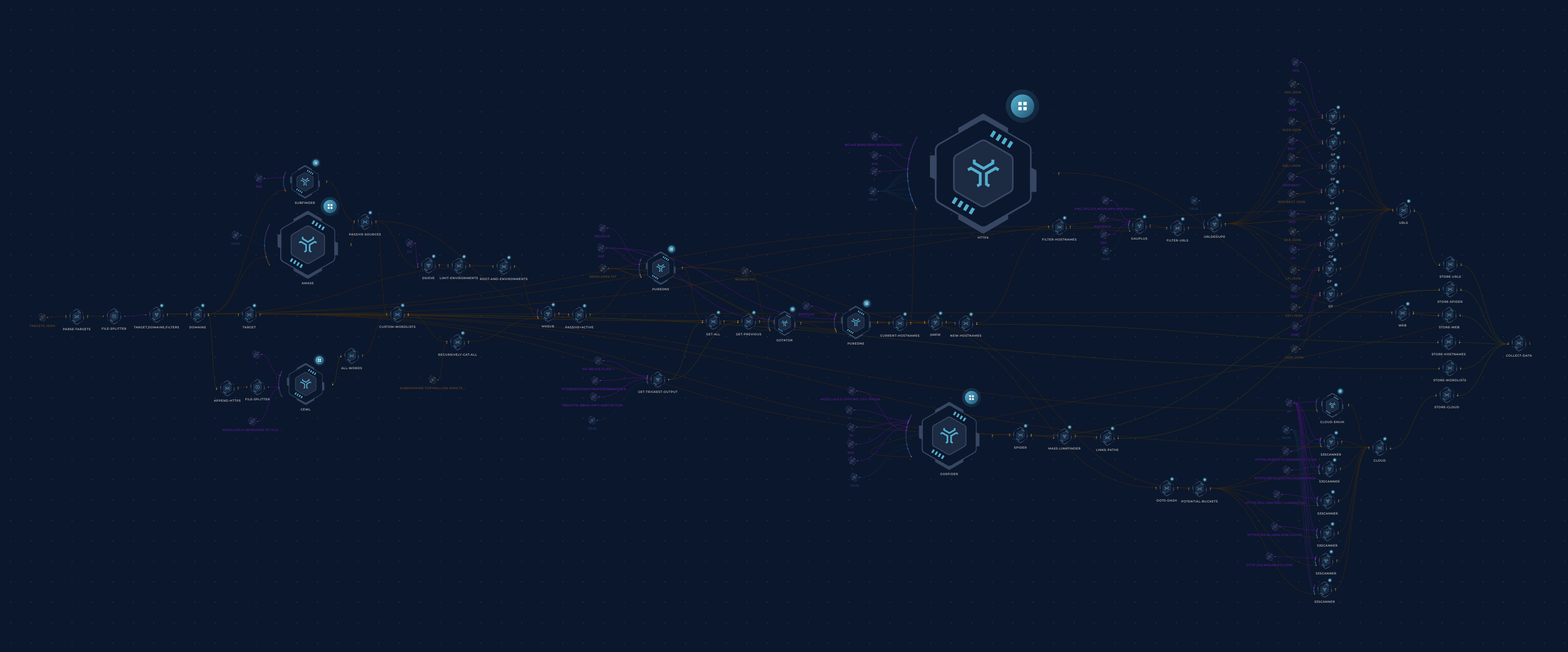

A Trickest workflow picks up these targets, collects data on them, enriches it, cleans it up, and pushes it to this repository.

- Get the list of targets from `targets.json`
- For each target:
    - Use subfinder and amass to collect subdomains from passive OSINT sources (Thanks ProjectDiscovery, hakluke, OWASP, and Jeff Foley!)
    - Use CeWL to crawl the main domain and generate a custom wordlist per target (Thanks digininja!).
    - Pass the found passive subdomains to dsieve to collect their `main environments` (e.g. foo.admin.example.com -> admin.example.com). This will be used for:
        - brute-forcing per environment using wordlist from previous step
        - more permutations later
    - Get pre-defined `wordlist`
    - Combine everything into one `wordlist`.
    - Use mksub to merge the `wordlist` and the `main environments` along with `root-domains` and generate DNS names.
    - Resolve DNS names using puredns (Thanks d3mondev!).
    - Generate permutations using gotator (Thanks Josue87!).
    - Resolve permutated DNS names using puredns.
    - Use anew to pass new results to next steps (Thanks tomnomnom!)
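
To make the "main environments" and name-generation steps more concrete, here is a rough Python sketch of the idea. It is not how dsieve or mksub are implemented; `main_environment` and `generate_names` are hypothetical helpers, and the root domain is assumed to be known in advance rather than derived from a public suffix list.

```python
def main_environment(hostname, root_domain):
    """Return the first level under root_domain, e.g. admin.example.com.

    Returns None when hostname is not a subdomain of root_domain.
    """
    hostname = hostname.lower().rstrip(".")
    suffix = "." + root_domain.lower().rstrip(".")
    if not hostname.endswith(suffix):
        return None
    # Labels left of the root domain; the last one sits directly under the root.
    labels = hostname[: -len(suffix)].split(".")
    return labels[-1] + suffix


def generate_names(words, domains):
    """Prepend every word to every domain (simplified stand-in for the merge step)."""
    return sorted({f"{word}.{domain}" for word in words for domain in domains})


env = main_environment("foo.admin.example.com", "example.com")
print(env)  # admin.example.com
print(generate_names(["dev", "api"], ["example.com", env]))
```

The generated names are only candidates; in the workflow described above they still have to be resolved before they count as hostnames.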

- For each target:
    - Probe previously found hostnames using httpx to find live web servers on specific ports (`80,443,8080,5000,3000,8443,8000,4080,8888`) and collect their:
        - HTTP Titles
        - Status Codes
        - Content Length
        - Content Security Policies
        - Content Types
        - Final Redirect Locations
        - Webservers
        - Technologies
        - IP Addresses
        - CNAMEs
    - Parse httpx's output and organize it into files for easier navigation.
    - Crawl the found websites using gospider (Thanks jaeles-project!)
    - Use LinkFinder for previously found `js` files to get their external links and paths. (Thanks GerbenJavado!)
    - Generate files and wordlists by their type
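
The "organize it into files" step can be pictured as grouping probe results by status class, which is how the `requests/1xx.txt` to `requests/5xx.txt` files are named. The sketch below assumes the results have already been parsed into `(url, status_code)` pairs; that input shape and the `group_by_status_class` helper are assumptions for illustration, not the workflow's actual parser.

```python
from collections import defaultdict


def group_by_status_class(results):
    """Group (url, status_code) pairs into '1xx'..'5xx' buckets.

    Pairs with a status code outside 100-599 are ignored.
    """
    buckets = defaultdict(list)
    for url, status in results:
        if 100 <= status <= 599:
            buckets[f"{status // 100}xx"].append(url)
    return dict(buckets)
```

Each key of the returned dictionary maps naturally to one file name, for example `2xx` to `requests/2xx.txt`.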

- For each target:
    - Collect URLs using newly found hostnames with gauplus (Thanks bp0lr!)
    - Extract filters from `targets.json` and filter out matching URLs using `grep`
    - Deduplicate them using urldedupe (Thanks ameenmaali!)
    - Use gf and gf-patterns to categorize newly found URLs. (Thanks tomnomnom, 1ndianl33t!)
    - Save each pattern's URLs to its own file for easier navigation.
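
The filter, deduplicate, and categorize steps above can be approximated in a few lines of Python. This is only a sketch: the parameter names in `PATTERNS` are illustrative and are not the real gf-patterns rules, the deduplication key (host, path, and parameter names) is a simplification of what a dedicated tool does, and the `exclude` regex stands in for the filters read from `targets.json`.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Illustrative parameter names only; NOT the actual gf-patterns rules.
PATTERNS = {
    "redirect": re.compile(r"^(url|next|redirect|return)$", re.IGNORECASE),
    "lfi": re.compile(r"^(file|path|include)$", re.IGNORECASE),
    "idor": re.compile(r"^(id|user_id|account)$", re.IGNORECASE),
}


def categorize(urls, exclude=None):
    """Filter, deduplicate, and sort URLs into one list per pattern name."""
    seen = set()
    result = {name: [] for name in PATTERNS}
    for url in urls:
        if exclude and re.search(exclude, url):
            continue  # dropped by the (hypothetical) per-target filter
        parts = urlsplit(url)
        params = [key for key, _ in parse_qsl(parts.query, keep_blank_values=True)]
        # URLs that differ only in parameter values count as duplicates.
        dedupe_key = (parts.netloc, parts.path, tuple(sorted(set(params))))
        if dedupe_key in seen:
            continue
        seen.add(dedupe_key)
        for name, pattern in PATTERNS.items():
            if any(pattern.match(param) for param in params):
                result[name].append(url)
    return result
```

A URL can land in more than one category when it carries several matching parameters, which mirrors the idea of one file per pattern rather than one category per URL.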

- For each target:
    - Collect cloud resources using cloud_enum (Thanks initstring!)
    - Collected resources include:
        - AWS S3 Buckets
        - AWS Apps
        - Azure Websites
        - Azure Databases
        - Azure Containers
        - Azure VMs
        - GCP Firebase Databases
        - GCP App Engine Apps
        - GCP Cloud Functions
        - GCP Storage Buckets
    - Use S3Scanner to bruteforce S3-compatible buckets (using the hostnames collected in Hostnames to seed the wordlist)
    - Collected buckets include:
        - AWS S3 buckets
        - DigitalOcean Spaces
        - DreamHost Buckets
        - Linode Buckets
        - Scaleway Buckets
        - Wasabi Buckets
    - Save each type of resource to its own file for easier navigation.
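
How the hostnames are turned into a bucket wordlist is not spelled out here, so the following is one plausible sketch rather than the workflow's actual logic. The `bucket_candidates` helper is hypothetical; it derives a few name variants from each hostname and keeps only those that satisfy the basic S3 naming constraints (3 to 63 characters, lowercase letters, digits, dots, and hyphens, starting and ending with a letter or digit).

```python
import re

# Basic S3-style bucket name shape: 3-63 chars, alphanumeric at both ends.
VALID_BUCKET = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def bucket_candidates(hostnames):
    """Derive candidate bucket names from hostnames (illustrative heuristic)."""
    candidates = set()
    for host in hostnames:
        host = host.lower().strip(".")
        labels = host.split(".")
        candidates.add(host)              # full hostname
        candidates.add("-".join(labels))  # dots replaced by hyphens
        candidates.update(labels[:-1])    # individual labels, excluding the TLD
    return sorted(name for name in candidates if VALID_BUCKET.match(name))


print(bucket_candidates(["Assets.Example.com"]))
```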

In the end, we deduplicate and merge the results of this workflow execution with the previous executions and push them to this repository.
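
The merge itself can be thought of as an order-preserving union of the old and new result lists. The `merge_results` function below is a hypothetical sketch of that idea, not the code the workflow runs; it also reports which lines are new, which is handy for tracking what changed between executions.

```python
def merge_results(previous, current):
    """Merge two lists of lines, dropping duplicates and keeping first-seen order.

    Returns (merged, added), where added holds lines absent from previous.
    """
    known = set(previous)
    seen = set()
    merged, added = [], []
    for line in list(previous) + list(current):
        if line in seen:
            continue
        seen.add(line)
        merged.append(line)
        if line not in known:
            added.append(line)
    return merged, added
```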

Note: As described, almost everything in this repository is generated automatically. We carefully designed the workflows (and continue to develop them) to ensure the results are as accurate as possible.

You can use trickest-cli (public release soon!) to run this workflow on one or more custom targets using the following command:

trickest execute Inventory --targets targets.json

graph LR

title{Number of<br>Subdomains} --> subdomainsDate1[[Last Commit]] --> subdomainsNum1{{1006656}}

title --> subdomainsDate2[[Currently]] --> subdomainsNum2{{1007133}}

graph TD

title{URL Status Codes} --> 1xx[[1xx]] --> status1xxNum{{0}}

title --> 2xx[[2xx]] --> status2xxNum{{103523}}

title --> 3xx[[3xx]] --> status3xxNum{{1}}

title --> 4xx[[4xx]] --> status4xxNum{{587864}}

title --> 5xx[[5xx]] --> status5xxNum{{0}}

Note: We follow all redirects to get a more accurate representation of each URL - "3xx" counts responses that have no "Location" header.

graph LR

title{5 Most Used<br>Technologies} --> tech1{{Varnish}}

title --> tech2{{Ruby on Rails}}

title --> tech3{{GitHub Pages}}

title --> tech4{{Fastly}}

title --> tech5{{Envoy}}

All contributions/ideas/suggestions are welcome! If you want to add/edit a target/workflow, feel free to create a new ticket via GitHub issues, tweet at us @trick3st, or join the conversation on Discord.

We believe in the value of tinkering. Sign up for a demo on trickest.com to customize this workflow to your use case, get access to many more workflows, or build your own from scratch!