[📃Project Page] [Data] [Paper] [Models] [🎥Video]

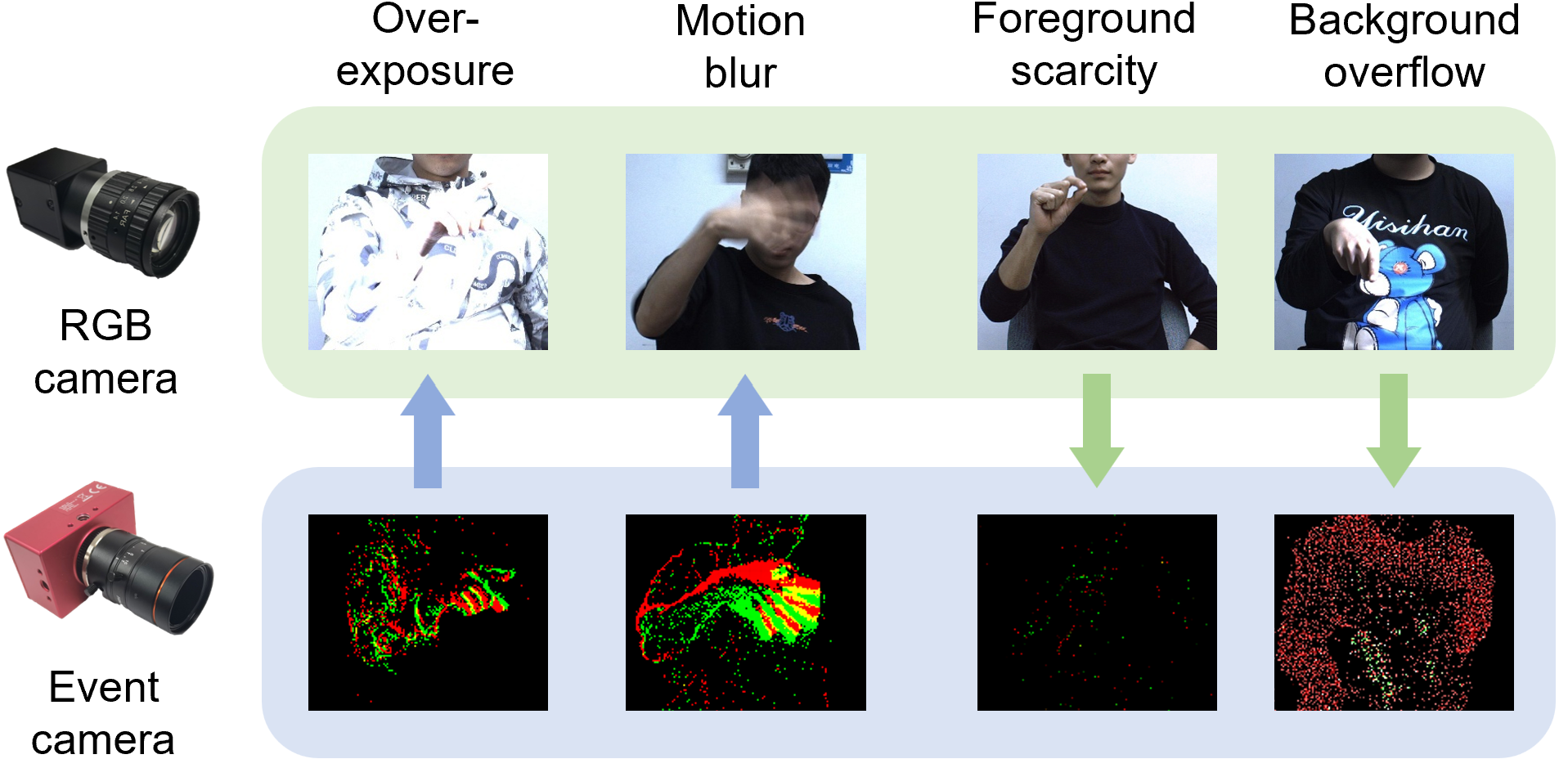

This is the official PyTorch implementation of Complementing Event Streams and RGB Frames for Hand Mesh Reconstruction. This work investigates the feasibility of using events and images for hand mesh reconstruction (HMR) and proposes the first solution to 3D HMR that complements event streams with RGB frames.

# Create a new environment

conda create --name evrgb python=3.9

conda activate evrgb

# Install PyTorch

conda install pytorch=2.1.0 torchvision=0.16.0 pytorch-cuda=11.8 -c pytorch -c nvidia

# Install PyTorch3D

conda install -c fvcore -c iopath -c conda-forge fvcore iopath

conda install pytorch3d -c pytorch3d

# Install requirements

pip install -r requirements.txt

Our codebase is developed on Ubuntu 23.04 with NVIDIA GPUs.
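After installation, a quick sanity check can confirm that the packages installed above are visible from the `evrgb` environment. The following is a minimal sketch, not part of the repository: the helper names `missing_packages` and `report` are hypothetical, and the import names (`torch`, `torchvision`, `pytorch3d`) are assumed to match the conda packages installed above.

```python
import importlib.util
import sys

# Import names assumed to correspond to the packages installed above.
REQUIRED = ["torch", "torchvision", "pytorch3d"]


def missing_packages(names):
    """Return the names that cannot be located in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def report():
    """Print a short summary and return True if every required package is found."""
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages:", ", ".join(missing))
        return False
    # Import torch only after confirming it is installed.
    import torch

    print("torch", torch.__version__, "| CUDA available:", torch.cuda.is_available())
    return True


if __name__ == "__main__":
    report()
```

Run it inside the activated environment. If a package is reported missing, repeat the corresponding install step.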

- Download the EvRealHands dataset from EvHandPose and change the path in src/datasets/dataset.yaml.

- Download MANO models from MANO. Put MANO_LEFT.pkl and MANO_RIGHT.pkl in models/mano.

- Modify the config file in src/configs/config.
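Before launching a run, it can help to confirm that the files named in the steps above are where the code expects them. The sketch below is not part of the repository: the helper `missing_paths` is hypothetical, and it assumes the script is run from the repository root and that the paths are exactly those listed above.

```python
from pathlib import Path

# Paths taken from the preparation steps above, relative to the repository root.
EXPECTED = [
    "src/datasets/dataset.yaml",
    "models/mano/MANO_LEFT.pkl",
    "models/mano/MANO_RIGHT.pkl",
]


def missing_paths(root, relative_paths=EXPECTED):
    """Return the relative paths that are not regular files under `root`."""
    root = Path(root)
    return [p for p in relative_paths if not (root / p).is_file()]


if __name__ == "__main__":
    missing = missing_paths(".")
    if missing:
        print("Missing files:")
        for path in missing:
            print("  ", path)
    else:
        print("All expected files are in place.")
```

This only checks that the files exist. It does not validate the dataset path written inside dataset.yaml.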

python train.py --config <config-path>

- Download the pretrained model for EvImHandNet.

python train.py --config <config-path> --resume_checkpoint <pretrained-model> --config_merge <eval-config-path> --run_eval_only --output_dir <output-dir>

# For example

python train.py --config src/configs/config/evrgbhand.yaml --resume_checkpoint output/EvImHandNet.pth --config_merge src/configs/config/eval_temporal.yaml --run_eval_only --output_dir result/evrgbhand/

@inproceedings{Jiang2024EvRGBHand,

title={Complementing Event Streams and RGB Frames for Hand Mesh Reconstruction},

author={Jiang, Jianping and Zhou, Xinyu and Wang, Bingxuan and Deng, Xiaoming and Xu, Chao and Shi, Boxin},

booktitle={CVPR},

year={2024}

}

@article{jiang2024evhandpose,

author = {Jiang, Jianping and Li, Jiahe and Zhang, Baowen and Deng, Xiaoming and Shi, Boxin},

title = {EvHandPose: Event-based 3D Hand Pose Estimation with Sparse Supervision},

journal = {TPAMI},

year = {2024},

}

- Our code is based on FastMETRO.

- In our experiments, we use the official code of MeshGraphormer, FastMETRO, and EventHands for comparison. We recommend reading these papers to fully understand the method behind EvRGBHand.