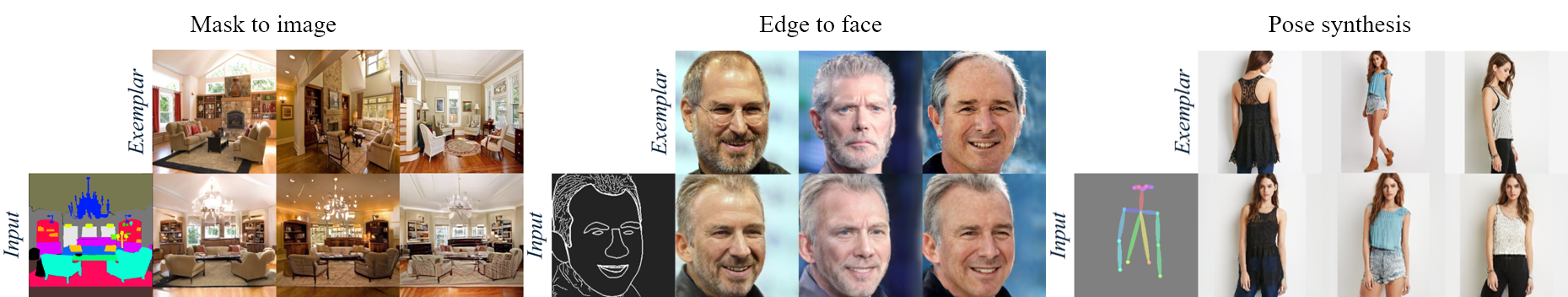

PyTorch implementation of the paper "Cross-domain Correspondence Learning for Exemplar-based Image Translation" (CVPR 2020 oral).

20200525: Training code for DeepFashion is complete. Due to memory limitations, I made the following changes:

- Disable the non-local layer, as its memory cost is infeasible on common hardware. If the non-local layer really operates on 128x128x256 tensors, as the original paper states, then each attention matrix would contain 128^4 elements (1 GB in float32).

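- Shrink the correspondence map size from 64 to 32. This leaves 4x fewer spatial positions and, since a dense correspondence matrix has one entry per pair of positions, about 16x fewer entries in each dense correspondence matrix.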

- Shrink the base number of filters from 64 to 16.

The reduced model barely fits on a 12 GB GTX Titan X card, and its performance will likely not match that of the full model.
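The 1 GB estimate for the non-local layer can be checked with a short calculation. The sketch below assumes float32 storage (4 bytes per element), a batch size of 1, and a single attention matrix with one entry per pair of spatial positions. `pairwise_matrix_bytes` is a hypothetical helper written for illustration, not part of this repo.

```python
def pairwise_matrix_bytes(height, width, bytes_per_element=4):
    """Bytes needed for a dense (H*W) x (H*W) matrix over a feature map.

    Assumes one matrix, batch size 1, float32 by default.
    """
    positions = height * width  # number of spatial positions
    return positions * positions * bytes_per_element


# Non-local attention over a 128 x 128 feature map.
size = pairwise_matrix_bytes(128, 128)
print(f"{size / 2**30:.1f} GiB")  # 1.0 GiB
```

Activations kept for the backward pass and larger batch sizes multiply this figure, so the actual training cost would be higher than this lower bound.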

- Ubuntu/CentOS

- PyTorch 1.0+

- opencv-python

- tqdm

- Prepare dataset

- Implement the network

- Implement the loss functions

- Implement the trainer

- Training on DeepFashion

- Adjust the network architecture to fit on a single 16 GB GPU.

- Training for other tasks

Just follow the routine in the PATN repo.

The pretrained model for human pose transfer task: TO BE RELEASED

Run `python train.py`.

If you find this repo useful for your research, don't forget to cite the original paper:

    @article{Zhang2020CrossdomainCL,
      title={Cross-domain Correspondence Learning for Exemplar-based Image Translation},
      author={Pan Zhang and Bo Zhang and Dong Chen and Lu Yuan and Fang Wen},
      journal={ArXiv},
      year={2020},
      volume={abs/2004.05571}
    }

TODO.