Project Page • Arxiv • Demo • FAQ • Citation


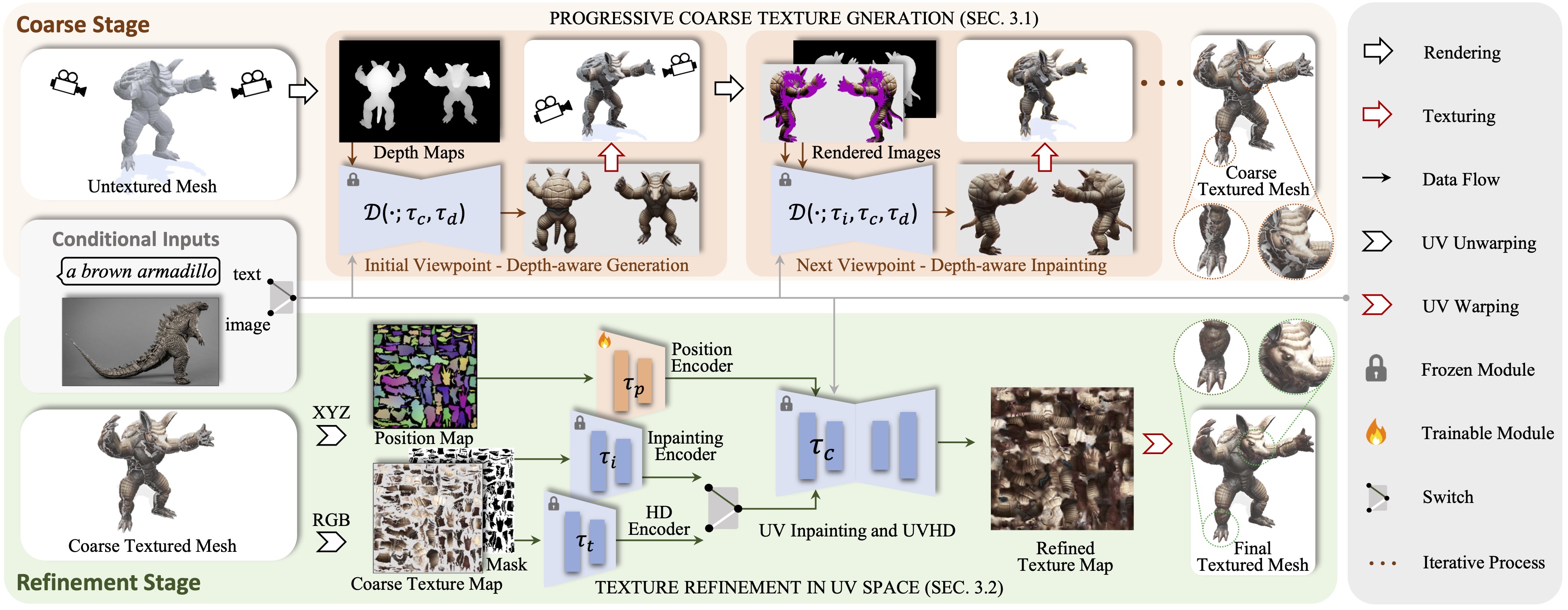

Paint3D is a novel coarse-to-fine generative framework that is capable of producing high-resolution, lighting-less, and diverse 2K UV texture maps for untextured 3D meshes conditioned on text or image inputs.

Technical details

The key challenge Paint3D addresses is generating high-quality textures without embedded illumination information, so that the textures can be relit or re-edited within modern graphics pipelines. To achieve this, our method first leverages a pre-trained depth-aware 2D diffusion model to generate view-conditional images and performs multi-view texture fusion, producing an initial coarse texture map. However, because 2D models can neither fully represent 3D shapes nor disable lighting effects, the coarse texture map exhibits incomplete areas and illumination artifacts. To resolve this, we train separate UV Inpainting and UVHD diffusion models, specialized for the shape-aware refinement of incomplete areas and the removal of illumination artifacts, respectively. Through this coarse-to-fine process, Paint3D produces high-quality 2K UV textures that maintain semantic consistency while remaining lighting-less, advancing the state of the art in texturing 3D objects.
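To make the multi-view texture fusion step more concrete, the snippet below shows one simple way such a fusion could work: a per-texel weighted average of view textures in UV space, plus a mask of texels that no view covered. It is an illustrative sketch only, not Paint3D's implementation. The function name `fuse_view_textures`, the weighting scheme, and the assumption that every view has already been back-projected into UV space are all hypothetical.

```python
import numpy as np


def fuse_view_textures(view_textures, view_weights, eps=1e-8):
    """Blend per-view UV textures into one coarse texture (hypothetical helper).

    view_textures: array of shape (V, H, W, 3), one UV-space texture per view.
    view_weights:  array of shape (V, H, W), 0 where a texel is not visible
                   from that view, larger where the view sees it more reliably.
    Returns (coarse, missing): an (H, W, 3) texture and an (H, W) boolean mask
    that is True for texels no view covered.
    """
    textures = np.asarray(view_textures, dtype=np.float64)
    weights = np.asarray(view_weights, dtype=np.float64)

    total = weights.sum(axis=0)          # (H, W) accumulated weight per texel
    missing = total <= eps               # texels never observed by any view

    # Weighted sum of colors over the view axis.
    blended = (textures * weights[..., None]).sum(axis=0)

    # Normalize only where at least one view contributed.
    coarse = np.zeros_like(blended)
    coarse[~missing] = blended[~missing] / total[~missing][:, None]
    return coarse, missing


if __name__ == "__main__":
    # Two views of a 2x2 texture: view 0 is white, view 1 is black.
    textures = np.zeros((2, 2, 2, 3))
    textures[0] = 1.0
    weights = np.zeros((2, 2, 2))
    weights[0, 0, 0] = 1.0               # texel (0, 0): seen by view 0 only
    weights[0, 0, 1] = 1.0               # texel (0, 1): seen by both views
    weights[1, 0, 1] = 3.0
    coarse, missing = fuse_view_textures(textures, weights)
    print(coarse[..., 0])
    print(missing)
```

In this picture, the `missing` mask corresponds to the incomplete areas that a later inpainting stage would need to fill. Illumination baked into the blended colors is not addressed by averaging at all, which is consistent with the need for a separate refinement stage.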

- [2023/12/21] Upload paper and init project 🔥🔥🔥


Citation

@misc{zeng2023paint3d,
  title={Paint3D: Paint Anything 3D with Lighting-Less Texture Diffusion Models},
  author={Xianfang Zeng and Xin Chen and Zhongqi Qi and Wen Liu and Zibo Zhao and Zhibin Wang and Bin Fu and Yong Liu and Gang Yu},
  year={2023},
  eprint={2312.13913},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

Thanks to TEXTure, Text2Tex, Stable Diffusion, and ControlNet; our code partially borrows from them. Our approach is inspired by MotionGPT, Michelangelo, and DreamFusion.

This code is distributed under the Apache 2.0 license.

Note that our code depends on other libraries, including PyTorch3D and PyTorch Lightning, and uses datasets, each of which has its own license that must also be followed.