monocular feature projection & object reconstruction network

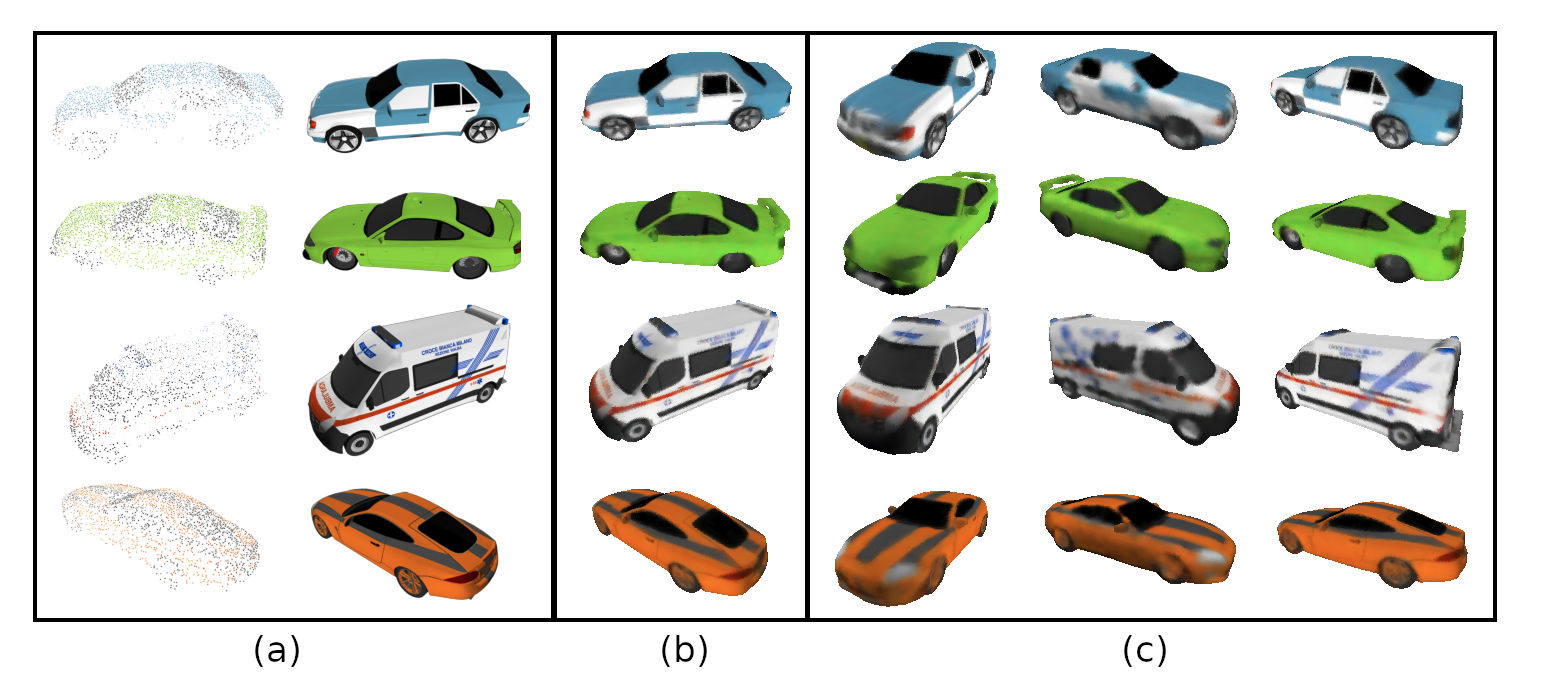

In this work, we train a 3D CNN to predict colored meshes from a single-view input (one image). The network takes (1) camera parameters and (2) an image (a) as input and predicts a fully colored 3D mesh (c), which we re-render from the input view (b) to validate the prediction. See the full report for more information.
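
The README does not spell out how the camera parameters are used. As a rough illustration only, the sketch below assumes a standard pinhole camera and shows how a 3D point could be projected into the input image to look up image features. The function name `project_point` and the matrices `K`, `R`, and `t` are hypothetical and not taken from this repository.

```python
def project_point(point_world, K, R, t):
    """Project one 3D world point to pixel coordinates with a pinhole camera.

    K is a 3x3 intrinsics matrix, R a 3x3 world-to-camera rotation, and t a
    translation, all given as nested lists. Names are illustrative only.
    """
    # World frame -> camera frame: p_cam = R @ p_world + t
    p_cam = [sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
             for i in range(3)]
    if p_cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Camera frame -> homogeneous pixel coordinates: uvw = K @ p_cam
    uvw = [sum(K[i][j] * p_cam[j] for j in range(3)) for i in range(3)]
    # Perspective divide gives the pixel location; depth is returned as well.
    return uvw[0] / uvw[2], uvw[1] / uvw[2], p_cam[2]


# Assumed example camera: focal length 100 px, principal point (64, 64),
# identity rotation, camera placed 2 units in front of the origin.
K = [[100.0, 0.0, 64.0], [0.0, 100.0, 64.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 2.0]
u, v, depth = project_point([0.5, -0.5, 0.0], K, R, t)
```

With these assumed values, the point lands at pixel (89, 39) at depth 2. A real pipeline would typically do this for batches of points on the GPU and sample a feature map at the resulting locations.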

The network can also take an additional point cloud as input (a), together with the camera parameters and the image. (b) is a re-render from the same viewpoint as the input, while (c) shows that the improved, fully colored 3D mesh can be re-rendered from arbitrary views.
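
How the point cloud is fed to the 3D CNN is not described here. One common option, assumed purely for illustration, is to voxelize the points into an occupancy grid. The function `voxelize`, the grid resolution, and the `[-0.5, 0.5]` bounds below are hypothetical choices, not this repository's settings.

```python
def voxelize(points, resolution=4, lo=-0.5, hi=0.5):
    """Return the set of (i, j, k) voxel indices occupied by the points.

    Points are (x, y, z) tuples; the grid covers the cube [lo, hi]^3.
    """
    occupied = set()
    scale = resolution / (hi - lo)
    for p in points:
        if any(c < lo or c > hi for c in p):
            continue  # ignore points outside the grid bounds
        # Map each coordinate to a cell index; clamp so c == hi stays in range.
        idx = tuple(min(int((c - lo) * scale), resolution - 1) for c in p)
        occupied.add(idx)
    return occupied


# Toy cloud: three points inside the unit cube, one far outside it.
cloud = [(-0.5, -0.5, -0.5), (0.0, 0.0, 0.0), (0.49, 0.49, 0.49), (2.0, 0.0, 0.0)]
grid = voxelize(cloud)
```

A sparse set of indices keeps the sketch dependency-free; a dense tensor of shape `(resolution, resolution, resolution)` would be the usual input format for a 3D convolution.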

The colored mesh generated from a single view looks like this:

Ambulance.mp4

A Linux system with CUDA 9.0 is required.

Install the dependencies with conda using the 3d-recon_env.yml file:

conda env create -f 3d-recon_env.yml

conda activate 3d-recon

Clone the repository and navigate into it in the terminal.

Details for data-processing and instructions will follow soon.

In this work, we used the cars subset of the ShapeNet dataset.

- IF-Nets by [Chibane et al. 2020]

- Occupancy Networks by [Mescheder et al. CVPR'19]

- PIFu by [Shunsuke Saito et al. ICCV'19]

- DISN by [Qiangeng Xu et al. NeurIPS'19]