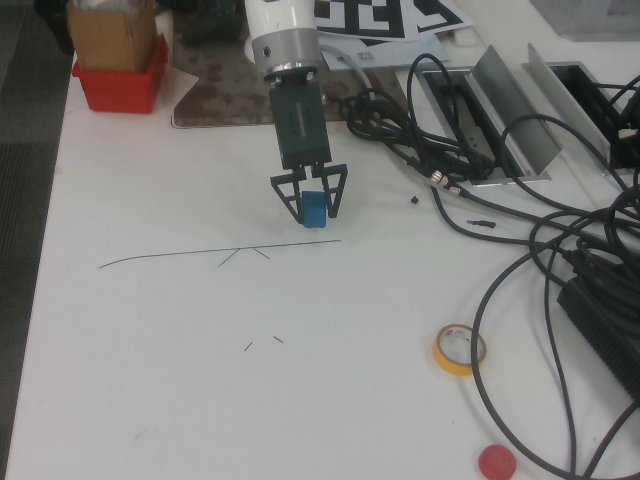

This is a grasping project based on the JAKA robotic arm. We use ROS1 together with OpenCV (HSV thresholding or GRCNN) to grasp objects in an eye-to-hand (eye-out-of-hand) setup. The project includes code for calculating the hand-eye matrix, object recognition, and grasping, as well as RT-X testing and RT-1 training.
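The HSV branch of object recognition boils down to keeping the pixels whose HSV value falls inside a color range and taking their centroid as the object's image position. The sketch below is a minimal, dependency-free illustration of that idea, not the project's code. It assumes the image is a list of rows of `(R, G, B)` tuples in 0-255 and uses OpenCV's 8-bit HSV ranges (H in 0-179, S and V in 0-255). In an OpenCV pipeline the same work would normally be done with `cv2.cvtColor`, `cv2.inRange` and `cv2.moments`. The helper names and thresholds are hypothetical.

```python
import colorsys
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def to_opencv_hsv(pixel: Pixel) -> Tuple[int, int, int]:
    """Convert an RGB pixel to HSV using OpenCV's 8-bit ranges (H 0-179, S/V 0-255)."""
    r, g, b = (c / 255.0 for c in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # OpenCV stores hue as degrees / 2, so a full turn maps to 0-179.
    return int(round(h * 180)) % 180, int(round(s * 255)), int(round(v * 255))


def hsv_centroid(image: List[List[Pixel]],
                 lower: Sequence[int],
                 upper: Sequence[int]) -> Optional[Tuple[float, float]]:
    """Return the (u, v) centroid of pixels whose HSV lies in [lower, upper], or None."""
    sum_u = sum_v = count = 0
    for v_idx, row in enumerate(image):
        for u_idx, pixel in enumerate(row):
            hsv = to_opencv_hsv(pixel)
            # Keep the pixel only if every channel is inside its range (like cv2.inRange).
            if all(lo <= x <= hi for x, lo, hi in zip(hsv, lower, upper)):
                sum_u += u_idx
                sum_v += v_idx
                count += 1
    if count == 0:
        return None  # nothing of this color in view
    return sum_u / count, sum_v / count
```

For example, a 3x3 image with red pixels in the right-hand column of the first two rows gives a centroid of `(2.0, 0.5)` for the red range `(0, 100, 100)`-`(10, 255, 255)`. The resulting pixel is what later gets back-projected into 3D.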

Create the workspace:

```bash
mkdir -p ~/catkin_ws/src
cd ~/catkin_ws/src
catkin_init_workspace
```

Compile the workspace:

```bash
cd ~/catkin_ws/
catkin_make
```

Set environment variables:

```bash
echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc
```

Make the above configuration take effect in the current terminal:

```bash
source ~/.bashrc
```

Hand-eye calibration:

- images with a chessboard

- robot poses in a txt file (xyz in mm, rpy in degrees)

- camera intrinsic parameters

- eye-hand transformations
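Before a robot pose (xyz in mm, rpy in degrees) can be fed to a hand-eye solver, it is usually converted to a 4x4 homogeneous transform. The sketch below assumes one whitespace-separated `x y z roll pitch yaw` line per pose and the common fixed-axis convention R = Rz(yaw)·Ry(pitch)·Rx(roll). That Euler convention is an assumption; check which one the JAKA controller reports before relying on it. `pose_to_matrix` is a hypothetical helper, and the translation is left in millimetres.

```python
import math
from typing import List

Matrix = List[List[float]]


def pose_to_matrix(line: str) -> Matrix:
    """Parse 'x y z roll pitch yaw' (mm, degrees) into a 4x4 homogeneous transform.

    Assumes R = Rz(yaw) @ Ry(pitch) @ Rx(roll); translation stays in mm.
    """
    x, y, z, roll, pitch, yaw = (float(t) for t in line.split())
    a, b, c = (math.radians(angle) for angle in (roll, pitch, yaw))
    ca, sa = math.cos(a), math.sin(a)
    cb, sb = math.cos(b), math.sin(b)
    cc, sc = math.cos(c), math.sin(c)
    return [
        [cc * cb, cc * sb * sa - sc * ca, cc * sb * ca + sc * sa, x],
        [sc * cb, sc * sb * sa + cc * ca, sc * sb * ca - cc * sa, y],
        [-sb, cb * sa, cb * ca, z],
        [0.0, 0.0, 0.0, 1.0],
    ]
```

Reading a whole pose file is then a matter of `[pose_to_matrix(l) for l in open(path) if l.strip()]`. Whether the solver expects millimetres or metres should match the units of the chessboard square size.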

More details: https://github.com/ZiqiChai/simplified_eye_hand_calibration
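In an eye-to-hand setup the calibration relates the camera frame to the robot base frame. A detected pixel can therefore be turned into a grasp position in two steps: back-project it with the pinhole intrinsics, then apply the camera-to-base transform. This is a minimal sketch with several assumptions. A depth value (in mm) is available for the pixel. Lens distortion is ignored. `T_base_cam` maps camera coordinates to base coordinates in the same units; invert it if your solver returns the opposite direction. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Point = Tuple[float, float, float]


def deproject(u: float, v: float, depth: float,
              fx: float, fy: float, cx: float, cy: float) -> Point:
    """Back-project pixel (u, v) at the given depth into camera coordinates (pinhole model)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


def camera_to_base(point_cam: Sequence[float], T_base_cam: Matrix) -> Point:
    """Apply a 4x4 homogeneous transform (base <- camera) to a 3D point."""
    p = (*point_cam, 1.0)  # homogeneous coordinates
    x, y, z = (sum(T_base_cam[r][k] * p[k] for k in range(4)) for r in range(3))
    return x, y, z
```

For instance, with `fx = fy = 600`, `cx = 320`, `cy = 240`, the pixel `(380, 240)` at 600 mm maps to `(60.0, 0.0, 600)` in the camera frame. A pure translation of `(100, -50, 200)` then puts it at `(160.0, -50.0, 800.0)` in the base frame.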

Launch the demo:

```bash
roslaunch jaka_driver my_demo.launch
```

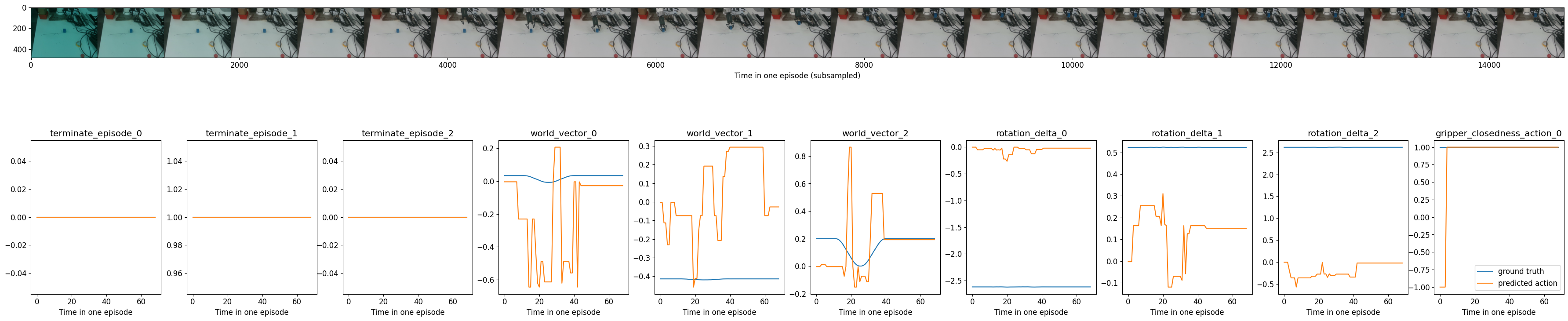

RT-1 training:

```bash
cd RT-1
python -m robotics_transformer.single_train_demo
```

Reference code: https://github.com/ZiqiChai/simplified_eye_hand_calibration
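RT-1 is trained on discretized actions. In the RT-1 paper, each action dimension is split into 256 uniform bins between that dimension's bounds. The sketch below shows such a uniform tokenization and its inverse on its own. It is not the `robotics_transformer` implementation, and the function names and the bounds in the usage note are made-up values for illustration.

```python
from typing import List, Sequence

NUM_BINS = 256  # bins per action dimension, as described for RT-1


def tokenize(action: Sequence[float], low: Sequence[float], high: Sequence[float],
             num_bins: int = NUM_BINS) -> List[int]:
    """Map each continuous action dimension to an integer bin in [0, num_bins - 1]."""
    tokens = []
    for a, lo, hi in zip(action, low, high):
        a = min(max(a, lo), hi)  # clip to the dimension's bounds first
        tokens.append(int(round((a - lo) / (hi - lo) * (num_bins - 1))))
    return tokens


def detokenize(tokens: Sequence[int], low: Sequence[float], high: Sequence[float],
               num_bins: int = NUM_BINS) -> List[float]:
    """Map bin indices back to continuous values (the inverse of tokenize, up to quantization)."""
    return [lo + t / (num_bins - 1) * (hi - lo) for t, lo, hi in zip(tokens, low, high)]
```

With hypothetical bounds of `[-1, 1]` per dimension, the round-trip error of `detokenize(tokenize(...))` is at most half a bin width, `2 / 255 / 2`. This is the price paid for treating action prediction as classification over tokens.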