Our goal is to reproduce a quantum memory on the

Team members: Marco Ballarin, Alice Pagano, Marco Trenti

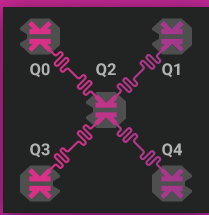

- How the carousel works

- The quantum math behind the carousel

- Decoding the sequence: machine learning agent

- Aaand... the results!

Do you remember those old festivals in which you would mount your fiery horse, and spin around while your parents were watching you? Well, here we go again (but quantum)! We have four mighty horses. Each time you buy a ticket you and your friends can mount up to three horses, and after each ride you must switch horses. Just to make sure you don't hurt the horses' feelings. So, the pattern of the ridden horses is:

$0,1,2$; $1,2,3$; $2,3,0$; $3,0,1$

At the end of each ride the occupied horses neigh. The one ridden in the middle actually neighs twice! You know, first he answers the horse in front of him, and then the horse behind him. The free horse instead remains silent.

To me and you, all the horse noises seem the same. Really the same! But not to Bob, our Parity checker (yes, parity checker is a specific horse-racing term). He knows how to speak with horses, and by hearing two pairs of neighs (first+second, second+third) he is able to understand if one of the horses is in distress! However, Bob cannot stop the carousel. He will write down who is in distress, and give him an extra carrot that evening! Furthermore, by keeping one of the horses silent Bob is always able to understand who is in distress! It would be a mess to hear from all the horses at once. In particular, he would not be able to understand which horses are in distress if there are at least two.

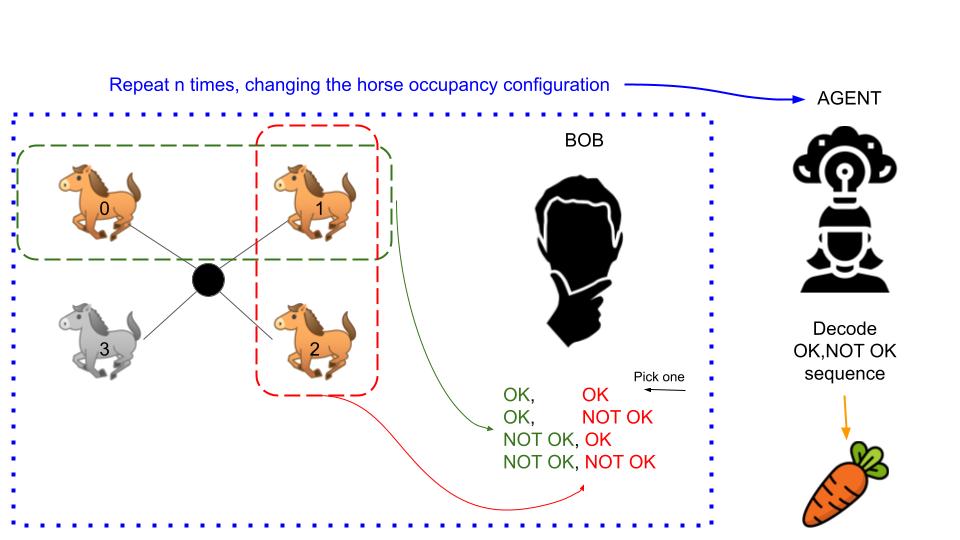

Bob is good at understanding horses. But he is simple-minded: he needs the help of his friend Agent to decode the pattern and understand who needs carrots, and how many!

So, what happens in a given ride is:

- The horses $i-1$, $i$, $i+1$ are mounted;

- After the ride, $i$ calls and $i-1$ answers. Then $i$ calls again but $i+1$ answers. $i+2$ stays silent, since it has no riders;

- Bob writes down the horses' conversation: OK or NOT OK;

- The carousel restarts, with the riders changing places.

At the end of the day, Agent decodes the (OK, NOT OK) sequence and distributes the carrots accordingly. If the number of carrots received is correct, we are all happy!

NOTICE: no horses were harmed in this process

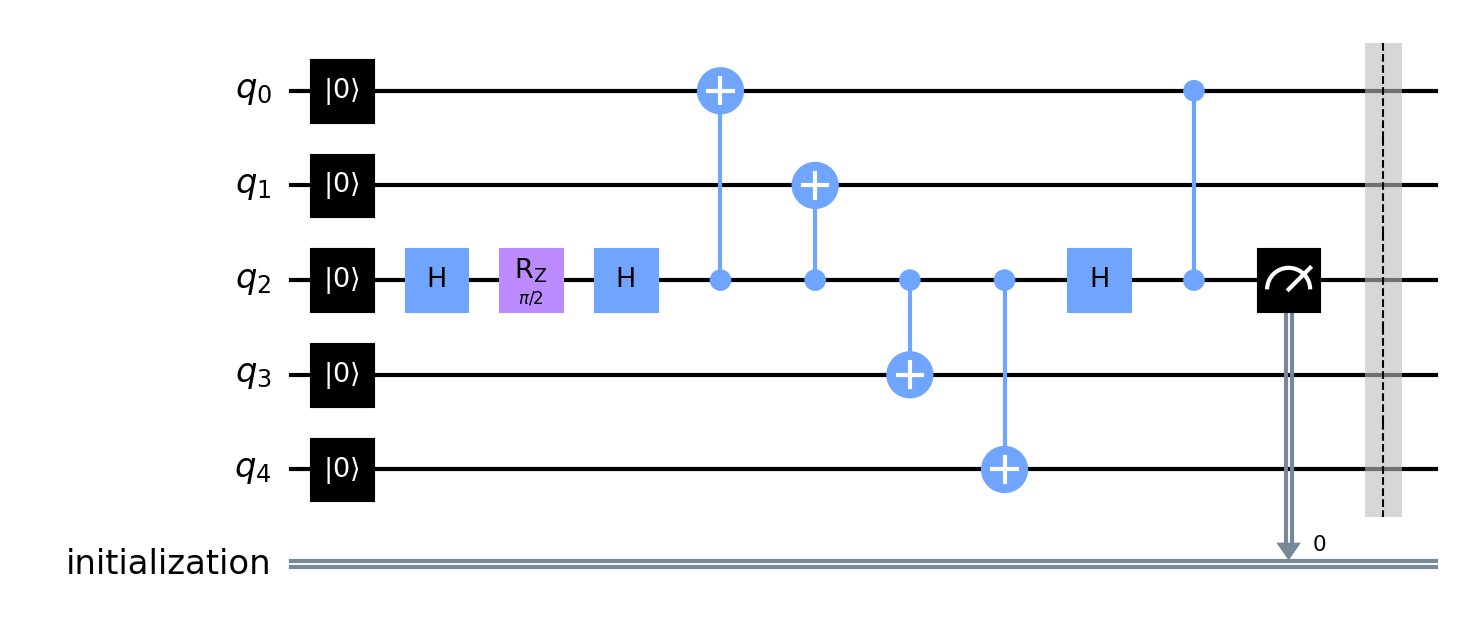

The project workflow is the following:

- Encode a general state

  $$|\psi\rangle= \frac{1}{2}\big[ (1+e^{i\theta})|0\rangle + (1-e^{i\theta})|1\rangle \big]$$

  in the device, using $4$ physical qubits

- Repeatedly measure the state of the inner qubit, performing parity checks on 3 of the logical qubits. The set of three physical qubits spins around

- Save every measurement taken during the simulation.

- Use a machine learning model to infer what happened and which actions are needed to restore the correct state

- Listen to fancy quasi-quantum music!
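As a quick sanity check of the state in the first step, the sketch below evaluates its two amplitudes for a given $\theta$ and the corresponding measurement probabilities. The function names `encoded_amplitudes` and `outcome_probabilities` are ours, chosen for illustration; they are not part of the project code.

```python
import cmath

def encoded_amplitudes(theta):
    """Amplitudes of |0> and |1> in 1/2 [(1 + e^{i theta})|0> + (1 - e^{i theta})|1>]."""
    phase = cmath.exp(1j * theta)
    return (1 + phase) / 2, (1 - phase) / 2

def outcome_probabilities(theta):
    """Probabilities of measuring 0 and 1 on the (unencoded) state."""
    a0, a1 = encoded_amplitudes(theta)
    return abs(a0) ** 2, abs(a1) ** 2

for theta in (0.0, cmath.pi / 2, cmath.pi):
    p0, p1 = outcome_probabilities(theta)
    print(f"theta={theta:.3f}  P(0)={p0:.3f}  P(1)={p1:.3f}  sum={p0 + p1:.3f}")
```

The two probabilities always add up to one, so the state is normalized for every $\theta$; $\theta=0$ gives $|0\rangle$ and $\theta=\pi$ gives $|1\rangle$.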

We encode, as an example, the state:

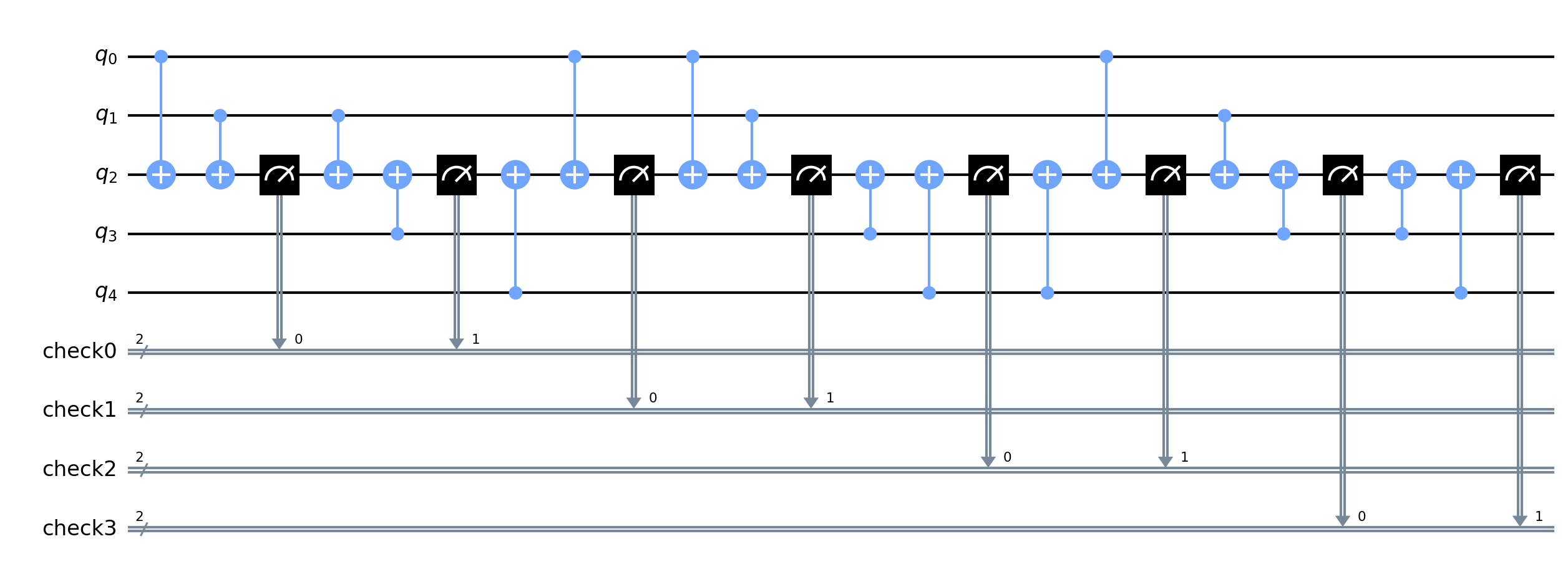

Then we apply the parity checks, also called syndrome detection. We treat it as a "spinning"

- $q_0 q_1$, $q_1 q_3$.

- $q_1 q_3$, $q_3 q_4$.

- $q_3 q_4$, $q_4 q_0$.

- $q_4 q_0$, $q_0 q_1$.
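The four steps above follow a cyclic pattern, which can be sketched in a few lines of Python. This is only an illustration: the names `QUBITS` and `parity_check_pairs` are ours, and we assume the schedule simply repeats after the fourth ride.

```python
QUBITS = [0, 1, 3, 4]  # physical qubits appearing in the checks listed above

def parity_check_pairs(ride):
    """Return the two qubit pairs checked during the given ride (0-based)."""
    n = len(QUBITS)
    # Three consecutive qubits on the carousel, wrapping around after the last one
    left, middle, right = (QUBITS[(ride + k) % n] for k in range(3))
    return (left, middle), (middle, right)

for ride in range(4):
    print(ride, parity_check_pairs(ride))
```

Each ride shares one pair with the previous ride, which is what makes the checks "spin" around the four qubits.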

This spinning version of the

| Qubit with error | | | | |
|---|---|---|---|---|
| 0 | 1 | n.a. | n.a. | |
| 1 | 0 | n.a. | n.a. | |
| 1 | 1 | n.a. | n.a. | |
| n.a. | 0 | 1 | n.a. | |
| n.a. | 1 | 0 | n.a. | |
| n.a. | 1 | 1 | n.a. | |
| n.a. | n.a. | 0 | 1 | |
| n.a. | n.a. | 1 | 0 | |
| n.a. | n.a. | 1 | 1 | |
| 1 | n.a. | n.a. | 0 | |
| 0 | n.a. | n.a. | 1 | |
| 1 | n.a. | n.a. | 1 | |

It may seem a little strange, but the figure will clarify it!
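For reference, here is a minimal sketch of the textbook decoding rule for two parity checks that share a middle qubit. We assume at most one bit flip among the three checked qubits, and that 1 means the check reported NOT OK; the function name `locate_single_error` is hypothetical and not taken from the project code.

```python
def locate_single_error(pairs, syndrome):
    """Return the qubit hit by a single bit flip, or None if both checks pass.

    pairs    -- ((a, b), (b, c)), the two parity checks of one ride
    syndrome -- (s_ab, s_bc), with 1 meaning "NOT OK"
    """
    (a, b), (b2, c) = pairs
    if b != b2:
        raise ValueError("the two checks must share the middle qubit")
    # A flip on a trips only the first check, on c only the second,
    # and on the shared qubit b it trips both.
    lookup = {(0, 0): None, (1, 0): a, (1, 1): b, (0, 1): c}
    return lookup[tuple(syndrome)]

print(locate_single_error(((0, 1), (1, 3)), (1, 1)))  # 1
```

With two or more flips among the three qubits the rule points to the wrong qubit, which is why a single ride cannot resolve multiple errors.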

However, we stress that we don't do any classically controlled operation to update the results. It will be our machine learning model that, given the error pattern, will understand the correct classical post-processing!

To apply the machine learning model, first of all we have to define a task the ML model can understand and tackle. So, our task is to deduce the error landscape given the series of parity check measurements.

We define as error landscape a matrix with the qubit index on the rows (

| Qubit | | | | |
|---|---|---|---|---|

The generation of the dataset is a bit tricky. We cannot use real-device or simulator data, since in both cases we wouldn't have access to the error landscape, but only to the parity checks. For this reason, we prepare the dataset using a correct simulation where, before each parity check, we apply an $X=\begin{pmatrix}0 & 1 \\ 1 & 0\end{pmatrix}$ gate independently to each qubit with a probability
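The idea can be sketched classically by tracking only the bit flips, instead of running a full quantum simulation. This is an illustration under our own assumptions, not the project's generator: the number of rides, the flip probability `p`, and the names `N_QUBITS` and `generate_sample` are all hypothetical, and positions 0 to 3 stand for the four physical qubits in carousel order.

```python
import random

N_QUBITS = 4  # positions 0..3 stand for the four physical qubits, in carousel order

def generate_sample(n_rides, p, rng):
    """Return (landscape, syndromes) for one simulated run.

    landscape[q][t] is 1 if an X error hit position q just before ride t.
    syndromes[t] holds the two parity-check outcomes of ride t.
    """
    flipped = [0] * N_QUBITS  # accumulated bit flips, never corrected on the fly
    landscape = [[0] * n_rides for _ in range(N_QUBITS)]
    syndromes = []
    for t in range(n_rides):
        for q in range(N_QUBITS):
            if rng.random() < p:  # independent X error on each qubit
                landscape[q][t] = 1
                flipped[q] ^= 1
        # The three checked positions move by one at every ride
        a, b, c = t % N_QUBITS, (t + 1) % N_QUBITS, (t + 2) % N_QUBITS
        syndromes.append((flipped[a] ^ flipped[b], flipped[b] ^ flipped[c]))
    return landscape, syndromes

rng = random.Random(0)
dataset = [generate_sample(n_rides=5, p=0.05, rng=rng) for _ in range(1000)]
```

Each sample pairs the inputs the model sees (the syndromes) with the target it should predict (the landscape), which is exactly the information a real device would not give us.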

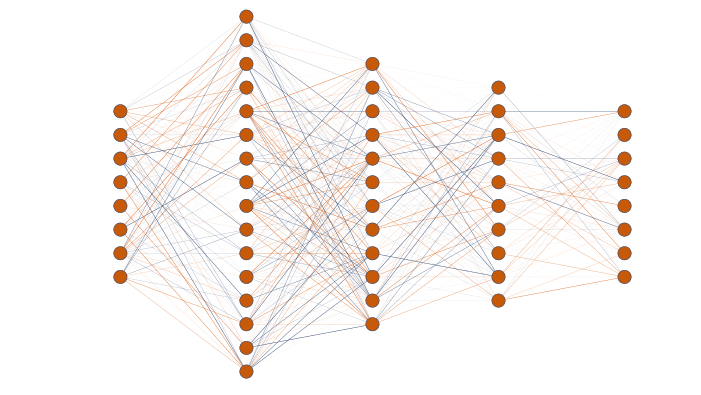

To tackle the problem we use a deep feedforward neural network, built with the TensorFlow package. The image above is a faithful representation of the model used. We report here all the specifics:

| Layer (type) | Output Shape | Param # |
|---|---|---|
| normalization | (None, 10) | 21 |
| dense (Dense) | (None, 64) | 704 |
| dense_1 (Dense) | (None, 20) | 1300 |
| dense_2 (Dense) | (None, 10) | 210 |
| dense_3 (Dense) | (None, 10) | 110 |

We recall that in TensorFlow language a dense layer is a fully connected layer of artificial neurons. In the example we show a possible set of hyperparameters and the training procedure.
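The parameter counts in the table can be checked by hand: a dense layer with $n_{in}$ inputs and $n_{out}$ units has $n_{in} \cdot n_{out}$ weights plus $n_{out}$ biases. The short sketch below redoes this arithmetic in plain Python (the helper name `dense_params` is ours):

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_inputs * n_units + n_units

layer_sizes = [10, 64, 20, 10, 10]  # input width followed by the four dense layers
counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(counts)       # [704, 1300, 210, 110]
print(sum(counts))  # 2324
```

The 21 parameters of the normalization layer are consistent with a per-feature mean and variance for the 10 inputs, plus one counter; these are not trained by gradient descent.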

We think that a naive feedforward neural network is not the best architecture to analyze our data. The input data are a time series, where each value actually depends on the previous one, and the target state is also a time series. This data structure suggests that the most suitable architecture would be a recurrent neural network, for example an LSTM (Long Short-Term Memory). However, these models are much more complex than feedforward networks. For this reason we decided to leave the exploration of RNNs for further studies.

Given the error landscape we must be able to postprocess the data. This (at least) is very simple!

It is sufficient to compute the classical parity
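A minimal sketch of this step, assuming the parity is taken along the time axis of the error landscape: an even number of $X$ errors on a qubit cancels out, an odd number leaves it flipped. The function name `correct_shot` and the data layout are our assumptions.

```python
def correct_shot(measured_bits, landscape):
    """Undo the net bit flip on each qubit.

    measured_bits -- list of 0/1, one entry per qubit
    landscape     -- landscape[q][t] = 1 if an X error hit qubit q at step t
    """
    corrected = []
    for bit, row in zip(measured_bits, landscape):
        net_flip = sum(row) % 2  # an even number of X errors cancels out
        corrected.append(bit ^ net_flip)
    return corrected

landscape = [[1, 0, 0], [0, 0, 0], [1, 1, 0], [0, 0, 0]]
print(correct_shot([1, 0, 0, 0], landscape))  # [0, 0, 0, 0]
```

No quantum operation is involved: the correction is applied to the measured bit string only.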

Furthermore, we apply another (brutal) post-processing to the state. We know that the correct states are
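One way to picture a distance-based correction of this kind is to replace each measured bit string with the closest valid one, using the Hamming distance. The sketch below is only a guess at the general idea: the valid states `"0000"` and `"1111"` are placeholders we picked for illustration, and the tie-breaking rule is our own choice.

```python
def hamming_distance(a, b):
    """Number of positions in which two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))

def snap_to_valid(shot, valid_states):
    """Return the valid state closest to shot (ties go to the earliest entry)."""
    return min(valid_states, key=lambda s: hamming_distance(shot, s))

VALID_STATES = ["0000", "1111"]  # placeholder codewords, assumed for this example
for shot in ("0100", "1101", "0011"):
    print(shot, "->", snap_to_valid(shot, VALID_STATES))
```

Note that a shot at equal distance from two valid states, such as `"0011"` here, cannot be resolved reliably; the sketch simply picks the first candidate.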

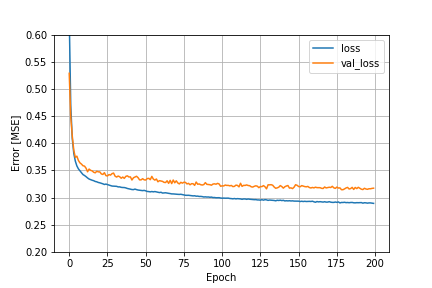

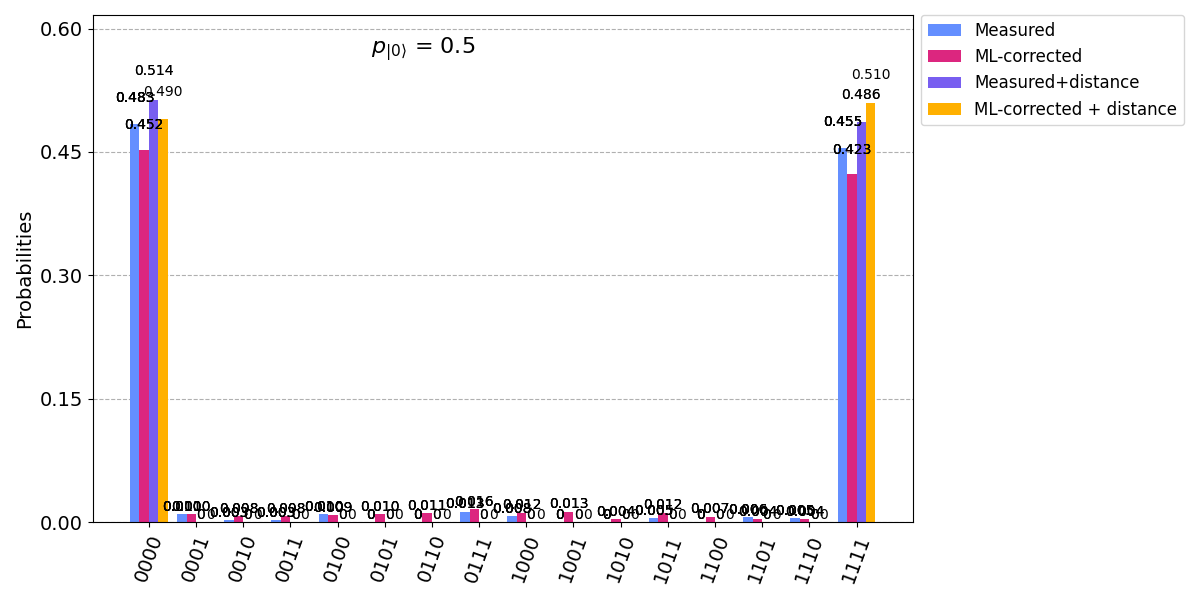

We have finally arrived at the end of this journey. First, we can show that the machine learning agent is able to correct (sometimes) the errors that occur. The loss function of the optimization is shown below:

As we see, the network is able to learn something from the dataset. However, given the behaviour of the training loss (which is smaller than the validation loss) we can expect some overfitting.

The tough part arrives when we compute the accuracy on the test set: the outcome is between

- Measured, the measured shots without any post-processing;

- ML-corrected, the measurement results after the application of our ML agent;

- Measured+distance, the measured shots to which we apply the post-processing with the distance defined in the above sections;

- ML-corrected+distance, the measured shots to which we apply both the ML correction and the distance correction.

As we notice, all the approaches give comparable results: we do not see any significant difference between the counts. The state still displays a high fidelity with the expected output, even if we apply several layers of measurements that should make the execution time approach the relaxation time.

With circuit_animation/circuit_music.py you can make Python play a nice jingle. If you like, you could relate the "NOISE" to the actual measured circuit errors.

The presentation can be found here.

- marcob says: It was a very exciting experience! I'm based in Italy, and so have a 6 hour lag w.r.t. the ET timezone. However, the sheer excitement and the desire to overcome the challenge let me continue to work until 3 am, and wake up at 7! I'm a PhD and I'm currently working on QECC, so the QuTech challenge was really perfect for me. It was not trivial at all to come up with an interesting idea, but it was definitely fun! Btw, it was also my first hackathon ever.

- alice says: It was really fun! This is the first time I have participated in a hackathon and I really enjoyed this experience! I think that the challenge was hard, but we worked in complete synergy to develop our best idea and to have also a lot of fun!

- marcot says: Participating in the iQuHack hackathon was a really enjoyable opportunity. I came in without any previous knowledge about Quantum Computing and, as some sort of Hadamard applied to my knowledge, now my understanding of Quantum Computing is in a superposition between |know nothing> and |know everything>. Challenges like these provide the perfect measurement! I felt that the time was a bit tight for the Q-error correction challenge but “Oh, I get by with a little help from my friend”ly and expert teammates.