I wish I could speak many languages. Wait. Actually I do. But only 4 or 5 languages, with limited proficiency. Instead, can I create a voice model that can copy any voice in any language? Possibly! A while ago, my colleague Dabi and I opened a simple voice conversion project. Building on it, I extended the idea to work across languages. I found it very challenging with my limited knowledge. Unfortunately, the results I have so far are not good, but hopefully they will be helpful to some people.

February 2018

Author: Kyubyong Park (kbpark.linguist@gmail.com)

Version: 1.0

- NumPy >= 1.11.1

- TensorFlow >= 1.3

- librosa

- tqdm

- scipy

- Training 1: TIMIT

- Training 2: CMU ARCTIC SLT

- Conversion Sample Files: 50LANGUAGES MP3 audio files

- Train 1: MFCCs of TIMIT speakers -> Triphone PPGs

- Train 2: MFCCs of ARCTIC speaker -> Triphone PPGs -> linear spectrogram

- Convert: MFCCs of any speaker -> Triphone PPGs -> linear spectrogram -> (Griffin-Lim) -> wav file
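The final arrow in the Convert line, Griffin-Lim, turns a magnitude-only spectrogram back into a waveform by iteratively estimating the missing phase. The following is a minimal NumPy-only sketch of that idea, not the code used in this repository. The helper names (`stft`, `istft`, `griffin_lim`), the FFT size, the hop length, and the iteration count are all assumptions chosen for illustration.

```python
import numpy as np

def stft(x, n_fft=512, hop=128):
    """Hann-windowed STFT with zero padding of n_fft // 2 on both sides."""
    window = np.hanning(n_fft)
    padded = np.pad(x, n_fft // 2)
    n_frames = 1 + (len(padded) - n_fft) // hop
    frames = np.stack([padded[i * hop:i * hop + n_fft] * window
                       for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)  # shape: (n_frames, 1 + n_fft // 2)

def istft(spec, n_fft=512, hop=128):
    """Least-squares inverse: windowed overlap-add, then trim the padding."""
    window = np.hanning(n_fft)
    n_frames = spec.shape[0]
    length = n_fft + hop * (n_frames - 1)
    signal = np.zeros(length)
    norm = np.zeros(length)
    frames = np.fft.irfft(spec, n=n_fft, axis=1)
    for i in range(n_frames):
        start = i * hop
        signal[start:start + n_fft] += frames[i] * window
        norm[start:start + n_fft] += window ** 2
    signal /= np.maximum(norm, 1e-8)
    return signal[n_fft // 2:length - n_fft // 2]

def griffin_lim(magnitude, n_iter=50, n_fft=512, hop=128, seed=0):
    """Estimate a waveform whose STFT magnitude approximates `magnitude`."""
    rng = np.random.default_rng(seed)
    # Start from random phase, then alternate between time and frequency domains.
    angles = np.exp(2j * np.pi * rng.random(magnitude.shape))
    for _ in range(n_iter):
        signal = istft(magnitude * angles, n_fft, hop)
        rebuilt = stft(signal, n_fft, hop)
        # Keep only the phase of the re-analysed signal; the magnitude stays fixed.
        angles = rebuilt / np.maximum(np.abs(rebuilt), 1e-8)
    return istft(magnitude * angles, n_fft, hop)

# Toy input: a two-tone signal stands in for a predicted linear spectrogram.
sample_rate = 16000  # assumed sampling rate, for illustration only
t = np.arange(4096) / sample_rate
tone = np.sin(2 * np.pi * 440 * t) + 0.5 * np.sin(2 * np.pi * 880 * t)
target = np.abs(stft(tone))
recovered = griffin_lim(target)
```

Because the phase is only estimated, the recovered waveform is an approximation of the original, which is one reason vocoded output tends to sound somewhat metallic.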

(To see what PPGs are, consult this)
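As a rough illustration, a phonetic posteriorgram (PPG) is a frames-by-classes matrix in which each row is a probability distribution over phonetic classes for one frame. The sketch below uses a random linear layer as a stand-in for the trained phoneme recognition network. The function name `posteriorgram`, the frame count, the MFCC dimension, and the number of classes are hypothetical and are not taken from this repository.

```python
import numpy as np

def posteriorgram(logits):
    """Row-wise softmax: one probability distribution per frame."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
n_frames, n_mfcc, n_classes = 100, 40, 64  # hypothetical sizes

mfccs = rng.standard_normal((n_frames, n_mfcc))     # stand-in for real MFCC features
weights = rng.standard_normal((n_mfcc, n_classes))  # stand-in for the trained network
ppgs = posteriorgram(mfccs @ weights)               # shape: (n_frames, n_classes)

# The most likely class per frame gives a rough frame-level transcription.
best_class = ppgs.argmax(axis=1)
```

Since the PPG describes what is being said rather than who is saying it, the same representation can be fed to a synthesis model trained on a different speaker.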

- STEP 0. Prepare datasets

- STEP 1. Run `python train1.py` for the phoneme recognition model.

- STEP 2. Run `python train2.py` for the speech synthesis model.

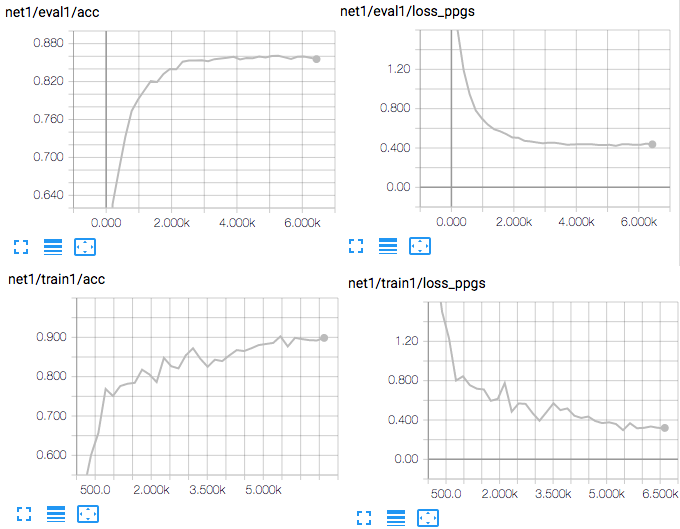

- Training 1

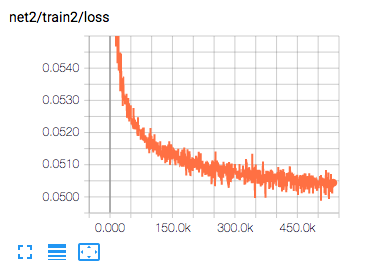

- Training 2

- Run `python convert.py` and check the generated samples in the `50lang-output` folder.

- Check here and compare original speech samples in 16 languages and their converted counterparts.

- Don't expect too much!

- L. Sun, S. Kang, K. Li, and H. Meng, “Personalized, cross-lingual TTS using phonetic posteriorgrams,” in Proc. INTERSPEECH, San Francisco, U.S.A., Sep. 2016, pp. 322–326.

- Dabi Ahn & Kyubyong Park, Voice Conversion with Non-Parallel Data. https://github.com/andabi/deep-voice-conversion