We describe the essential steps for training an ASR model for Egyptian Arabic dialects, namely:

- Data preprocessing

- Building tokenizers

- Training from scratch

- Inference and evaluation

To use a dataset with the NeMo toolkit, we first need to convert the `.csv` files to `.json` manifests.
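As a rough illustration of what the conversion produces: a NeMo ASR manifest is a JSON Lines file in which each line describes one utterance with `audio_filepath`, `duration` (in seconds), and `text` fields. The sketch below is not the project's `convert_csv_json.py`. It assumes WAV audio and hypothetical CSV columns named `audio` (file name, including extension) and `transcript`; the function name `csv_to_manifest` is also hypothetical.

```python
import csv
import json
import os
import wave


def csv_to_manifest(input_csv, audio_folder, output_json,
                    audio_col="audio", text_col="transcript"):
    """Convert a CSV of (audio file, transcript) rows into a NeMo-style
    JSON Lines manifest. Column names are hypothetical defaults."""
    count = 0
    with open(input_csv, newline="", encoding="utf-8") as f_in, \
            open(output_json, "w", encoding="utf-8") as f_out:
        for row in csv.DictReader(f_in):
            audio_path = os.path.join(audio_folder, row[audio_col])
            # Duration in seconds, read from the WAV header (assumes .wav audio).
            with wave.open(audio_path, "rb") as w:
                duration = w.getnframes() / w.getframerate()
            entry = {
                "audio_filepath": audio_path,
                "duration": duration,
                "text": row[text_col],
            }
            # ensure_ascii=False keeps the Arabic text readable in the manifest.
            f_out.write(json.dumps(entry, ensure_ascii=False) + "\n")
            count += 1
    return count
```

Writing one JSON object per line (rather than a single JSON array) matches the line-oriented format NeMo's data loaders read.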

```bash
python convert_csv_json.py \
    input_csv='train.csv' \
    audio_folder='train' \
    output_json='train.json'
```

The preprocessing script used in this project cleans and normalizes the Arabic transcripts to prepare them for tokenization and model training.

The script performs the following operations:

- Remove Special Characters: It removes all punctuation and special characters except Arabic characters and spaces.

- Replace Diacritics: It replaces various forms of Arabic diacritics with their base characters.

- Remove Out-of-Vocabulary Characters: It removes any characters that are not Arabic characters or spaces.

The script is built from the following helper functions.

- Purpose: Writes the processed manifest data to a new file.
- Arguments:
  - `data` (list): List of processed manifest data.
  - `original_path` (str): The original file path of the manifest.
  - `output_dir` (str): Directory to save the processed manifest. Default is `/kaggle/working/`.
- Returns: Path to the new processed manifest file.
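A minimal sketch of a writer with these arguments, assuming the manifest is written back in JSON Lines form. The function name `write_processed_manifest` and the `_processed` file-name suffix are hypothetical; only the arguments and the default `output_dir` come from the description above.

```python
import json
import os


def write_processed_manifest(data, original_path, output_dir="/kaggle/working/"):
    """Write processed manifest entries to a new JSON Lines file.

    Hypothetical name and naming scheme: 'train.json' -> 'train_processed.json'.
    """
    base, ext = os.path.splitext(os.path.basename(original_path))
    new_path = os.path.join(output_dir, f"{base}_processed{ext}")
    os.makedirs(output_dir, exist_ok=True)
    with open(new_path, "w", encoding="utf-8") as f:
        for entry in data:
            # One JSON object per line; keep Arabic characters unescaped.
            f.write(json.dumps(entry, ensure_ascii=False) + "\n")
    return new_path
```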

- Purpose: Calculates the character set from the manifest data.
- Arguments:
  - `manifest_data` (list): List of manifest data.
- Returns: Character set with counts, as a `defaultdict`.
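Counting characters is a quick way to spot stray punctuation, digits, or Latin letters that still need cleaning. A minimal sketch, assuming each manifest entry stores its transcript under `text`; the name `get_charset` is hypothetical.

```python
from collections import defaultdict


def get_charset(manifest_data):
    """Count how often each character appears in the 'text' field."""
    charset = defaultdict(int)
    for entry in manifest_data:
        for ch in entry["text"]:
            charset[ch] += 1
    return charset
```

Sorting the result by count (for example `sorted(charset.items(), key=lambda kv: kv[1])`) puts the rare, usually unwanted, characters first.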

- Purpose: Removes special characters from the text, keeping only Arabic characters and spaces.
- Arguments:
  - `data` (dict): Dictionary containing text data.
- Returns: Updated dictionary with cleaned text.
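One plausible way to strip special characters is a punctuation blacklist applied with a regular expression. This is a sketch, not the original script: the name `remove_special_characters` and the exact punctuation list (ASCII punctuation plus the Arabic comma, semicolon, question mark, and guillemets) are assumptions.

```python
import re
import string

# Assumed blacklist: ASCII punctuation plus common Arabic punctuation
# (U+060C comma, U+061B semicolon, U+061F question mark, guillemets).
ARABIC_PUNCTUATION = "\u060C\u061B\u061F\u00AB\u00BB"
SPECIAL_CHARS = re.compile(
    "[" + re.escape(string.punctuation + ARABIC_PUNCTUATION) + "]")


def remove_special_characters(data):
    text = SPECIAL_CHARS.sub("", data["text"])
    # Collapse the runs of whitespace left behind by removed characters.
    data["text"] = re.sub(r"\s+", " ", text).strip()
    return data
```

A blacklist only removes what it lists, so it is usually paired with a whitelist pass such as the out-of-vocabulary filter.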

- Purpose: Replaces Arabic diacritics with their base characters.
- Arguments:
  - `data` (dict): Dictionary containing text data.
- Returns: Updated dictionary with replaced diacritics.
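A sketch of one common normalization of this kind, assuming the script maps alef forms carrying hamza or madda to bare alef and drops short-vowel marks (tashkeel, U+064B to U+0652). The original script's exact mapping table is not shown, so both the table and the name `replace_diacritics` are hypothetical.

```python
import re

# Hypothetical table: alef variants are mapped to bare alef (U+0627).
BASE_CHAR_MAP = str.maketrans({
    "\u0623": "\u0627",  # alef with hamza above -> alef
    "\u0625": "\u0627",  # alef with hamza below -> alef
    "\u0622": "\u0627",  # alef with madda -> alef
})
# Short-vowel marks (fathatan through sukun) are removed outright.
TASHKEEL = re.compile(r"[\u064B-\u0652]")


def replace_diacritics(data):
    text = data["text"].translate(BASE_CHAR_MAP)
    data["text"] = TASHKEEL.sub("", text)
    return data
```

Normalizing spelling variants this way shrinks the character set the tokenizer must cover, at the cost of discarding distinctions the model can no longer output.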

- Purpose: Removes out-of-vocabulary characters, keeping only Arabic characters and spaces.
- Arguments:
  - `data` (dict): Dictionary containing text data.
- Returns: Updated dictionary with removed out-of-vocabulary characters.
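A whitelist filter keeps only characters from an explicit allowed set, which catches anything a punctuation blacklist misses (digits, Latin letters, emoji). A minimal sketch, assuming the allowed set is the basic Arabic letter block U+0621 to U+064A plus the space character; the range and the name `remove_oov_characters` are assumptions.

```python
# Assumed vocabulary: Arabic letters U+0621..U+064A (inclusive) plus space.
# Note that U+0640 (tatweel) falls inside this range and is therefore kept.
ALLOWED_CHARS = {chr(cp) for cp in range(0x0621, 0x064B)} | {" "}


def remove_oov_characters(data):
    kept = "".join(ch for ch in data["text"] if ch in ALLOWED_CHARS)
    # Collapse the double spaces created by removed characters.
    data["text"] = " ".join(kept.split())
    return data
```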

- Purpose: Applies a list of preprocessing functions to the manifest data.
- Arguments:
  - `manifest` (list): List of manifest data.
  - `preprocessors` (list): List of preprocessing functions to apply.
- Returns: Processed manifest data.
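The pipeline can be sketched as a loop that applies each preprocessor, in order, to every entry. The names `apply_preprocessors`, `strip_text`, and `lowercase_text` are hypothetical; the two example preprocessors only stand in for the Arabic-specific ones described above.

```python
from typing import Any, Callable, Dict, List

Entry = Dict[str, Any]


def apply_preprocessors(manifest: List[Entry],
                        preprocessors: List[Callable[[Entry], Entry]]) -> List[Entry]:
    """Run each preprocessor over every manifest entry, in list order."""
    for processor in preprocessors:
        manifest = [processor(entry) for entry in manifest]
    return manifest


def strip_text(entry: Entry) -> Entry:  # hypothetical example preprocessor
    entry["text"] = entry["text"].strip()
    return entry


def lowercase_text(entry: Entry) -> Entry:  # hypothetical example preprocessor
    entry["text"] = entry["text"].lower()
    return entry
```

Order matters: character filtering should typically run before computing the character set, so the charset reflects the cleaned text. Note that these preprocessors modify the entry dictionaries in place as well as returning them.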

To run the data cleaning script:

```bash
python Data_preprocessing.py
```

We used the NVIDIA NeMo Framework to build our tokenizer and train our model.

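Subword tokenization is well suited to ASR because it offers several advantages over word-based tokenization: the output vocabulary stays small and fixed, words never seen during training can still be spelled out as sequences of known subword units, and output sequences are shorter than with character-level tokens.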
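To illustrate how a unigram subword model handles an unseen word: under a unigram language model (the `spe_type="unigram"` option), a word is split into the sequence of pieces with the highest total log-probability, found by Viterbi search. The sketch below uses made-up piece probabilities and omits SentencePiece details such as the `▁` word-boundary marker and vocabulary training; `viterbi_segment` is a hypothetical name.

```python
import math


def viterbi_segment(word, piece_logprobs):
    """Split `word` into the subword sequence with the highest total
    log-probability under a unigram model (pieces scored independently)."""
    n = len(word)
    best = [-math.inf] * (n + 1)  # best[i]: best score for word[:i]
    back = [0] * (n + 1)          # back[i]: start index of the last piece
    best[0] = 0.0
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in piece_logprobs and best[start] > -math.inf:
                score = best[start] + piece_logprobs[piece]
                if score > best[end]:
                    best[end], back[end] = score, start
    if best[n] == -math.inf:
        return None  # the vocabulary cannot cover this word
    pieces, end = [], n
    while end > 0:
        pieces.append(word[back[end]:end])
        end = back[end]
    return pieces[::-1]
```

With the toy vocabulary `{"un": -2.0, "seen": -3.0, "u": -4.0, "n": -4.0, "s": -4.0, "e": -4.0}`, the word `unseen` is split into `["un", "seen"]` (score -5.0) rather than into single characters, even though `unseen` itself is not in the vocabulary.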

python /kaggle/working/process_asr_text_tokenizer.py \

--manifest="/kaggle/input/dataset-ja/train.json" \

--data_root="/kaggle/working/tokenizers" \

--vocab_size=64 \

--tokenizer="spe" \

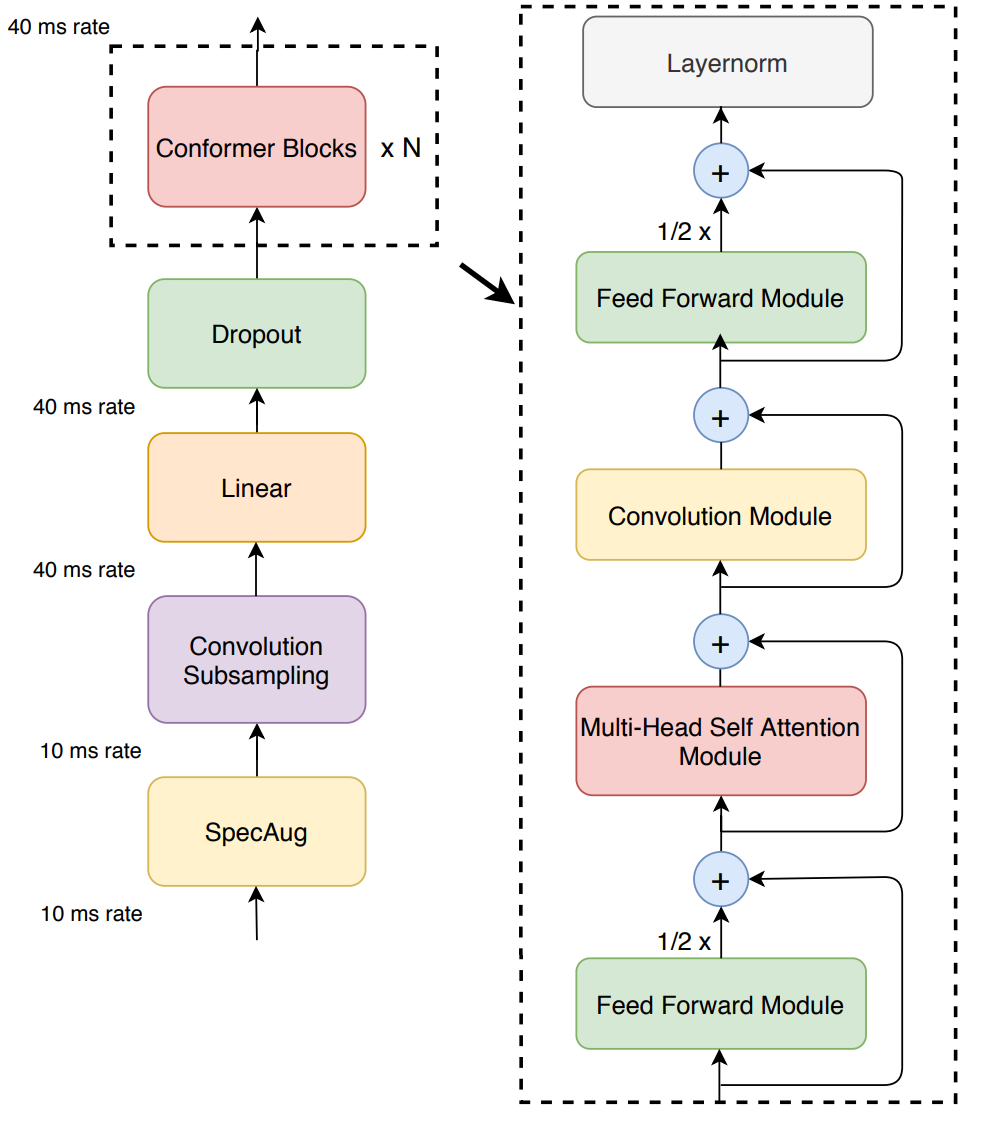

--spe_type="unigram"We used the architecture of the Conformer-CTC. Conformer-CTC is a CTC-based variant of the Conformer model. It uses CTC loss and decoding instead of RNNT/Transducer loss, making it a non-autoregressive model. The model combines self-attention and convolution modules for efficient learning.
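Because CTC predicts one token (or a special blank) per audio frame, the simplest decoding is greedy: take the most likely token in each frame, merge consecutive repeats, then drop blanks. A minimal sketch with a toy character vocabulary; `ctc_greedy_decode` is a hypothetical name, and with subword tokens the pieces would additionally need their word-boundary markers turned into spaces.

```python
from typing import List, Sequence


def ctc_greedy_decode(frame_logits: Sequence[Sequence[float]],
                      vocabulary: List[str], blank_id: int) -> str:
    """Greedy CTC decoding: argmax per frame, merge repeats, remove blanks."""
    best_ids = [max(range(len(frame)), key=frame.__getitem__)
                for frame in frame_logits]
    tokens = []
    prev = None
    for idx in best_ids:
        # A repeat is merged only if it is not separated by a blank,
        # so "a <blank> a" still yields "aa".
        if idx != prev and idx != blank_id:
            tokens.append(vocabulary[idx])
        prev = idx
    return "".join(tokens)
```

For frame-wise best tokens `a a <blank> a b b <blank>`, the output is `aab`: the first two `a` frames merge, and the blank keeps the third `a` separate.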

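To train the model:

```bash
python /kaggle/working/speech_to_text_ctc_bpe.py
```

To transcribe the test set with the trained model:

```bash
python /kaggle/working/transcribe_speech.py \
    model_path="/kaggle/working/ASR_squad.nemo" \
    dataset_manifest="/kaggle/input/dataset-ja/test.json"
```

To compute the word error rate (WER) on the manifest with predictions (`only_score_manifest=True` scores the existing predictions without transcribing the audio again):

```bash
python /kaggle/working/configs/speech_to_text_eval.py \
    dataset_manifest="/kaggle/working/test_with_predictions.json" \
    use_cer=False \
    only_score_manifest=True
```

To compute the character error rate (CER) instead, set `use_cer=True`:

```bash
python /kaggle/working/configs/speech_to_text_eval.py \
    dataset_manifest="/kaggle/working/test_with_predictions.json" \
    use_cer=True \
    only_score_manifest=True
```

Finally, run the evaluation script:

```bash
python /kaggle/working/evaluation.py
```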
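WER and CER are both edit-distance metrics: the number of substitutions, deletions, and insertions needed to turn the hypothesis into the reference, divided by the number of reference words (WER) or characters (CER). The sketch below computes corpus-level rates from plain strings; it is an illustration, not NeMo's implementation, and the names `edit_distance` and `error_rate` are hypothetical.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (all edits cost 1)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (r != h)))   # substitution or match
        prev = curr
    return prev[-1]


def error_rate(references, hypotheses, use_cer=False):
    """Corpus-level WER (or CER if use_cer=True): total edits / total reference units."""
    edits = units = 0
    for ref, hyp in zip(references, hypotheses):
        ref_units = list(ref) if use_cer else ref.split()
        hyp_units = list(hyp) if use_cer else hyp.split()
        edits += edit_distance(ref_units, hyp_units)
        units += len(ref_units)
    if units == 0:
        raise ValueError("references contain no words or characters")
    return edits / units
```

For example, the reference `a b c d` against the hypothesis `a x c` has one substitution and one deletion, giving a WER of 2/4 = 0.5. With a predictions manifest, the references and hypotheses would come from the ground-truth and predicted text fields of each line.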