This repository contains my solutions to six Deep Generative Models assignments, each exploring a different topic in generative modeling and neural networks. The notebooks evaluate or implement key models: CLIP, LSTMs, VAEs, GANs, flow-based models, DDPMs, and EBMs. The solutions work with real-world datasets, including MNIST, CelebA, and a custom captcha dataset.

- Homework 1: OpenAI CLIP Model (Food-101)

- Homework 2: Autoregressive Generative Models and Variational Autoencoders

- Homework 3: GANs and Flow-Based Models

- Homework 4: Denoising Diffusion and Energy-Based Models

In the Homework 1 notebook, I evaluate the OpenAI CLIP model on the Food-101 dataset. The focus is on understanding how CLIP maps text and images into a shared embedding space, where matching image-text pairs end up close together.
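To illustrate what "a shared embedding space" means in practice, here is a minimal sketch of zero-shot classification by cosine similarity. It is not the notebook's code: the embeddings are random stand-ins for real encoder outputs, and `zero_shot_probs` and the temperature value are hypothetical names and settings chosen for illustration.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale each vector to unit length so dot products equal cosine similarities."""
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)

def zero_shot_probs(image_emb, text_emb, temperature=100.0):
    """Return a (num_images, num_prompts) matrix of softmax probabilities.

    image_emb: (num_images, d) image embeddings
    text_emb:  (num_prompts, d) embeddings of one text prompt per class
    temperature: logit scale (assumed value, for illustration only)
    """
    logits = temperature * l2_normalize(image_emb) @ l2_normalize(text_emb).T
    logits -= logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)

# Toy stand-ins for encoder outputs (random vectors, NOT real CLIP embeddings).
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(3, 8))                              # 3 class prompts
image_emb = text_emb[[2, 0]] + 0.05 * rng.normal(size=(2, 8))   # images near prompts 2 and 0
probs = zero_shot_probs(image_emb, text_emb)
pred = probs.argmax(axis=1)  # index of the best-matching prompt per image
```

With a real model, `image_emb` and `text_emb` would come from the image and text encoders, and each prompt would describe one class.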

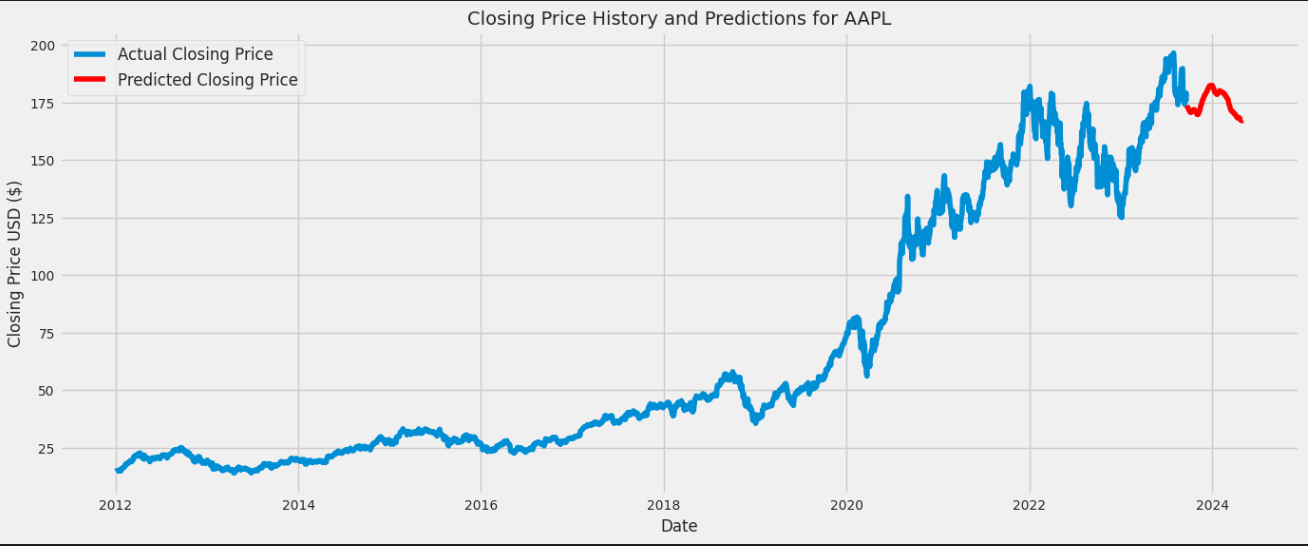

In the first part of Homework 2, I implemented an LSTM-based autoregressive generative model that predicts stock prices from historical stock data. The model captures temporal dependencies by factorizing the joint probability of the time series with the chain rule of probability, so each value is predicted conditioned on the values before it.
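Written out for a series $x_1, \dots, x_T$, the chain-rule factorization takes the form below. The second expression is an assumption about how an LSTM typically realizes it, with a hidden state $h_{t-1}$ summarizing the history; the symbols are illustrative and not taken from the notebook.

$$
p(x_1, \dots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_1, \dots, x_{t-1}),
\qquad
p(x_t \mid x_1, \dots, x_{t-1}) \approx p_\theta(x_t \mid h_{t-1})
$$

Under this view, training maximizes $\sum_{t=1}^{T} \log p_\theta(x_t \mid h_{t-1})$, which reduces to a mean-squared-error loss when each conditional is modeled as a Gaussian with fixed variance.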

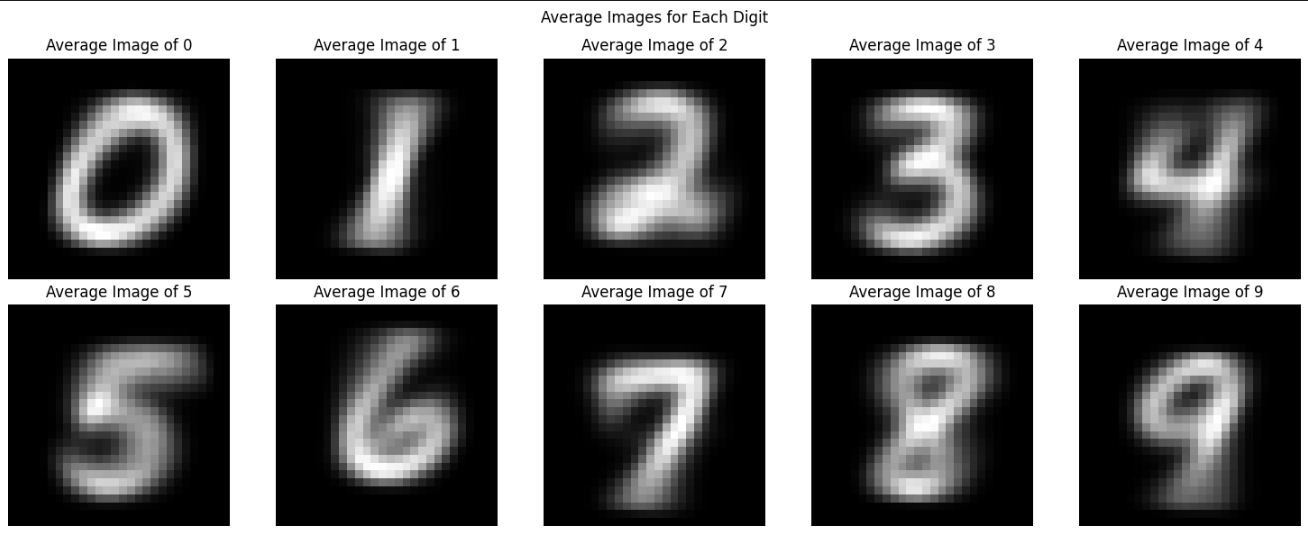

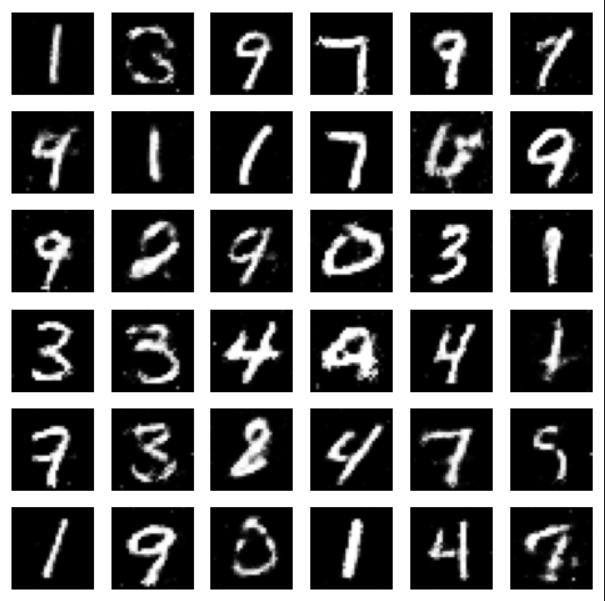

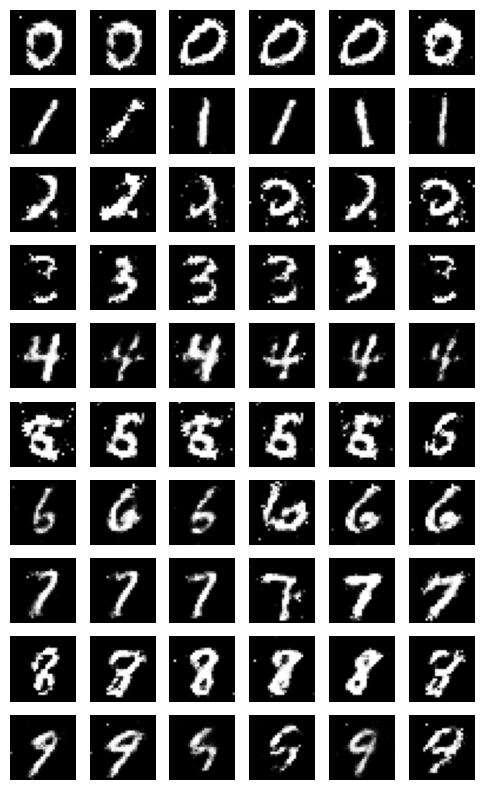

In the second part of Homework 2, I implemented a Variational Autoencoder (VAE) to generate new handwritten digits using the MNIST dataset. The VAE learns a latent representation of the digits and generates new ones by sampling latent vectors and decoding them.
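Two pieces sit at the core of most VAE implementations: the reparameterization trick and the KL term of the loss. The sketch below shows both in NumPy, assuming a diagonal Gaussian encoder and a standard normal prior; the function names and the toy encoder outputs are hypothetical and not taken from the notebook.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I), keeping z differentiable in mu and sigma."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ), one value per example."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=1)

rng = np.random.default_rng(0)
mu = np.zeros((4, 2))        # hypothetical encoder means (batch of 4, 2-D latent)
log_var = np.zeros((4, 2))   # hypothetical encoder log-variances
z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)   # zero here: the posterior equals the prior
```

The full training loss would add a reconstruction term (for MNIST, commonly binary cross-entropy between the decoded image and the input) to this KL term.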

The first part of Homework 3 includes implementations of Conditional GANs and Wasserstein GANs. The goal was to stabilize adversarial training while still generating high-quality images.
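As a rough illustration of why the Wasserstein formulation differs from a standard GAN, the sketch below shows the critic and generator losses on raw (unbounded) critic scores. It assumes the weight-clipping variant from the original WGAN; the notebook may use a different Lipschitz constraint such as a gradient penalty, and all names and values here are hypothetical.

```python
import numpy as np

def critic_loss(real_scores, fake_scores):
    """Wasserstein critic loss: minimizing it widens the gap between real and fake scores."""
    return fake_scores.mean() - real_scores.mean()

def generator_loss(fake_scores):
    """Generator loss: minimizing it raises the critic's score on generated samples."""
    return -fake_scores.mean()

def clip_weights(weights, c=0.01):
    """Clip every critic weight to [-c, c], a crude way to keep the critic Lipschitz."""
    return [np.clip(w, -c, c) for w in weights]

real_scores = np.array([1.0, 2.0, 3.0])    # hypothetical critic outputs on real images
fake_scores = np.array([-1.0, 0.0, 1.0])   # hypothetical critic outputs on generated images
d_loss = critic_loss(real_scores, fake_scores)
g_loss = generator_loss(fake_scores)
clipped = clip_weights([np.array([-0.5, 0.005, 0.2])])
```

Because the critic outputs scores rather than probabilities, there is no sigmoid or log in either loss, which is one reason the gradients tend to stay informative during training.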

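In the second part of Homework 3, I applied normalizing flows to enhance a VAE for more realistic image generation on the CelebA dataset. Normalizing flows are invertible transformations with tractable Jacobian determinants, so the density of a transformed sample can be computed exactly. Applied to the VAE's latent distribution, they make the latent representation more expressive than a plain Gaussian.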
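The sketch below shows one common flow layer used with VAEs, a planar flow, together with the log-determinant needed to track densities. This is an assumption for illustration: the notebook may use a different flow family, and the parameter values are arbitrary.

```python
import numpy as np

def planar_flow(z, u, w, b):
    """Apply f(z) = z + u * tanh(w.z + b) to a batch and return (f(z), log|det J|).

    z: (batch, d) latent samples; u, w: (d,) flow parameters; b: scalar bias.
    Invertibility requires w.u >= -1, which the caller must ensure.
    """
    a = z @ w + b                                   # (batch,) pre-activations
    f_z = z + np.outer(np.tanh(a), u)               # (batch, d) transformed samples
    psi = (1.0 - np.tanh(a) ** 2)[:, None] * w      # (batch, d) derivative of tanh times w
    log_det = np.log(np.abs(1.0 + psi @ u))         # (batch,) log|det df/dz|
    return f_z, log_det

z = np.array([[0.0, 0.0], [1.0, -1.0]])   # hypothetical latent samples
u = np.array([0.5, 0.0])                  # arbitrary illustrative parameters
w = np.array([1.0, 0.0])
b = 0.0
f_z, log_det = planar_flow(z, u, w, b)
```

Stacking K such layers gives a density via the change-of-variables rule: the log-density of the final sample is the log-density of the initial sample minus the sum of the per-layer `log_det` values.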

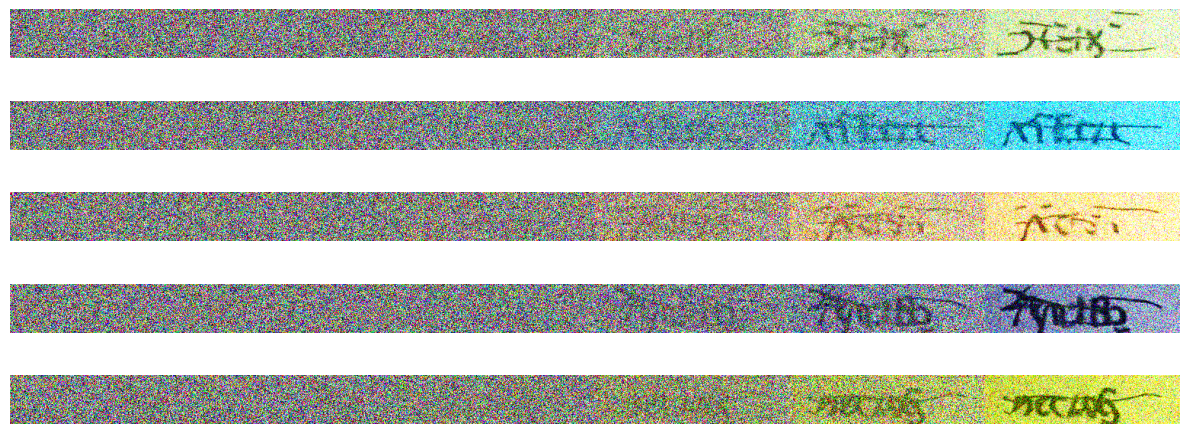

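In the first part of Homework 4, I implemented a Denoising Diffusion Probabilistic Model (DDPM) to generate new captcha images. A forward process progressively adds Gaussian noise to the training images, and the model learns to reverse this process, so new images are generated by denoising step by step from pure noise. The captcha dataset consists of RGB images with corresponding text labels.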
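The forward (noising) half of a DDPM has a closed form, which the sketch below illustrates. It assumes a linear beta schedule with commonly used default values and a made-up image size; none of these settings are taken from the notebook.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule and the cumulative product of alphas (alpha_bar)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alpha_bars = np.cumprod(1.0 - betas)
    return betas, alpha_bars

def q_sample(x0, t, alpha_bars, rng):
    """Sample x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) in a single step."""
    noise = rng.standard_normal(x0.shape)
    abar = alpha_bars[t]
    x_t = np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * noise
    return x_t, noise

rng = np.random.default_rng(0)
betas, alpha_bars = make_schedule()
x0 = rng.uniform(-1.0, 1.0, size=(3, 32, 96))   # hypothetical RGB captcha scaled to [-1, 1]
x_t, noise = q_sample(x0, t=500, alpha_bars=alpha_bars, rng=rng)
# In training, a network would take (x_t, t) and be fit to predict `noise` with an MSE loss.
```

Sampling then runs the learned reverse process from pure Gaussian noise back to step 0.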

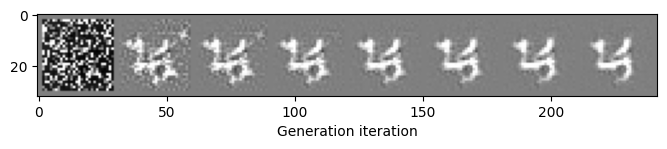

In the second part of Homework 4, I implemented an Energy-Based Model (EBM) trained with contrastive divergence on the MNIST dataset. The model learns an energy function that assigns low energy to training data and higher energy to other inputs, and new samples are drawn from its low-energy regions.
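The sketch below shows the contrastive-divergence idea on a toy problem. It is only an illustration under stated assumptions: a quadratic energy with a single parameter vector `theta` stands in for a neural network, negative samples come from short-run Langevin dynamics (the notebook's sampler may differ), and all names and hyperparameters are hypothetical.

```python
import numpy as np

def energy(x, theta):
    """Toy quadratic energy: low near theta, high far away (stand-in for a neural network)."""
    return 0.5 * np.sum((x - theta) ** 2, axis=1)

def energy_grad_x(x, theta):
    """Gradient of the toy energy with respect to x."""
    return x - theta

def langevin_sample(x, theta, rng, steps=20, step_size=0.1):
    """Short-run Langevin dynamics: move down the energy gradient and add Gaussian noise."""
    for _ in range(steps):
        noise = rng.standard_normal(x.shape)
        x = x - 0.5 * step_size * energy_grad_x(x, theta) + np.sqrt(step_size) * noise
    return x

def cd_loss(x_real, x_fake, theta):
    """Contrastive-divergence objective: lower energy on data, raise it on model samples."""
    return energy(x_real, theta).mean() - energy(x_fake, theta).mean()

rng = np.random.default_rng(0)
theta = np.zeros(2)
x_real = rng.normal(loc=2.0, scale=0.1, size=(64, 2))   # hypothetical data near (2, 2)
x_fake = langevin_sample(rng.standard_normal((64, 2)), theta, rng)
loss = cd_loss(x_real, x_fake, theta)

# One gradient step on theta; for this energy, d(loss)/d(theta) = mean(x_fake) - mean(x_real).
grad_theta = x_fake.mean(axis=0) - x_real.mean(axis=0)
theta_new = theta - 0.1 * grad_theta   # moves the low-energy region toward the data
```

Repeating the sample-then-update loop pulls the model's low-energy region onto the data, at which point real and generated samples have similar energy and the loss approaches zero.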