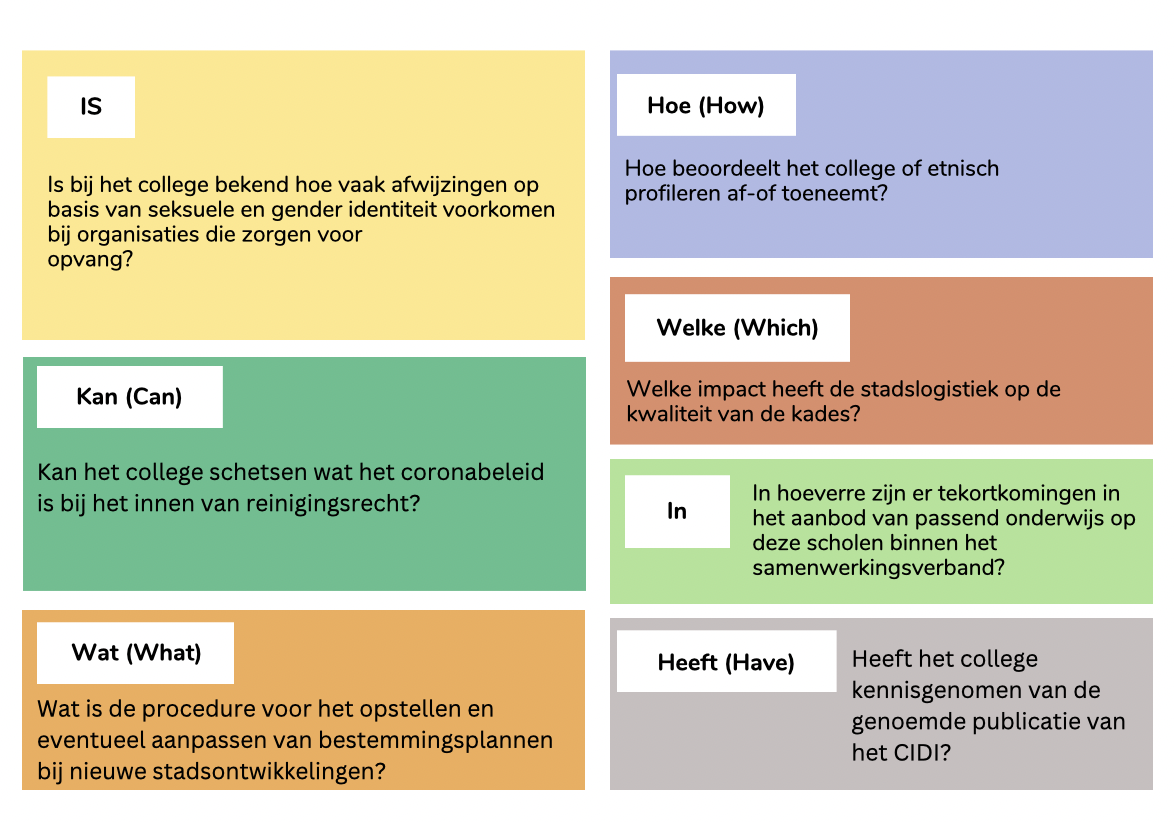

The city council can ask the municipal executive questions to clarify certain subjects. Answering those questions is a time-consuming and complex process. This project aims to support that task by experimenting with different NLP modeling approaches and comparing their accuracy and execution time.

The project therefore aims to answer the following research question: To what extent can state-of-the-art NLP models for (i) information retrieval and (ii) text generation aid the process of answering Amsterdam city council questions?

The planned implementation consists of two main steps: (1) retrieving: rank the answer-relevant documents from the websites; (2) generating: create an answer based on the most relevant documents.
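The retrieval step can be illustrated with a minimal sketch. It ranks a few made-up passages against a question using TF-IDF weights and cosine similarity, written with the standard library only. The function names (`tokenize`, `tfidf_vectors`, `rank_documents`), the smoothed IDF formula, and the example texts are assumptions for illustration and are not taken from the project's notebooks.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def tfidf_vectors(tokenized_docs):
    """Return one TF-IDF weight dict per document, plus the IDF table."""
    n_docs = len(tokenized_docs)
    doc_freq = Counter(term for doc in tokenized_docs for term in set(doc))
    # Smoothed IDF (an assumption): terms found in every document keep a positive weight.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(doc).items()} for doc in tokenized_docs]
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_documents(question, documents, top_k=3):
    """Return (document index, score) pairs for the top_k best-matching documents."""
    vectors, idf = tfidf_vectors([tokenize(d) for d in documents])
    # Question terms that never occur in the documents are ignored.
    query = {t: c * idf[t] for t, c in Counter(tokenize(question)).items() if t in idf}
    scored = [(i, cosine(query, v)) for i, v in enumerate(vectors)]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Hypothetical example passages, not taken from the dataset.
documents = [
    "Parking permits for residents can be requested online.",
    "Household waste is collected weekly in most districts.",
    "Bicycle parking near the central station has been expanded.",
]
ranked = rank_documents("How do residents request a parking permit?", documents, top_k=2)
retrieved = [documents[i] for i, _ in ranked]
```

In the second step, the passages in `retrieved` would be passed, together with the question, to the text generation model. That step is not shown here.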

You can find the published LFFQA dataset, with questions posed by the Amsterdam city council and answers by the Amsterdam municipal executive from 2013 to 2022, at: questions

This project has the following folder structure:

- notebooks: Folder containing the different stages of the pipeline:
  - 0_exploratory_data_analysis: Notebooks containing graphs and descriptive statistics of the data
    - questions_answers_EDA: Explores the questions and the answers: their length, common words, and common topics
    - URLs_EDA: Explores the most commonly referenced URLs in the municipal answers

  - 1_supporting_documents_collection: Notebooks for collecting the supporting documents. You can collect the supporting documents from amsterdam.nl either all at once through 1_1_collect_ams_at_once or individually through 1_2_1_collect_ams_subpages_individually.ipynb
    - 1_1_collect_ams_at_once: Collects all subpages and all nested subpages of amsterdam.nl under the section 'onderwerpen'
    - 1_2_1_collect_ams_subpages_individually: Collects all subpages and all nested subpages of amsterdam.nl under the section 'onderwerpen' individually (section by section)
    - 1_2_2_combine_collected_ams_subpages: If the webpages have been collected and scraped individually, this notebook combines them into a single .csv file, 'combined.csv'
    - 3_collect_update_URLs_from_references: This notebook must be run. It collects all URLs that are referenced in the municipal executive's answers and, more importantly, updates them, since some URLs in the answers are outdated. It saves the questions with updated URLs in 'questions_updated_url.csv'. It also saves a file with the questions containing only amsterdam.nl URLs, which we use for ranking evaluation and as a test set for text generation.

  - 2_ranking_text_generation_pipeline: Notebooks containing the main steps and preprocessing steps of the pipeline
    - 0_get_amsterdamnl_only_questions: Extracts only the questions whose answers refer to amsterdam.nl and saves them in the file amsterdam_questions.csv. This is our test set, which we also use to evaluate the ranking step.
    - 1_ranking_comparison_tfidf_random_bm25: Compares three ranking strategies: TF-IDF, BM25, and random sampling (TF-IDF performed best).
    - 2_1_ranking_all_questions: Ranks all questions with the most accurate strategy (TF-IDF) and saves them in a .pickle file
    - 2_2_ranking_all_questions_nonfact_filtering: Filters out non-factual questions, ranks the remaining ones with the most accurate strategy (TF-IDF), and saves them in a .pickle file
    - 3_1_get_train_test_set: Filters out amsterdam.nl references from the data, which results in our training sample
    - 3_2_get_train_test_set_nonfact_filtered: Filters out amsterdam.nl references from the data that has been cleaned of non-factual questions, which results in our training sample
    - 4_train_mT5_models: We use this notebook to fine-tune mT5 on different tasks. We change the input format according to the task we fine-tune the model on
    - 5_compare_mT5_finetuned_models: We evaluate the results from all fine-tuned models

  - 3_ideas_future_work_not_included_thesis: Notebooks containing implementations and ideas that were not included in the final thesis but might be useful for future work. (They are still work in progress.)

- src: Folder for source files specific to this project

- src_clean: Folder for source files specific to this project

- media: Folder containing media files (icons, video)
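The amsterdam.nl filtering and the ranking evaluation described for the notebooks above could look roughly like the sketch below. It keeps the questions whose answers cite at least one amsterdam.nl URL and checks whether a referenced URL appears among the top-k ranked pages. The row keys (`question`, `answer`), the helper names, the "at least one URL" criterion, and the hit-at-k measure are assumptions for illustration; the notebooks may define the test set and the evaluation metric differently.

```python
import re
from urllib.parse import urlparse

# Matches http(s) URLs up to the next whitespace or bracket.
URL_PATTERN = re.compile(r"https?://[^\s<>()\[\]]+")


def extract_urls(text):
    """Find URLs in free text and strip trailing sentence punctuation."""
    return [url.rstrip(".,;:") for url in URL_PATTERN.findall(text)]


def is_amsterdam_nl(url):
    """True if the URL's host is amsterdam.nl or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return host == "amsterdam.nl" or host.endswith(".amsterdam.nl")


def amsterdam_only(rows):
    """Keep rows whose answer cites at least one amsterdam.nl URL (assumed criterion)."""
    kept = []
    for row in rows:
        urls = [u for u in extract_urls(row["answer"]) if is_amsterdam_nl(u)]
        if urls:
            kept.append({**row, "urls": urls})
    return kept


def hit_at_k(ranked_urls, referenced_urls, k):
    """True if any referenced URL appears among the first k ranked URLs."""
    return any(url in referenced_urls for url in ranked_urls[:k])


# Hypothetical rows, not taken from the dataset.
rows = [
    {"question": "Q1", "answer": "See https://www.amsterdam.nl/parkeren/ for details."},
    {"question": "Q2", "answer": "See https://example.org/report.pdf."},
    {"question": "Q3", "answer": "No references."},
]
test_set = amsterdam_only(rows)

# Hypothetical ranker output for Q1, best match first.
ranking = ["https://www.amsterdam.nl/afval/", "https://www.amsterdam.nl/parkeren/"]
found_in_top_1 = hit_at_k(ranking, test_set[0]["urls"], k=1)
found_in_top_2 = hit_at_k(ranking, test_set[0]["urls"], k=2)
```

Averaging such a per-question hit over the whole test set gives a single figure for each ranking strategy, which is one possible way to compare TF-IDF, BM25, and random sampling.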