UnifiedGEC: Integrating Grammatical Error Correction Approaches for Multi-languages with a Unified Framework


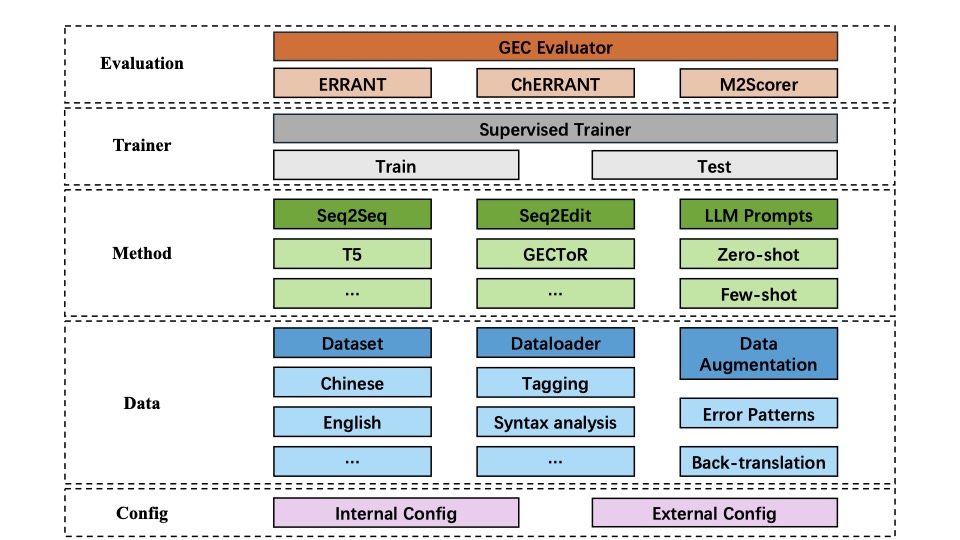

UnifiedGEC is an open-source, GEC-oriented framework that integrates 5 GEC models of different architectures and 7 GEC datasets across different languages. The structure of our framework is shown in the picture above. It provides abstract classes for the dataset, dataloader, evaluator, model and trainer, so users can implement their own modules, which makes the framework easy to extend.

Our framework is user-friendly, and users can train a model on a dataset with a single command. Moreover, users are able to deal with low-resource tasks with our proposed data augmentation module, or use given prompts to conduct experiments on LLMs.

- User-friendly: UnifiedGEC is convenient to use. Users can start training or inference with a single command that specifies the model and dataset. They can also adjust parameters, or launch the data augmentation or prompt modules, with a single command.

- Modularized and extensible: UnifiedGEC consists of modules such as dataset, dataloader and config, and provides abstract classes for these modules. Users can implement their own modules by extending these classes.

- Comprehensive: UnifiedGEC integrates 3 Seq2Seq models, 2 Seq2Edit models, 2 Chinese datasets, 2 English datasets and 3 datasets of other languages. We have conducted experiments on these datasets and evaluated the performance of the integrated models, which gives users a more comprehensive understanding of GEC tasks and models.
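As a rough illustration of the extension pattern described above, the sketch below subclasses an abstract evaluator. The names `AbstractEvaluator`, `ExactMatchEvaluator` and `measure` are hypothetical stand-ins chosen for this example and are not taken from gectoolkit; the real interfaces are defined by the abstract classes in the gectoolkit package.

```python
from abc import ABC, abstractmethod
from typing import List


class AbstractEvaluator(ABC):
    """Hypothetical base class; NOT the actual gectoolkit interface."""

    @abstractmethod
    def measure(self, predictions: List[str], targets: List[str]) -> float:
        """Return a score for predictions against targets."""


class ExactMatchEvaluator(AbstractEvaluator):
    """Toy evaluator: fraction of predictions identical to their target."""

    def measure(self, predictions: List[str], targets: List[str]) -> float:
        if len(predictions) != len(targets):
            raise ValueError("predictions and targets must have equal length")
        if not targets:
            return 0.0
        hits = sum(p == t for p, t in zip(predictions, targets))
        return hits / len(targets)
```

The base class cannot be instantiated directly, so any custom module is forced to provide the methods the rest of a pipeline would call.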

Complete structure of UnifiedGEC:

    .
    |-- gectoolkit  # main code of UnifiedGEC
        |-- config  # internal config and implementation of Config Class
        |-- data  # Abstract Class of Dataset and Dataloader, and implementation of GEC Dataloader
        |-- evaluate  # Abstract Class of Evaluator and implementation of GEC Evaluator
        |-- llm  # prompts for LLMs
        |-- model  # Abstract Class of Model and code of integrated models
        |-- module  # reusable modules (e.g., Transformer Layer)
        |-- properties  # detailed external config of each model
        |-- trainer  # Abstract Class of Trainer and implementation of SupervisedTrainer
        |-- utils  # other tools used in our framework
        |-- quick_start.py  # code for launching the framework
    |-- log  # logs of training process
    |-- checkpoint  # results and checkpoints of training process
    |-- dataset  # preprocessed datasets in JSON format
    |-- augmentation  # data augmentation module
        |-- data  # dependencies of error patterns
        |-- noise_pattern.py  # code of error patterns
        |-- translation.py  # code of back-translation
    |-- evaluation  # evaluation module
        |-- m2scorer  # M2Scorer, for NLPCC18, CoNLL14, FCE
        |-- errant  # ERRANT, for AKCES-GEC, Falko-MERLIN, COWSL2H
        |-- cherrant  # ChERRANT, for MuCGEC
        |-- convert.py  # script for converting output JSON files into the format required by each scorer
    |-- run_gectoolkit.py  # code for launching the framework

We integrated 5 GEC models in our framework, which fall into two categories, Seq2Seq models and Seq2Edit models, as shown in the table below:

| type | model | reference |
|---|---|---|
| Seq2Seq | Transformer | (Vaswani et al., 2017) |
| Seq2Seq | T5 | (Xue et al., 2021) |
| Seq2Seq | SynGEC | (Zhang et al., 2022) |
| Seq2Edit | Levenshtein Transformer | (Gu et al., 2019) |
| Seq2Edit | GECToR | (Omelianchuk et al., 2020) |

We integrated 7 datasets of different languages in our framework, including Chinese, English, Spanish, Czech and German:

| dataset | language | reference |
|---|---|---|
| FCE | English | (Yannakoudakis et al., 2011) |
| CoNLL14 | English | (Ng et al., 2014) |
| NLPCC18 | Chinese | (Zhao et al., 2018) |
| MuCGEC | Chinese | (Zhang et al., 2022) |
| COWSL2H | Spanish | (Yamada et al., 2020) |
| Falko-MERLIN | German | (Boyd et al., 2014) |
| AKCES-GEC | Czech | (Náplava et al., 2019) |

Datasets integrated in UnifiedGEC are in JSON format:

    [
        {
            "id": 0,
            "source_text": "My town is a medium size city with eighty thousand inhabitants .",
            "target_text": "My town is a medium - sized city with eighty thousand inhabitants ."
        }
    ]

Our preprocessed datasets can be downloaded here.
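Before training on a file in this format, it can help to check that every record carries the three fields shown above. The following is a minimal sketch; `validate_examples` and `load_dataset` are illustrative helper names and are not part of UnifiedGEC.

```python
import json
from typing import Dict, List

# Field names taken from the JSON example above.
REQUIRED_KEYS = ("id", "source_text", "target_text")


def validate_examples(examples: List[Dict]) -> List[Dict]:
    """Raise ValueError if any record lacks one of the required fields."""
    for index, example in enumerate(examples):
        missing = [key for key in REQUIRED_KEYS if key not in example]
        if missing:
            raise ValueError(f"record {index} is missing fields: {missing}")
    return examples


def load_dataset(path: str) -> List[Dict]:
    """Load a dataset file (a JSON list of records) and validate it."""
    with open(path, encoding="utf-8") as handle:
        return validate_examples(json.load(handle))


sample = json.loads("""
[
  {
    "id": 0,
    "source_text": "My town is a medium size city with eighty thousand inhabitants .",
    "target_text": "My town is a medium - sized city with eighty thousand inhabitants ."
  }
]
""")
print(len(validate_examples(sample)))  # 1
```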

We use Python 3.8 in our experiments. Please install allennlp 1.3.0 first, then install other dependencies:

    pip install allennlp==1.3.0
    pip install -r requirements.txt

Note: errors may occur when installing jsonnet with pip. In that case, we suggest running conda install jsonnet to finish the installation.

Please create directories for logs and checkpoints before using our framework:

    mkdir log
    mkdir checkpoint

Users can launch our framework from the command line:

    python run_gectoolkit.py -m $MODEL_NAME -d $DATASET_NAME

Refer to ./gectoolkit/config/config.json for parameters related to the training process, such as the number of epochs and the learning rate. Refer to ./gectoolkit/properties/models/ for the detailed parameters of each model.

All models except Transformer require pre-trained models, so please download them and store them in the corresponding model directory under ./gectoolkit/properties/models/. We provide download links for some of the pre-trained models, and users can also download them from Hugging Face.

UnifiedGEC also supports adjusting parameters from the command line:

    python run_gectoolkit.py -m $MODEL_NAME -d $DATASET_NAME --learning_rate $LR

Our framework allows users to add new datasets. The new dataset folder dataset_name/ should contain three JSON files for the train set, valid set and test set, and users need to place the folder in the dataset/ directory:

    dataset
        |-- dataset_name
            |-- trainset.json
            |-- validset.json
            |-- testset.json

After that, users also need to add a configuration file dataset_name.json to the gectoolkit/properties/dataset directory. Its contents can be modeled on the other files in the same directory.
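The folder layout above can be produced from a list of parallel sentences with a few lines of Python. This is only a sketch: the function name `write_dataset`, the 80/10/10 split and the per-file ids are assumptions made for the example, while the file names and record fields follow the format described in this README.

```python
import json
import random
from pathlib import Path
from typing import List, Tuple


def write_dataset(pairs: List[Tuple[str, str]], root: str, name: str,
                  valid_ratio: float = 0.1, test_ratio: float = 0.1,
                  seed: int = 0) -> Path:
    """Shuffle (source, target) pairs and write the three JSON files."""
    pairs = list(pairs)
    random.Random(seed).shuffle(pairs)
    n_valid = int(len(pairs) * valid_ratio)
    n_test = int(len(pairs) * test_ratio)
    splits = {
        "validset.json": pairs[:n_valid],
        "testset.json": pairs[n_valid:n_valid + n_test],
        "trainset.json": pairs[n_valid + n_test:],
    }
    folder = Path(root) / name
    folder.mkdir(parents=True, exist_ok=True)
    for filename, rows in splits.items():
        # Assumption: ids restart at 0 in each file.
        records = [
            {"id": i, "source_text": src, "target_text": tgt}
            for i, (src, tgt) in enumerate(rows)
        ]
        with open(folder / filename, "w", encoding="utf-8") as handle:
            json.dump(records, handle, ensure_ascii=False, indent=2)
    return folder
```

Calling `write_dataset(pairs, "dataset", "dataset_name")` would create dataset/dataset_name/ with the three files; the configuration file in gectoolkit/properties/dataset still has to be added by hand.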

We provide users with two data augmentation methods (for Chinese and English):

- error patterns: randomly add noise to sentences

- back-translation: translate sentences into the other language, and then translate them back into the original language

Users can enable the data augmentation module with the --augment argument on the command line. The available values are noise and translation:

    python run_gectoolkit.py -m $MODEL_NAME -d $DATASET_NAME --augment noise

On first use, our framework generates the augmented data and saves it to a local file; the back-translation method takes a certain amount of time. In subsequent executions, UnifiedGEC uses the generated data directly.
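To make the idea behind error-pattern augmentation concrete, here is a minimal sketch that corrupts a correct sentence by randomly deleting, duplicating or swapping tokens. It is not the implementation in augmentation/noise_pattern.py; the function `add_noise`, the three operations and the default probability are assumptions chosen for illustration.

```python
import random
from typing import List, Optional


def add_noise(tokens: List[str], prob: float = 0.1,
              rng: Optional[random.Random] = None) -> List[str]:
    """Randomly delete, duplicate or swap tokens with probability `prob`."""
    if rng is None:
        rng = random.Random()
    noisy: List[str] = []
    i = 0
    while i < len(tokens):
        if rng.random() < prob:
            op = rng.choice(["delete", "duplicate", "swap"])
            if op == "delete":
                i += 1
                continue
            if op == "duplicate":
                noisy.extend([tokens[i], tokens[i]])
                i += 1
                continue
            if op == "swap" and i + 1 < len(tokens):
                noisy.extend([tokens[i + 1], tokens[i]])
                i += 2
                continue
        # No noise applied (or a swap requested on the last token).
        noisy.append(tokens[i])
        i += 1
    return noisy


# The clean sentence becomes the target; its noisy version is the source.
target = "My town is a medium - sized city .".split()
source = add_noise(target, prob=0.3, rng=random.Random(0))
pair = {"source_text": " ".join(source), "target_text": " ".join(target)}
```

Passing a seeded `random.Random` keeps the generated pairs reproducible across runs.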

We also provide prompts for LLMs (for Chinese and English), including zero-shot prompts and few-shot prompts.

Users can enable the prompts with the --use_llm argument on the command line, and specify the number of in-context learning examples with the --example_num argument:

    python run_gectoolkit.py -m $MODEL_NAME -d $DATASET_NAME --use_llm --example_num $EXAMPLE_NUM

The model name used here should be a Hugging Face model name, such as Qwen/Qwen-7B-chat.
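The difference between zero-shot and few-shot prompting can be sketched as follows. The instruction wording, the `Input:`/`Output:` layout and the function `build_prompt` are hypothetical; the prompts actually shipped with the framework live in gectoolkit/llm and may look different.

```python
from typing import List, Tuple


def build_prompt(sentence: str, examples: List[Tuple[str, str]],
                 example_num: int = 0) -> str:
    """Build a zero-shot (example_num=0) or few-shot correction prompt."""
    lines = ["Correct the grammatical errors in the following sentence."]
    # Prepend up to `example_num` (erroneous, corrected) demonstrations.
    for source, target in examples[:example_num]:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
    lines.append(f"Input: {sentence}")
    lines.append("Output:")
    return "\n".join(lines)
```

With `example_num=0` the prompt contains only the instruction and the sentence to correct; larger values add that many demonstrations before it.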

Our evaluation module integrates the mainstream evaluation tools for GEC tasks: M2Scorer, ERRANT and ChERRANT. We also provide conversion scripts and ground-truth files for some datasets. During training, UnifiedGEC calculates micro-level PRF for the model outputs, so users who want to evaluate models in a macro way can use this evaluation module.

First, users should use our provided script to convert outputs of the models to the format required by scorers:

    python convert.py --predict_file $PREDICT_FILE --dataset $DATASET

Correspondence between datasets and scorers:

| dataset | scorer |
|---|---|
| CoNLL14, FCE, NLPCC18 | M2Scorer |
| AKCES-GEC, Falko-MERLIN, COWSL2H | ERRANT |
| MuCGEC | ChERRANT |

M2Scorer official repository: https://github.com/nusnlp/m2scorer

For the English datasets (CoNLL14, FCE), use M2Scorer directly for evaluation:

    cd m2scorer
    m2scorer/m2scorer predict.txt m2scorer/conll14.gold

For the Chinese dataset (NLPCC18), the pkunlp tool is required for word segmentation. We also provide a conversion script:

    cd m2scorer
    python pkunlp/convert_output.py --input_file predict.txt --output_file seg_predict.txt
    m2scorer/m2scorer seg_predict.txt m2scorer/nlpcc18.gold

ERRANT official repository: https://github.com/chrisjbryant/errant

Usage follows the official repository:

    cd errant
    errant_parallel -orig source.txt -cor target.txt -out ref.m2
    errant_parallel -orig source.txt -cor predict.txt -out hyp.m2
    errant_compare -hyp hyp.m2 -ref ref.m2

ChERRANT official repository: https://github.com/HillZhang1999/MuCGEC

Usage follows the official repository:

    cd cherrant/ChERRANT
    python parallel_to_m2.py -f ../hyp.txt -o hyp.m2 -g char
    python compare_m2_for_evaluation.py -hyp hyp.m2 -ref ref.m2

UnifiedGEC integrates 5 models and 7 datasets across different languages. The best performance of the implemented models on the Chinese and English datasets is as follows:

| model | CoNLL14 (EN) P | CoNLL14 (EN) R | CoNLL14 (EN) F0.5 | FCE (EN) P | FCE (EN) R | FCE (EN) F0.5 | NLPCC18 (ZH) P | NLPCC18 (ZH) R | NLPCC18 (ZH) F0.5 | MuCGEC (ZH) P | MuCGEC (ZH) R | MuCGEC (ZH) F0.5 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Levenshtein Transformer | 13.5 | 12.6 | 13.3 | 6.3 | 6.9 | 6.4 | 12.6 | 8.5 | 10.7 | 6.6 | 6.4 | 6.6 |
| GECToR | 52.3 | 21.7 | 40.8 | 36.0 | 20.7 | 31.3 | 30.9 | 20.9 | 28.2 | 33.5 | 19.1 | 29.1 |
| Transformer | 24.1 | 15.5 | 21.7 | 20.8 | 15.9 | 19.6 | 22.3 | 20.8 | 22.0 | 19.7 | 9.2 | 16.0 |
| T5 | 36.6 | 39.5 | 37.1 | 29.2 | 29.4 | 29.3 | 32.5 | 21.1 | 29.4 | 30.2 | 14.4 | 24.8 |
| SynGEC | 50.6 | 51.8 | 50.9 | 59.5 | 52.7 | 58.0 | 36.0 | 36.8 | 36.2 | 22.3 | 26.2 | 23.6 |
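The F0.5 columns combine precision and recall with the standard F-beta formula, using beta = 0.5 so that precision counts more than recall. The short sketch below computes it from P and R; the function name `f_beta` is chosen for this example and is not taken from the framework's evaluator. Because the reported P and R are rounded, recomputed values agree with the table only up to rounding.

```python
def f_beta(precision: float, recall: float, beta: float = 0.5) -> float:
    """F-beta score; beta=0.5 weights precision more heavily than recall."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# GECToR on CoNLL14 in the table above: P = 52.3, R = 21.7
print(round(f_beta(52.3, 21.7), 1))  # 40.8
```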

The best performance of implemented models on datasets of other languages:

| model | AKCES-GEC (CS) P | AKCES-GEC (CS) R | AKCES-GEC (CS) F0.5 | Falko-MERLIN (DE) P | Falko-MERLIN (DE) R | Falko-MERLIN (DE) F0.5 | COWSL2H (ES) P | COWSL2H (ES) R | COWSL2H (ES) F0.5 |
|---|---|---|---|---|---|---|---|---|---|
| Levenshtein Transformer | 4.4 | 5.0 | 4.5 | 2.3 | 4.2 | 2.5 | 1.9 | 2.3 | 2.0 |
| GECToR | 46.8 | 8.9 | 25.3 | 50.8 | 20.5 | 39.2 | 24.4 | 12.9 | 20.7 |
| Transformer | 44.4 | 23.6 | 37.8 | 33.1 | 18.7 | 28.7 | 11.8 | 15.0 | 12.3 |
| T5 | 52.5 | 40.5 | 49.6 | 47.4 | 50.0 | 47.9 | 53.7 | 39.1 | 49.9 |
| SynGEC | 21.9 | 27.6 | 22.8 | 32.2 | 33.4 | 32.4 | 9.3 | 18.8 | 10.3 |

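We conduct data augmentation experiments on the NLPCC18 and CoNLL14 datasets, simulating low-resource cases by choosing 10% of the data from each dataset. The table reports F0.5 and its change (delta) relative to training without augmentation: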

| model | data augmentation method | CoNLL14 F0.5 | CoNLL14 delta | NLPCC18 F0.5 | NLPCC18 delta |
|---|---|---|---|---|---|
| Levenshtein Transformer | w/o augmentation | 9.5 | - | 6.0 | - |
| Levenshtein Transformer | w/ error patterns | 6.4 | -3.1 | 4.9 | -1.1 |
| Levenshtein Transformer | w/ back-translation | 12.5 | 3.0 | 5.9 | -0.1 |
| GECToR | w/o augmentation | 14.2 | - | 17.4 | - |
| GECToR | w/ error patterns | 15.1 | 0.9 | 19.9 | 2.5 |
| GECToR | w/ back-translation | 16.7 | 2.5 | 19.4 | 2.0 |
| Transformer | w/o augmentation | 12.6 | - | 9.5 | - |
| Transformer | w/ error patterns | 14.5 | 1.9 | 9.9 | 0.4 |
| Transformer | w/ back-translation | 16.6 | 4.0 | 10.4 | 0.9 |
| T5 | w/o augmentation | 31.7 | - | 26.3 | - |
| T5 | w/ error patterns | 32.0 | 0.3 | 27.0 | 0.7 |
| T5 | w/ back-translation | 32.2 | 0.5 | 24.1 | -2.2 |
| SynGEC | w/o augmentation | 47.7 | - | 32.4 | - |
| SynGEC | w/ error patterns | 48.2 | 0.5 | 34.9 | 2.5 |
| SynGEC | w/ back-translation | 47.7 | 0.0 | 34.6 | 2.2 |
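A low-resource setting like the one in this table can be simulated by keeping a fixed fraction of the training examples, and the delta column is the difference from the run without augmentation. The sketch below assumes uniform random sampling with a fixed seed, which the description above does not specify; `subsample` and `delta` are illustrative names.

```python
import random
from typing import List, TypeVar

T = TypeVar("T")


def subsample(examples: List[T], fraction: float = 0.1, seed: int = 0) -> List[T]:
    """Return a random subset containing `fraction` of the examples."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, round(len(examples) * fraction)) if examples else 0
    return random.Random(seed).sample(examples, k)


def delta(augmented_f: float, baseline_f: float) -> float:
    """Change in F0.5 relative to the run without augmentation."""
    return round(augmented_f - baseline_f, 1)


print(len(subsample(list(range(1000)))))  # 100
print(delta(16.6, 12.6))                  # 4.0 (Transformer, back-translation, CoNLL14)
```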

We use Qwen1.5-14B-chat and Llama2-7B-chat and conduct experiments on NLPCC18 and CoNLL14 datasets (P/R/F0.5):

| setting | CoNLL14 P | CoNLL14 R | CoNLL14 F0.5 | NLPCC18 P | NLPCC18 R | NLPCC18 F0.5 |
|---|---|---|---|---|---|---|
| zero-shot | 48.8 | 49.1 | 48.8 | 24.7 | 38.3 | 26.6 |
| few-shot | 50.4 | 50.2 | 50.4 | 24.8 | 39.8 | 26.8 |

UnifiedGEC is released under the Apache 2.0 License.