Repository for our image colourization 🖍🎨 project in the Deep Learning course (02456) at DTU.

You can find our poster here.

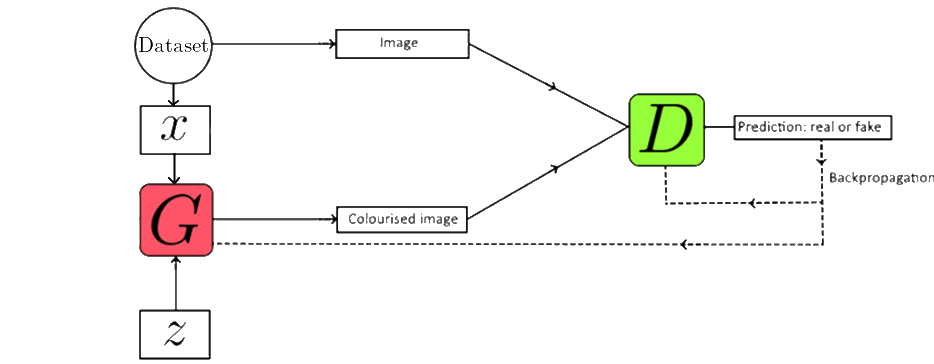

We use conditional Generative Adversarial Networks (GANs) to colourise black-and-white images. A GAN consists of a generator and a discriminator.

The loss encourages the generator to generate better images and the discriminator to become better at telling real images from fake ones. The last term is a regularisation term scaled by a weighting hyperparameter.
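As a rough illustration of how such a loss fits together, here is a minimal sketch in plain Python. It assumes a binary cross-entropy adversarial term and an L1 regularisation term; the function names and the `reg_weight` parameter are hypothetical and not taken from the training code in this repository.

```python
import math


def bce(prob, target):
    """Binary cross-entropy for one discriminator output in (0, 1)."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def l1_distance(pred, truth):
    """Mean absolute error between two equally long flat lists of values."""
    return sum(abs(p - t) for p, t in zip(pred, truth)) / len(pred)


def generator_loss(d_fake, pred, truth, reg_weight):
    """Adversarial term plus weighted L1 regularisation (assumed form)."""
    # The generator wants the discriminator to output 1 ("real") for its fakes.
    return bce(d_fake, 1.0) + reg_weight * l1_distance(pred, truth)


def discriminator_loss(d_real, d_fake):
    """The discriminator wants 1 for real images and 0 for generated ones."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)
```

With a large `reg_weight`, the L1 term dominates and pulls the generator towards the ground-truth colours, while the adversarial term still rewards outputs the discriminator accepts as real.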

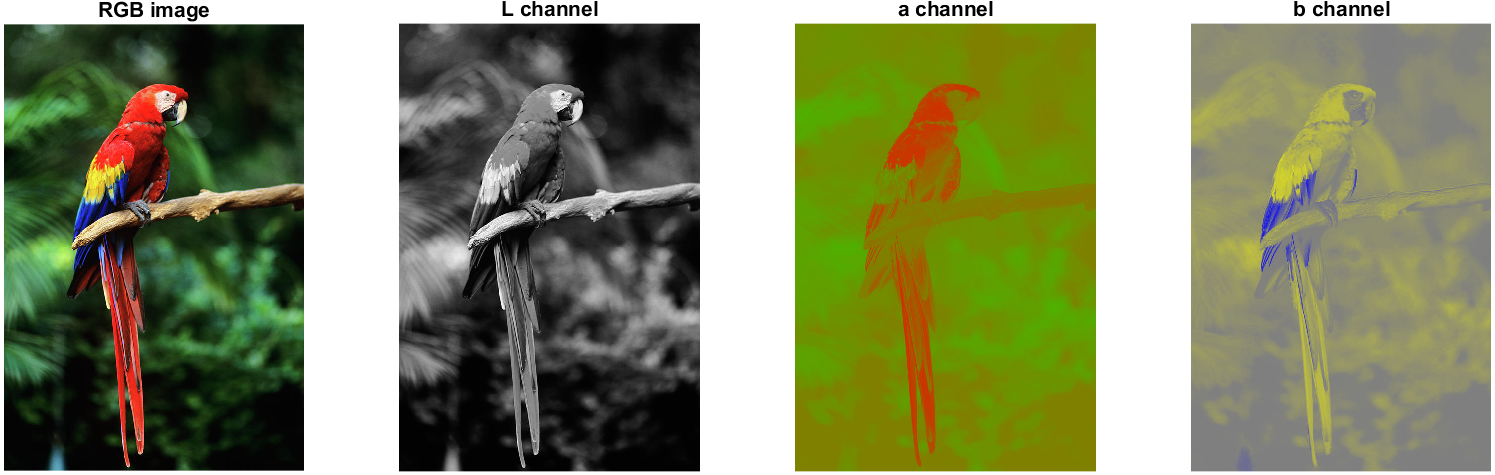

Images are represented in the Lab colour space instead of the more common RGB colour space. The goal of the generator is then to predict the A and B colour channels from the L (lightness) channel.

Visualisation of L, A, and B colour channels for an example image.
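To make the Lab representation more concrete, the sketch below converts a single sRGB pixel to Lab using the standard sRGB-to-XYZ matrix and a D65 white point. It is only an illustration: the function name is hypothetical, and the project's own preprocessing may rely on a library routine instead.

```python
def srgb_to_lab(r, g, b):
    """Convert one sRGB pixel (components in [0, 1]) to CIE Lab (D65)."""

    def to_linear(c):
        # Undo the sRGB gamma encoding.
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = to_linear(r), to_linear(g), to_linear(b)

    # Linear RGB to CIE XYZ.
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t):
        delta = 6.0 / 29.0
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    # Normalise by the D65 reference white before applying f.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    lightness = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b_channel = 200.0 * (fy - fz)
    return lightness, a, b_channel
```

For any grey pixel the A and B values come out (numerically) zero, so a black-and-white image carries information only in L. That is why colourisation can be framed as predicting the two remaining channels.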

- Data: use the Places365 dataset (remove black-and-white images)

- Make the baseline (GAN and L1-loss without transfer learning)

- Test difference between L1 and L2 loss on baseline model

- Two backbones: VGG19 and Xception

- Quantitative evaluation (colourfulness, peak signal-to-noise ratio (PSNR))

- Qualitative human evaluation (by us) on 5 images each

- Use image labels as additional conditional data and assess improvement

- Evaluate how image label data improved the model
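The two quantitative metrics in the plan above could be computed along the following lines. This is a sketch under assumptions: PSNR uses its usual definition, while the colourfulness function assumes the Hasler and Süsstrunk metric, which this README does not explicitly name. Function names and the flat-list input format are hypothetical.

```python
import math
from statistics import mean, pstdev


def psnr(pred, truth, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two flat lists of values."""
    mse = mean((p - t) ** 2 for p, t in zip(pred, truth))
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def colourfulness(pixels):
    """Colourfulness of a list of (R, G, B) tuples (assumed metric)."""
    # Opponent colour components: red-green and yellow-blue.
    rg = [r - g for r, g, b in pixels]
    yb = [0.5 * (r + g) - b for r, g, b in pixels]
    std_term = math.sqrt(pstdev(rg) ** 2 + pstdev(yb) ** 2)
    mean_term = math.sqrt(mean(rg) ** 2 + mean(yb) ** 2)
    return std_term + 0.3 * mean_term
```

A higher PSNR means the prediction is closer to the ground truth, whereas colourfulness is reference-free and only measures how saturated and varied the colours are. The two metrics can therefore disagree on which model looks best.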

The Places365 dataset is available here:

Lines starting with `$` are terminal commands.

- Open a terminal in the same folder as this project and type the following commands (you can paste them into the terminal with a middle mouse click):

$ module load python3/3.9.6

$ module load cuda/11.3

$ python3 -m venv DeepLearning

$ source DeepLearning/bin/activate

$ pip3 install -r requirements.txt

Now everything should be set up. Submit the shell script `HPC/submit.sh` to start training your model.