This repository contains the code for our entry in the AIM 2020 Relighting Challenge, Track 1: One-to-one relighting.

Our test results can be found here (Google Drive link).

- Linux

- Python 3

- NVIDIA GPU (11 GB of memory or more) + CUDA and cuDNN

- Install PyTorch and dependencies from http://pytorch.org

- Install the Python libraries dominate and kornia:

pip install dominate kornia

- Clone this repo

- A few example test images are included in the datasets/test folder.

- Please download the trained model.

- Test the model:

python test.py

The test results will be saved to the folder ./output.

- We use the VIDIT dataset (track 3). To train a model on the full dataset, please download it first.

- We generate wide-range images (using the OpenCV package) to supervise the scene reconversion network. Please download them from here.

After downloading, place both under the datasets folder, as "datasets/track3_train" and "datasets/track3_HDR".
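Before starting a long training run, it can help to confirm that both folders are in place. The following is a minimal sketch, not part of this repository: the function name `missing_dataset_dirs` is hypothetical, and it only checks that each folder exists and is non-empty, since the internal file layout is not described here.

```python
from pathlib import Path

# Folder names taken from the instructions above.
EXPECTED_DIRS = ("track3_train", "track3_HDR")


def missing_dataset_dirs(root="datasets"):
    """Return the expected sub-folders that are absent or empty under `root`.

    Hypothetical helper: it does not validate the files inside each folder.
    """
    root = Path(root)
    missing = []
    for name in EXPECTED_DIRS:
        folder = root / name
        # A folder counts as present only if it exists and holds at least one entry.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(str(folder))
    return missing


if __name__ == "__main__":
    problems = missing_dataset_dirs()
    if problems:
        print("Missing or empty:", ", ".join(problems))
    else:
        print("Dataset folders look ready.")
```

Run it from the repository root so that the relative path `datasets` resolves correctly.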

- Train a model at 512 x 512 resolution:

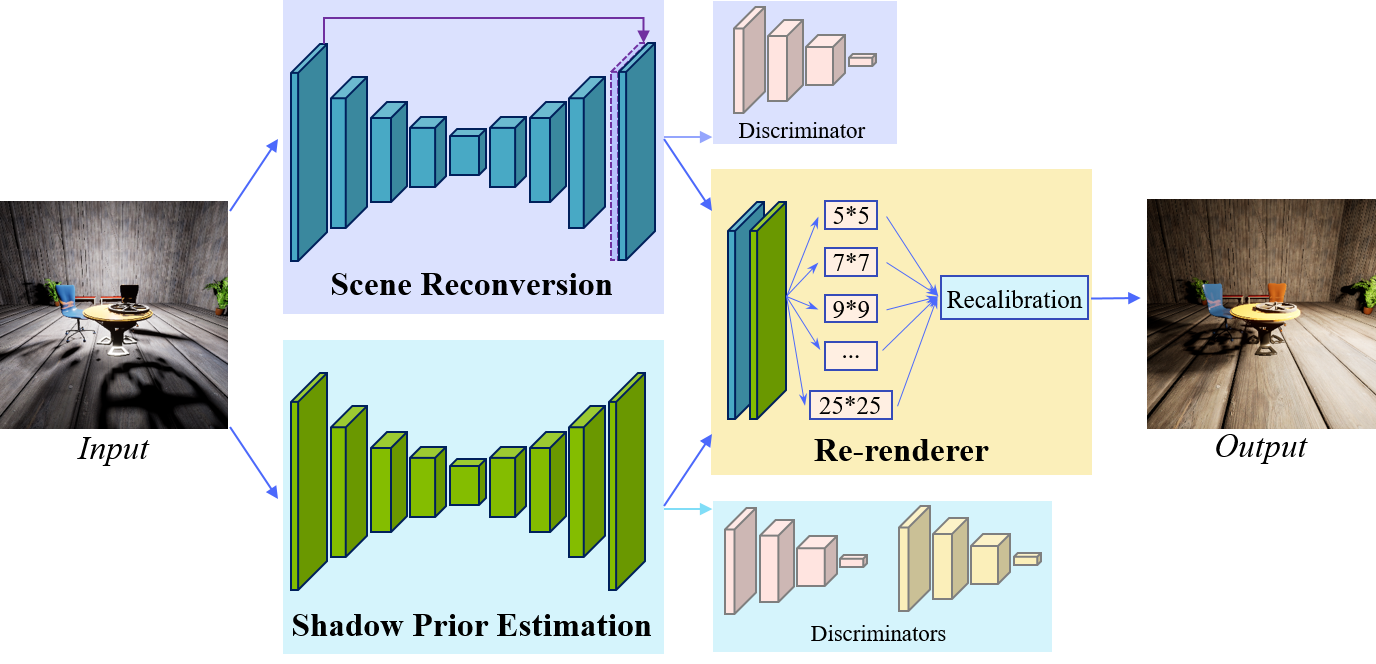

python train.py

The training process has three stages. First, we train the scene reconversion network on paired input and wide-range target images, together with the discriminators. Second, we train the shadow prior estimation network on paired input and target images, with the other discriminators. Finally, we fix the shadow prior estimation network and train the whole DRN on paired input and target images, using Laplace and VGG (*0.01) losses. The training images are resized to 512x512 because of limited GPU memory. All stages use the Adam optimizer with momentum 0.5 and a learning rate of 0.0002.
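The three-stage schedule can be summarised as a small plain-Python configuration. This is only an illustrative sketch and does not mirror the options in train.py: the names `Stage`, `STAGES`, and the sub-network labels are hypothetical, the Laplace weight of 1.0 is an assumption (only the VGG factor of 0.01 is stated), and reading "momentum 0.5" as Adam's first beta coefficient is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    trains: tuple   # sub-networks updated in this stage (hypothetical labels)
    frozen: tuple   # sub-networks kept fixed in this stage
    target: str     # which image supervises the output
    losses: dict    # loss name -> weight; None means the weight is not stated


# Settings shared by all stages, as stated in the text.
# Assumption: "momentum 0.5" refers to Adam's first beta coefficient.
ADAM = {"lr": 0.0002, "beta1": 0.5}
IMAGE_SIZE = (512, 512)

STAGES = (
    Stage(
        name="stage1_scene_reconversion",
        trains=("scene_reconversion", "discriminators"),
        frozen=(),
        target="wide_range_image",
        losses={"adversarial": None},
    ),
    Stage(
        name="stage2_shadow_prior",
        trains=("shadow_prior", "other_discriminators"),
        frozen=(),
        target="relit_image",
        losses={"adversarial": None},
    ),
    Stage(
        name="stage3_full_drn",
        trains=("scene_reconversion", "re_rendering"),
        frozen=("shadow_prior",),
        target="relit_image",
        # Assumption: Laplace loss has unit weight; the 0.01 factor is from the text.
        losses={"laplace": 1.0, "vgg": 0.01},
    ),
)


def describe(stage):
    """Return a one-line, human-readable summary of a stage."""
    frozen = ", ".join(stage.frozen) or "nothing"
    return f"{stage.name}: train {', '.join(stage.trains)}; freeze {frozen}"


if __name__ == "__main__":
    for stage in STAGES:
        print(describe(stage))
```

In PyTorch, settings like these would typically be passed as `torch.optim.Adam(params, lr=0.0002, betas=(0.5, 0.999))`, where the second beta is the library default rather than a value given in this document.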

This code borrows heavily from pytorch-CycleGAN-and-pix2pix.