PyTorch Implementation of the papers

- Auto-Encoding Variational Bayes

- beta-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework

- Andreas Spanopoulos (andrewspanopoulos@gmail.com)

- Demetrios Konstantinidis (demetris.konstantinidis@gmail.com)

- The directory src/models contains the models we have created so far. More are coming along the way.

- The Python script src/main.py is the main executable.

- The notebook directory contains a colab notebook that can be used for training and testing.

- In the mathematical_analysis directory there is a pdf where the basic mathematical concepts of the Variational Autoencoder are explained thoroughly.

- In the config directory there are some configuration files that can be used to create the models.

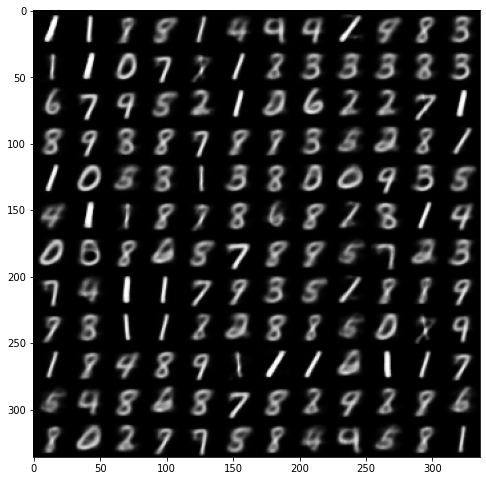

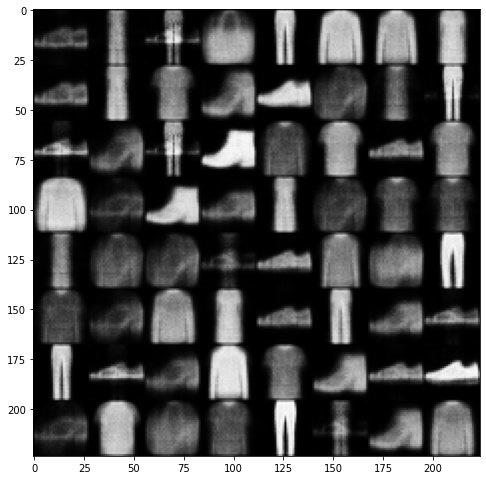

- In the tests directory there are the results we got from running the model with various configurations.

Currently two models are supported, a simple Variational Autoencoder and a Disentangled version (beta-VAE). The model implementations can be found in the src/models directory. These models were developed using PyTorch Lightning.


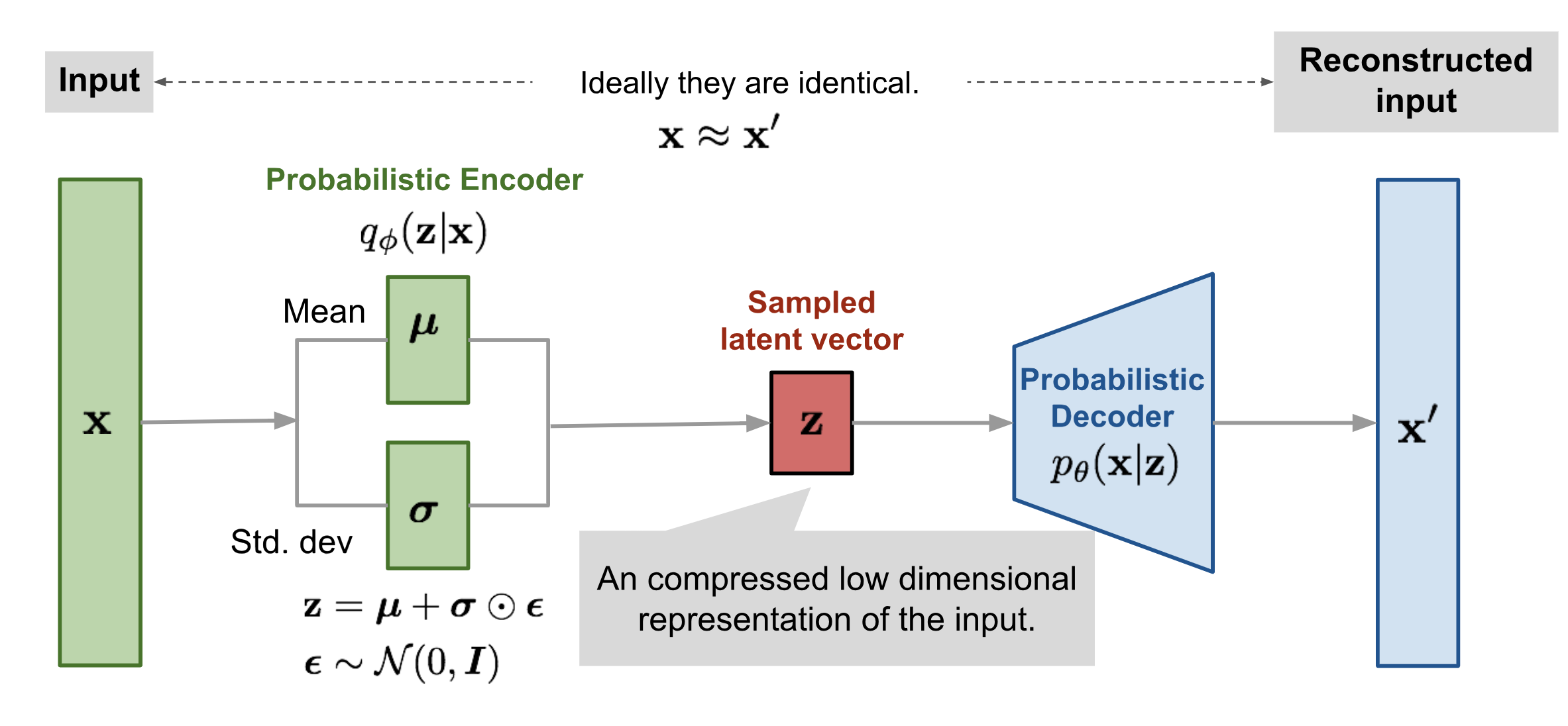

The Variational Autoencoder is a generative model. Its goal is to learn the distribution of a dataset and then generate new (unseen) data points from the same distribution. In the picture below we can see an overview of its architecture.

Note that in our implementation, convolutions are applied before the mean and std layers to reduce the dimensionality of the data.
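To get a feel for how the convolutional stack changes the spatial size of the input before it reaches the mean and std layers, the standard output-size formula for a 2D convolution (dilation 1) can be applied layer by layer. This is a minimal sketch, not code from the repository: the helper names `conv_output_size` and `feature_map_shape` are hypothetical, and the kernel sizes, strides and paddings are the example values from the configuration format.

```python
def conv_output_size(size, kernel, stride, padding):
    """Output length along one axis for a convolution with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1


def feature_map_shape(input_hw, kernel_sizes, strides, paddings):
    """Apply the formula once per layer, separately for height and width."""
    height, width = input_hw
    for (kh, kw), (sh, sw), (ph, pw) in zip(kernel_sizes, strides, paddings):
        height = conv_output_size(height, kh, sh, ph)
        width = conv_output_size(width, kw, sw, pw)
    return height, width


# Illustrative values: a 28x28 input (the MNIST image size) and the
# example kernel sizes, strides and paddings from the configuration format.
shape = feature_map_shape(
    (28, 28),
    kernel_sizes=[(7, 7), (5, 5), (3, 3)],
    strides=[(1, 1), (1, 1), (1, 1)],
    paddings=[(1, 1), (1, 1), (1, 1)],
)
print(shape)  # (22, 22)
```

With a stride of 1 the spatial size shrinks only slightly; larger strides or kernels reduce it faster.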


Another form of the Variational Autoencoder is the beta-VAE. The difference between the Vanilla VAE and the beta-VAE lies in the loss function of the latter: the KL-Divergence term is multiplied by a hyperparameter beta. This encourages disentanglement in the latent space, as in many cases it allows a smoother and more "continuous" transition of the output data for small changes in the latent vector z. More information on this topic can be found in the sources section below.

Note that for a more in-depth explanation of how and why the VAE framework actually makes sense, some custom LaTeX notes have been compiled in a pdf here.
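For reference, the objective described above can be written as follows. The notation follows the two papers listed at the top (encoder q_φ, decoder p_θ, prior p(z)) rather than any specific variable names in this repository, and the closed-form KL term assumes a diagonal Gaussian encoder with a standard normal prior.

```latex
% beta-VAE objective (to be maximized); beta = 1 recovers the Vanilla VAE
\mathcal{L}(\theta, \phi; x) =
    \mathbb{E}_{q_\phi(z \mid x)}\left[ \log p_\theta(x \mid z) \right]
    - \beta \, D_{KL}\left( q_\phi(z \mid x) \,\|\, p(z) \right)

% Closed form of the KL term for q_\phi(z \mid x) = \mathcal{N}(\mu, \mathrm{diag}(\sigma^2))
% and p(z) = \mathcal{N}(0, I), summed over the latent dimensions j
D_{KL}\left( q_\phi(z \mid x) \,\|\, p(z) \right) =
    -\frac{1}{2} \sum_{j} \left( 1 + \log \sigma_j^2 - \mu_j^2 - \sigma_j^2 \right)
```

Values of beta greater than 1 put more weight on matching the prior, which is what pushes the latent dimensions towards disentanglement, usually at some cost in reconstruction quality.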

As stated above, I have written some notes in LaTeX about the mathematics behind the Variational Autoencoder. Initially, I started making these notes for myself. Later I realized that they could assist others in understanding the basic concepts of the VAE. Hence, I made them public in the repo. Special thanks to Ahlad Kumar for explaining complex mathematical topics that were not taught in our Bachelor's program. Also a big thanks to all the resources that have been listed at the end of the repo.

First install the appropriate libraries with the command

$ pip install -r requirements.txt

To execute the VAE, you have to navigate to the src directory and execute the following command

$ python3 main.py -c <config_file_path> -v <variation>

The configuration file should have the following format:

[configuration]

dataset = <MNIST, FashionMNIST or CIFAR10>

path = <path_to_dataset>

[architecture]

conv_layers = <amount of convolutional layers>

conv_channels = <the convolutional channels as a list, e.g. [16,32,64]>

conv_kernel_sizes = <the convolutional kernel sizes as a list of tuples, e.g. [(7, 7), (5, 5), (3, 3)]>

conv_strides = <the convolutional strides as a list of tuples, e.g. [(1, 1), (1, 1), (1, 1)]>

conv_paddings = <the convolutional paddings as a list of tuples, e.g. [(1, 1), (1, 1), (1, 1)]>

z_dimension = <the dimension of the latent vector>

[hyperparameters]

epochs = <e.g. 10>

batch_size = <e.g. 32>

learning_rate = <starting learning rate, e.g. 0.000001>

beta = <beta parameter for B-VAE, e.g. 4>

Examples of configuration files can be found in the config directory.
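Since the format above is INI-style with list-valued entries, one way to read such a file is with the standard library's `configparser` and `ast.literal_eval`. The sketch below is an illustration under that assumption; it is not necessarily how src/main.py parses its configuration. The function name `parse_config` and the values in `EXAMPLE_CONFIG` (including the `./data` path and `z_dimension = 2`) are hypothetical.

```python
import ast
import configparser

# Hypothetical configuration following the documented format.
EXAMPLE_CONFIG = """
[configuration]
dataset = MNIST
path = ./data

[architecture]
conv_layers = 3
conv_channels = [16, 32, 64]
conv_kernel_sizes = [(7, 7), (5, 5), (3, 3)]
conv_strides = [(1, 1), (1, 1), (1, 1)]
conv_paddings = [(1, 1), (1, 1), (1, 1)]
z_dimension = 2

[hyperparameters]
epochs = 10
batch_size = 32
learning_rate = 0.000001
beta = 4
"""

LIST_KEYS = ("conv_channels", "conv_kernel_sizes", "conv_strides", "conv_paddings")


def parse_config(text):
    """Parse the INI text into a flat dict with typed values."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    arch = parser["architecture"]
    hyper = parser["hyperparameters"]

    config = {
        "dataset": parser["configuration"]["dataset"],
        "path": parser["configuration"]["path"],
        "conv_layers": arch.getint("conv_layers"),
        "z_dimension": arch.getint("z_dimension"),
        "epochs": hyper.getint("epochs"),
        "batch_size": hyper.getint("batch_size"),
        "learning_rate": hyper.getfloat("learning_rate"),
        # beta is only needed for B-VAE, so it may be absent.
        "beta": hyper.getfloat("beta", fallback=None),
    }
    # Lists and tuples are written as Python literals in the file.
    for key in LIST_KEYS:
        config[key] = ast.literal_eval(arch[key])
        if len(config[key]) != config["conv_layers"]:
            raise ValueError(f"{key} must have {config['conv_layers']} entries")
    return config


config = parse_config(EXAMPLE_CONFIG)
print(config["conv_kernel_sizes"])
```

Checking that every list has `conv_layers` entries catches a common mistake early, before any model is built.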

Variation indicates what kind of Variational Autoencoder you want to train. Right now, the only options are:

- Vanilla VAE:

$ python3 main.py -c <config_file_path> -v VAE

- beta VAE:

$ python3 main.py -c <config_file_path> -v B-VAE

Keep in mind that for the second option, the beta parameter is required in the configuration file (in the section "hyperparameters").
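The command-line interface and the beta requirement described above could be handled along the following lines. This is a sketch using `argparse`, not the actual contents of src/main.py: only the `-c` and `-v` flags and the `VAE` / `B-VAE` values come from this README, while the long option names, `build_parser` and `require_beta` are hypothetical.

```python
import argparse


def build_parser():
    """Command-line parser with the two flags shown in the commands above."""
    parser = argparse.ArgumentParser(description="Train a Variational Autoencoder.")
    # The long names --config and --variation are assumptions for readability.
    parser.add_argument("-c", "--config", required=True,
                        help="path to the configuration file")
    parser.add_argument("-v", "--variation", required=True,
                        choices=["VAE", "B-VAE"],
                        help="which model to train")
    return parser


def require_beta(variation, hyperparameters):
    """Fail early if B-VAE is requested without a beta value."""
    if variation == "B-VAE" and hyperparameters.get("beta") is None:
        raise ValueError('B-VAE requires "beta" in the [hyperparameters] section')


# Example: parse an explicit argument list instead of sys.argv.
args = build_parser().parse_args(["-c", "my_config.ini", "-v", "B-VAE"])
require_beta(args.variation, {"beta": 4.0})
print(args.config, args.variation)
```

Restricting `-v` with `choices` means an unsupported variation is rejected by the parser itself, with a usage message listing the valid options.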

The notebook directory contains a Colab notebook. This is useful for experimentation and testing, as it makes using a GPU much easier.

Keep in mind that the core code remains the same; however, the initialization of values is done manually.

- Papers:

- Online Articles:

- Online Lectures:

- Slides/Presentations:

- Theses: