- Thesis Project

- 1. Introduction

- 1.1. Current Solutions and State of the Art

- 2. Technology

- 3. Analysis Phase

- 4. Development Phase

- 5. How to use

- 6. Appendix

The scope of this thesis project is to develop an app for Hololens 2 that assists users with a vision impairment (partial or complete vision loss) who want to navigate an area unfamiliar to them.

- Assistive Technology Device: any item, piece of equipment, or product system, whether acquired commercially off the shelf, modified, or customized, that is used to increase, maintain, or improve functional capabilities of individuals with disabilities. (Technology-Related Assistance for Individuals with Disabilities Act of 1988)<sup>1</sup>.

- Cane

- Helps the user navigate (alongside tactile paving) and locate obstacles along their route

- Service Dogs

- Pick items up for their owners

- Guide their owners

- Remind their owners to take their medication

- Electronic Mobility Aids

- Use ultrasonic waves to detect obstacles (e.g. Ray Electronic Mobility Aid, UltraCane)

- Guidesense

- Wearable: a belt worn around the user's torso

- Sensor module with high-frequency radar technology

- The signal passes through clothing

- Vibration and/or voice feedback

- Mainly for outdoor use (alerts will be too frequent indoors)

- Tested by 25 visually impaired people

- Wayband

- Wearable (armband)

- Navigates the user along a specific route

- Provides haptic feedback to indicate the correct direction

- NextGuide

- Smart cane

- 1 camera

- 160° FoV

- Obstacle detection

- Haptic feedback (tactile pointer and vibration)

- Different feedback depending on the detected object

- Optional audio cues

- Guidance in the correct direction

- Reads text aloud

- biped

- Wearable: around the neck of the user

- Weight: 900g

- 3 cameras

- 170° FoV

- 15 meters distance

- 6h battery

- Bluetooth connection with headphones

- Companion app

- Detection of high- and low-level obstacles

- Object detection (using autonomous-driving technology)

- One-side detection (for users with hemispatial neglect)

- Prediction of collision risk

- Sound warnings with a spatial effect

- Works during day and night

- Basic GPS feature (uses the smartphone's GPS)

- Cost: 2950€ (one-time payment) / 129€ (monthly payment for 36 months)

- The project started in 2020

- 5 collaborators, 250+ beta testers, 6 prototypes

- It became available in September 2022

- Unitree Go1

- Weight: 12kg

- Speed: 2.5-3.7 m/s

- Super-sensing system

- Intelligent concomitant system

- Side-following system

- Built-in AI

- Cost: 2700$ - 3500$


The technologies (devices and software) used to develop the app are:

- Hololens 2

- We chose an AR device to take advantage of its compact design and of the hardware and software already implemented for it, which made the development process easier.

- Unity

- We chose Unity over JavaScript because of its wide range of documentation, tutorials and examples.

- Mixed Reality Feature Tool

- Mixed Reality Toolkit Foundations (version 2.7.3)

- Mixed Reality Toolkit Standard Assets (version 2.7.3)

- Mixed Reality OpenXR Plugin (version 1.4.0)

- Hololens Emulator

...

...

...

...

...

...

| Voice Command | Description |

|---|---|

Scan |

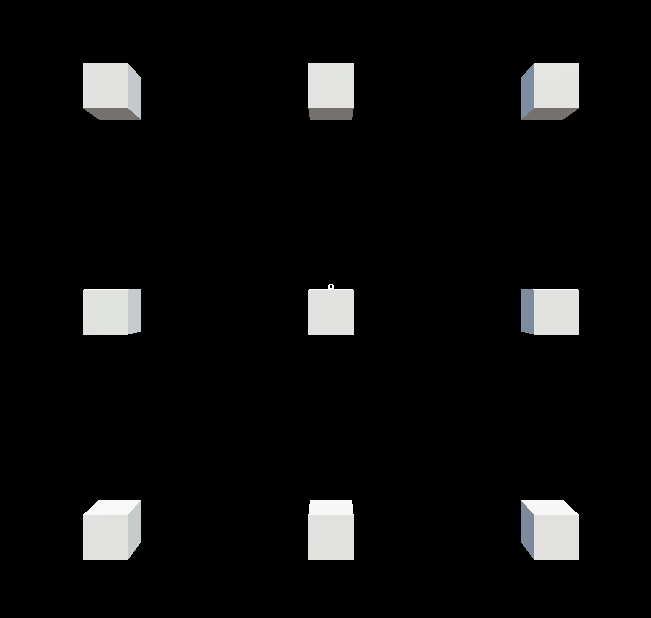

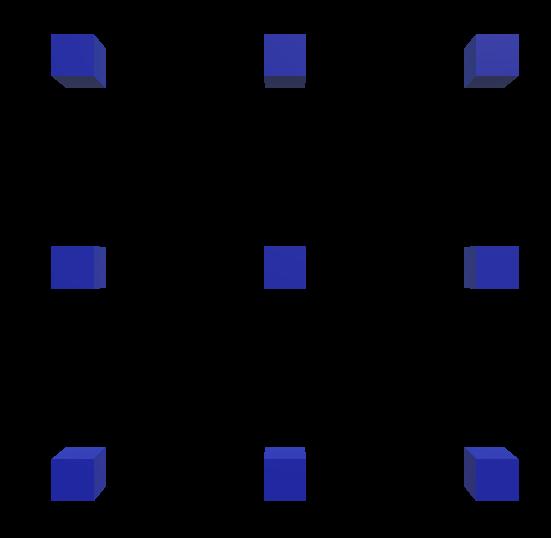

9 rays are casted from multiple positions (Raycasts Origin Point), as they are displayed in the image:The first row is placed at the height of user's gaze. The distance between each Origin Point with its adjacent ones is 0.5 units2. When a ray hits the mesh3 of the room, then an alert box is placed at the hit point in order to alert the user for an obstacle. One alert box correspond to each raycast. If the ray doesn't hit the mesh within 5 units2, then its alert box is deactivated. If the user uses the command again, the alert boxes are moved to the new hit points. Note: If the user turns his gaze, the alert boxes stay in their positions. For the alert boxes to follow the movement of the user's head, use the command Continuous Mode. |

Stop |

All alert boxes are deactivated. |

Continuous Mode |

When this mode is activated, then the rays (which have been selected to be activated for this mode) are casted in each frame. The alert boxes are placed on the hit points. Since the rays are casted in each update, this means that the alert boxes follow the movement of user's head. Using the same command, the mode is deactivated. Note: For now, only one ray is casted in this mode at the height of user's gaze. |

Hands Mode |

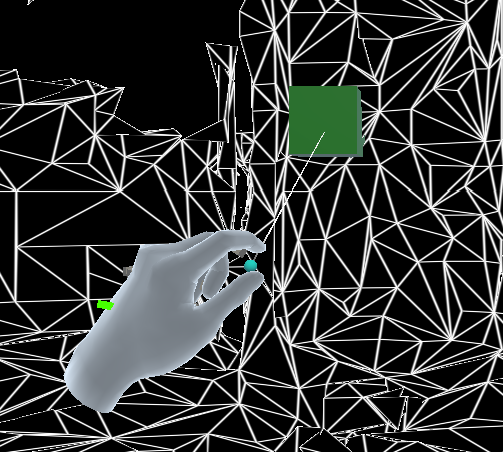

When this mode is activated, then, if the user place his hand in front of him, at the end of the pointer, which extends from his arm and works as a raycast, an alert box is placed in the hit point between the pointer and the mesh3.If no point of the mesh is hit by the pointer, the alert box is deactivated. If the user uses the same command, the mode is deactivated. Note: If the user does the pinch gesture, the sound of the alert box is temporarily muted. If the user "release" the gesture, the sound is unmuted. |
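The raycasting behind the Scan and Continuous Mode commands can be sketched in a few lines. The app itself is a Unity project, where this would normally be handled by the engine's physics raycasts against the spatial mesh; the Python below is only a language-neutral illustration under stated assumptions: the nine origin points form a 3×3 grid aligned with the head, the top row is at gaze height and the rows below are 0.5 units apart, every ray is cast along the (unit-length) gaze direction, and the room mesh is a plain list of triangles tested with the Möller-Trumbore algorithm. The names `origin_points`, `scan` and `continuous_update` are hypothetical.

```python
# Sketch of the Scan and Continuous Mode raycasts (hypothetical, not the app's code).
# Assumptions: 3x3 grid aligned with the head, top row at gaze height, rows and
# columns 0.5 units apart, rays cast along the unit gaze direction, and the room
# mesh given as a plain list of triangles.
from typing import Dict, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]

SPACING = 0.5       # distance between adjacent origin points (units)
MAX_DISTANCE = 5.0  # a ray with no hit within this range deactivates its box
EPS = 1e-9


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3, tri: Triangle) -> Optional[float]:
    """Moller-Trumbore test: distance along the ray to the triangle, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < EPS:  # ray parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > EPS else None


def raycast(origin: Vec3, direction: Vec3, mesh: Sequence[Triangle]) -> Optional[Vec3]:
    """Closest hit point within MAX_DISTANCE, or None (alert box deactivated)."""
    best: Optional[float] = None
    for tri in mesh:
        t = ray_triangle(origin, direction, tri)
        if t is not None and t <= MAX_DISTANCE and (best is None or t < best):
            best = t
    return None if best is None else add(origin, scale(direction, best))


def origin_points(gaze: Vec3, right: Vec3, up: Vec3) -> List[Vec3]:
    """Row 0 at gaze height, rows 1-2 below it; columns left, centre, right."""
    return [add(gaze, add(scale(right, col * SPACING), scale(up, -row * SPACING)))
            for row in range(3) for col in (-1, 0, 1)]


def scan(gaze: Vec3, forward: Vec3, right: Vec3, up: Vec3,
         mesh: Sequence[Triangle]) -> List[Optional[Vec3]]:
    """Scan command: one alert-box position per ray (None = deactivated)."""
    return [raycast(p, forward, mesh) for p in origin_points(gaze, right, up)]


def continuous_update(gaze: Vec3, forward: Vec3, right: Vec3, up: Vec3,
                      mesh: Sequence[Triangle], active=(1,)) -> Dict[int, Optional[Vec3]]:
    """Continuous Mode, called every frame: only the selected rays are cast.
    Default: the centre ray of the gaze-height row (index assumed)."""
    points = origin_points(gaze, right, up)
    return {i: raycast(points[i], forward, mesh) for i in active}
```

For example, with the gaze at (0, 1.6, 0) looking along +z and a wall spanning heights 1 to 2 at z = 3, the six rays of the top two rows hit the wall and the three boxes of the bottom row are deactivated. Calling `continuous_update` every frame with the current head pose is what makes the boxes follow the head.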

- Wear the Hololens 2 headset and launch the app

- On startup, the app scans the environment (in the direction of the user's gaze) to retrieve the mesh (spatial mapping). This scan is repeated every 3.5 seconds.

- The user can use any of the available voice commands.

- It is recommended that the user give the Scan command frequently.
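The command behaviour from the table and the 3.5-second spatial-mapping interval can be modelled as a small state machine. This is a hypothetical Python sketch, not the app's code: `AppState`, `handle_command` and `mesh_refresh_times` are illustrative names, and it assumes that Stop only hides the Scan alert boxes and leaves the two modes unchanged, which the table does not specify.

```python
# Hypothetical sketch of the voice-command behaviour and the mesh-refresh timing.
# Not the app's code: names are illustrative, and it is an assumption that Stop
# only hides the Scan alert boxes without switching either mode off.
from dataclasses import dataclass
from typing import List

MESH_UPDATE_INTERVAL = 3.5  # seconds between spatial-mapping scans (from the text)


@dataclass
class AppState:
    scan_boxes_visible: bool = False
    continuous_mode: bool = False
    hands_mode: bool = False


def handle_command(state: AppState, command: str) -> AppState:
    """Apply one recognised voice command to the state."""
    if command == "Scan":
        state.scan_boxes_visible = True   # boxes (re)placed at the new hit points
    elif command == "Stop":
        state.scan_boxes_visible = False  # alert boxes deactivated
    elif command == "Continuous Mode":
        state.continuous_mode = not state.continuous_mode  # same command toggles it off
    elif command == "Hands Mode":
        state.hands_mode = not state.hands_mode            # same command toggles it off
    else:
        raise ValueError(f"unknown voice command: {command!r}")
    return state


def mesh_refresh_times(frame_times: List[float],
                       interval: float = MESH_UPDATE_INTERVAL) -> List[float]:
    """Frames at which the mesh is refreshed: at startup, then whenever at least
    `interval` seconds have passed since the previous refresh."""
    refreshes: List[float] = []
    for t in frame_times:
        if not refreshes or t - refreshes[-1] >= interval:
            refreshes.append(t)
    return refreshes
```

With frames every 0.5 seconds for 10 seconds, `mesh_refresh_times([i * 0.5 for i in range(21)])` returns `[0.0, 3.5, 7.0]`, so any Scan given in between uses the most recently retrieved mesh.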

- Alert Box: a GameObject with an attached Audio Source that plays a sound to alert the user. In the app, alert boxes are visualized as blue or green cubes.

- (Raycast) Origin Point: the point (coordinates) from which a ray is cast. In the app, it is visualized as a white box.
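An alert box, as defined above and as used in Hands Mode, reduces to a small piece of state: where it is, whether it is active, and whether a pinch has muted its sound. In the app it is a Unity GameObject with an Audio Source; the Python class below only models that state, and its name and methods are hypothetical.

```python
# Hypothetical sketch of an alert box's state (names are illustrative, not the app's).
# In the app this is a Unity GameObject with an Audio Source; here we only model
# where the box is, whether it is active, and whether a pinch has muted it.
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AlertBox:
    position: Optional[Vec3] = None
    active: bool = False
    muted: bool = False

    def update_from_hit(self, hit: Optional[Vec3]) -> None:
        """Move the box to the pointer's hit point, or deactivate it if nothing was hit."""
        if hit is None:
            self.active = False
        else:
            self.position = hit
            self.active = True

    def on_pinch(self) -> None:
        self.muted = True   # pinch held: sound temporarily muted

    def on_release(self) -> None:
        self.muted = False  # pinch released: sound unmuted

    @property
    def audible(self) -> bool:
        """The alert sound plays only while the box is active and not muted."""
        return self.active and not self.muted
```

In Hands Mode, the pointer's raycast result would be passed to `update_from_hit` every frame, while the gesture events would call `on_pinch` and `on_release`.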