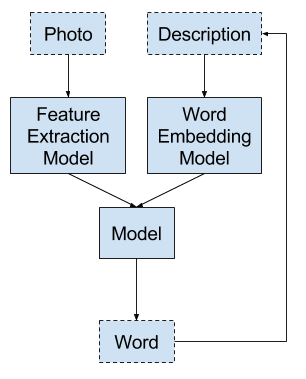

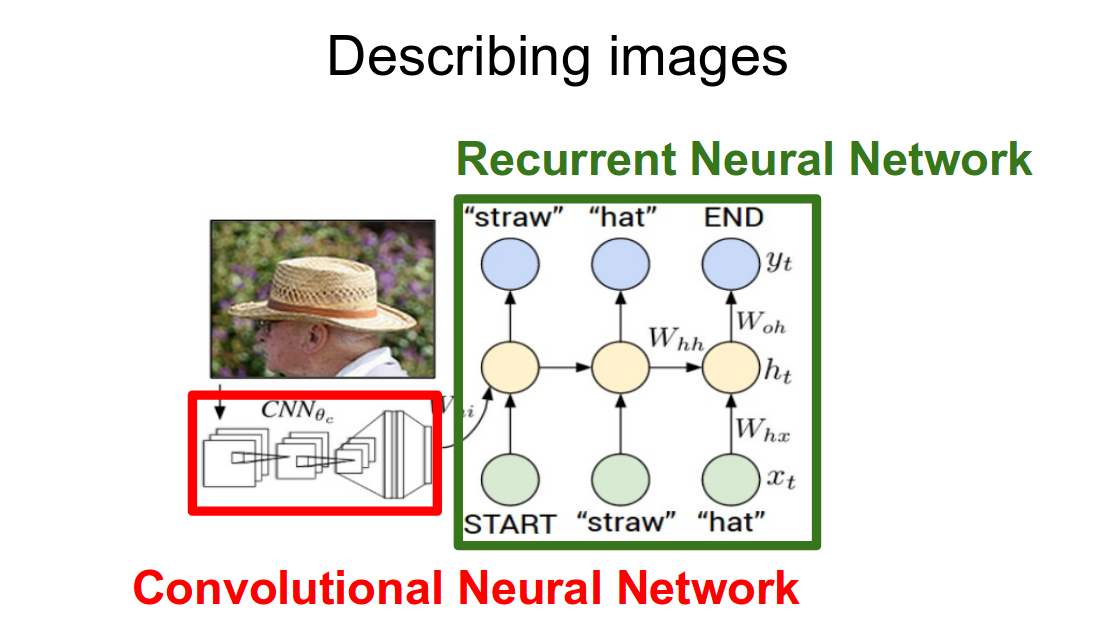

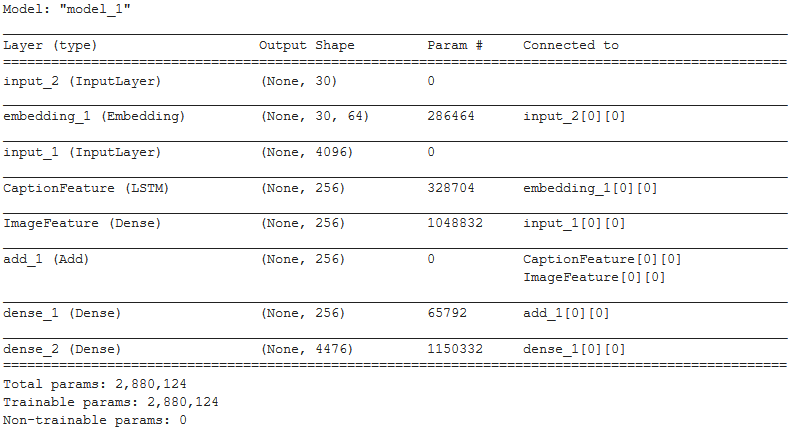

An image caption generator is a model that produces a caption describing the contents of an image. It combines two components: a computer vision model that understands what the image shows, and a natural language processing (NLP) language model that translates that understanding into words.
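The sketch below makes the split between the two models concrete. It uses greedy decoding, one common way to turn image features into a caption: the language model is repeatedly asked for the most likely next word until it emits an end token. The `next_word_probs` callable, the `startseq`/`endseq` markers and the toy probability table are hypothetical stand-ins for a trained model, not the project's actual code.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical signature: given image features and the words generated so far,
# return a probability for each candidate next word.
NextWordModel = Callable[[Sequence[float], List[str]], Dict[str, float]]


def greedy_caption(
    features: Sequence[float],
    next_word_probs: NextWordModel,
    start: str = "startseq",
    end: str = "endseq",
    max_len: int = 20,
) -> str:
    """Build a caption one word at a time, always taking the most likely word."""
    words = [start]
    for _ in range(max_len):
        probs = next_word_probs(features, words)
        best = max(probs, key=probs.get)  # greedy choice
        if best == end:
            break
        words.append(best)
    return " ".join(words[1:])  # drop the start token


# Toy stand-in for a trained language model: a fixed table keyed by the last word.
TOY_TABLE = {
    "startseq": {"a": 0.9, "dog": 0.1},
    "a": {"dog": 0.7, "child": 0.3},
    "dog": {"runs": 0.6, "endseq": 0.4},
    "runs": {"endseq": 0.8, "fast": 0.2},
}


def toy_model(features: Sequence[float], words: List[str]) -> Dict[str, float]:
    # A real model would condition on `features`; this toy ignores them.
    return TOY_TABLE.get(words[-1], {"endseq": 1.0})


print(greedy_caption([0.0] * 4096, toy_model))  # a dog runs
```

Greedy decoding is the simplest strategy; beam search, which keeps several candidate captions at each step, often yields better captions at extra cost.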

Deep learning models have produced strong results on this problem.

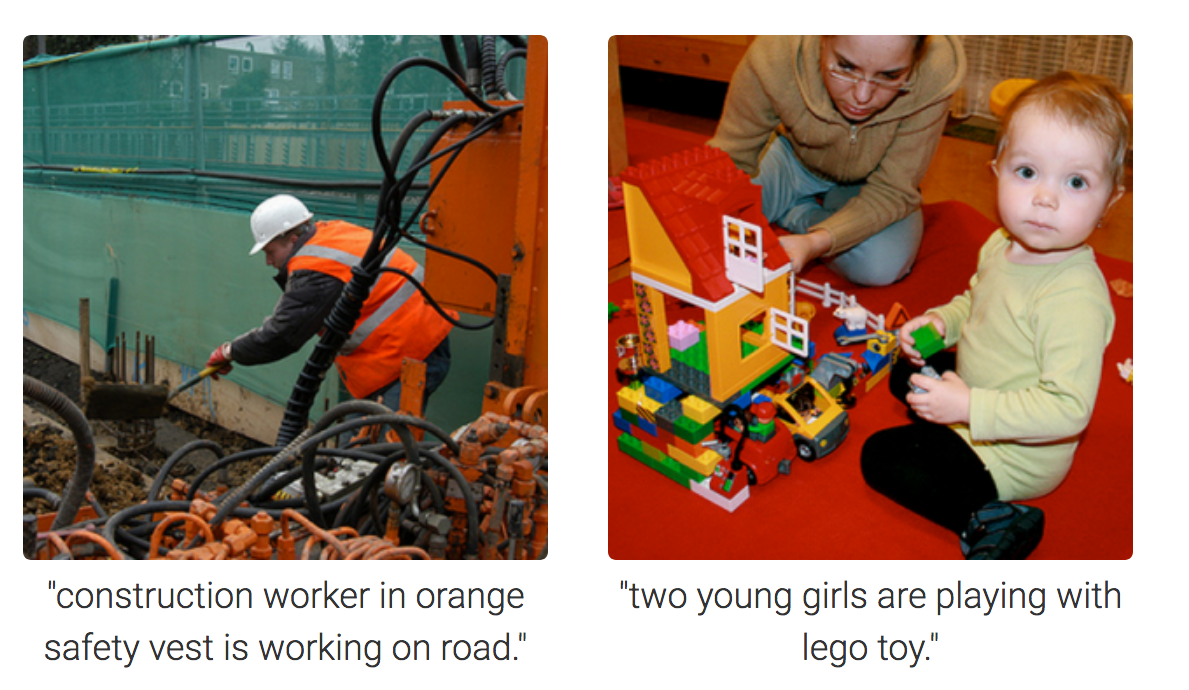

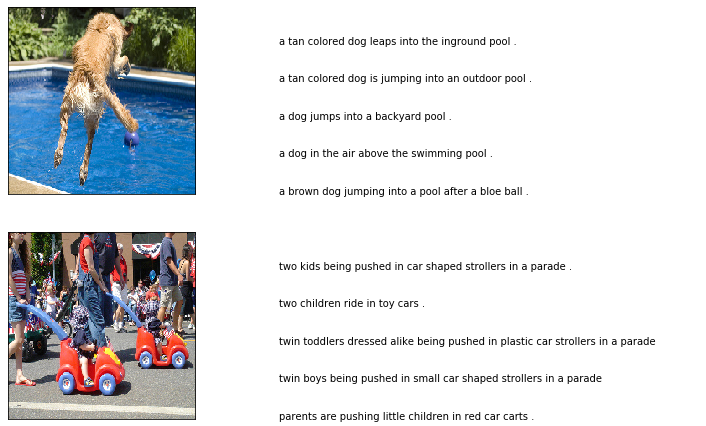

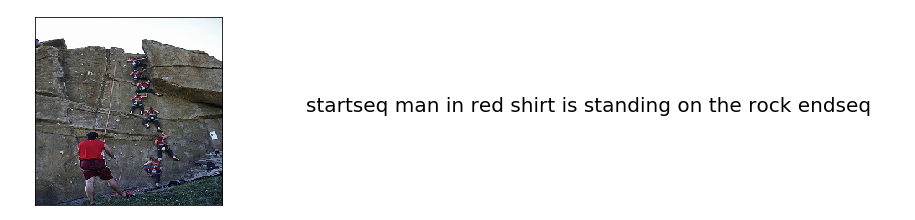

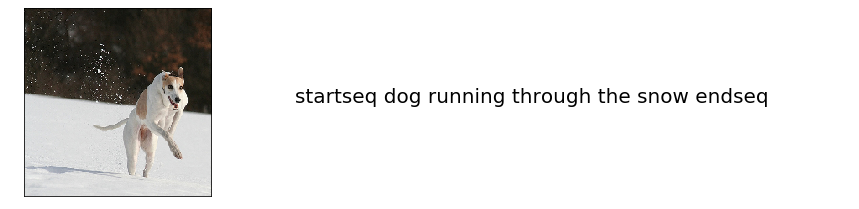

The dataset used is FLICKR_8K, which contains around 8,000 images, each paired with 5 different captions written by different people.
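A minimal sketch of how the captions might be loaded and prepared for training. It assumes each line of the caption file has the form `<image>.jpg#<n>`, a tab, then the caption text (the layout commonly used by the Flickr8k token file); the function names and the `startseq`/`endseq` markers are illustrative choices, not taken from the original project. Each cleaned caption is also split into (words so far, next word) pairs, which is how a next-word language model is typically trained.

```python
import string
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def clean(caption: str) -> str:
    """Lowercase, drop punctuation and non-alphabetic tokens, add start/end markers."""
    table = str.maketrans("", "", string.punctuation)
    words = [w for w in caption.lower().translate(table).split() if w.isalpha()]
    return "startseq " + " ".join(words) + " endseq"


def load_captions(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Group cleaned captions by image ID (assumed format: '<image>.jpg#<n>', tab, caption)."""
    captions: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        line = line.strip()
        if "\t" not in line:
            continue  # skip blank or malformed lines
        key, caption = line.split("\t", 1)
        image_id = key.split("#")[0].rsplit(".", 1)[0]  # '1000_abc.jpg#0' -> '1000_abc'
        captions[image_id].append(clean(caption))
    return dict(captions)


def training_pairs(caption: str) -> List[Tuple[List[str], str]]:
    """Split one caption into (words so far, next word) pairs for the language model."""
    words = caption.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]


sample = [
    "1000_abc.jpg#0\tA child in a pink dress .",
    "1000_abc.jpg#1\tA girl going into a wooden building .",
]
caps = load_captions(sample)
print(caps["1000_abc"][0])  # startseq a child in a pink dress endseq
```

For a real dataset, each of the 5 captions per image would contribute its own set of training pairs, all sharing that image's features.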

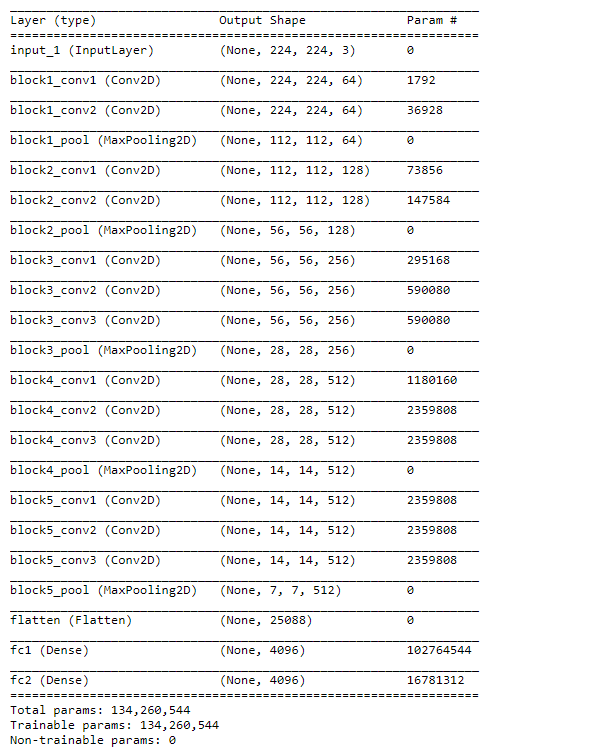

A pre-trained VGG16 model is used to extract features from each image.
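VGG16 expects its input to be preprocessed the same way as its ImageNet training data. The plain-Python sketch below reproduces what the default ("caffe") mode of Keras's `tensorflow.keras.applications.vgg16.preprocess_input` does: channels are reordered from RGB to BGR and the per-channel ImageNet means are subtracted, with no scaling. It assumes the image has already been resized to 224x224. The original does not say which layer the features come from; a common choice in Keras is to drop the final classification layer and use the 4096-value output of the `fc2` layer as the image's feature vector.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B) on input, (B, G, R) on output; 0-255 scale

# Per-channel ImageNet means in B, G, R order, as used by the Caffe-style
# preprocessing that VGG16 was trained with.
BGR_MEANS = (103.939, 116.779, 123.68)


def vgg16_preprocess(image: List[List[Pixel]]) -> List[List[Pixel]]:
    """Convert RGB pixels to BGR and subtract the ImageNet channel means.

    Assumes the image is already resized to 224x224 (not checked here).
    """
    out = []
    for row in image:
        new_row = []
        for r, g, b in row:
            new_row.append((b - BGR_MEANS[0], g - BGR_MEANS[1], r - BGR_MEANS[2]))
        out.append(new_row)
    return out


# A pixel equal to the mean colour maps to zero in every channel.
print(vgg16_preprocess([[(123.68, 116.779, 103.939)]]))  # [[(0.0, 0.0, 0.0)]]
```

In practice the library function would be applied to a NumPy array of the whole batch; the loop form here only spells out the arithmetic.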

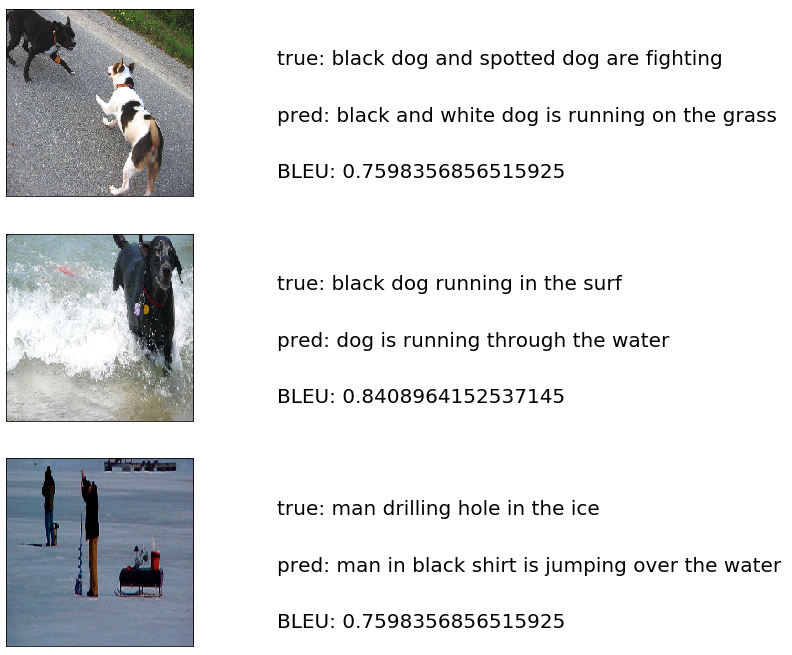

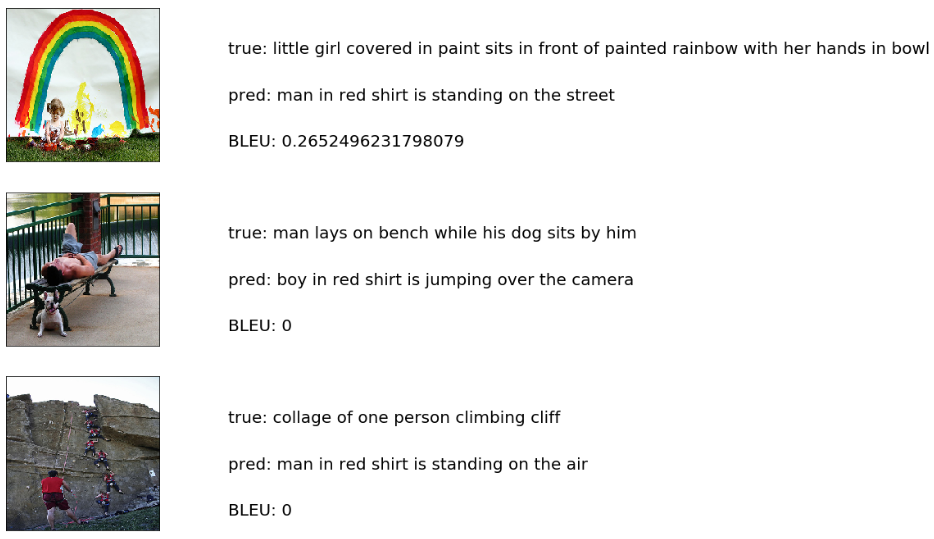

The model successfully generates captions for images. Its performance could be improved further through hyperparameter tuning.
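One straightforward way to carry out such tuning is an exhaustive grid search: train and score the model for every combination of candidate values and keep the best. In the sketch below, the search space (`GRID`) and `fake_evaluate` are hypothetical placeholders; in practice the evaluation function would train the caption model with the given settings and score it on held-out images (for example with BLEU).

```python
import itertools
from typing import Callable, Dict, List, Tuple

Config = Dict[str, float]


def grid_search(
    grid: Dict[str, List[float]],
    evaluate: Callable[[Config], float],
) -> Tuple[Config, float]:
    """Try every combination in `grid`; return the one with the highest score."""
    names = sorted(grid)
    best_config: Config = {}
    best_score = float("-inf")
    for values in itertools.product(*(grid[n] for n in names)):
        config = dict(zip(names, values))
        score = evaluate(config)  # higher is better
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Hypothetical search space; the original does not list which values were tried.
GRID = {"learning_rate": [1e-2, 1e-3, 1e-4], "dropout": [0.0, 0.5]}


def fake_evaluate(config: Config) -> float:
    # Stand-in for "train, then score on validation data"; peaks at lr=1e-3, dropout=0.5.
    return -abs(config["learning_rate"] - 1e-3) * 100 - abs(config["dropout"] - 0.5)


best, score = grid_search(GRID, fake_evaluate)
print(best)  # {'dropout': 0.5, 'learning_rate': 0.001}
```

The number of training runs grows multiplicatively with each added hyperparameter, so random search is often preferred when the search space is large.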