This repository contains the code and data of Bi-link and Zeroshot Entity Linking for Fandomwiki.

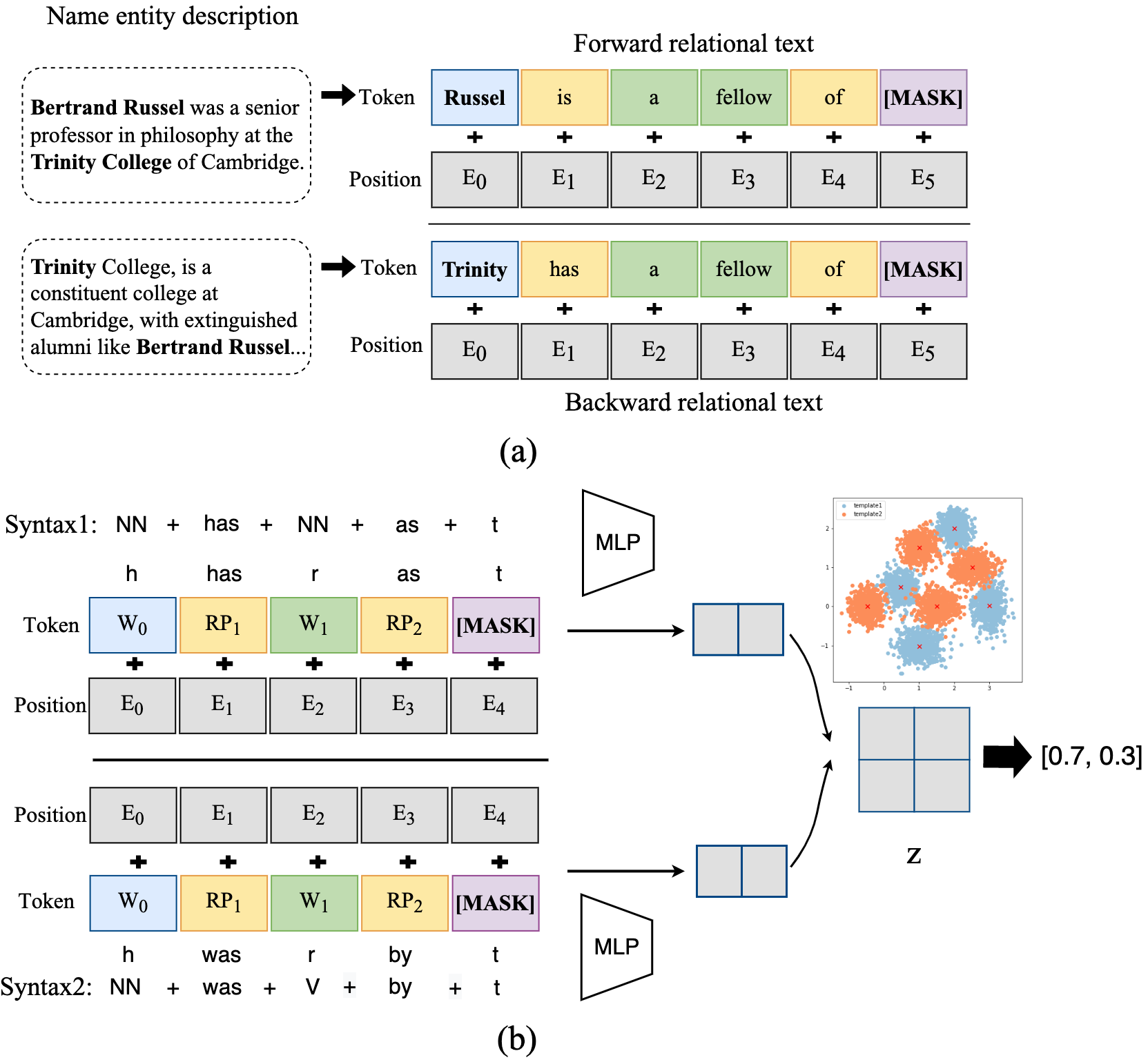

We propose Bi-Link, a contrastive learning framework with probabilistic syntax prompts for link predictions. Using grammatical knowledge of BERT, we efficiently search for relational prompts according to learnt syntactic patterns that generalize to large knowledge graphs. To better express symmetric relations, we design a symmetric link prediction model, establishing bidirectional linking between forward prediction and backward prediction. This bidirectional linking accommodates flexible self-ensemble strategies at test time. In our experiments, Bi-Link outperforms recent baselines on link prediction datasets (WN18RR, FB15K-237, and Wikidata5M).

*** UPDATE ***

We are now merging Bi-link and Zeroshot Entity Linking for Fandomwiki into the current repository. We have also uploaded our zero-shot Fandomwiki dataset.

- Knowledge graphs and hierarchical templates are in the data folder.

- Fandomwiki dataset is available here

Bi-link (PyTorch):

Zeroshot Entity Linking on Fandomwiki (PyTorch):

- Bi-encoders for entity linking via contrastive learning

- NSP: Next Sentence Prediction

- Bi-link

In Bi-link, we first train a probabilistic syntax prompt search model on the relational data (triples) of knowledge graphs. With the resulting syntactic prompts, we link each triple into a sentence and use pretrained language models (PLMs) for link prediction.
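The step of linking a triple into a sentence can be illustrated with a minimal sketch. The templates, relation names, and the `verbalize` helper below are hypothetical and hand-written for illustration; in Bi-link the prompts come from the learned prompt search model, not from a fixed dictionary. The forward and backward templates mirror the two prediction directions.

```python
from typing import Dict, NamedTuple


class Triple(NamedTuple):
    head: str
    relation: str
    tail: str


# Hypothetical hand-written prompts (assumption: the real prompts are searched, not fixed).
FORWARD_TEMPLATES: Dict[str, str] = {
    "hypernym": "{head} is a kind of {tail}.",
    "member_of": "{head} is a member of {tail}.",
}
BACKWARD_TEMPLATES: Dict[str, str] = {
    "hypernym": "{tail} has the subtype {head}.",
    "member_of": "{tail} has the member {head}.",
}
# Fallback used when no prompt is known for a relation.
DEFAULT_TEMPLATE = "{head} {relation} {tail}."


def verbalize(triple: Triple, templates: Dict[str, str]) -> str:
    """Turn a (head, relation, tail) triple into a sentence a PLM can score."""
    template = templates.get(triple.relation, DEFAULT_TEMPLATE)
    return template.format(
        head=triple.head,
        relation=triple.relation.replace("_", " "),
        tail=triple.tail,
    )


if __name__ == "__main__":
    t = Triple("dog", "hypernym", "animal")
    print(verbalize(t, FORWARD_TEMPLATES))
    print(verbalize(t, BACKWARD_TEMPLATES))
```

The resulting sentences would then be encoded by the PLM; that part is omitted here.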

- Zeroshot Entity Linking on Fandomwiki

We first use tf-idf to retrieve the top 64 candidate documents for the mention in the context of the entity description. For the cross-encoder baseline, which can be viewed as a binary classification task, we label the gold document as true and the remaining 63 documents as false. For the contrastive learning method, we treat the mention and its reference document as a positive pair and the remaining 63 candidate documents as negative examples. Hyper-parameter tuning experiments are described in the submitted paper.
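The retrieval and labeling scheme described above can be sketched in plain Python. This is a simplified illustration, not the code in this repository: the whitespace tokenizer, the smoothed idf formula, and all function names are assumptions. It shows how one candidate list yields binary labels for the cross-encoder and a positive index for contrastive learning.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def tokenize(text: str) -> List[str]:
    # Assumption: lowercase whitespace tokenization, for illustration only.
    return text.lower().split()


def build_tfidf(docs: Dict[str, str]) -> Tuple[Dict[str, float], Dict[str, Vector]]:
    """Compute smoothed idf weights and a tf-idf vector for every document."""
    counts = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}
    doc_freq: Counter = Counter()
    for term_counts in counts.values():
        doc_freq.update(term_counts.keys())
    n_docs = len(docs)
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    vectors = {d: {t: c * idf[t] for t, c in tc.items()} for d, tc in counts.items()}
    return idf, vectors


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(context: str, idf: Dict[str, float], vectors: Dict[str, Vector], k: int = 64) -> List[str]:
    """Return the ids of the k documents most similar to the mention context."""
    query = {t: c * idf[t] for t, c in Counter(tokenize(context)).items() if t in idf}
    ranked = sorted(vectors, key=lambda d: cosine(query, vectors[d]), reverse=True)
    return ranked[:k]


def binary_labels(candidates: List[str], gold: str) -> List[int]:
    """Cross-encoder view: the gold document is 1, every other candidate is 0."""
    return [1 if c == gold else 0 for c in candidates]


def contrastive_target(candidates: List[str], gold: str) -> int:
    """Contrastive view: index of the positive; the other candidates are negatives.

    Raises ValueError if the gold document was not retrieved.
    """
    return candidates.index(gold)
```

With `k=64`, `binary_labels` produces one true and 63 false labels, and `contrastive_target` gives the position of the positive among the 63 negatives, provided the gold document is among the retrieved candidates.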

- Bi-link

- Zeroshot Entity Linking on Fandomwiki

- Install PyTorch

- Clone the master branch:

git clone -b master https://github.com/pengbohua/EntityLinkingForFandom --depth 1

cd EntityLinkingForFandom

- Prepare dataset for entity linking

cd entity_linking

unzip fandomwiki_data.zip

- Quick Start

- For NSP Zeroshot Entity Linking on Fandomwiki

cd entity_linking

bash run_nsp.sh

- For CL Zeroshot Entity Linking on Fandomwiki

cd entity_linking

bash run_cl.sh

- For Bi-link

cd bi-link

bash eval.sh

The whole dataset contains three directories:

- documents: contains entities' documents on 16 fields in fandomwiki

- mentions: contains train.json, valid.json, test.json. Each JSON file describes the entity linked to a mention in another entity's description.

- tfidf_candidates: contains train_tfidfs.json, valid_tfidfs.json, test_tfidfs.json. Each JSON file contains a given entity mention and its top 64 tf-idf retrieved results.
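After unzipping, the layout listed above can be checked with a short script. This is a sketch under assumptions: the name of the extracted root directory is not stated here, so it is passed in as an argument, and the `missing_dataset_paths` helper is hypothetical rather than part of this repository. Only the directory and file names come from the list above.

```python
from pathlib import Path
from typing import List

# Directory and file names taken from the dataset description above.
EXPECTED_DIRS = ["documents", "mentions", "tfidf_candidates"]
EXPECTED_FILES = [
    "mentions/train.json",
    "mentions/valid.json",
    "mentions/test.json",
    "tfidf_candidates/train_tfidfs.json",
    "tfidf_candidates/valid_tfidfs.json",
    "tfidf_candidates/test_tfidfs.json",
]


def missing_dataset_paths(root: str) -> List[str]:
    """Return the expected directories and files that are absent under root."""
    base = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (base / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (base / f).is_file()]
    return missing


if __name__ == "__main__":
    import sys

    # Assumption: the extracted dataset root is given as the first argument.
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    for path in missing_dataset_paths(root):
        print(f"missing: {path}")
```

An empty result means every listed directory and JSON file is present; the contents of the files are not validated.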