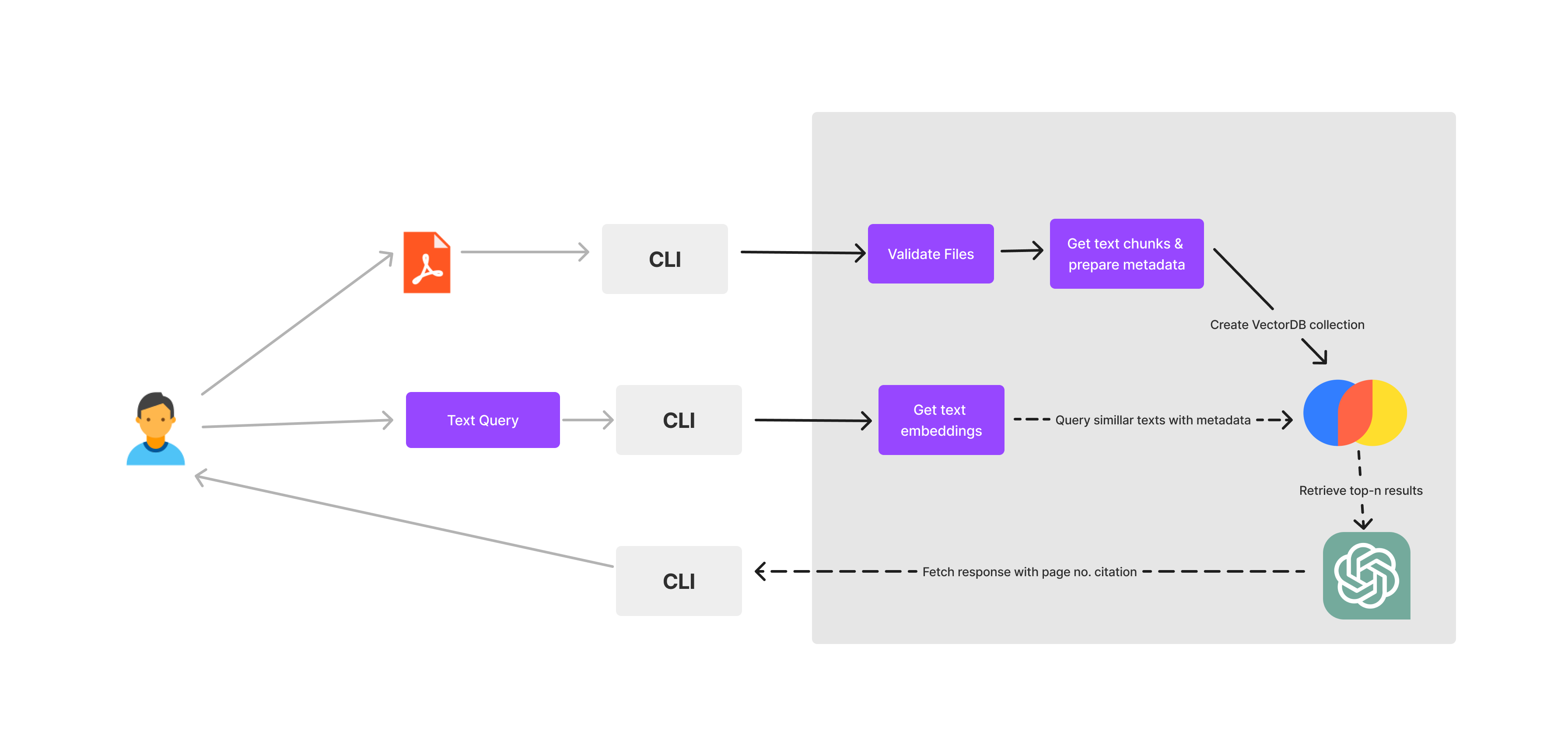

Interact with any PDF file from the terminal without using LangChain or LlamaIndex. You do not always need a framework like LangChain; this demo shows how to build a simple CLI chatbot without relying on LLM frameworks.

- Python Argparse for CLI

- ChromaDB as vector database

- OpenAI GPT-3.5 Turbo

https://www.analyticsvidhya.com/blog/2023/09/how-to-build-a-pdf-chatbot-without-langchain/
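Working without a framework mostly means doing the text splitting by hand. As a rough illustration of the word-based chunking that the `-v` option controls (200 words by default), a minimal sketch could look like the following. The helper name `chunk_words` is hypothetical and not taken from the repository, and the real splitting logic may differ.

```python
def chunk_words(text: str, words_per_chunk: int = 200) -> list[str]:
    """Split text into consecutive chunks of at most `words_per_chunk` words.

    Hypothetical helper for illustration; the project's own code may differ.
    """
    if words_per_chunk <= 0:
        raise ValueError("words_per_chunk must be a positive integer")

    # Splitting on whitespace collapses newlines and repeated spaces,
    # which is usually acceptable for text extracted from a PDF.
    words = text.split()

    # Walk the word list in fixed-size steps; the last chunk may be shorter.
    return [
        " ".join(words[start:start + words_per_chunk])
        for start in range(0, len(words), words_per_chunk)
    ]
```

Smaller chunks give more precise matches but less context per match, which is why retrieving several chunks per question can help.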

- Python 3.11+

- OpenAI API key

- Clone the repository:

git clone https://github.com/AnthonyRonning/pdf-cli-chatbot.git

cd pdf-cli-chatbot

- Create and activate a virtual environment:

python -m venv .venv

source .venv/bin/activate # On Windows: .venv\Scripts\activate

- Install dependencies:

pip install -r requirements.txt

- Set up your OpenAI API key:

cp .env.example .env

# Edit .env and add your OpenAI API key

Load a PDF:

python cli.py -f path/to/your/document.pdf

Optional: Customize chunk size (default is 200 words):

python cli.py -f document.pdf -v 300

Ask a question about the loaded PDF:

python cli.py -q "What is the main topic of this document?"

Get more context by fetching multiple relevant chunks:

python cli.py -q "Explain the methodology used in the study" -n 5

Clear the existing collection (use when switching PDFs):

python cli.py -c True

# Load a PDF

python cli.py -f bitcoin.pdf

# Ask questions

python cli.py -q "What problem does Bitcoin solve?" -n 3

python cli.py -q "How does the proof-of-work system work?" -n 5

# Clear collection before loading a new PDF

python cli.py -c True

# Load a different PDF

python cli.py -f research_paper.pdf

# Ask questions about the new PDF

python cli.py -q "What are the key findings?" -n 3

- -f, --file: Path to the PDF file to load

- -q, --question: Question to ask about the loaded PDF

- -n, --number: Number of relevant chunks to retrieve (default: 1)

- -v, --value: Words per chunk when processing PDF (default: 200)

- -c, --clear: Clear the existing collection (use when switching PDFs)
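For readers curious how these options map onto Python's `argparse`, here is a minimal sketch of a parser with the same flags and defaults. It is an assumption about the shape of the CLI, not the repository's actual `cli.py`; `build_parser` and `parse_bool` are hypothetical names. The `-c` flag is modeled as taking an explicit value because the examples call it as `-c True`.

```python
import argparse


def parse_bool(text: str) -> bool:
    """Convert 'True'/'False' style strings to a bool (assumed behavior for -c).

    A plain `type=bool` would treat every non-empty string, including
    'False', as True, so the conversion is spelled out here.
    """
    lowered = text.strip().lower()
    if lowered in {"true", "1", "yes"}:
        return True
    if lowered in {"false", "0", "no"}:
        return False
    raise argparse.ArgumentTypeError(f"expected True or False, got {text!r}")


def build_parser() -> argparse.ArgumentParser:
    """Build a parser mirroring the documented options (illustrative only)."""
    parser = argparse.ArgumentParser(
        description="Chat with a PDF from the terminal"
    )
    parser.add_argument("-f", "--file", help="Path to the PDF file to load")
    parser.add_argument(
        "-q", "--question", help="Question to ask about the loaded PDF"
    )
    parser.add_argument(
        "-n", "--number", type=int, default=1,
        help="Number of relevant chunks to retrieve (default: 1)",
    )
    parser.add_argument(
        "-v", "--value", type=int, default=200,
        help="Words per chunk when processing PDF (default: 200)",
    )
    parser.add_argument(
        "-c", "--clear", type=parse_bool, default=False,
        help="Clear the existing collection (use when switching PDFs)",
    )
    return parser
```

Calling `build_parser().parse_args(["-f", "bitcoin.pdf", "-v", "300"])` returns a namespace with `file`, `question`, `number`, `value`, and `clear` attributes that the rest of a script could dispatch on.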