Agent-based-simulation project. This project simulates a house containing obstacles, dirt, a robot, and several babies on the floor, all of whom can generate dirt over time. The robot is an agent whose task is to clean the house, pick up the babies, and leave them in a corral. The environment can change randomly every t units of time. Full specs and details about the environment, its elements, and the robot are available in Spanish in the doc folder.
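As a rough illustration of the setup described above, and not the project's actual code, the following sketch models the house as a grid and re-randomizes it every t turns. The names `Cell`, `make_grid`, and `run` are hypothetical, and the sketch assumes that a random variation simply reshuffles every element; the real rules are the ones described in the doc folder.

```python
import random
from enum import Enum


class Cell(Enum):
    """Hypothetical cell contents for the house grid."""
    EMPTY = "."
    OBSTACLE = "#"
    DIRT = "*"
    BABY = "b"
    ROBOT = "R"
    CORRAL = "C"


def make_grid(rows, cols, contents, rng):
    """Place the given elements at random positions on an otherwise empty grid."""
    if len(contents) > rows * cols:
        raise ValueError("more elements than cells")
    cells = list(contents) + [Cell.EMPTY] * (rows * cols - len(contents))
    rng.shuffle(cells)
    return [cells[r * cols:(r + 1) * cols] for r in range(rows)]


def run(rows, cols, contents, t, turns, seed=0):
    """Yield (turn, grid) pairs, re-randomizing the grid every t turns."""
    rng = random.Random(seed)
    grid = make_grid(rows, cols, contents, rng)
    for turn in range(1, turns + 1):
        if turn % t == 0:
            # Assumption: a random variation reshuffles all existing elements.
            elements = [c for row in grid for c in row if c is not Cell.EMPTY]
            grid = make_grid(rows, cols, elements, rng)
        yield turn, grid
```

A caller could iterate over `run(5, 5, [Cell.ROBOT, Cell.BABY, Cell.CORRAL], t=3, turns=10)` and print each grid to watch the layout change every third turn.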

To use the project, clone it or download it to your local computer.

Python 3.7.2 is required.

To run the project, simply open the console from the root location of the project and execute:

python main.py

To configure the simulation with the available parameters, run the main script with the arguments listed by:

python main.py --help

The same command also explains how to pass the parameters.

To see what happens in every turn of the simulation, run the program with the -i or --interactive arg:

python main.py -i

The program will offer you instructions. Here is an example of an interactive simulation at its start and at its end:

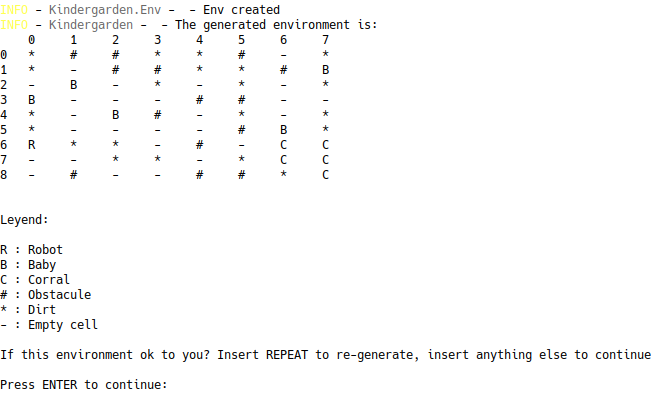

Start:

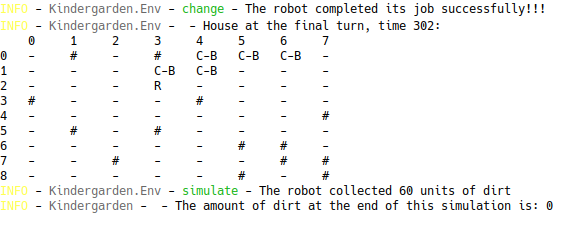

End:

Two types of agents were implemented: a simple-reflex agent and a goal-based agent. By default, the simple-reflex agent is used for the simulation. To simulate with the goal-based agent, run the program with the -p option (python main.py --help explains how to pass it).
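To make the difference between the two agent types concrete, here is a minimal sketch that is not taken from the project's code. A simple-reflex agent maps the current percept straight to an action, while a goal-based agent chooses a goal and plans toward it. The percept keys, action names, grid symbols, and the 4-directional movement used here are all assumptions made for illustration; the actual design is explained in doc/Informe.pdf.

```python
from collections import deque


def reflex_action(percept):
    """Simple-reflex agent: condition-action rules on the current percept only."""
    if percept["carrying_baby"] and percept["on_corral"]:
        return "drop"
    if percept["on_baby"] and not percept["carrying_baby"]:
        return "pick_up"
    if percept["on_dirt"] and not percept["carrying_baby"]:
        return "clean"
    return "move"


def next_step(grid, start, goal_symbol):
    """Goal-based agent: breadth-first search to the nearest goal cell.

    Returns the first step of a shortest path from start, or None if no
    cell containing goal_symbol is reachable. "#" marks an obstacle.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    parent = {start: None}
    while queue:
        pos = queue.popleft()
        r, c = pos
        if pos != start and grid[r][c] == goal_symbol:
            # Walk back along the path until the cell adjacent to start.
            while parent[pos] != start:
                pos = parent[pos]
            return pos
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            inside = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if inside and nxt not in parent and grid[nxt[0]][nxt[1]] != "#":
                parent[nxt] = pos
                queue.append(nxt)
    return None
```

The reflex rules never look beyond the current cell, so they are cheap but can wander. The search-based step costs more per turn but always heads toward the nearest reachable goal.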

To run a suite of simulations, configure the sets of initial environments and the number of simulations in the simulate.sh script. Modify the simulation array to change the sets.

The results can be found in results/results.json. The data collected by a simulation is:

- Task completed by agent (True or False)
- Percentage of dirt at the end of this simulation
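The collected data can be summarized with a few lines of Python. The sketch below assumes that the results file holds a list of records, one per simulation, with the hypothetical keys `completed` and `dirt_percentage`. The real layout of results/results.json may differ, so the key names should be adapted after inspecting the file.

```python
import json
from statistics import mean


def load_records(path="results/results.json"):
    """Load the results file (assumed here to contain a list of records)."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def summarize(records):
    """Aggregate completion rate and mean final dirt over all simulations.

    Each record is assumed to look like
    {"completed": bool, "dirt_percentage": float}; these keys are hypothetical.
    """
    if not records:
        return {"simulations": 0, "completed": 0,
                "completion_rate": 0.0, "mean_dirt_percentage": 0.0}
    completed = sum(1 for r in records if r["completed"])
    return {
        "simulations": len(records),
        "completed": completed,
        "completion_rate": completed / len(records),
        "mean_dirt_percentage": mean(r["dirt_percentage"] for r in records),
    }
```

Calling `summarize(load_records())` from the project root would then give a single summary for the whole suite.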

Check doc/Informe.pdf to see a full explanation of the ideas used to build the agents and the environment. The document is in Spanish.

- Miguel Tenorio Potrony -------> AntiD2ta

This project is licensed under the MIT License. See the LICENSE.md file for details.