If you use this code in any context, please cite the following paper:

@misc{oreshkin2022motion,

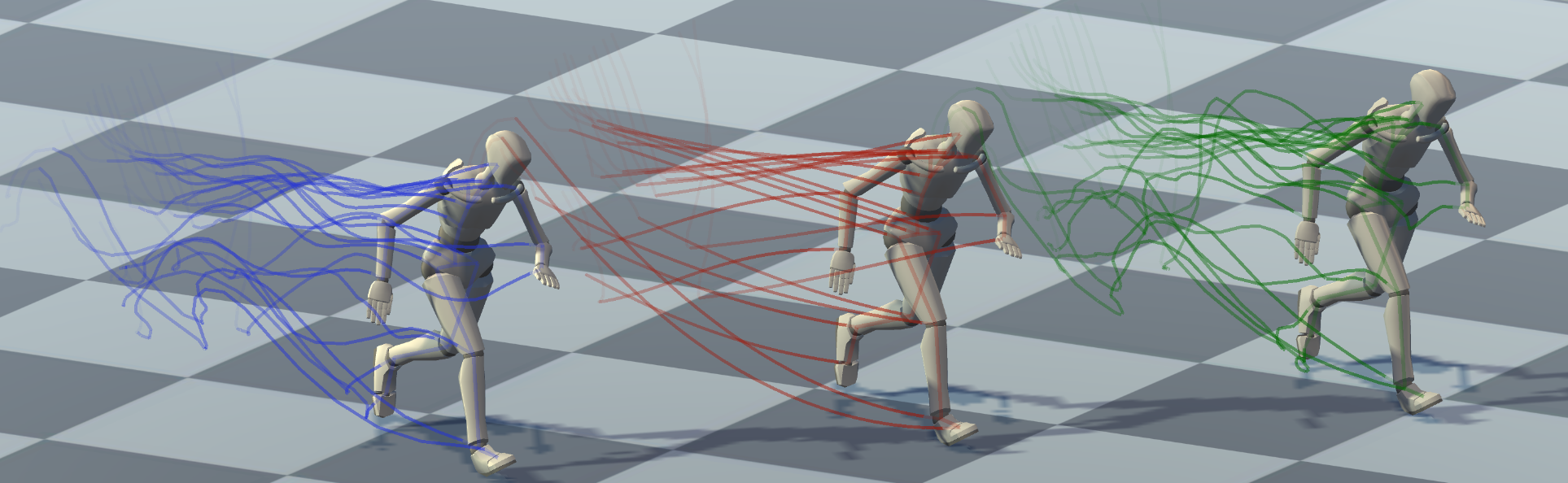

title={Motion Inbetweening via Deep $\Delta$-Interpolator},

author={Boris N. Oreshkin and Antonios Valkanas and Félix G. Harvey and Louis-Simon Ménard and Florent Bocquelet and Mark J. Coates},

year={2022},

eprint={2201.06701},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

mkdir workspace

cd workspace

git clone https://github.com/boreshkinai/delta-interpolator

cd delta-interpolator

docker build -f Dockerfile -t delta_interpolator:$USER .

nvidia-docker run -p 8888:8888 -p 6006:6006 -v ~/workspace/delta-interpolator:/workspace/delta-interpolator -t -d --shm-size="1g" --name delta_interpolator_$USER delta_interpolator:$USER

docker exec -i -t delta_interpolator_$USER /bin/bash

Once inside the Docker container, the following command launches a training session for the proposed model. Checkpoints and TensorBoard logs are stored in ./logs/lafan/transformer

python run.py --config=src/configs/transformer.yaml
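To check that training is actually writing logs, a small script along these lines may help. It is only a sketch: it assumes the logs are standard TensorBoard event files (which are named `events.out.tfevents.*`), and the helper names `find_event_files` and `summarize_logs` are hypothetical, not part of this repository.

```python
from pathlib import Path


def find_event_files(log_dir):
    """Return TensorBoard event files found under log_dir, oldest first."""
    root = Path(log_dir)
    if not root.is_dir():
        return []
    # TensorBoard writers name their files "events.out.tfevents.<suffix>".
    files = [p for p in root.rglob("events.out.tfevents.*") if p.is_file()]
    return sorted(files, key=lambda p: p.stat().st_mtime)


def summarize_logs(log_dir="./logs/lafan/transformer"):
    """Print each event file with its size and return how many were found."""
    files = find_event_files(log_dir)
    for path in files:
        print(f"{path}  ({path.stat().st_size} bytes)")
    if not files:
        print(f"No TensorBoard event files found under {log_dir}")
    return len(files)
```

Calling `summarize_logs()` from the repository root inspects the default log directory named above. If TensorBoard is installed in the container (an assumption), `tensorboard --logdir ./logs/lafan/transformer --port 6006 --bind_all` should serve the same logs on port 6006, which the `docker run` command publishes.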

The following commands evaluate the interpolator and zero-velocity baselines on LaFAN1:

python run.py --config=src/configs/interpolator.yaml

python run.py --config=src/configs/zerovel.yaml

To run the Anidance benchmark experiments, use:

python run.py --config=src/configs/transformer_infill.yaml

For the interpolator and zero-velocity baselines on Anidance, run:

python run.py --config=src/configs/interpolator_anidance.yaml

python run.py --config=src/configs/zerovel_anidance.yaml
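The runs listed above can also be launched one after another from a single script. The sketch below is one possible way to do that, not something shipped with the repository. The config paths are copied from the commands above, while `build_command` and `run_all` are hypothetical helper names. It defaults to a dry run that only prints the commands.

```python
import subprocess
import sys

# Config paths copied from the commands in this README.
LAFAN1_CONFIGS = [
    "src/configs/transformer.yaml",
    "src/configs/interpolator.yaml",
    "src/configs/zerovel.yaml",
]
ANIDANCE_CONFIGS = [
    "src/configs/transformer_infill.yaml",
    "src/configs/interpolator_anidance.yaml",
    "src/configs/zerovel_anidance.yaml",
]


def build_command(config_path):
    """Build the run.py invocation for one config, using the current interpreter."""
    return [sys.executable, "run.py", f"--config={config_path}"]


def run_all(configs, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    commands = [build_command(c) for c in configs]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the sequence at the first failing run.
            subprocess.run(cmd, check=True)
    return commands
```

For example, `run_all(ANIDANCE_CONFIGS, dry_run=False)` executed from the repository root inside the container would run the three Anidance experiments in sequence.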

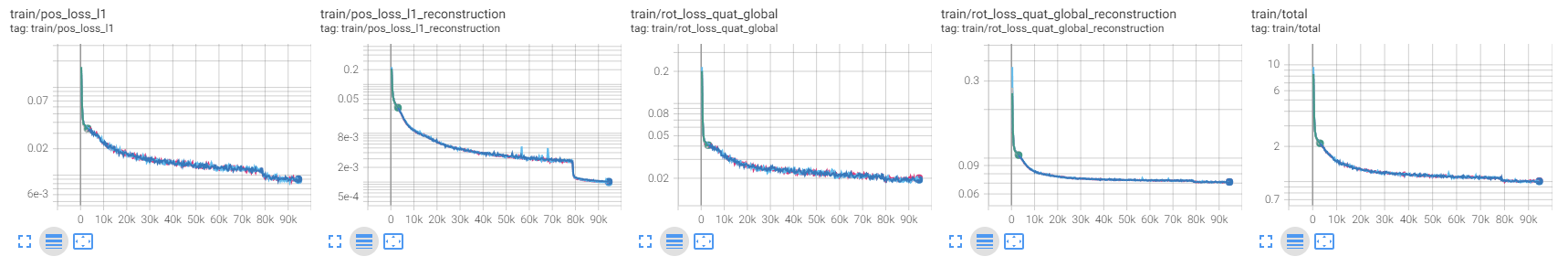

Training losses eveolve as follows:

The LaFAN1 results notebook is available at:

http://your_server_ip:18888/notebooks/LaFAN1Results.ipynb

The notebook password is `default`.

The pretrained model can be downloaded from:

https://storage.googleapis.com/delta-interpolator/pretrained_model.zip
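As a convenience, the archive can be fetched and unpacked with the Python standard library. This is a minimal sketch under stated assumptions: the helper names `download` and `extract` are hypothetical, and the README does not say where the code expects the extracted files, so the destination directory is left to the caller.

```python
import urllib.request
import zipfile
from pathlib import Path

# URL taken from this README.
MODEL_URL = "https://storage.googleapis.com/delta-interpolator/pretrained_model.zip"


def download(url, archive_path):
    """Download url to archive_path, creating parent directories as needed."""
    archive_path = Path(archive_path)
    archive_path.parent.mkdir(parents=True, exist_ok=True)
    urllib.request.urlretrieve(url, str(archive_path))
    return archive_path


def extract(archive_path, dest_dir):
    """Unpack a zip archive into dest_dir and return the names of its members."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive_path) as zf:
        zf.extractall(dest)
        return zf.namelist()
```

A typical call would be `extract(download(MODEL_URL, "pretrained_model.zip"), "pretrained")`, where `pretrained` is only a placeholder directory name. Inspect the returned member list to see what the archive contains before pointing a config at it.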