This repository contains the code for reinforcement learning (RL) agents that use logic.

Install the requirements:

```
pip install -r requirements.txt
```

Then, from the nsfr folder:

```
pip install -e .  # installs in dev mode
```

You also need to install Qt 5 for Threefish and Loot:

```
apt-get install qt5-default
```

Example of playing with a trained PPO agent:

```
python3 play.py -s 0 -alg ppo -m getout -env getout
```

The trained models can be found in the folders models/getout and models/threefish.

Example of training a logic agent on the getout environment with the 'getout_human_assisted' rules:

```
python3 train.py -s 0 -alg logic -m getout -env getout -r 'getout_human_assisted'
```

Models are saved to the folder src/checkpoints.

Models that you want to run should be moved to the folder src/models.

Description of arguments

- --algorithm -alg:

The algorithm to use for playing or training; choices: ppo, logic.

- --mode -m:

The game mode to play or train with; choices: getout, threefish, loot.

- --environment -env:

The specific environment for playing or training:

getout contains a key, a door and one enemy.

getoutplus has one more enemy.

threefish contains one bigger fish and one smaller fish.

threefishcolor contains one red fish and one green fish. The agent needs to avoid the red fish and eat the green fish.

loot contains 2 pairs of keys and doors.

lootcolor contains 2 pairs of keys and doors with different colors than in loot.

lootplus contains 3 pairs of keys and doors.

- --rules -r:

Required when training a logic agent.

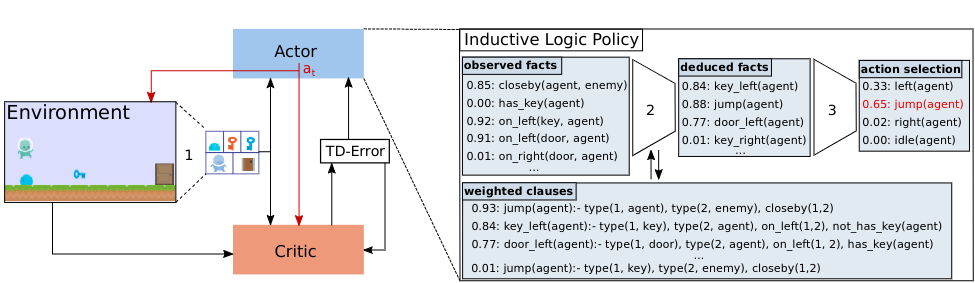

A logic agent requires a dataset that provides its first-order logic rules.

For example, 'getout_human_assisted' indicates that the rules are human-generated.

To use new rules, add them to the choices of the '--rules' argument and add the corresponding dataset.

Datasets can be found in the folder src/nsfr/data.

'--rules' is also used in other situations, such as reward shaping.

For example, 'ppo_simple_policy' can be helpful when training a PPO agent on getout.
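To illustrate what a first-order logic rule expresses, here is a minimal sketch that checks whether a rule body holds for a set of ground facts describing one game state, by binding variables to objects. The rule `jump(X) :- closeby(O1,O2), type(O1,agent), type(O2,enemy).` and all predicate and object names are hypothetical. The sketch does not reproduce the rule file format in src/nsfr/data or how the nsfr package evaluates rules; it is a plain true/false check.

```python
from typing import Dict, Iterator, List, Optional, Set, Tuple

# An atom is (predicate, arg1, arg2, ...); arguments starting with an uppercase
# letter are variables, everything else is a constant.
Atom = Tuple[str, ...]
Binding = Dict[str, str]


def is_var(term: str) -> bool:
    return term[:1].isupper()


def unify(atom: Atom, fact: Atom, binding: Binding) -> Optional[Binding]:
    """Match one body atom against one ground fact, extending the variable binding."""
    if atom[0] != fact[0] or len(atom) != len(fact):
        return None
    extended = dict(binding)
    for term, value in zip(atom[1:], fact[1:]):
        if is_var(term):
            # Bind a fresh variable, or check consistency with an earlier binding.
            if extended.setdefault(term, value) != value:
                return None
        elif term != value:
            return None
    return extended


def satisfy(body: List[Atom], facts: Set[Atom],
            binding: Optional[Binding] = None) -> Iterator[Binding]:
    """Yield every variable binding under which all body atoms are among the facts."""
    binding = {} if binding is None else binding
    if not body:
        yield binding
        return
    for fact in facts:
        extended = unify(body[0], fact, binding)
        if extended is not None:
            yield from satisfy(body[1:], facts, extended)


def body_holds(body: List[Atom], facts: Set[Atom]) -> bool:
    return next(satisfy(body, facts), None) is not None


# Hypothetical rule body for: jump(X) :- closeby(O1,O2), type(O1,agent), type(O2,enemy).
JUMP_BODY = [("closeby", "O1", "O2"), ("type", "O1", "agent"), ("type", "O2", "enemy")]

if __name__ == "__main__":
    state = {("closeby", "obj1", "obj2"), ("type", "obj1", "agent"), ("type", "obj2", "enemy")}
    print(body_holds(JUMP_BODY, state))  # → True
```

If obj2 were a key instead of an enemy, no binding would satisfy the body and the rule would not fire.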

- --recovery -re:

The data is saved automatically to the models folder. If training crashes, set '-re' to True to resume training from the last saved model.

- --plot -p:

If True, plot the weights as an image.

Images will be saved to the folder src/image.

- --log -l:

If True, the state information and rewards of this run are saved to the folder src/logs (for playing only).

- --render:

If True, render the game (for playing only).
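The arguments above map naturally onto Python's argparse. The following is a hypothetical sketch of such a parser, not the one in play.py or train.py. Several details are assumptions: `-s` appears in the examples but is not described here, so the sketch only assumes it takes an integer; `-alg`, `-m` and `-env` are made required; and the boolean flags take an explicit True/False value, as the descriptions above suggest.

```python
import argparse
from typing import List, Optional

ENVIRONMENTS = ["getout", "getoutplus", "threefish", "threefishcolor",
                "loot", "lootcolor", "lootplus"]


def str2bool(value: str) -> bool:
    """Convert 'True'/'False'-style strings to bool."""
    lowered = value.strip().lower()
    if lowered in {"true", "1", "yes"}:
        return True
    if lowered in {"false", "0", "no"}:
        return False
    raise argparse.ArgumentTypeError(f"expected True or False, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Play or train an agent (hypothetical sketch)")
    # Hypothetical: -s is used in the examples but not documented; assumed to be an integer.
    parser.add_argument("-s", type=int, default=0)
    parser.add_argument("--algorithm", "-alg", choices=["ppo", "logic"], required=True)
    parser.add_argument("--mode", "-m", choices=["getout", "threefish", "loot"], required=True)
    parser.add_argument("--environment", "-env", choices=ENVIRONMENTS, required=True)
    parser.add_argument("--rules", "-r", default=None)
    # Boolean switches take an explicit value, e.g. "-re True".
    parser.add_argument("--recovery", "-re", type=str2bool, default=False)
    parser.add_argument("--plot", "-p", type=str2bool, default=False)
    parser.add_argument("--log", "-l", type=str2bool, default=False)
    parser.add_argument("--render", type=str2bool, default=False)
    return parser


def parse_cli_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = build_parser()
    args = parser.parse_args(argv)
    # Simplification: require rules for every logic run (the text states it for training).
    if args.algorithm == "logic" and args.rules is None:
        parser.error("--rules is required for a logic agent")
    return args
```

For example, `parse_cli_args(["-alg", "ppo", "-m", "getout", "-env", "getout", "-re", "True"])` returns a namespace with `recovery=True`. A helper like `str2bool` is used because argparse's `type=bool` turns any non-empty string, including "False", into True.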

Using Beam Search to find a set of rules

```
python3 beam_search.py -m getout -r getout_root -t 3 -n 8 --scoring True -d getout.json
```

Without scoring:

```
python3 beam_search.py -m threefish -r threefishm_root -t 3 -n 8
```

- --t: The number of rule expansion steps in clause generation.

- --n: The size of the beam.

- --scoring: To score the searched rules, a dataset of state information is required.

- -d: The name of the dataset to use for scoring.
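The `-t` and `-n` options suggest a standard beam search over clause refinements: presumably starting from the root rules given by `-r` (e.g. getout_root), each of the t steps expands every clause in the beam and keeps only the n best. The sketch below is a generic beam search under that assumption, with hypothetical atoms and a made-up score standing in for the dataset-based scoring. It is not the implementation in beam_search.py, and how that script ranks clauses when scoring is disabled is not described here.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical clause representation: the sorted body atoms of a rule with a fixed head.
Clause = Tuple[str, ...]


def beam_search(root: Clause,
                refine: Callable[[Clause], Iterable[Clause]],
                score: Callable[[Clause], float],
                t: int,
                n: int) -> List[Clause]:
    """Expand the beam t times, keeping the n best-scoring clauses after each step."""
    beam: List[Clause] = [root]
    for _ in range(t):
        # Collect all refinements of the current beam; the set removes duplicates.
        candidates = {child for clause in beam for child in refine(clause)}
        if not candidates:
            break  # nothing left to expand
        # Highest score first; ties are broken by the clause itself so results are deterministic.
        beam = sorted(candidates, key=lambda c: (-score(c), c))[:n]
    return beam


# Toy example with hypothetical atoms and a hypothetical scoring function.
ATOMS = ("closeby(O1,O2)", "type(O2,enemy)", "type(O2,key)")
TARGET = {"closeby(O1,O2)", "type(O2,enemy)"}


def refine(clause: Clause) -> List[Clause]:
    # Add one atom not yet in the body; sorting makes (a, b) and (b, a) the same clause.
    return [tuple(sorted(clause + (atom,))) for atom in ATOMS if atom not in clause]


def score(clause: Clause) -> float:
    # Stand-in for a dataset-based score: +1 per "useful" atom, -1 per other atom.
    return float(sum(1 if atom in TARGET else -1 for atom in clause))


if __name__ == "__main__":
    print(beam_search((), refine, score, t=2, n=2))
    # → [('closeby(O1,O2)', 'type(O2,enemy)'), ('closeby(O1,O2)', 'type(O2,key)')]
```

A larger n keeps more partial clauses alive at each step, at the cost of scoring more candidates; a larger t allows longer rule bodies.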