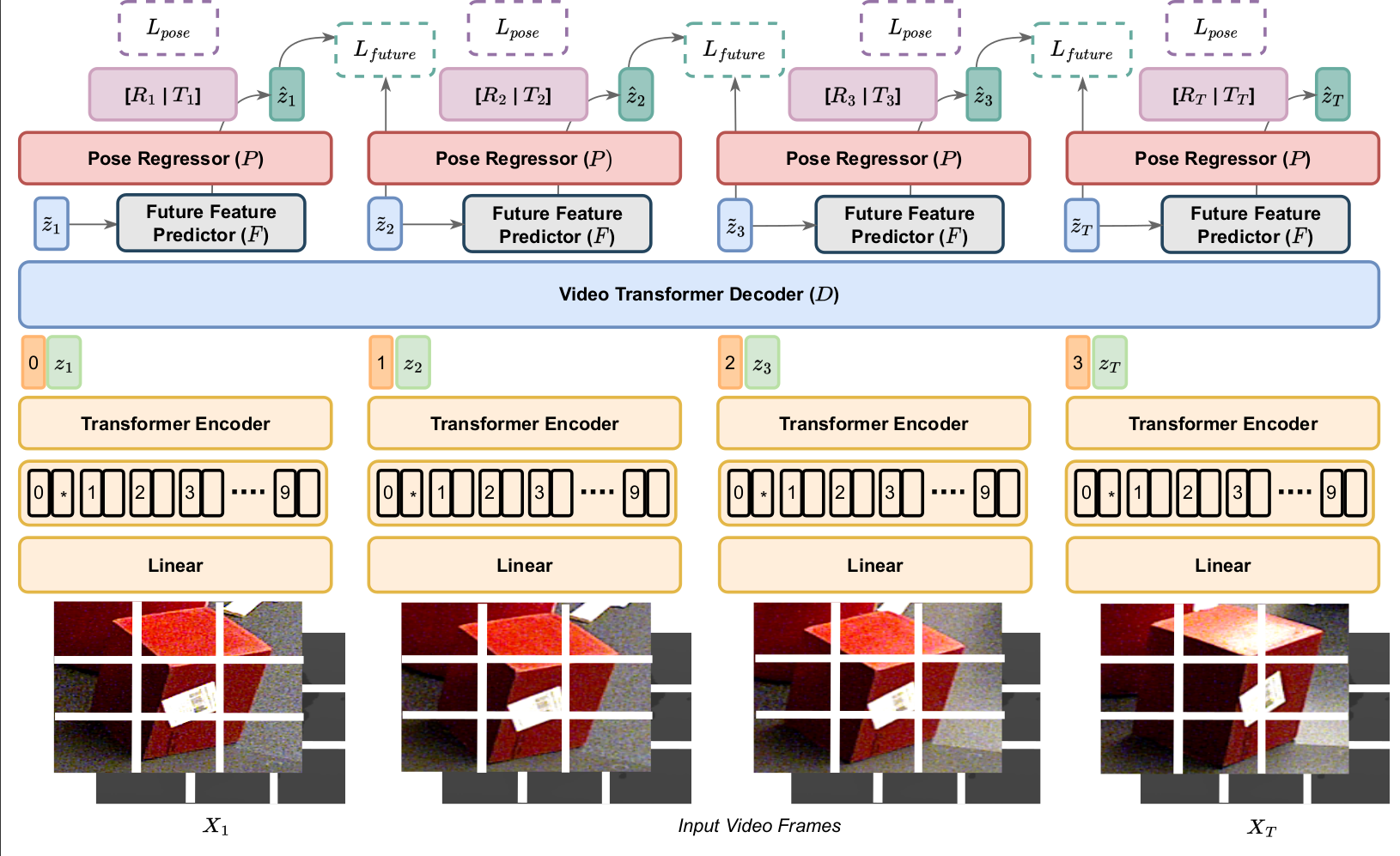

This directory contains the implementation of the paper "Video based Object 6D Pose Estimation using Transformers".

Accepted to the NeurIPS 2022 Workshop on Vision Transformers: Theory and Applications.

If this code helps with your work, please cite:

    @article{beedu2022video,
      title={Video based Object 6D Pose Estimation using Transformers},
      author={Beedu, Apoorva and Alamri, Huda and Essa, Irfan},
      journal={arXiv preprint arXiv:2210.13540},
      year={2022}
    }

Please install all the requirements using requirements.txt:

    pip3 install -r requirements.txt

Create the ./evaluation_results_video, wandb, logs, output, and model folders.
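The folders can also be created from Python. The sketch below is one minimal way to do it, assuming all five folders live in the repository root; the helper name `make_run_dirs` is hypothetical and not part of this repository.

```python
from pathlib import Path

# Folder names taken from the setup step above.
RUN_DIRS = ["evaluation_results_video", "wandb", "logs", "output", "model"]


def make_run_dirs(root="."):
    """Create the expected folders under `root` (hypothetical helper).

    Folders that already exist are left untouched.
    """
    created = []
    for name in RUN_DIRS:
        path = Path(root) / name
        path.mkdir(parents=True, exist_ok=True)
        created.append(path)
    return created
```

Calling `make_run_dirs()` from the repository root is safe to repeat, since `exist_ok=True` makes the step idempotent.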

Arguments and their defaults are in arguments.py:

| Argument | Description |
| --- | --- |
| backbone | swin or beit |
| use_depth | To use ground-truth depth during training |
| restore_file | name of the file in --model_dir_path containing weights to reload before training |
| lr | Learning rate for the optimiser |
| batch_size | Batch size for the dataset |
| workers | num_workers |
| env_name | environment name for wandb, which is also the checkpoint name |
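To show how the arguments listed above fit together on the command line, here is an illustrative `argparse` sketch. It is not the contents of arguments.py: the function name `build_parser` is hypothetical, the types are guesses, and the defaults are copied from the example training command or assumed, so the real defaults may differ.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented arguments.

    Defaults here are assumptions; the authoritative ones are in arguments.py.
    """
    parser = argparse.ArgumentParser(description="Illustrative subset of arguments")
    parser.add_argument("--backbone", choices=["swin", "beit"], default="swin")
    parser.add_argument("--use_depth", type=int, default=1,
                        help="1 to use ground-truth depth during training")
    parser.add_argument("--restore_file", default=None,
                        help="file in --model_dir_path with weights to reload")
    parser.add_argument("--model_dir_path", default="./model")  # assumed location
    parser.add_argument("--lr", type=float, default=1e-4)
    parser.add_argument("--batch_size", type=int, default=8)
    parser.add_argument("--workers", type=int, default=12)
    parser.add_argument("--env_name", default=None,
                        help="wandb environment name, also the checkpoint name")
    return parser
```

For example, `build_parser().parse_args(["--backbone=beit", "--lr=0.001"])` overrides two values and leaves the rest at the assumed defaults.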

Download the entire YCB dataset from https://rse-lab.cs.washington.edu/projects/posecnn/

The data folder looks like:

    train_eval.py
    dataloader.py
    ├── data
    │   ├── YCB
    │   │   └── data
    │   │   │   ├── 0000
    │   │   │   └── 0001
    │   │   └── models
    │   │   └── train.txt
    │   │   └── keyframe.txt
    │   │   └── val.txt
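Before training, it can help to confirm that the dataset was unpacked into the layout shown above. The following is a minimal sketch, assuming the dataset root is `data/YCB` relative to the repository; the helper `missing_ycb_entries` is hypothetical and not part of this repository.

```python
from pathlib import Path

# Entries expected directly under data/YCB, following the layout above.
EXPECTED = ["data", "models", "train.txt", "keyframe.txt", "val.txt"]


def missing_ycb_entries(root="data/YCB"):
    """Return the expected entries that are absent under `root` (hypothetical helper)."""
    root = Path(root)
    return [name for name in EXPECTED if not (root / name).exists()]
```

An empty list means every expected entry is present; otherwise the returned names show what still needs to be downloaded or moved.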

To train, run:

    python3 train_eval.py --batch_size=8 --lr=0.0001 --backbone=swin --predict_future=1 --use_depth=1 --video_length=5 --workers=12

Download the checkpoint from https://drive.google.com/drive/folders/1lQh3G7KN-SHb7B-NYpqWj55O1WD4E9s6?usp=sharing

Place the checkpoint at ./model/Videopose/last_checkpoint_0000.pt, and pass the argument --restore_file=Videopose during training to start from it. If start_epoch is not specified, training resumes from the last checkpoint.
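A quick way to check that the checkpoint sits where `--restore_file` expects it is sketched below. This assumes the layout `<model_dir>/<restore_file>/<filename>` implied by the path above; both helper names are hypothetical, and the actual loading logic in the training code may differ.

```python
from pathlib import Path


def checkpoint_path(restore_file, model_dir="./model",
                    filename="last_checkpoint_0000.pt"):
    """Build <model_dir>/<restore_file>/<filename> (assumed layout)."""
    return Path(model_dir) / restore_file / filename


def has_checkpoint(restore_file, model_dir="./model",
                   filename="last_checkpoint_0000.pt"):
    """Return True if the checkpoint file exists at the assumed location."""
    return checkpoint_path(restore_file, model_dir, filename).is_file()
```

For instance, `has_checkpoint("Videopose")` returning `False` suggests the downloaded file was placed in the wrong folder or kept a different name.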

The project uses wandb for visualisation.

The main branch uses -posecnn.mat files, which I generated manually for every frame in the dataset using the PoseCNN repository. If you do not have those files, use the v1 branch.

Evaluation currently runs only on one GPU.

    python3 train_eval.py --batch_size=8 --backbone=swin --predict_future=1 --use_depth=1 --video_length=5 --workers=12 --restore_file=Videopose --split=eval

This command creates several .mat files for the keyframes and also saves images into a folder. To evaluate the .mat files, please use the YCBToolBox.