A PyTorch implementation of Tacotron2, the end-to-end text-to-speech (TTS) neural network architecture described in "Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions", which directly converts a character sequence into speech.

- This implementation references https://github.com/kaituoxu/Tacotron2 and is built with pytorch_sound.

- Differences
  - Use log mel spectrograms and the WaveGlow vocoder to synthesize audio.
  - Change the tensor layout from (N, T, C) to (N, C, T).
    - N: batch size, C: channels, T: time steps
  - Add a stop status (stop token) at inference time.
  - Add a thinner pre-net to get more accurate attention.
  - Use a slightly different text encoder.
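The layout change and the thinner pre-net can be illustrated together. The following is a minimal sketch, not this repository's code: the class name `PreNet`, the 80 mel channels, and the two-layer structure with dropout p=0.5 (taken from the Tacotron 2 paper) are assumptions. A 1x1 `nn.Conv1d` applies the same linear map at every time step, so the pre-net works directly on (N, C, T) tensors without transposing back to (N, T, C).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PreNet(nn.Module):
    """Hypothetical decoder pre-net operating on (N, C, T) tensors.

    Two 1x1 convolutions act as per-frame linear layers; `hidden`
    controls how thin the pre-net is (e.g. 64 vs. 256).
    """

    def __init__(self, in_channels: int = 80, hidden: int = 64, p: float = 0.5):
        super().__init__()
        self.layers = nn.ModuleList([
            nn.Conv1d(in_channels, hidden, kernel_size=1),
            nn.Conv1d(hidden, hidden, kernel_size=1),
        ])
        self.p = p

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (N, C, T) -> (N, hidden, T)
        for layer in self.layers:
            # training=True keeps dropout active even in eval mode,
            # as described in the Tacotron 2 paper.
            x = F.dropout(F.relu(layer(x)), p=self.p, training=True)
        return x


# A (N, T, C) batch becomes (N, C, T) with a single transpose.
mel_ntc = torch.randn(2, 100, 80)   # N=2, T=100, C=80 (assumed sizes)
mel_nct = mel_ntc.transpose(1, 2)   # -> (2, 80, 100)

thin = PreNet(hidden=64)
wide = PreNet(hidden=256)
print(thin(mel_nct).shape)  # torch.Size([2, 64, 100])
print(wide(mel_nct).shape)  # torch.Size([2, 256, 100])
```

Because dropout stays on at inference in this sketch, repeated calls on the same input give different outputs. The README suspects heavy dropout as the cause of the inference-time spectrogram stripes, so this is one place to look when investigating them.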

- Ubuntu 16.04

- Python 3.6

- PyTorch 1.2.0

- 2 GPUs

- Install the external repo (pytorch_sound)

Read the pytorch_sound README.md first to prepare the dataset.

$ pip install git+https://github.com/Appleholic/pytorch_sound

- Install package

$ pip install -e .

- Train

$ python tacotron2_pytorch/train.py [YOUR_META_DIR] [SAVE_DIR] [SAVE_PREFIX] [[OTHER OPTIONS...]]

- Synthesize (one sample)

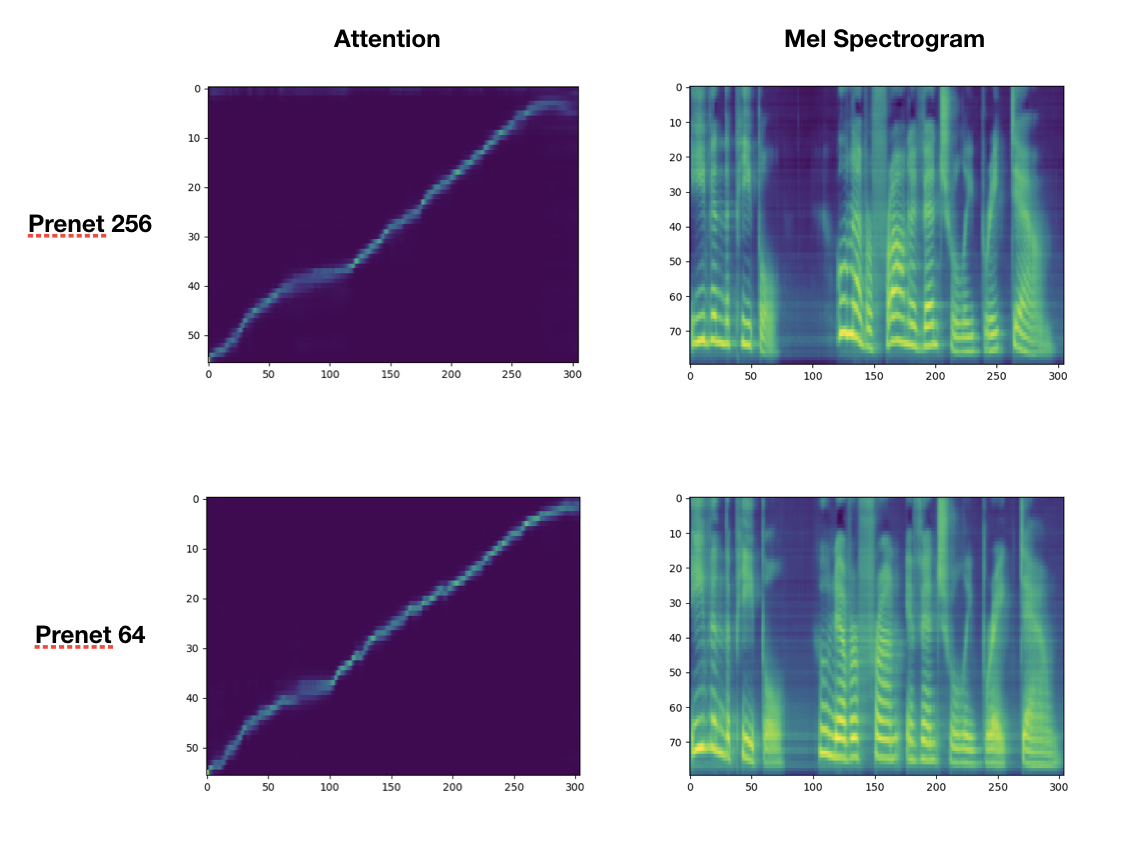

- It writes the synthesized audio along with images of the waveform plot, the attention alignment, and the mel spectrogram.

$ python tacotron2_pytorch/synthesize.py [TEXT] [PRETRAINED_PATH] [MODEL_NAME] [SAVE_DIRECTORY]

- At inference time, the spectrogram shows several stripes. This might be caused by heavy dropout. (It does not appear at training time.)

- The stop token does not work reliably at inference time.

- Error case and resolution: Torch Hub WaveGlow
  - The checkpoint file downloaded automatically through hubconf.py from NVIDIA DeepLearningExamples is broken.
  - Download the NVIDIA WaveGlow FP32 checkpoint directly, and copy it into '$HOME/.cache/torch/checkpoints'.
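The stop status added at inference time, and the known issue that the stop token is unreliable, both concern how autoregressive decoding terminates. Below is a minimal sketch of a typical stop-token decoding loop, not this repository's implementation: `decode_until_stop`, the `step_fn(prev_frame) -> (mel_frame, stop_logit)` interface, the 0.5 threshold, and the `max_steps` cap are all assumptions, and `make_dummy_step` is a hypothetical stand-in for a real decoder step.

```python
import torch


def decode_until_stop(step_fn, go_frame, max_steps=1000, threshold=0.5):
    """Autoregressive decoding loop that halts on a stop token.

    step_fn(prev_frame) -> (mel_frame, stop_logit) is a hypothetical
    interface standing in for one decoder step. Returns the mel frames
    stacked as (C, T) and a flag telling whether the stop token fired.
    """
    frames = []
    prev = go_frame
    for _ in range(max_steps):
        frame, stop_logit = step_fn(prev)
        frames.append(frame)
        # Stop as soon as the predicted stop probability exceeds the threshold.
        if torch.sigmoid(stop_logit).item() > threshold:
            return torch.stack(frames, dim=-1), True
        prev = frame
    # Safeguard: the stop token never fired, so cut off at max_steps.
    return torch.stack(frames, dim=-1), False


def make_dummy_step(stop_at=10, n_mels=80):
    """Hypothetical decoder step whose stop logit turns positive at step `stop_at`."""
    t = 0

    def step(prev):
        nonlocal t
        t += 1
        stop_logit = torch.tensor(5.0 if t >= stop_at else -5.0)
        return torch.zeros(n_mels), stop_logit

    return step


mel, stopped = decode_until_stop(make_dummy_step(stop_at=10), torch.zeros(80))
print(mel.shape, stopped)  # torch.Size([80, 10]) True
```

The `max_steps` cap is a common safeguard when the stop token is unreliable: without it, a stop logit that never crosses the threshold would let decoding run forever. Stacking along the last dimension keeps the (C, T) layout used elsewhere in this project.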

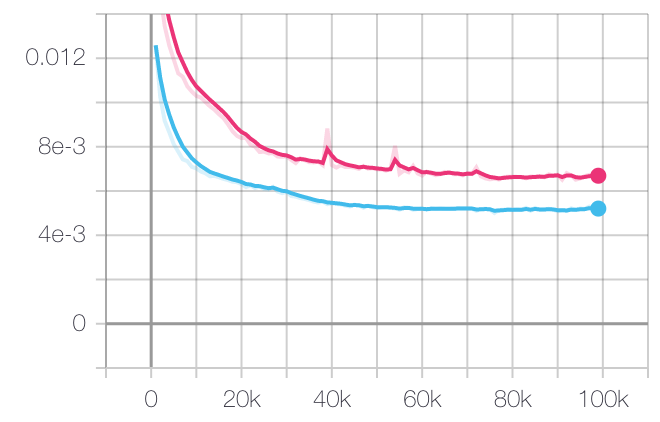

- Total Validation Loss
  - Sum of two MSE losses (linear output, and linear + post-net output) and the stop-token BCE loss
  - Red: 64-dim pre-net, blue: 256-dim pre-net
  - 100,000 steps
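The total loss described above can be written out as follows. This is a minimal sketch under stated assumptions, not the repository's training code: the function name `total_loss`, the (N, C, T) and (N, T) shapes, and the use of logits with `binary_cross_entropy_with_logits` are assumptions, and the padding masks a real training loop would normally apply are omitted.

```python
import torch
import torch.nn.functional as F


def total_loss(mel_linear, mel_post, stop_logits, mel_target, stop_target):
    """Hypothetical total loss: two MSE terms plus a stop-token BCE term.

    mel_linear:  decoder (linear projection) output, (N, C, T)
    mel_post:    linear output plus the post-net residual, (N, C, T)
    stop_logits: raw stop-token scores, (N, T); stop_target holds 0/1 floats
    Padding masks are omitted for brevity.
    """
    mse_linear = F.mse_loss(mel_linear, mel_target)
    mse_post = F.mse_loss(mel_post, mel_target)
    # The *_with_logits variant applies the sigmoid internally (numerically stable).
    stop_bce = F.binary_cross_entropy_with_logits(stop_logits, stop_target)
    return mse_linear + mse_post + stop_bce


N, C, T = 2, 80, 50
target = torch.zeros(N, C, T)
stop_target = torch.zeros(N, T)
stop_target[:, -1] = 1.0  # only the last frame is labeled "stop"

# Perfect mel predictions and zero stop logits: only the BCE term remains (ln 2).
loss = total_loss(target.clone(), target.clone(), torch.zeros(N, T), target, stop_target)
print(round(loss.item(), 4))  # 0.6931
```

With a zero logit the predicted stop probability is 0.5, so every frame contributes ln 2 to the BCE term regardless of its label, which makes the example easy to check by hand.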

- Attention and mel spectrogram samples