- All commands should be run from inside the root directory that contains this `README.md` file!
- We highly suggest using `screen` for all experiment runs.
- Don't run scripts in parallel. This artifact is explicitly serial.
- We expect some variation in performance numbers. We remind the reader that our performance numbers were obtained on a bare-metal machine with a 3.0 GHz AMD EPYC 7302P 16-Core CPU and 128 GB RAM, running 64-bit Ubuntu 18.04 LTS (kernel version 4.15.0-162) with an SSD disk. If the experiments are conducted inside a container or a virtual machine, performance variations are expected.

- Run `./conf/config.sh`
- Run `./conf/build.sh`

Make sure that both scripts finish with the message `Done!` at the end. You can also validate that everything worked properly by running `echo $?` after each script. If the return value is `0`, you are ready to proceed.
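The exit-status check described above can also be scripted. The following is a minimal sketch and not part of the artifact: `run_step` is a hypothetical helper name. It runs one command, reports its exit status, and returns that status so that the second script only starts if the first one succeeded.

```shell
# Hypothetical helper (not shipped with the artifact): run one setup step
# and report whether it exited with status 0.
run_step() {
    "$@"
    rc=$?
    if [ "$rc" -eq 0 ]; then
        echo "OK: $*"
    else
        echo "FAILED (exit $rc): $*" >&2
    fi
    return "$rc"
}

# Intended use, from the artifact root directory:
#   run_step ./conf/config.sh && run_step ./conf/build.sh
```

The helper only inspects the exit status; it is still worth confirming that each script printed `Done!`.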

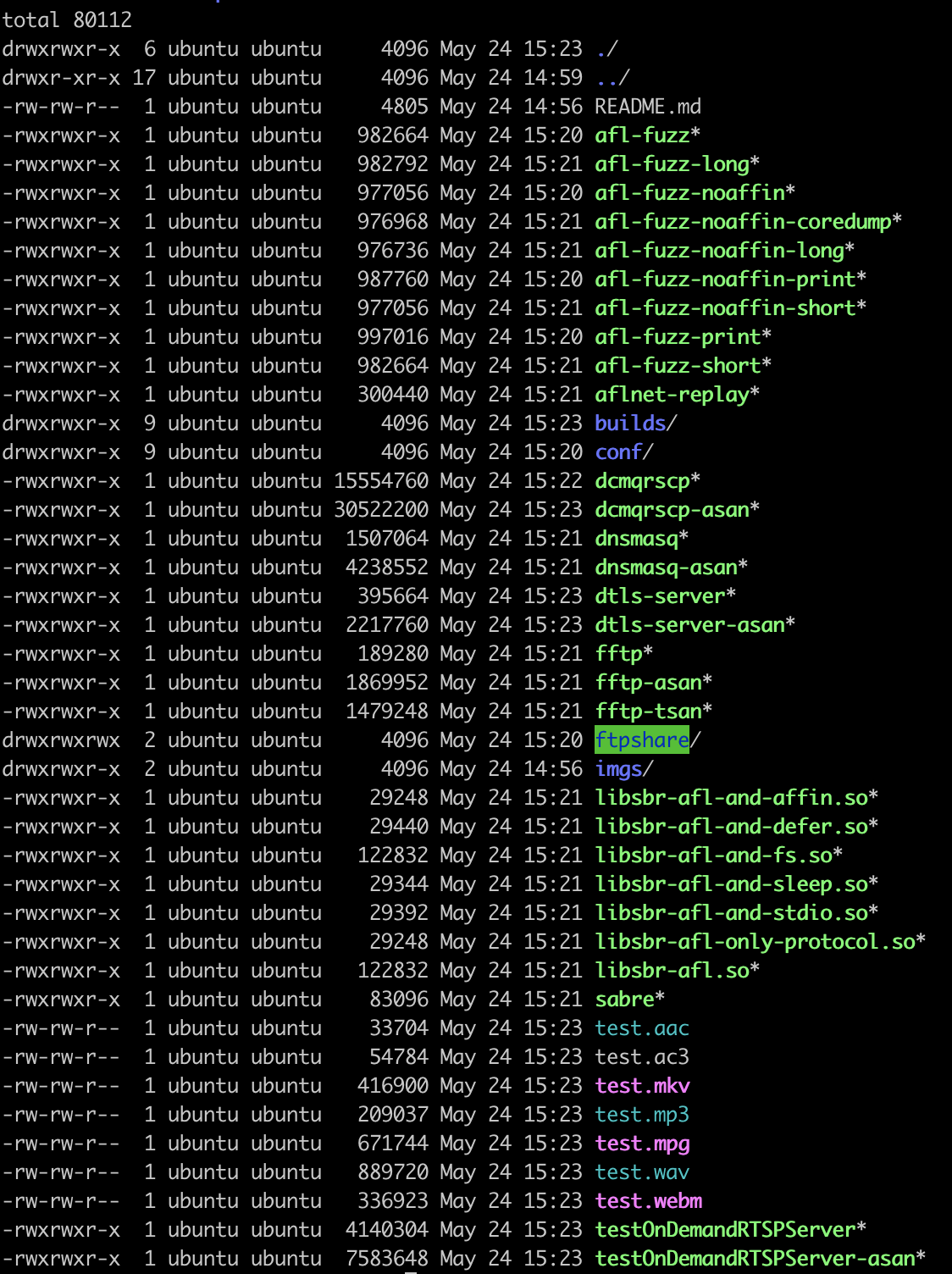

After both scripts are executed, the SnapFuzz root directory should include the following files:

Before we generate the real benchmark data, we can run some preliminary numbers just to make sure everything works properly. There are two main scripts:

- `./conf/run-orig.sh`, which launches the native AFLNet experiments
- `./conf/run-snapfuzz.sh`, which launches the SnapFuzz experiments

To make some short test runs of 1,000 iterations each, use the following commands:

- `./conf/run-orig.sh -s dicom`
- `./conf/run-orig.sh -s dns`
- `./conf/run-orig.sh -s dtls`
- `./conf/run-orig.sh -s ftp`
- `./conf/run-orig.sh -s rtsp`

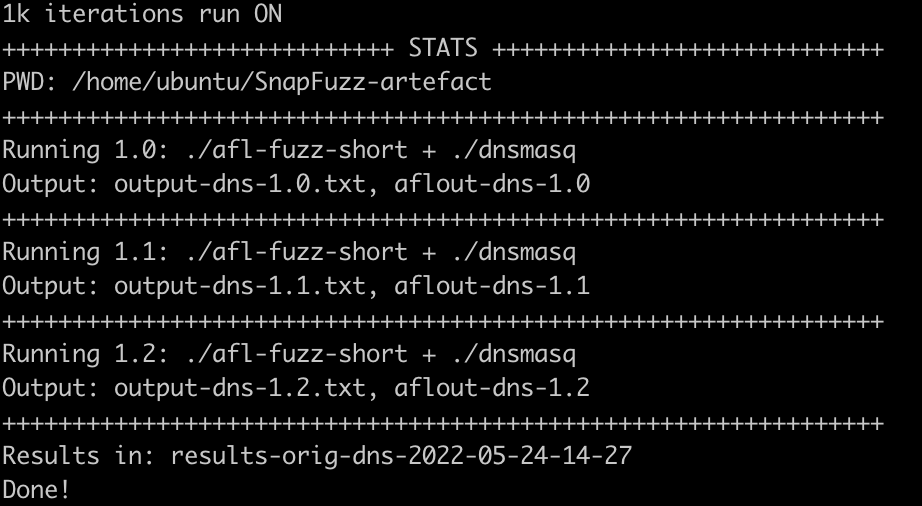

Make sure that each command has run successfully by verifying that a new directory under the name schema of results-orig-{project name}-{date} has been created.

In the following image you can see a successful run of the dns benchmark:

NOTE: Our run scripts perform some checks after each run but if something feels off, you can inspect the results-orig-{project name}-{date}/output-{project name}-{iteration}.txt file for errors.
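The directory check above can be automated for all five projects. This is a minimal sketch under stated assumptions: `has_results_dir` is a hypothetical helper, and it only relies on the `results-{kind}-{project name}-` prefix described above, without assuming anything about the format of the date suffix.

```shell
# Hypothetical helper: succeed if at least one directory named
# results-<kind>-<project>-<date> exists in the current directory.
has_results_dir() {
    kind=$1      # "orig" or "snapfuzz"
    project=$2   # e.g. "dns"
    for d in "results-$kind-$project-"*; do
        if [ -d "$d" ]; then
            return 0
        fi
    done
    return 1
}

# Intended use, from the artifact root after the short runs have finished:
#   for p in dicom dns dtls ftp rtsp; do
#       has_results_dir orig "$p" || echo "missing results for $p" >&2
#   done
```

The same helper covers the SnapFuzz runs by passing `snapfuzz` as the first argument.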

The same goes for the SnapFuzz experiments:

- `./conf/run-snapfuzz.sh -s dicom`
- `./conf/run-snapfuzz.sh -s dns`
- `./conf/run-snapfuzz.sh -s dtls`
- `./conf/run-snapfuzz.sh -s ftp`
- `./conf/run-snapfuzz.sh -s rtsp`
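Because the artifact must run serially, the five commands can be driven by a simple loop instead of being typed one at a time. The sketch below is an illustration only: `run_all_serial` is a hypothetical wrapper that is not part of the artifact. It runs the given script once per project, strictly in sequence, and stops at the first failure.

```shell
# Hypothetical wrapper: run one script for every project, strictly one after
# the other, and stop at the first failure. Extra arguments (such as -s) are
# passed through before the project name.
run_all_serial() {
    script=$1
    shift
    for project in dicom dns dtls ftp rtsp; do
        echo "=== $script $* $project ==="
        "$script" "$@" "$project" || return 1
    done
}

# Intended use, from the artifact root directory:
#   run_all_serial ./conf/run-orig.sh -s
#   run_all_serial ./conf/run-snapfuzz.sh -s
```

Running the wrapper inside `screen` keeps the sequence alive if the terminal disconnects.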

After the above runs are done, you should be able to see new directories following the naming scheme `results-orig-{project name}-{date}` for the native AFLNet experiments and `results-snapfuzz-{project name}-{date}` for the SnapFuzz experiments.

You can `cd` into each of the directories and see the stats with:

../conf/stats.py

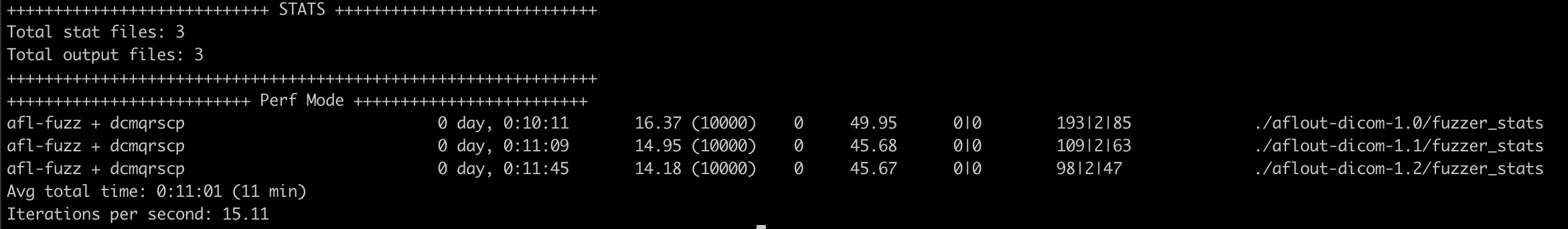

An example output is provided in the following picture. The only important information is the Avg total time line which provides explicit information on the average time required for one fuzzing campaign to execute. By collecting each average total time for each project and compairing them then with SnapFuzz, we can conclude on the total speedups reported in our paper.
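Collecting the `Avg total time` line from every results directory can be done in one pass. This is a sketch under assumptions: `print_avg_times` is a hypothetical helper, and it relies only on `stats.py` being run from inside each results directory and printing a line that contains the label `Avg total time`. Nothing else about the output format is assumed.

```shell
# Hypothetical helper: for every results-* directory under the current
# directory, run stats.py from inside it and print the directory name
# followed by the line containing "Avg total time".
print_avg_times() {
    for d in results-*/; do
        [ -d "$d" ] || continue
        printf '%s: ' "${d%/}"
        (cd "$d" && ../conf/stats.py | grep -F 'Avg total time')
    done
}

# Intended use, from the artifact root directory:
#   print_avg_times
```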

Now we are ready to generate the paper data. The baseline data include 1 million iterations, and we need to run each experiment separately. Be warned that the actual baseline runs are extremely slow and each run will take a day or more. Nevertheless, to reproduce our results, run the following:

- `./conf/run-orig.sh dicom`
- `./conf/run-orig.sh dns`
- `./conf/run-orig.sh dtls`
- `./conf/run-orig.sh ftp`
- `./conf/run-orig.sh rtsp`

The same goes for the SnapFuzz data. SnapFuzz is significantly faster, so a couple of hours should be enough for each run. We follow a similar strategy as above:

- `./conf/run-snapfuzz.sh dicom`
- `./conf/run-snapfuzz.sh dns`
- `./conf/run-snapfuzz.sh dtls`
- `./conf/run-snapfuzz.sh ftp`
- `./conf/run-snapfuzz.sh rtsp`

After the above runs are done, you should be able to see new directories following the naming scheme `results-snapfuzz-{project_name}-{date}`. You can `cd` into each of the directories and see the stats with:

../conf/stats.py

To generate the SnapFuzz data we follow a similar strategy as above:

- `./conf/run-snapfuzz.sh -l dicom`
- `./conf/run-snapfuzz.sh -l dns`
- `./conf/run-snapfuzz.sh -l dtls`
- `./conf/run-snapfuzz.sh -l ftp`
- `./conf/run-snapfuzz.sh -l rtsp`

After the above runs are done, you should be able to see new directories following the naming scheme `results-snapfuzz-{project_name}-{date}`. You can `cd` into each of the directories and see the stats with:

../conf/stats.py

NOTE: The directories for the 24h results are named the same way as those for the standard SnapFuzz results; nothing in the directory name distinguishes them.

After all 1 million iteration experiments are done, you can validate the speedup results reported in Table 1 of the paper by dividing the `Avg total time` reported by `stats.py` for each baseline experiment by the corresponding SnapFuzz one.
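The division described above can be done with `awk`. The following is a minimal sketch: `speedup` is a hypothetical helper that takes the baseline and SnapFuzz average total times as plain numbers in the same unit, copied by hand from the `stats.py` output. The numbers in the example are made up and are not results from the paper.

```shell
# Hypothetical helper: divide the baseline average total time by the
# SnapFuzz average total time (both in the same unit) to get the speedup.
speedup() {
    awk -v base="$1" -v snap="$2" 'BEGIN {
        if (snap <= 0) {
            print "SnapFuzz time must be positive" > "/dev/stderr"
            exit 1
        }
        printf "%.2f\n", base / snap
    }'
}

# Example with made-up numbers:
#   speedup 500 20    # prints 25.00
```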

Because running our full suite of 1 million iterations takes a very long time, reviewers can sample our results with high confidence by comparing the results from the preliminary test runs.